Connected Rail Solution Implementation Guide

This document is the Cisco Connected Rail Solution Implementation Guide, which provides details about the test topology, relevant feature configuration, and deployment of this solution. It is meant to be representative of a deployed solution and not all-inclusive for every feature presented. It will assist in deploying solutions faster by showing an end-to-end configuration along with relevant explanations.

Previous releases of the Connected Transportation System focused on Positive Train Control, Connected Roadways, and Connected Mass Transit.

Audience

The audiences for this document are Cisco account teams, Cisco Advanced Services teams, and systems integrators working with rail authorities. It is also intended for use by the rail authorities to understand the features and capabilities enabled by the Cisco Connected Rail Solution design.


Solution Overview

This section provides an overview of the Connected Rail Solution services, including the Connected Trackside implementation, the Connected Train implementation, overlay services such as video surveillance and infotainment, and the onboard Wi-Fi service.

■ The Connected Trackside implementation includes the network topology supporting the data center services, Multiprotocol Label Switching (MPLS) backhaul, Long Term Evolution (LTE), and trackside wireless radios. When the train is in motion, it must maintain a constant, seamless connection to the data center services by means of a mobility anchor. This mobility anchor maintains tunnels over each connection to the train and can load share traffic or fail over between links if one of the links fails.

■ The Connected Train implementation includes the network topology supporting the intra-train communications among all the passengers, employees, law enforcement personnel, and onboard systems. It also helps enable the video surveillance, voice communications, and data traffic offloading to the trackside over the wireless network.

■ The overlay services depend on the Connected Train implementation and include video surveillance, infotainment, and network management. Video surveillance is provided by the Cisco Video Surveillance Management system, which includes the Video Surveillance Operations Manager (VSOM) and Long Term Storage (LTS) server in the data center, a Video Surveillance Media Server (VSMS) locally onboard the train, and a number of rail-certified IP cameras on the train. Passengers can access local information or entertainment from the onboard video servers, and employees or law enforcement officers can see the video surveillance feeds in real time. The Davra RuBAN network management system is used for incident monitoring triggered by a number of soft or hard triggers.

■ The onboard Wi-Fi service provides connectivity to all train passengers with separate Service Set Identifiers (SSIDs) for passengers, employees, and law enforcement personnel. This traffic is tunneled back to the data center and relies on the seamless roaming provided by the Connected Train implementation to provide a consistent user experience.

QoS, performance and scale, and results from a live field trial to test wireless roaming at high speed are also covered in later chapters.

The Cisco Connected Rail Design Guide is a companion document to this Implementation Guide. The Design Guide includes design options for all the services, whereas this guide details the validation of all the services but not necessarily all the available options. The Design Guide can be found at the following URL:

■ https://docs.cisco.com/share/proxy/alfresco/url?docnum=EDCS-11479438

Network Topology

This solution can be implemented in two distinct ways. In both, the passengers and other riders on the train need access to network resources from the Internet, the provider's data center, or within the train. Both implementations use a gateway on the train that forms tunnels with a mobility anchor in the provider's network.

■ The Lilee solution uses Layer 2 tunneling to bridge the train Local Area Network (LAN) to a LAN behind the mobility anchor. In this respect, the Lilee solution is similar to a Layer 2 Virtual Private Network (L2VPN).

■ The Klas Telecom solution relies on Cisco IOS, and specifically Proxy Mobile IPv6 (PMIPv6), to provide the virtual connection from the train gateway to the mobility anchor in the data center. The networks on the train are advertised to the mobility anchor as Layer 3 mobile routes. These mobile routes are present only on the mobility anchor and not on the intermediate transport nodes, so the Klas Telecom solution is similar to a Layer 3 Virtual Private Network (L3VPN).

The onboard network behind the mobility gateway is common to both solutions. Each car has a number of switches that are connected to the switches in the cars in front and behind to form a ring. Cisco Resilient Ethernet Protocol (REP) is configured on the switches to prevent loops and reduce the convergence time in the event of a link or node failure. The proposed switches are the IP67-rated Cisco IE 2000 or the Klas Telecom S10/S26, which is based on the Cisco Embedded Services Switch (ESS) 2020. In each carriage, one or more wireless access points (the hardened Cisco IW3702) provide wireless access to the passengers. These access points communicate with a Wireless LAN Controller (WLC) installed in the data center. For video surveillance, each carriage also has a number of IP cameras, which communicate with an onboard hardened server running the Cisco VSMS. An onboard infotainment system is also supported on the train to provide other services to the passengers.

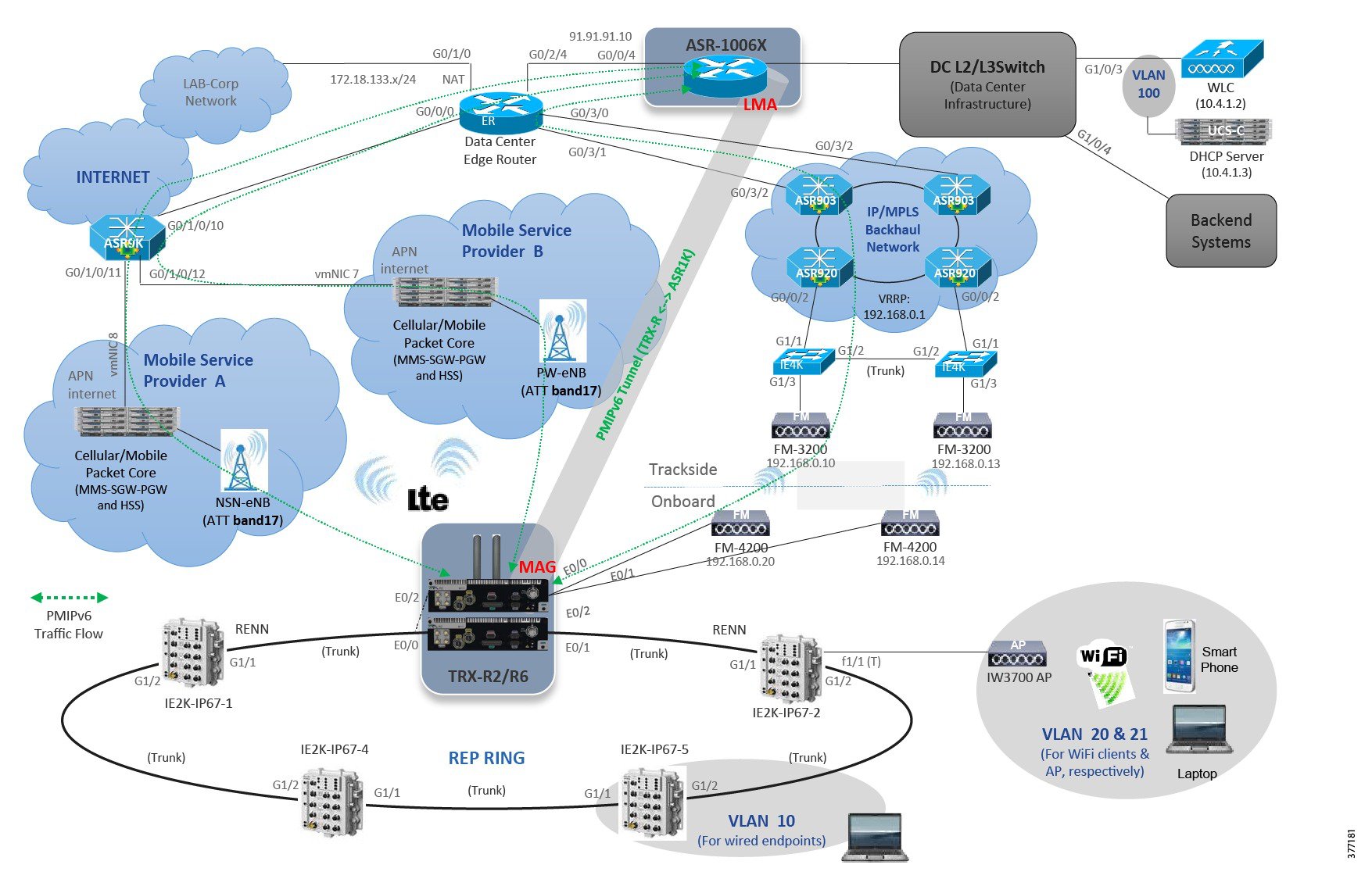

The Klas gateway solution is based on a virtualized Cisco Embedded Services Router (ESR) running on the Klas TRX-R6 or TRX-R2, with Proxy Mobile IPv6 (PMIPv6) performing the mobility management. The Klas gateway on the train performs the role of Mobile Access Gateway (MAG), while a Cisco Aggregation Services Router (ASR) 100X in the data center performs the role of Local Mobility Anchor (LMA).

An example of an end-to-end system based on the IOS/Klas Telecom gateway is shown in Figure 1.

Figure 1 Topology Diagram for Solution Based on IOS/Klas Gateway

In the system based on the Lilee gateway, the MIG2450-ME-100 (sometimes referred to as ME-100) mobile gateway on the train builds a Layer 2 tunnel over the infrastructure to the virtual Lilee Mobility Controller (vLMC) in the data center. After the tunnel is formed, the vLMC bridges all the train traffic to an access VLAN or VLAN trunk.

An example of an end-to-end system based on the Lilee gateway is shown in Figure 2.

Figure 2 Topology Diagram for System Based on Lilee Gateway

Solution Components

The Connected Rail Solution includes onboard, trackside, backhaul, and data center equipment.

■ Klas Telecom TRX-R2/R6 (for the Cisco IOS-based solution)

■ Lilee Systems ME-100 (for the Lilee Systems-based solution)

■ Klas Telecom TRX-S10/S26 switch

■ Cisco IPC-3050/IPC-7070 IP camera

■ Cisco VSMS on a rail-certified server

The trackside equipment includes:

To support the train and trackside deployment, the data center includes:

■ Cisco Unified Computing System (UCS) to support applications including:

Hardware model numbers and software releases that were validated are listed in Table 1.

Connected Trackside Implementation

This section includes the following major topics:

■ Per VLAN Active/Active MC-LAG (pseudo MC-LAG)

Wireless Offboard

The trackside wireless infrastructure includes everything needed to support a public or private LTE network and the Fluidmesh radio network. In this solution, a public mobile operator provides the LTE network using a public or private Access Point Name (APN). The Fluidmesh radios operate in the 4.9-5.9 GHz band using a proprietary implementation to facilitate nearly seamless roaming between trackside base stations.

Long Term Evolution

The LTE implementation in this solution relies on a public mobile operator. A detailed description of this setup is out of scope for this document. Because the train operator may use multiple LTE connections, the mobility anchor address must be reachable from each public LTE network. In both the Klas Telecom and Lilee Systems gateway implementations, the mobility anchor must have a single publicly reachable IP address to terminate the tunnels over the LTE connections.

Fluidmesh

The trackside radios are responsible for bridging the wireless traffic from the train to the trackside wired connections. Within a group of trackside radios, one is elected or configured as a mesh end and the rest are mesh points. The mesh point radios will forward the data from the connected train radios to the mesh end radio. The mesh end radio is similar to a root radio and acts as the local anchor point for all the traffic from the trackside radios. It is configured with a default gateway and performs all the routing for the trackside radio data.

The trackside radios are connected to the trackside switch network on a VLAN shared with the other trackside radios. This VLAN is connected to the MPLS backhaul via a service instance (Bridge Domain Interface or BDI) on the provider edge router. The trackside switched network is configured in a REP ring connected to a pair of provider edge routers for redundancy. The provider edge routers run Virtual Router Redundancy Protocol (VRRP) between the BDIs to provide a single virtual gateway address for the trackside radios. The BDIs are then placed in an L3VPN Virtual Routing and Forwarding (VRF) instance for transit across the MPLS backhaul to the data center network.

The radios are configured through a web interface with the default IP set to 192.168.0.10/24. Figure 3 shows an example of a mesh end radio configuration.

Figure 3 Trackside Radio General Mode

The radio is configured as a trackside radio on the FLUIDITY page. The unit role in this case is Infrastructure.

Figure 4 Trackside Radio FLUIDITY Configuration

After this configuration is applied, two new links become available: FLUIDITY Quadro and FMQuadro.

Figure 5 Trackside Radio FLUIDITY Quadro

In this view, the trackside radios are displayed with the associated train radio shown as a halo around the trackside radio it is connected to. The real-time signal strength of the train radio is also shown.

During a roam, the train radio halo will move to the strongest trackside radio in range. In Figure 6, the signal strength is shown after a roaming event.

Figure 6 Trackside Radio FLUIDITY Quadro - Roam

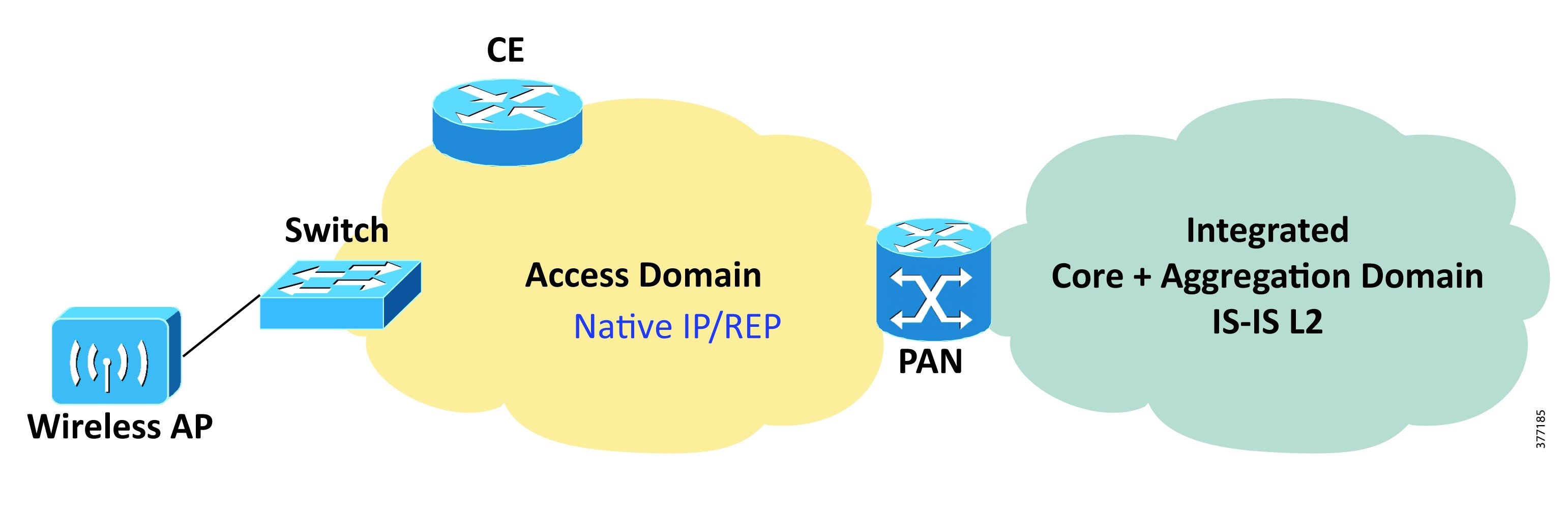

MPLS Transport Network

The core and aggregation networks are integrated with a flat Interior Gateway Protocol (IGP) and Label Distribution Protocol (LDP) control plane from the core to the Pre-Aggregation Nodes (PANs) in the aggregation domain. An example MPLS transport network is shown in Figure 7.

Figure 7 Flat IGP/LDP Network with Ethernet Access

All nodes (MPLS Transport Gateway (MTG), Core, Aggregation Node (AGN), and PAN) in the combined core-aggregation domain make up the IS-IS Level-2 domain or Open Shortest Path First (OSPF) backbone area.

In this model, the access network could be one of the following options:

■ Routers configured as Customer Edge (CE) devices in point-to-point or ring topologies over fiber Ethernet running native IP transport, supporting L3VPN services. In this case, the CEs pair with PANs configured as L3VPN Provider Edges (PEs), enabling Layer 3 backhaul. Other options are any Time Division Multiplexing (TDM) circuits connected directly to the PANs, which provide Circuit Emulation services via pseudowire-based circuit emulation to the MTG.

■ Ethernet Access Nodes in point-to-point and REP-enabled ring topologies over fiber access running native Ethernet. In this case, the PANs provide service edge functionality for the services from the access nodes and connect the services to the proper L2VPN or L3VPN service backhaul mechanism. The MPLS services are always enabled by the PANs in the aggregation network.

MPLS Transport Gateway Configuration

This section shows the IGP/LDP configuration required on the MTG to build the Label Switched Paths (LSPs) to the PANs.

Figure 8 MPLS Transport Gateway

Interface Configuration

IGP Configuration

Pre-Aggregation Node Configuration

This section shows the IGP/LDP configuration required to build the intra-domain LSPs. Minimal BGP configuration is shown as the basis for building the transport MPLS VPN.

Figure 9 Pre-Aggregation Node (PAN)

Interface Configuration

IGP/LDP Configuration

Dual Homed Hub-and-Spoke Ethernet Access

Dual-homed topologies for hub-and-spoke access have been implemented in the following modes:

■ Per Node Active/Standby Multi-Chassis Link Aggregation Group (MC-LAG)

■ Per VLAN Active/Active MC-LAG (pseudo Multichassis Link Aggregation Control Protocol or mLACP)

Figure 10 Per Node Active/Standby MC-LAG

Per Node Active/Standby MC-LAG

The Ethernet access node is dual homed to the AGN nodes using a bundle interface. The AGN nodes establish an inter-chassis bundle and correlate the states of the bundle member ports using the Inter-Chassis Communication Protocol (ICCP).

At steady state, links connected to AGN1 are selected as active, while links to AGN2 are kept in standby state ready to take over in case of a failure.

The following configuration shows the implementation of the AGN nodes, AGN-K1101 and AGN-K1102, and the Ethernet Access Node.

Aggregation Node Configuration

AGN1: Active Point-of-Attachment (PoA) AGN-K1101: ASR9000

For reference throughout this document, the following is a list of settings used for MC-LAG configuration.

The access-facing virtual bundle interface is configured as follows:

■ Suppress-flaps timer set to 300 ms. This prevents the bundle interface from flapping during an LACP failover.

■ Associated with ICCP redundancy group 300.

■ Lowest possible port-priority (to ensure node serves as active PoA initially).

■ Media Access Control (MAC) address for bundle interface. This needs to match the MAC address configured on the other PoA's bundle interface.

■ Wait-while timer set to 100 ms to minimize LACP failover time.

■ Maximum links allowed in the bundle limited to 1. This configuration ensures that the access node will never enable both links to the PoAs simultaneously if ICCP signaling between the PoAs fails.

For reference throughout this document, the following is a list of settings used for ICCP configuration. The ICCP redundancy group is configured as follows:

■ mLACP node ID (unique per node).

■ mLACP system MAC address and priority (same for all nodes). These two values are concatenated to form the system ID for the virtual LACP bundle.

■ ICCP peer address. Since ICCP works by establishing an LDP session between the PoAs, the peer's LDP router ID should be configured.

■ Backbone interfaces. If all interfaces listed go down, core isolation is assumed and a switchover to the standby PoA is triggered.
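The concatenation of the mLACP system priority and system MAC address can be sketched as follows. This is a minimal Python illustration, not device configuration: the function name `lacp_system_id` and the sample priority and MAC values are hypothetical, and the layout (a 16-bit priority followed by the 48-bit MAC address) follows the standard LACP system identifier format.

```python
import string


def lacp_system_id(priority: int, mac: str) -> str:
    """Return the LACP system ID as 16 hex digits: priority, then MAC.

    The priority occupies the two most significant bytes and the MAC
    address the remaining six. Separators (':', '-', '.') in the MAC
    string are ignored, so Cisco dotted notation is accepted as well.
    """
    if not 0 <= priority <= 0xFFFF:
        raise ValueError("system priority must fit in 16 bits")
    digits = "".join(ch for ch in mac if ch not in ":-.").lower()
    if len(digits) != 12 or any(ch not in string.hexdigits for ch in digits):
        raise ValueError("MAC address must contain exactly 12 hex digits")
    return f"{priority:04x}{digits}"


# Hypothetical values: both PoAs are configured with the same priority and
# system MAC, so the access node sees a single LACP partner.
poa1_id = lacp_system_id(1, "0000.dead.beef")
poa2_id = lacp_system_id(1, "00:00:DE:AD:BE:EF")
```

Because both PoAs derive the same system ID, the access node treats the two chassis as one LACP partner, which is why these values must match on all nodes in the redundancy group.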

AGN2: Standby Point-of-Attachment (PoA) AGN-A9K-K1102: ASR9000

The ICCP redundancy group is configured as follows:

■ mLACP node ID (unique per node).

■ mLACP system MAC address and priority (same for all nodes). These two values are concatenated to form the system ID for the virtual LACP bundle.

■ ICCP peer address. Since ICCP works by establishing an LDP session between the PoAs, the peer's LDP router ID should be configured.

■ Backbone interfaces. If all interfaces listed go down, core isolation is assumed and a switchover to the standby PoA is triggered.

Ethernet Access Node Configuration

The following configuration is taken from a Cisco router running IOS. Configurations for Ethernet switches and other access nodes can be easily derived from the following configuration.

Per VLAN Active/Active MC-LAG (pseudo MC-LAG)

The Ethernet access node connects to each AGN via standalone Ethernet links or Bundle interfaces that are part of a common bridge domain(s). All the links terminate in a common multi-chassis bundle interface at the AGN and are placed in active or hot-standby state based on node and VLAN via ICCP-SM negotiation.

In steady state conditions, each AGN node forwards traffic only for the VLANs it is responsible for, but takes over forwarding responsibility for all VLANs in case of peer node or link failure.
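The forwarding behaviour described above can be sketched in a few lines of Python. This is a conceptual model only, not ICCP-SM itself: the function `forwarding_map` is hypothetical, and the assignment of VLAN 100 to AGN-K1101 and VLAN 101 to AGN-K1102 is an assumption made for illustration.

```python
from typing import Dict, Optional, Set


def forwarding_map(vlan_owner: Dict[int, str],
                   alive: Set[str]) -> Dict[int, Optional[str]]:
    """Return which node forwards each VLAN given the set of live nodes.

    In steady state each VLAN stays with its configured owner. If the
    owner is down, a surviving node takes over; if no node is alive,
    the VLAN has no forwarder (None).
    """
    survivors = sorted(alive)
    result: Dict[int, Optional[str]] = {}
    for vlan, owner in vlan_owner.items():
        if owner in alive:
            result[vlan] = owner
        else:
            result[vlan] = survivors[0] if survivors else None
    return result


# Assumed steady-state ownership (hypothetical split of the two VLANs).
owners = {100: "AGN-K1101", 101: "AGN-K1102"}

steady = forwarding_map(owners, {"AGN-K1101", "AGN-K1102"})
after_failure = forwarding_map(owners, {"AGN-K1101"})
```

In the steady case each node carries only its own VLAN, so the load is shared; after AGN-K1102 fails, AGN-K1101 forwards both VLANs.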

The following configuration example shows the implementation of active/active per VLAN MC-LAG for VLANs 100 and 101, on the AGN nodes, AGN-K1101 and AGN-K1102, and the Access Node, ME-K0904.

Figure 11 Per VLAN Active/Active MC-LAG

Aggregation Nodes Configuration

AGN1: Active Point-of-Attachment (PoA) AGN-A9K-K1101: ASR9000

For reference throughout this document, here is a list of settings used for ICCP-SM configuration. The ICCP-SM redundancy group is configured as follows:

■ Multi-homing node ID (1 or 2, unique per node).

■ ICCP peer address. Since ICCP works by establishing an LDP session between the PoAs, the peer's LDP router ID should be configured.

■ Backbone interfaces. If all interfaces listed go down, core isolation is assumed and a switchover to the standby PoA is triggered.

Standby Point-of-Attachment (PoA) AGN-A9K-K1102: ASR9000

The ICCP redundancy group is configured as follows:

In this example, the Ethernet access node is a Cisco Ethernet switch running IOS. Configurations for other access node devices can be easily derived from this configuration example, given that it shows a simple Ethernet trunk configuration for each interface.

Ethernet Access Rings

In addition to hub-and-spoke access deployments, the Connected Rail Solution design supports native Ethernet access rings off the MPLS Transport domain. These Ethernet access rings are composed of Cisco Industrial Ethernet switches, providing ruggedized and resilient connectivity to many trackside devices.

The Ethernet access switch provides transport of traffic from the trackside Fluidmesh radios and other trackside components. To provide segmentation between services over the Ethernet access network, the access switch implements 802.1q VLAN tags to transport each service. Ring topology management and resiliency for the Ethernet access network is enabled by implementing Cisco REP segments in the network.
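The resiliency idea behind a ring protected by a blocked port can be illustrated with a small Python model. This is a conceptual sketch only and does not implement the REP protocol: the switch numbering, the choice of blocked link, and the helper names are all hypothetical. It shows that a ring with one blocked link is loop-free, and that unblocking that link after a single link failure restores full connectivity.

```python
from collections import deque
from typing import FrozenSet, Optional, Set

Link = FrozenSet[int]


def ring_links(n: int) -> Set[Link]:
    """Links of a ring of n switches (n >= 3), numbered 0..n-1."""
    if n < 3:
        raise ValueError("this model needs at least three switches")
    return {frozenset((i, (i + 1) % n)) for i in range(n)}


def forwarding_links(n: int, blocked: Link,
                     failed: Optional[Link] = None) -> Set[Link]:
    """Links that carry traffic.

    With no failure, the blocked link is excluded to break the loop.
    After a failure, the blocked link is opened and the failed link
    is removed instead.
    """
    links = ring_links(n)
    if failed is None:
        return links - {blocked}
    return links - {failed}


def is_connected(n: int, links: Set[Link]) -> bool:
    """Breadth-first search from switch 0 over the forwarding links."""
    adjacency = {i: set() for i in range(n)}
    for link in links:
        a, b = tuple(link)
        adjacency[a].add(b)
        adjacency[b].add(a)
    seen = {0}
    queue = deque([0])
    while queue:
        node = queue.popleft()
        for neighbor in adjacency[node] - seen:
            seen.add(neighbor)
            queue.append(neighbor)
    return len(seen) == n


normal = forwarding_links(4, blocked=frozenset((3, 0)))
recovered = forwarding_links(4, blocked=frozenset((3, 0)),
                             failed=frozenset((1, 2)))
```

A connected topology with exactly n - 1 links is a tree, so both `normal` and `recovered` are loop-free while still reaching every switch.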

The Ethernet access ring is connected to a pair of PANs at the edge of the MPLS Transport network. The PAN maps the service transport VLAN from the Ethernet access network to a transport MPLS L3VPN VRF instance, which provides service backhaul across the Unified MPLS transport network. The REP segment from the access network is terminated on the pair of access nodes, providing closure to the Ethernet access ring.

If the endpoint equipment being connected at the trackside only supports a single default gateway IP address, VRRP is implemented on the pair of PANs to provide a single virtual router IP address while maintaining resiliency functionality.

Pre-Aggregation Node Configuration

The following configurations are the same for both access nodes.

Route Target (RT) constrained filtering is used to minimize the number of prefixes learned by the PANs. In this example, RT 10:10 is the common transport RT which has all prefixes. While all nodes in the transport network export any connected prefixes to this RT, only the MTG nodes providing connectivity to the data center infrastructure and backend systems will import this RT. These nodes will also export the prefixes of the data center infrastructure with RT 1001:1001. The PAN nodes import this RT, as only connectivity with the data center infrastructure is required.
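The effect of this import/export policy can be sketched with a small Python model. This is an illustration of the filtering logic only, not BGP: the prefixes are hypothetical, and the assumption that the data center prefix carries both RTs follows from the statement that the MTGs export connected prefixes to 10:10 and also export data center prefixes with 1001:1001.

```python
from typing import FrozenSet, Iterable, List, Set, Tuple

TRANSPORT_RT = "10:10"       # common transport RT, carries all prefixes
DATACENTER_RT = "1001:1001"  # data center infrastructure prefixes only

Route = Tuple[str, FrozenSet[str]]  # (prefix, exported route targets)


def imported_prefixes(routes: Iterable[Route],
                      import_rts: Set[str]) -> List[str]:
    """Return the prefixes a node installs, given its import RTs.

    A route is imported when at least one of its exported RTs matches
    one of the node's import RTs.
    """
    return sorted(prefix for prefix, rts in routes if rts & import_rts)


# Hypothetical routes advertised in the transport VPN.
routes = [
    ("10.1.1.0/24", frozenset({TRANSPORT_RT})),    # connected on a PAN
    ("10.1.2.0/24", frozenset({TRANSPORT_RT})),    # connected on another PAN
    ("172.16.0.0/16", frozenset({TRANSPORT_RT, DATACENTER_RT})),  # behind MTG
]

pan_table = imported_prefixes(routes, {DATACENTER_RT})
mtg_table = imported_prefixes(routes, {TRANSPORT_RT})
```

The PAN ends up with only the data center prefix, while the MTG learns every prefix in the transport VPN, which is the reduction in PAN table size that the policy is designed to achieve.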

Ethernet Access Ring NNI Configuration

IP/MPLS Access Ring NNI Configuration

This interface has two service instances configured. The untagged service instance provides the Layer 3 connectivity for the MPLS transport. The tagged service instance closes the Ethernet access ring and REP segment with the other access node.

The following configuration example shows how VRRP is implemented on each access node to enable a single gateway IP address for an endpoint device.

Ethernet Access Node Configuration

The identical configuration is used for each Ethernet access switch in the ring. Only one switch configuration is shown here.

Ethernet Ring NNI Configuration

UNI to Trackside Radio Configuration

L3VPN Service Implementation

Layer 3 MPLS VPN Service Model

This section describes the implementation details and configurations for the core transport network required for Layer 3 MPLS VPN service model.

This section is organized into the following topics:

■ MPLS VPN Core Transport, which gives the implementation details of the core transport network that serves all the different access models.

■ L3VPN Hub-and-Spoke Access Topologies, which describes direct endpoint connectivity at the PAN.

■ L3VPN Ring Access Topologies, which provides the implementation details for REP-enabled Ethernet access rings.

Note: ASR 903 RSP1 and ASR 903 RSP2 support L3VPN Services with non-MPLS access.

Figure 12 MPLS VPN Service Implementation

This section describes the L3VPN PE configuration on the PANs connecting to the access network, the L3VPN PE configuration on the MTGs in the core network, and the route reflector required for implementing the L3VPN transport services.

This section also describes the Border Gateway Protocol (BGP) control plane aspects of the L3VPN service backhaul.

Figure 13 BGP Control Plane for MPLS VPN Service

MPLS Transport Gateway MPLS VPN Configuration

This is a one-time MPLS VPN configuration done on the MTGs. No modifications are made when additional access nodes or other MTGs are added to the network.

MTG-1 VPNv4/v6 BGP Configuration

MTG-2 VPNv4/v6 BGP Configuration

Note: Each MTG has a unique RD for the MPLS VPN VRF to properly enable BGP FRR Edge functionality.

Centralized CN-RR Configuration

The BGP configuration requires the small change of activating the neighborship when a new PAN is added to the core/aggregation network.

Centralized vCN-RR Configuration

L3VPN over Hub-and-Spoke Access Topologies

This section describes the implementation details of direct endpoint connectivity at the PAN over hub-and-spoke access topologies.

Direct Endpoint Connectivity to PAN Node

This section shows the configuration of PAN K1401 to which the endpoint is directly connected.

MPLS VPN PE Configuration on PAN K1401

Directly-attached Endpoint UNI

L3VPN over Ring Access Topologies

L3VPN transport over ring access topologies is implemented for REP-enabled Ethernet access rings. This section shows the configuration for the PANs terminating the service from the Ethernet access ring running IOS-XR, as well as a sample router access node.

PAN dual homing is achieved by a combination of VRRP, Routed pseudowire (PW), and REP providing resiliency and load balancing in the access network. In this example, the PANs, AGN-1 and AGN-2, implement the service edge (SE) for the Layer 3 MPLS VPN transporting traffic to the data center behind the MTG. A routed BVI acts as the service endpoint. The Ethernet access network is implemented as a REP access ring and carries a dedicated VLAN to Layer 3 MPLS VPN-based service. A PW running between the SE nodes closes the service VLAN providing full redundancy on the ring.

VRRP is configured on the Routed BVI interface to ensure the endpoints have a common default gateway regardless of the node forwarding the traffic.
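How the two service edge nodes decide which one answers for the shared gateway address can be sketched as follows. This is a simplified Python model of VRRP master selection (highest priority wins, with the higher primary IP address as the tie-breaker); it ignores preemption settings and timers. The router names reuse AGN-1 and AGN-2 from the text, while the priorities and addresses are hypothetical.

```python
import ipaddress
from typing import Iterable, NamedTuple


class VrrpRouter(NamedTuple):
    name: str
    priority: int
    ip: str  # primary address on the routed interface


def elect_master(routers: Iterable[VrrpRouter]) -> VrrpRouter:
    """Pick the router with the highest priority; break ties on higher IP."""
    candidates = list(routers)
    if not candidates:
        raise ValueError("no VRRP routers are available")
    return max(
        candidates,
        key=lambda r: (r.priority, ipaddress.ip_address(r.ip)),
    )


# Hypothetical priorities and interface addresses.
agn1 = VrrpRouter("AGN-1", priority=110, ip="192.0.2.2")
agn2 = VrrpRouter("AGN-2", priority=100, ip="192.0.2.3")

master = elect_master([agn1, agn2])
master_after_failure = elect_master([agn2])
```

The endpoints keep using the same virtual gateway address in both cases; only the node that answers for it changes.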

Sample Access Node Configuration

Connected Train Implementation

This section includes the following major topics:

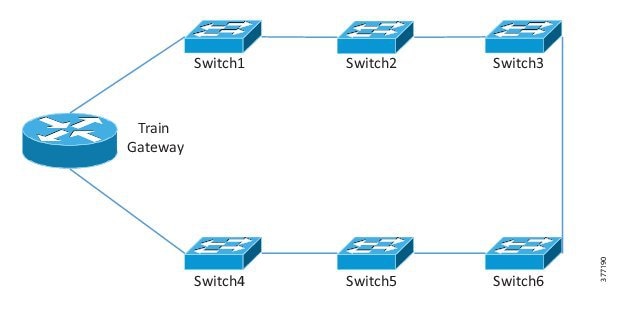

REP Ring

To maintain a resilient switched network onboard the train, the switches are connected in a ring topology configured with Cisco REP. The onboard gateway can be connected in line with the ring or attached to the ring as a "router-on-a-stick." If the onboard gateway is cabled in line with the ring, it must be configured to close the ring. If the ring is not closed, it will not have the proper failover protection. Figure 14 shows an example with the gateway in line with the ring and Figure 15 shows an example of the gateway attached to a single switch.

Figure 14 Train Gateway in line with REP Ring

Figure 15 Train Gateway Singly Attached to Switch

Neither the Lilee ME-100 nor the Klas Telecom TRX routers support REP; therefore, if the gateway is put in line with the ring, the connected switches must be configured with REP Edge No-Neighbor (RENN). This allows the ring to close while maintaining failure protection and a loop-free architecture. The main reason to put the gateway in line with the REP ring is that the switches have only two Gigabit Ethernet connections. In this case, putting the gateway in line on the Gigabit ports maintains a high-bandwidth ring. If the switch ports are all the same speed, then attaching the router on a single port could be operationally less complex. The following is an example of a switch port connected to an in-line gateway.

The following is an example of a switch configured as an edge when the gateway is not in line.

The interface facing the gateway in this case is configured as a trunk.

Gateway Mobility

Lilee Systems

The Lilee-based solution requires an onboard gateway, the ME-100, and an offboard mobility anchor, the virtual Lilee Mobility Controller (vLMC). The ME-100 supports a number of cellular, Wi-Fi, and Ethernet connections for the offboard WAN connectivity. In this system, the cellular and Ethernet ports were used for validating connectivity to the trackside infrastructure.

ME-100

Please refer to Wireless Offboard for the specific configurations for LTE and Fluidmesh.

Each mobile network must be attached to a VLAN interface configured on the ME-100. When the Layer 2 mobility function is enabled on the ME-100 and vLMC, these mobile networks will be connected at Layer 2 to the LAN side of the vLMC. It is therefore important to ensure the addresses in the mobile network subnet are not duplicated by the addresses on the LAN side of the vLMC.
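The duplicate-address concern above can be checked before Layer 2 mobility is enabled. The following is a minimal Python sketch, not a Lilee tool; the addresses and the function name find_duplicates are hypothetical and only illustrate comparing the hosts on the train side with the hosts on the LAN side of the vLMC.

```python
import ipaddress

def find_duplicates(mobile_hosts, lan_hosts):
    """Return addresses that appear on both sides of the Layer 2 bridge."""
    mobile = {ipaddress.ip_address(a) for a in mobile_hosts}
    lan = {ipaddress.ip_address(a) for a in lan_hosts}
    return sorted(mobile & lan)

# Hypothetical hosts that would share one bridged subnet.
train_side = ["10.1.10.11", "10.1.10.12", "10.1.10.20"]
vlmc_lan_side = ["10.1.10.1", "10.1.10.20"]

duplicates = find_duplicates(train_side, vlmc_lan_side)
print([str(a) for a in duplicates])
```

Any address reported here would need to be changed on one side, since both hosts would sit in the same broadcast domain once the tunnels are up.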

The LAN connections can be configured as access ports or 802.1q trunk ports. In this system, the ME-100 was inserted into the REP ring with the LAN ports configured as trunks. The configuration is given below.

Enabling Layer 2 mobility on the ME-100 and vLMC will cause tunnels to be created between the devices and enable Layer 2 connectivity between the two LANs. This will enable the vLMC to manage seamless roaming between the WAN interfaces and maintain Layer 2 connectivity between the LANs.

vLMC

The Mobility Controller is used as the topological anchor point for the ME-100s. It is a Layer 3 device with the ability to bridge Layer 3 interfaces to Layer 2 VLANs. The Lilee Mobility Controller (LMC) can be installed as a physical network appliance or as a virtual machine. In this system, the LMC is virtualized and has dual WAN connections to keep the cellular network separate from the wireless backhaul network. The LAN Ethernet connection is bridged to a VLAN interface which is used for Layer 2 mobility.

Enabling the Layer 2 mobility service on the vLMC only requires configuring the interface that will be bridged to the VLAN interfaces and which VLANs will be bridged.

With the above configuration, the Ethernet port is logically equivalent to a trunk port and all frames will be VLAN tagged. To configure the bridge interface with a single VLAN, a VLAN identifier can be appended to the line.

In this scenario, the switch port should be configured as an access port in VLAN 10. In the former example, the switch port should be configured as an 802.1q trunk.

In the case of a vLMC with the Ethernet port acting as a trunk, the port associated with this virtual Ethernet interface should have the VLAN ID set to ALL (4095). Additionally, it must have promiscuous mode set to Accept. This is due to the behavior of the virtual machine environment. Even though the port is in a vSwitch, it does not do MAC learning. Because of this, it will filter out any traffic that does not match the MAC address of the Virtual Machine Network Interface Controller (vmNIC). The vLMC, however, uses a different MAC address for the VLAN interfaces, which does not match the vmNIC MAC. Without promiscuous mode, traffic to these VLANs would be dropped.
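The filtering behavior described above can be modeled in a few lines. This is a simplified Python sketch under stated assumptions: the MAC addresses are invented, multicast is ignored, and the function vswitch_delivers is hypothetical rather than part of any hypervisor API.

```python
BROADCAST = "ff:ff:ff:ff:ff:ff"

def vswitch_delivers(frame_dst_mac, vmnic_mac, promiscuous):
    """Model a vSwitch port that does no MAC learning.

    Without promiscuous mode, only frames addressed to the vmNIC MAC
    (or broadcast) reach the virtual machine.
    """
    if promiscuous:
        return True
    dst = frame_dst_mac.lower()
    return dst in (vmnic_mac.lower(), BROADCAST)

# Hypothetical addresses: the VLAN interface uses a MAC that differs from the vmNIC.
VMNIC_MAC = "00:50:56:aa:bb:01"
VLAN_IF_MAC = "02:00:00:00:00:0a"

print(vswitch_delivers(VLAN_IF_MAC, VMNIC_MAC, promiscuous=False))
print(vswitch_delivers(VLAN_IF_MAC, VMNIC_MAC, promiscuous=True))
```

The first call models the dropped traffic; the second shows why setting promiscuous mode to Accept restores delivery to the VLAN interfaces.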

Load Balancing

The Lilee solution allows for equal and unequal load balancing between the different links used for roaming. The load balancing profile can also be changed depending on the system conditions. For instance, in the steady state, the Fluidmesh radios could receive 100% of the traffic. A condition could be configured that if the Fluidmesh connection were to become unavailable, then the traffic would be split evenly over the remaining cellular interfaces. This scenario is explained below.

With the "default" policy activated, the tunnel output looks like the following.

The "U" flag indicates that the tunnel is up. The "A" flag indicates that the tunnel is active. When the Fluidmesh connection is lost and the Layer 2 mobility tunnel is no longer active on that link, the "lte-only" policy takes effect.

As seen above, both dialer interfaces are up and active while the VLAN 200 tunnel is not up or active.
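The switch between the two policies can be summarized as a small decision function. This Python sketch only models the behavior described in this section; it is not LileeOS syntax, and the function name select_policy and the dictionary layout are assumptions made for illustration.

```python
def select_policy(tunnel_up):
    """Pick a load balancing policy from tunnel state.

    tunnel_up maps a tunnel name to True (up) or False (down).
    Returns the policy name and the share of traffic per tunnel.
    """
    if tunnel_up.get("vlan 200"):
        # Steady state: the Fluidmesh link carries all traffic.
        return "default", {"vlan 200": 1.0}
    cellular = [name for name in ("dialer 0", "dialer 1") if tunnel_up.get(name)]
    if cellular:
        # Fluidmesh lost: split evenly over the remaining cellular links.
        share = 1.0 / len(cellular)
        return "lte-only", {name: share for name in cellular}
    return "none", {}

print(select_policy({"vlan 200": True, "dialer 0": True, "dialer 1": True}))
print(select_policy({"vlan 200": False, "dialer 0": True, "dialer 1": True}))
```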

The interfaces also support unequal load balancing as well as numerous event conditions to influence the load balancing and failover behavior. An example of unequal load balancing is given below.

With this configuration, dialer 1 will receive 50 times more traffic than dialer 0, and VLAN 200 will receive twice as much traffic as dialer 1 and 100 times more traffic than dialer 0. This can be seen in the show output of the tunnels.

This can also be verified in the traffic counters on the tunnel interfaces.

The TX bytes through Dialer 1 are approximately 50 times more than the TX bytes through Dialer 0, and VLAN 200 has approximately twice as many TX bytes as Dialer 1.
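The ratios quoted above follow from relative weights. As a worked example in Python, assume weights of 1, 50, and 100 for dialer 0, dialer 1, and VLAN 200; these values are an assumption chosen to reproduce the stated ratios and are not taken from the validated configuration.

```python
from fractions import Fraction

# Assumed relative weights that reproduce the 1 : 50 : 100 ratio in the text.
weights = {"dialer 0": 1, "dialer 1": 50, "vlan 200": 100}

def traffic_shares(weights):
    """Convert relative weights into the fraction of traffic per tunnel."""
    total = sum(weights.values())
    return {name: Fraction(weight, total) for name, weight in weights.items()}

shares = traffic_shares(weights)
for name, share in shares.items():
    print(f"{name}: {float(share):.1%}")
```

Under these assumed weights, VLAN 200 carries roughly two thirds of the traffic, dialer 1 roughly one third, and dialer 0 well under one percent, which is the proportion to look for in the TX byte counters.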

Other event conditions and mobility profile options can be found in the LileeOS Software Configuration Guide and Command Reference Guide.

Klas Telecom TRX-R6

The TRX product runs the ESR5921 router as a virtual machine. Therefore, the TRX hardware must be configured independently of the virtual router. The configuration method is designed to have much of the same look and feel as Cisco IOS. The steps for configuring it for a Cisco ESR5921 image are described below.

Virtual Machine Deployment

If a storage pool does not yet exist on the TRX, it must be created.

The ESR5921 qcow file then needs to be copied from a Secure Copy (SCP) or TFTP server to the TRX-R6 disk storage.

Once the image is copied to the filesystem, the virtual machine needs to be created.

The Klas router uses vSwitches to provide a connection from the physical interfaces to the virtual interfaces in the virtual machine. They must be created prior to configuring them in the virtual machine.

The vSwitches can now be configured on the virtual machine. It is important to choose the correct Network Interface Controller (NIC) type when adding them to a virtual machine. The options are virtio and e1000. Leaving off the NIC type will result in configuring it with the default type.

After configuring all the virtual machine options, it must be started.

Then the console can be connected.

Connecting to the console will first bring the user to the KlasOS wrapper which sits between the KlasOS hardware and the virtual machine. From this wrapper, the virtual machine must also be started.

Once the virtual machine is started, the ESR CLI can be accessed.

Single Gateway

Proxy Mobile IPv6 (PMIPv6) is used in this system to enable seamless roaming between the WAN interfaces on the train gateway to support failover and load sharing. The onboard IP gateway is known as the Mobile Access Gateway (MAG) and it anchors itself to the Local Mobility Anchor (LMA) hosted on an ASR-100X in the data center. Once the MAG is configured with all the available roaming interfaces, it can use any or all of the links for the train to trackside traffic.

Note: Changes to the PMIPv6 configuration cannot be made while there are bindings present.

Since PMIPv6 is built around IPv6, IPv6 must be enabled on the MAG.

Note: The MAG must also be time synced to the LMA. This can be accomplished by using a common Network Time Protocol (NTP) source.

Each MAG is configured with a unique IP address on a loopback interface that serves as the unique identifier to the LMA. It is known as the home address.

Each WAN interface must be configured with the proper Layer 2/3 configurations to connect to the cellular or wireless network.

Please refer to Wireless Offboard for the specific configurations for LTE and Fluidmesh.

All the data traffic from the onboard train network, including passengers and employees, enters into the MAG on one or more interfaces connected to the switching network. In this implementation, each traffic type was configured on a different subinterface with an 802.1q tag.

Just as the LMA identifies each MAG by its home address, the MAG must know the LMA by a single IP address. Each MAG WAN interface must have a route to the LMA's WAN-facing interface.

The PMIPv6 configuration is divided into two sections, pmipv6-domain and pmipv6-mag.

The domain configuration contains the encapsulation type, LMA definition, Network Access Identifier (NAI), and mobile map definition if desired. To enable the multipath component of the MAG, the encapsulation must be set to udptunnel instead of gre. The LMA address must be reachable from all WAN interfaces, whether over the public cellular network or the operator's wireless network. Since the MAG is acting as the Mobile Node (MN), it requires an NAI for itself, which takes the form [user]@realm. In this case, the user represents the MAG and the realm represents a way to bundle all the mobile networks from all MAGs in a single network definition on the LMA. This NAI then points to the previously configured LMA.

Contained in the pmipv6-mag section are all the details specific to the particular MAG. The MAG name, MAG_T1 in this case, must be unique among the other MAGs connected in this domain to the LMA. It represents an entire train and all the traffic behind it. An important feature to note is the heartbeat. When configured on the MAG and LMA, each device will send a heartbeat message with the source address of each configured roaming interface. The MAG and LMA will then maintain a table of the status of each roaming interface. If a connection fails, the heartbeat would time out and the tunnel over that connection would be brought down. This way the MAG and LMA can accurately know which paths are active.
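The per-interface status table described above can be pictured with a short model. This Python sketch is illustrative only: the interval, the retry count, the timeout rule, and the path labels LTE and FLUIDMESH are assumptions, and the class HeartbeatTable is hypothetical rather than a representation of the IOS implementation.

```python
class HeartbeatTable:
    """Track the last heartbeat seen on each roaming interface."""

    def __init__(self, interval, retries):
        # Assumed rule: a path is down after `retries` missed intervals.
        self.timeout = interval * retries
        self.last_seen = {}

    def receive(self, path, now):
        """Record a heartbeat received over the given path at time `now`."""
        self.last_seen[path] = now

    def active_paths(self, now):
        """Return the paths whose heartbeat has not timed out."""
        return sorted(
            path for path, seen in self.last_seen.items()
            if now - seen <= self.timeout
        )

table = HeartbeatTable(interval=10, retries=3)
table.receive("LTE", now=0)
table.receive("FLUIDMESH", now=0)
print(table.active_paths(now=20))   # both paths still within the timeout

table.receive("LTE", now=30)        # FLUIDMESH heartbeats have stopped
print(table.active_paths(now=45))   # only LTE remains active
```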

The other key feature in use is the logical mobile network feature. With a traditional mobile node, the client device is seen as mobile and the LMA handles the addressing and mobility. With a logical mobile network, the MAG is considered mobile as well as all the networks configured behind it. In this case, the LMA handles mobility for the MAG and its subnets, but does not provide mobility to a specific client device. In other words, the LMA builds tunnels to the MAG, not the end device. The logical-mn configuration indicates which interfaces are connected to the end devices.

The LMA represents the topological anchor point for all the MAGs. The PMIPv6 tunnels are terminated here and are created dynamically when bindings are formed.

The LMA must have a single IP address that is reachable via the public cellular network and the private wireless network.

The LMA must have an ip local pool configured for the MAG home addresses as a placeholder. The LMA does not hand out DHCP addresses from this pool.

The PMIPv6 specific configuration is similar to the MAG in that it has a separate domain and LMA configuration section.

The minimum requirements for the domain definition are the udptunnel encapsulation and the NAI definition. If the MAGs can share a common summary address for the mobile networks, they can be known by the LMA with a common realm definition. This allows for one network definition to be used instead of unique network statements for each MAG.

Contained in the pmipv6-lma section are all the details specific to the LMA. The configuration here is similar to the MAG. Rather than being configured statically, as is done for traditional mobile nodes where the MAG is stationary, the MAGs are learned dynamically so that they can connect with multiple roaming interfaces. The pool statement ensures that the connecting MAGs have a home interface in the defined range. The mobile network pool configures the subnets being used for all the traffic behind the trains. In this example, 10.0.0.0/8 indicates the entire pool of addresses in use across all the trains, but the LMA will only expect mobile subnets with a 24-bit mask. This allows the operator to use the second octet to represent a specific train, the third octet to represent a specific traffic type, and the fourth octet to represent a specific host.
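The octet-based addressing plan can be expressed with the Python standard library. This is a sketch of the scheme as described above; the train and traffic-type numbers are hypothetical, and the helper names mobile_subnet and decode are introduced only for illustration.

```python
import ipaddress

POOL = ipaddress.ip_network("10.0.0.0/8")  # pool covering all trains

def mobile_subnet(train, traffic_type):
    """Build the /24 mobile subnet for one traffic type on one train."""
    if not (0 <= train <= 255 and 0 <= traffic_type <= 255):
        raise ValueError("train and traffic_type must each fit in one octet")
    return ipaddress.ip_network(f"10.{train}.{traffic_type}.0/24")

def decode(address):
    """Split an address from the pool into train, traffic type, and host."""
    addr = ipaddress.ip_address(address)
    if addr not in POOL:
        raise ValueError(f"{address} is outside {POOL}")
    _, train, traffic_type, host = addr.packed
    return {"train": train, "traffic_type": traffic_type, "host": host}

subnet = mobile_subnet(train=12, traffic_type=10)
print(subnet, subnet.subnet_of(POOL))
print(decode("10.12.10.57"))
```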

Once the configuration is complete, the MAG will initiate a connection with the LMA and the bindings will be created. It should be verified that the PMIPv6 tunnels are active and the LMA has learned the mobile networks from the MAG.

Mobile maps can be used to enable application-based routing for a greater degree of load sharing between the roaming interfaces. Without the mobile map feature, no way exists to guarantee traffic will choose one particular path when multipath is enabled. The mobile map feature works with access lists to assign a specific order of roaming interfaces to a given traffic type.

Access lists must be created for all traffic that will be subject to the mobile map configuration under PMIPv6.

The PMIPv6 domain configuration section contains the access list to link-type mapping. The link-type names must match the labels given to the roaming interfaces for the mobile maps to work properly.

Many mobile maps can be configured, but only one can be active on the MAG and LMA. Multiple access-lists can be added by using different sequence numbers in the mobile-map configuration. The link-type list defines which links will be used in order by the matching traffic. If the first link-type is unavailable, the second link will be used. The keyword NULL can be added to the end to drop traffic if the configured link-types are unavailable. If the link-type does not match the entry in the binding, the traffic will not be filtered properly.
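The link selection order can be modeled as follows. This Python sketch is an illustration and not IOS syntax: the traffic classes stand in for access-list matches, the link-type labels FLUIDMESH and LTE are hypothetical, and falling back to default routing when no listed link is available is assumed from the behavior described for the multi-gateway case.

```python
NULL = "NULL"  # keyword that drops traffic when no earlier link is available

# Hypothetical mobile map: (sequence number, matched traffic, ordered link-types).
MOBILE_MAP = [
    (10, "video", ["FLUIDMESH", "LTE"]),
    (20, "passenger", ["LTE", NULL]),
]

def select_link(traffic_class, available, mobile_map=MOBILE_MAP):
    """Return the link used for a traffic class given the available links."""
    for _seq, match, link_types in sorted(mobile_map, key=lambda entry: entry[0]):
        if match != traffic_class:
            continue
        for link in link_types:
            if link == NULL:
                return "drop"
            if link in available:
                return link
        break
    return "default routing"

print(select_link("video", {"FLUIDMESH", "LTE"}))
print(select_link("video", {"LTE"}))
print(select_link("passenger", {"FLUIDMESH"}))
```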

Once the mobile map is configured, it must be enabled in the pmipv6-mag configuration section.

Once the bindings are established, there will be dynamic route-map entries created referencing those access-lists.

The mobile map configuration on the LMA is similar to the MAG. Access lists must be created for all traffic that will be subject to the mobile map configuration under PMIPv6.

In the following example, the traffic sources are networks behind the LMA in the data center.

The PMIPv6 domain configuration section contains the access list to link-type mapping. The link-type names must be consistent across the connected MAGs for the mobile maps to work properly.

As with the MAG, if the link-type does not match the entry in the binding, the traffic will not be filtered properly.

Once the mobile map is configured, it must be enabled in the pmipv6-lma configuration section.

The interface statement helps enable the mobile map on that interface. Once the bindings are established, dynamic route-map entries will be created.

Multi Gateway

A dual-MAG or multi-MAG setup gives the operator the flexibility to have more roaming interfaces for a set of mobile networks that can be used for redundancy, failover, or load sharing. However, one restriction in PMIPv6 is that two MAGs cannot advertise the same mobile network. Therefore, the redundant MAGs must be configured to look like a single MAG to the LMA. This is accomplished by using a First Hop Redundancy Protocol (FHRP) to let the MAGs share the mobile networks. One MAG will be the active router for a set of mobile networks and the other MAG will be the backup. The LMA will see one MAG entry with the roaming interfaces from both MAGs.

If MAG failover is the primary goal, configuring one MAG as active for one or more mobile networks and backup for the others will accomplish this. The limitation with this approach is that a MAG could receive a disproportionate amount of traffic depending on the mobile network distribution between the MAGs. Another approach is by splitting the user traffic in half and configuring each MAG to be the active router for each half of the traffic. This approach provides load balancing and failover for all traffic types.

The traffic must be split in a way that makes logical and logistical sense given the physical environment. If half of the wireless access points on a train are in one mobile network and the other half are in a different mobile network, a wireless client that roams will at some point receive a new IP address, which causes some disruption to its connection. If access points for both mobile networks are present in every car, a user who roams from car to car could potentially roam back and forth between the mobile networks. The advantage of this approach is that each car has the same configuration.

The alternate approach is to use one mobile network for the back half of a train and another mobile network for the front half. As long as a mobile user stays in one half of the train, the user is not likely to roam to a different network. The disadvantage of this approach is that a car cannot be added or removed without ensuring that it is configured for the correct spot in the train consist.

Using multiple MAGs also has implications for the switching network on the train. A single gateway can be connected in line with the REP ring or directly off one of the switches. Multiple MAGs, however, cannot be connected in line, because a REP ring supports only one edge whereas an in line gateway must be configured as the edge. Therefore, multiple MAGs must be connected on trunk ports and not REP edge ports.

In this system, the first approach will be used with identically configured cars.

Each traffic type that is being load balanced across the MAGs will need its own unique subnet on each MAG. It may be preferable to keep some traffic on a single subnet for ease of deployment, like video surveillance. In this case, the traffic will have failover protection, but it won't be load balanced. Each subnet is then configured on a separate interface on both MAGs. In this system, VRRP is used as the FHRP to provide a virtual gateway for the mobile networks. One configuration example for a traffic type is given below. In this example, half the clients would be connected to VLAN 10 and the other half on VLAN 15.

The preempt delay is configured to ensure all forwarding paths are up before the router becomes the master again. The priority is set higher than the default (100) to ensure it is the primary path.

As seen in the VRRP status, MAG1 is the master for VLAN 10 and the backup for VLAN 15 while MAG2 is the backup for VLAN 10 and the master for VLAN 15. In the event of a MAG failure, the other MAG would become master for both VLANs.
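The master and backup roles above follow from the configured priorities. The Python sketch below models only the priority comparison; the value 110 is an assumption (the text states only that it is higher than the default of 100), and tie-breaking and timers are ignored.

```python
# Assumed priorities per VLAN: 110 on the preferred MAG, default 100 on the other.
PRIORITIES = {
    "VLAN 10": {"MAG1": 110, "MAG2": 100},
    "VLAN 15": {"MAG1": 100, "MAG2": 110},
}

def vrrp_master(priorities, alive):
    """Return the highest-priority router that is still alive, or None."""
    candidates = {router: p for router, p in priorities.items() if router in alive}
    if not candidates:
        return None
    return max(candidates, key=candidates.get)

for vlan, prios in PRIORITIES.items():
    print(vlan, "normal:", vrrp_master(prios, {"MAG1", "MAG2"}),
          "| MAG1 failed:", vrrp_master(prios, {"MAG2"}))
```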

Because the MAGs are configured for load balancing, mobile maps are necessary for proper operation. As described in the single gateway configuration, access-lists must be configured for all the mobile networks. The access-lists should be the same on both MAGs to ensure consistent traffic matching.

Cases may exist where further control of the VRRP decision-making process is desired. For instance, if all the WAN links lost their connection but the gateway is still active, the VRRP state will not change. To mitigate this issue, VRRP object tracking with IP SLA can be used.

The client subinterface is then configured with VRRP tracking on the previously configured object.
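The effect of tracking can be sketched as a priority adjustment. In this Python model, the tracked object represents WAN reachability as measured by IP SLA; the decrement of 20 and the priorities of 110 and 100 are assumptions for illustration and are not taken from the validated configuration.

```python
def effective_priority(configured, decrement, tracked_up):
    """Lower the VRRP priority while the tracked object is down."""
    if tracked_up:
        return configured
    return max(1, configured - decrement)

PEER_PRIORITY = 100  # assumed default priority on the backup MAG

for wan_up in (True, False):
    priority = effective_priority(configured=110, decrement=20, tracked_up=wan_up)
    role = "master" if priority > PEER_PRIORITY else "backup"
    print(f"WAN reachable={wan_up}: priority {priority} -> {role}")
```

With these assumed values, losing all WAN links drops the priority below that of the peer, so the peer can take over the virtual gateway even though the first MAG is still running.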

Each MAG is configured nearly the same so the LMA will consider them as one MAG. This includes the loopback interface used as the home interface, the MAG name, the domain name, and the NAI. Since each MAG will be active for one set of mobile networks and backup for another, all the mobile networks must be configured in the PMIPv6 section.

The difference between the PMIPv6 configurations will be found in the roaming interface definition. Each MAG can use different interfaces for roaming and the labels must also be different between the MAGs.

The mobile-map definition is similar to the single gateway configuration for each respective MAG.

In this example, the traffic is configured to always prioritize the Fluidmesh connection over the cellular interfaces.

The LMA will see the two MAGs as a single MAG, which means the mobile maps and access-lists need to be configured properly to ensure an optimal traffic flow. Each access-list should contain the mobile networks that are active for a particular MAG. Mobile maps that point to these access-lists will ensure that traffic is routed properly.

The mobile map configuration will ensure that the MAGs receive the traffic that is active on them. In the event of a MAG failure, the second link-type configured will ensure that the traffic takes the higher bandwidth link on the remaining MAG. If the configured link-types are unavailable, the traffic will fall back to the standard PMIPv6 routing decisions.

Since the MAGs appear as one to the LMA, the show output will look similar to the single gateway model.

Wireless Offboard

LTE

Lilee

Each cellular modem requires a cellular-profile to connect to the service provider. This can include the access point name (APN), username, and password. Additionally, the cellular modem may have slots for one or two Subscriber Identity Module (SIM) cards.

Dialer interfaces must then be configured to bind the cellular profile to the physical modem.

Klas

The cellular configuration involves configuring the physical interfaces under KlasOS and the virtual interfaces within the ESR virtual machine.

The Klas router is designed to work with many different types of cellular modems and therefore must have a consistent way to interface with them. When the LTE modem receives an IP address from the mobile provider, it will perform Network Address Translation (NAT) and act as a DHCP server to the Klas gateway.

Therefore, the modem interface must be configured as a DHCP client.

The resulting interface status is shown below.

The modem interface must then be associated with the virtual machine. First, the virtual machine must have the appropriate number of vSwitches configured.

Next, a DHCP pool must be created where the ESR virtual interface connected to the modem interface is the DHCP client.

A vSwitch is then created and tied to the specific modem interface.

Finally, a Port Address Translation (PAT) statement is configured to match on all outgoing traffic leaving the modem interface.

After configuring the KlasOS modem interface, the virtual interface on the ESR is configured to receive a DHCP address from the pool created above.

Fluidmesh

In the Connected Rail Solution, Fluidmesh wireless radios are used to provide the connectivity from the train to the trackside network. For optimum Radio Frequency (RF) coverage, there should be at least two train radios, one at each end of the train consist. When the train radios are configured in the same mobility group, they will both evaluate the RF path to the trackside and the radio with the best connection will become the active path. The spacing between trackside radios should be determined by a site survey. Figure 16 depicts a train at two positions along the trackside. The green line indicates the active RF path while the red line depicts a backup path. As the train moves down the track, the path will change depending on which RF path is best.

Figure 16 Intra Train Fluidmesh Roaming
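The path selection between the two train radios can be pictured with a minimal Python sketch. It assumes received signal strength is the only metric, which is a simplification; the radio names and dBm readings are invented, and the function active_radio is hypothetical rather than a description of the Fluidmesh algorithm.

```python
def active_radio(signal_dbm):
    """Return the train radio with the strongest trackside signal.

    signal_dbm maps a radio name to its received signal strength in dBm
    (values closer to zero are stronger).
    """
    if not signal_dbm:
        return None
    return max(signal_dbm, key=signal_dbm.get)

# Hypothetical readings at two positions along the track.
position_1 = {"front radio": -58, "rear radio": -74}
position_2 = {"front radio": -81, "rear radio": -62}

print(active_radio(position_1))  # front radio
print(active_radio(position_2))  # rear radio
```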

Train Radio Configuration

The radio configuration is done through a web interface. From a PC connected to the 192.168.0.X network, navigate to the radio's IP of 192.168.0.10. The first configuration page is the General mode under the General Settings section.

Figure 17 Train Radio General Mode

This is where the mode and IP addresses are set. All train radios should be configured as a mesh point. The default gateway should be the routed interface on the trackside network facing the Fluidmesh radios.

The next configuration step is on the Wireless Radio page.

Figure 18 Train Radio Wireless Radio

The passphrase configured here is used on all the train radios and trackside radios in the same network. The RF configuration can be done automatically or set manually. This configuration will depend on the site survey.

To configure the radio as a train radio and not a trackside, it must be configured in the FLUIDITY page under Advanced Settings.

Figure 19 Train Radio FLUIDITY Configuration

FLUIDITY is enabled, the unit role is Vehicle, and the vehicle ID is set. All train radios on the same train consist must have the same ID.

When the radio is properly configured, the trackside radios in range will show up under the Antenna Alignment and Stats page with the relative wireless strength.

Figure 20 Train Radio Antenna Alignment and Stats

Lilee

The Fluidmesh radios can be directly connected to the ME-100 gigabit interface or a switch connected to the ME-100 gigabit ports. In this system, they are connected directly to the ME-100.

Klas

Similar to the LTE configuration, both the Klas router physical ports and ESR virtual ports must be configured to connect to the Fluidmesh radios.

Like the cellular interfaces, the physical Ethernet interfaces must be tied to a vSwitch. If multiple Fluidmesh radios are connected to the Ethernet interfaces, they can be configured in the same vSwitch. A one-to-one relationship exists between a vSwitch and an Ethernet port in IOS; therefore, multiple ports in a vSwitch will show up as a single port in IOS.

Once the interface is configured in KlasOS, the interface must be configured within IOS. The port IP is configured in the same subnet as the Fluidmesh radio, which allows IP connectivity between the ESR and the Fluidmesh radios.

Overlay Services Implementation

This section includes the following major topic:

Video Surveillance

The Connected Rail Solution helps provide physical security for the operator's equipment, as well as the employees and passengers using the trains. Cisco VSM is used to provide live and recorded video to security personnel. This section describes the basic configuration and some of the options that are most relevant for a train operator.

Installation and Initial Setup

The Video Surveillance Media Server (VSMS), Video Surveillance Operations Manager (VSOM), Safety and Security Desktop (SASD), and IP Cameras should be installed and set up according to the official Cisco documentation below:

■ Cisco Video Surveillance Operations Manager User Guide, Release 7.8:

– http://www.cisco.com/c/dam/en/us/td/docs/security/physical_security/video_surveillance/network/vsm/7_8/admin_guide/vsm_7_8_vsom.pdf

■ Cisco Video Surveillance Manager Safety and Security Desktop User Guide, Release 7.8:

– http://www.cisco.com/c/dam/en/us/td/docs/security/physical_security/video_surveillance/network/vsm/7_8/sasd/vsm_7_8_sasd.pdf

■ Cisco Video Surveillance Install and Upgrade Guide, Release 7.7 and Higher:

– http://www.cisco.com/c/dam/en/us/td/docs/security/physical_security/video_surveillance/network/vsm/install_upgrade/vsm_7_install_upgrade.pdf

■ Cisco Video Surveillance Virtual Machine Deployment and Recovery Guide for UCS Platforms, Release 7.x:

– http://www.cisco.com/c/dam/en/us/td/docs/security/physical_security/video_surveillance/network/vsm/vm/deploy/VSM-7x-vm-deploy.pdf

■ Cisco Video Surveillance 7070 IP Camera Installation Guide:

– http://www.cisco.com/c/en/us/td/docs/security/physical_security/video_surveillance/ip_camera/7000_series/7070/install_guide/7070.html

■ Cisco Video Surveillance 3050 IP Camera Installation Guide:

– http://www.cisco.com/c/en/us/td/docs/security/physical_security/video_surveillance/ip_camera/3000_series/3050/install_guide/3050.html

The initial installation and setup includes installing instances of the VSMS virtual machine (via the Open Virtualization Format or OVF template available on Cisco.com) at the data center and onboard the train. The servers hosting the VSM virtual machines all run VMware ESXi as the hypervisor, and the hardware itself is expected to be a high performance Cisco UCS server in the data center. Onboard the train, a ruggedized server is used to survive the more severe conditions, such as vibration, that are typical on a train. The data center instances should be configured to include a VSOM for management as well as a Long Term Storage (LTS) server. The VSMS instance onboard the train should be configured to act as a media server for the local cameras.

It is important that Layer 3 connectivity is available between the IP cameras and the VSMS server, as well as between the VSMS, VSOM, and LTS servers, before beginning the installation and configuration of these applications.

Camera Template - Basic 24x7 Recording

VSOM uses the concept of Camera Templates to manage the properties of all similar (by model, role, or other criteria) cameras. To create a new camera template, log in to VSOM and browse to Cameras > Templates. On the Templates tab, create a new Template that will apply to a group of cameras. In this example, a template has been created for all 3050 model cameras.

Figure 21 shows that the Streaming, Recording and Events tab has been selected. From this tab ensure that the Basic Recording: 24x7 schedule has been selected from the drop-down menu, and that for Video Stream A, the far right button has been selected, which indicates that video will be recorded continuously and motion events will be marked in the recording.

Figure 21 VSOM Camera Template - Continuous Recording

Notice that the Video Quality slider has been set to Custom. After doing this, click the Custom hyperlink, which brings up a pop-up window where the custom parameters can be defined. Using this option lets you fine tune the video quality (and perhaps more importantly, the resulting bandwidth that is consumed). In Figure 22, a modest custom quality has been defined that is appropriate for the camera's usage.

Figure 22 VSOM Custom Quality Setting

This is enough information to define the basic camera template for basic recording. Save the template and begin to add cameras.

Still on the Cameras tab, click the Camera tab (see Figure 23), and then click Add. On this page, fill in the required information for the camera including the IP address, access credentials, location, and most importantly the Template that was defined earlier.

Figure 23 VSOM Add Camera and Apply Template

Camera Template - Scheduled Recording

Continuous recording around the clock may be the most common and simplest recording scheme; however, based on security requirements and available storage, it may make more sense to only record during parts of the day. For example, if the trains are only used during specific hours of the day (morning and evening rush hour) and otherwise the trains sit parked, it may only be necessary to record during the active hours.

To implement this use case, a schedule can be defined in VSOM and subsequently applied to one or more camera templates. In the System Settings tab in VSOM, select Schedules under Shared Resources.

Figure 24 VSOM System Settings

Click Add and then enter general information as requested before setting the Recurring Weekly Patterns. In the example in Figure 25, a new Time Slot called Rush Hour is assigned the purple color. By using simple mouse clicks, the schedule is created so that Rush Hour is defined to be between 7:00 AM and 10:00 AM in the morning and between 4:00 PM and 7:00 PM in the evening.

Figure 25 VSOM Custom Schedule
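The Rush Hour time slot can be expressed as a simple membership test, which is useful when reasoning about which recordings to expect. This Python sketch only mirrors the example schedule above; it is not a VSOM API, and the function name in_rush_hour is hypothetical.

```python
from datetime import time

# Time slots from the example schedule: 7:00-10:00 AM and 4:00-7:00 PM.
RUSH_HOUR = [(time(7, 0), time(10, 0)), (time(16, 0), time(19, 0))]

def in_rush_hour(moment):
    """Return True if the given time of day falls inside a Rush Hour slot."""
    return any(start <= moment < end for start, end in RUSH_HOUR)

print(in_rush_hour(time(8, 30)))   # True
print(in_rush_hour(time(12, 0)))   # False
print(in_rush_hour(time(18, 59)))  # True
```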

After the schedule is created, browse to the Camera Template as described earlier. This time, instead of selecting the default schedule called Continuous Recording: 24/7, select the newly created schedule called Morning and Evening Rush Hour. Notice that after the schedule is selected, additional lines appear allowing a different recording option (off, motion, continuous, or continuous with motion) for each time slot on the schedule. Each time slot corresponds to a different color on the graphical schedule.

Figure 26 VSOM Camera Template - Scheduled Recording
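The relationship between time slots and per-slot recording options can be sketched in a few lines of Python. This is only a conceptual model, not a VSOM API: the window boundaries come from the Rush Hour example above, while the slot name Default and the choice of continuous recording during Rush Hour and off otherwise are assumptions made for illustration.

```python
from datetime import time

# Rush Hour windows as described in the text (Figure 25).
RUSH_HOUR_WINDOWS = [
    (time(7, 0), time(10, 0)),   # morning rush hour
    (time(16, 0), time(19, 0)),  # evening rush hour
]

# Recording option per time slot. The option names come from the text
# (off, motion, continuous, continuous with motion); the assignment of
# options to slots and the "Default" slot name are assumptions.
RECORDING_BY_SLOT = {
    "Rush Hour": "continuous",
    "Default": "off",
}


def slot_for(t: time) -> str:
    """Return the name of the time slot that contains t."""
    for start, end in RUSH_HOUR_WINDOWS:
        if start <= t < end:
            return "Rush Hour"
    return "Default"


def recording_mode(t: time) -> str:
    """Return the recording option in effect at time t."""
    return RECORDING_BY_SLOT[slot_for(t)]


for sample in (time(6, 30), time(8, 15), time(12, 0), time(17, 45)):
    print(sample.strftime("%H:%M"), slot_for(sample), recording_mode(sample))
```

With these assumed settings, a camera using the template records at 8:15 AM and 5:45 PM but not at 6:30 AM or noon.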

Event-Based Recording Options

In addition to recording continuously or based on a predetermined schedule, VSM allows video to be recorded when an event or incident occurs. Events can be triggered by motion detection, contact opening or closure, or even a digital soft trigger, for example from a panic button. When an event occurs, multiple actions can be taken, from raising an alert in SASD for security personnel, to automatically pointing the camera to a new position, to recording video for a set amount of time. Additional details about the possible triggers and actions for events are covered in the official Cisco documentation referenced at the beginning of this section. Figure 27 shows an example of several "Motion Started" events.

Connected Edge Storage

Many Cisco IP cameras (such as the 3050 and 7070 models) include a built-in MicroSD card slot that can optionally be used to add video storage directly on the camera. This functionality, which is called Connected Edge Storage, enables the camera to record to the MicroSD card instead of the VSMS.

Enabling the camera to record locally to the MicroSD card allows it to have a backup copy of the video. If the VSMS itself fails, or connectivity between the camera and VSMS fails, the camera will continue to record locally. Using the Auto-Merge feature will allow the camera to automatically copy the locally recorded video over to the VSMS once connectivity is restored, allowing any gaps in the VSMS recording to be filled in from the local copy.

Configuring these features is done in the Advanced Storage section of the Camera Template, as shown in Figure 28. VSM 7.8 added the ability to do scheduled copies of recordings from the camera's MicroSD card to the VSMS.

Figure 28 VSOM Camera Template - Connected Edge Storage
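The gap-filling idea behind Auto-Merge can be illustrated with simple interval arithmetic. The sketch below is a conceptual model only and does not describe how VSM implements the feature; the function names and the sample timestamps (in seconds) are hypothetical.

```python
from typing import List, Tuple

Interval = Tuple[int, int]  # (start, end) in seconds, end exclusive


def find_gaps(recorded: List[Interval], start: int, end: int) -> List[Interval]:
    """Return the parts of [start, end) not covered by the recorded intervals."""
    gaps = []
    cursor = start
    for seg_start, seg_end in sorted(recorded):
        if seg_start > cursor:
            gaps.append((cursor, min(seg_start, end)))
        cursor = max(cursor, seg_end)
        if cursor >= end:
            break
    if cursor < end:
        gaps.append((cursor, end))
    return gaps


def fill_from_local(gaps: List[Interval], local: List[Interval]) -> List[Interval]:
    """Return the portions of each gap that the local (MicroSD) copy can supply."""
    fills = []
    for gap_start, gap_end in gaps:
        for loc_start, loc_end in sorted(local):
            lo, hi = max(gap_start, loc_start), min(gap_end, loc_end)
            if lo < hi:
                fills.append((lo, hi))
    return fills


# Hypothetical outage: the server missed seconds 100-250, the camera kept recording.
server = [(0, 100), (250, 400)]
camera = [(90, 260)]
missing = find_gaps(server, 0, 400)
print(missing, fill_from_local(missing, camera))
```

In this example the server recording has one gap, and the local copy covers it completely, which is the situation Auto-Merge is meant to repair once connectivity returns.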

Long Term Storage

The video storage space available both onboard the camera and on the VSMS is limited. Based on the configured video quality settings, recording time will typically be on the order of hours to days. Depending on the security and data retention policies, it may be preferable to retain video for longer periods of time.

The Cisco VSM solution includes Long Term Storage (LTS) functionality, which consists of a centralized high-capacity server or servers dedicated to retaining video recordings for long periods of time. Using the camera templates, video archiving can be configured to retain all video, or only video containing motion events. Also, the camera template can be set to archive selected video at a certain time each day, such as 11:00 PM. Figure 29 shows that the 7070 camera template is selected, and within the Advanced Storage pop-up, an LTS policy is set to archive all video for a period of 10 days, and for the LTS upload to occur daily at 15:00.

Figure 29 VSOM Camera Template - Long Term Storage
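A rough calculation shows why local recording time is measured in hours to days and how much capacity a 10-day LTS policy implies. The sketch below assumes a constant stream bitrate and decimal gigabytes; the 64 GB card size and the 4 Mbps bitrate are illustrative assumptions, not values taken from the tested configuration.

```python
def retention_hours(capacity_gb: float, bitrate_mbps: float) -> float:
    """Approximate hours of video that fit in capacity_gb at a constant bitrate."""
    capacity_bits = capacity_gb * 1e9 * 8          # decimal gigabytes to bits
    seconds = capacity_bits / (bitrate_mbps * 1e6)
    return seconds / 3600


def capacity_needed_gb(bitrate_mbps: float, days: float) -> float:
    """Approximate storage in GB needed to keep one stream for the given days."""
    bits = bitrate_mbps * 1e6 * 86400 * days       # 86400 seconds per day
    return bits / 8 / 1e9


# Assumed values: one 4 Mbps stream, a 64 GB MicroSD card, 10-day LTS retention.
print(round(retention_hours(64, 4), 1), "hours on the card")
print(round(capacity_needed_gb(4, 10)), "GB per camera in LTS")
```

Under these assumptions a single card holds roughly a day and a half of video, while retaining all video from one camera for 10 days needs a few hundred gigabytes, so the LTS server must be sized for the total number of cameras archived.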

Integration with Davra RuBAN

The latest release of Davra RuBAN greatly simplifies integration with Cisco VSM to provide single-pane management of devices and video monitoring.

Before implementing video with RuBAN, make certain that the network device (for example, mobile gateway or switch) has been provisioned successfully. The camera is associated with this device so that the map view can show video from all cameras in a specific location (per train, for example).

To begin integrating VSM into RuBAN, log in to RuBAN and go to the Administration page. From there, click VSOM Integration, as shown in Figure 30.

Figure 30 RuBAN Administration Page

On the Camera Management page, click Setup VSOM Server.

Figure 31 RuBAN Camera Management

In the dialog window that pops up, enter the IP address and login credentials for VSOM.

Figure 32 RuBAN Setup VSOM Server

After successfully adding the VSOM server, the Camera Management page will list all of the cameras that it discovered from VSOM. The next step is to associate the camera with a network device. In the IoT Gateway column, select the appropriate device—in this example, an IE2000 switch is selected. Also, add a Tag to the camera that will be used later when adding the video feed to the switch's dashboard. In Figure 33, the tag camera3 is chosen, but it could be any text.

Figure 33 RuBAN Camera List, Add Tag

Next, create a new Dashboard that can be used to display an enlarged view of the camera's video feed. Begin by clicking New Dashboard at the top of the RuBAN web interface. From there, use the highly customizable dashboard creation wizard to make the desired layout with one or more video streams. In Figure 34, a layout with a single pane is shown, and a Camera component (under the Security category) is added to the pane.

Figure 34 RuBAN Add New Dashboard for Video

Clicking the gear icon at the top right of the CAMERA pane opens a pop-up window where the camera tag we defined earlier is selected. This determines which camera's video is shown in this dashboard pane.

Figure 35 RuBAN Configure Camera for Video Dashboard

After dashboard configuration has been completed, save it by clicking the three parallel bars and then clicking Save, as shown in Figure 36. This is the most basic video dashboard possible; extensive customization is available to get the exact content displayed with the desired look-and-feel.

Figure 36 RuBAN Save New Dashboard

In addition to the dashboard view shown above, RuBAN's map view can also display streaming video feeds from all cameras associated with a network device. To add video from a camera to a gateway or switch in the map view, edit the IoT Profile for the device. In the example in Figure 37, a profile is created that includes a single camera, identified by the camera tag camera3 that was defined earlier.

After the profile is saved and applied to the IoT device, the RuBAN Internet of Everything (IoE) Portal will display the pop-up dashboard including the video from the specified camera in a graphical map view, as shown in Figure 38. Clicking Cisco 3050 will cause the video pane to display.

Figure 38 RuBAN Map View with Video Pop Out

Wi-Fi Access Implementation

This section includes the following major topic: Web Passthrough.

In this implementation, Wi-Fi is used to enable connectivity for the passengers on the train in addition to law enforcement personnel and the rail employees. This guide is not meant to be an exhaustive resource for a wireless implementation, but rather one possible implementation of a train-based wireless solution. The complete configuration guide for the Wireless Controller Software used in this release—Cisco Wireless Controller Configuration Guide, Release 8.2—can be found at the following URL:

http://www.cisco.com/c/en/us/td/docs/wireless/controller/8-2/config-guide/b_cg82.html

1. The Wireless LAN Controller (WLC) must have the management interface configured to communicate with the access points on the train. This is the address the access points use to build a Control and Provisioning of Wireless Access Points (CAPWAP) tunnel for communication and management. See Figure 39.

2. SSIDs must be configured under the WLAN section for each type of wireless client that will need access. The passengers will use Web-Passthrough, while the employees and law enforcement personnel should use a secure method such as WPA2. See Figure 40.

3. In this implementation, the clients used FlexConnect Local Switching, which lets them access local resources on the train network without their traffic being tunneled back to the WLC. This must be enabled per WLAN. See Figure 41.

Figure 41 WLC Enable FlexConnect

4. The switchport connected to the access point must also be configured to support FlexConnect, which means enabling a trunk with VLANs for management and the wireless clients. In this example, VLAN 21 is used for the access point management traffic, VLAN 20 is for passengers, VLAN 30 is for employees, and VLAN 40 is for law enforcement. The management traffic must be configured to use the native VLAN.

5. Klas: The router acts as the default gateway for all the devices and should therefore have a subinterface for each wireless client type. This configuration is explained in the MAG Configuration section. Note the presence of the ip helper-address command, which forwards DHCP requests from the access point and clients to the DHCP server in the data center. The following configuration example is from the Klas router for the access point management traffic.

Once the switches and router are configured properly, the access point requests an address through DHCP and then needs the address of the WLC. Configuring option 43 on the DHCP server supplies the WLC address so that the access point can contact the WLC. After the access point first contacts the WLC, it downloads the correct image and reboots. When it has rebooted and reconnected to the WLC, it can be configured for FlexConnect mode.
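For most Cisco lightweight access points, the option 43 value is a type-length-value string: type f1, a length of four bytes per WLC address, and then the WLC management addresses in hexadecimal. The following sketch builds that string; the function name and the WLC addresses are hypothetical and should be replaced with the management address configured in step 1.

```python
import ipaddress
from typing import List


def cisco_option43_hex(wlc_addresses: List[str]) -> str:
    """Build the option 43 hex string for Cisco lightweight access points.

    Layout (type-length-value): type 0xf1, length = 4 bytes per WLC
    address, value = the WLC management addresses in order.
    """
    packed = b"".join(ipaddress.IPv4Address(a).packed for a in wlc_addresses)
    return "f1" + format(len(packed), "02x") + packed.hex()


# Hypothetical WLC management addresses, for illustration only.
print(cisco_option43_hex(["192.168.10.5"]))
print(cisco_option43_hex(["192.168.10.5", "192.168.10.20"]))
```

If the data center DHCP server happens to be a Cisco IOS device, the resulting string is the value that follows option 43 hex in the DHCP pool; other DHCP servers accept the same bytes through their own vendor-specific option settings.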

Note: The MAG must have local-routing-mag configured under the pmipv6-mag section to enable the FlexConnect clients to access local resources without also traversing the PMIPv6 tunnel.

Lilee: The ME-100 should be the default gateway for all the clients to keep local traffic within the train network. The interface configuration can be found in the ME-100 subsection of Gateway Mobility. Because the access points and clients use DHCP for address assignment, it is necessary to configure DHCP relay on the ME-100.

6. In the WLC under the Wireless tab, click the access point that needs to be configured for FlexConnect.

Figure 42 WLC AP Configuration

7. Under the General tab, the AP Mode must be changed from local to FlexConnect.

Figure 43 WLC AP Details

8. Afterward, the FlexConnect tab must be used to configure the native VLAN and all the VLAN mappings. First configure the Native VLAN ID. In this case, it is 21.

Figure 44 FlexConnect Native VLAN

9. Next, the VLAN mappings must be created for each SSID. This isolates the traffic of each SSID from the others.

Figure 45 FlexConnect VLAN Mappings
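The VLAN plan used in steps 4, 8, and 9 can be captured as data and checked before it is entered on the switch and the WLC. The sketch below is a minimal illustration: the VLAN IDs come from the text, while the SSID names, the interface name, and the helper functions are hypothetical. The rendered lines use standard Cisco IOS trunk commands, but the exact set required may differ by switch platform.

```python
NATIVE_VLAN = 21  # access point management traffic (from the text)

# SSID names are hypothetical; the VLAN IDs come from the text.
SSID_TO_VLAN = {
    "passenger": 20,
    "employee": 30,
    "law-enforcement": 40,
}


def validate_plan(ssid_to_vlan: dict) -> None:
    """Raise ValueError if two SSIDs share a VLAN (this design isolates each SSID)."""
    vlans = list(ssid_to_vlan.values())
    if len(set(vlans)) != len(vlans):
        raise ValueError("each SSID needs its own VLAN to keep traffic isolated")


def trunk_config(interface: str, native_vlan: int, ssid_to_vlan: dict) -> list:
    """Render Cisco IOS trunk commands for the switchport facing the access point."""
    validate_plan(ssid_to_vlan)
    allowed = sorted({native_vlan, *ssid_to_vlan.values()})
    return [
        f"interface {interface}",
        " switchport mode trunk",
        f" switchport trunk native vlan {native_vlan}",
        " switchport trunk allowed vlan " + ",".join(str(v) for v in allowed),
    ]


# "GigabitEthernet1/1" is a placeholder interface name.
print("\n".join(trunk_config("GigabitEthernet1/1", NATIVE_VLAN, SSID_TO_VLAN)))
```

Keeping the mapping in one place makes it easier to confirm that the native VLAN configured on the trunk matches the Native VLAN ID entered on the FlexConnect tab, and that every SSID maps to a VLAN the trunk actually carries.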

Web Passthrough

The web passthrough feature is one way to allow passengers to connect to the network without supplying a username and password. When a passenger connects to the network and tries to navigate to a URL, the browser is redirected to a splash page where the passenger must accept the terms and conditions. After accepting the conditions, the passenger has normal access to the network resources. The steps to configure this are described below.

The passenger WLAN must be configured with the correct security features. No Layer 2 security is configured; only Layer 3 security is used.

Figure 46 Passenger WLAN Layer 2 Security

Figure 47 Passenger WLAN Layer 3 Security

When a client connects and tries to navigate to a web page, the client is redirected to the splash page.