|

Chassis

|

One rack-unit (1RU) chassis

|

|

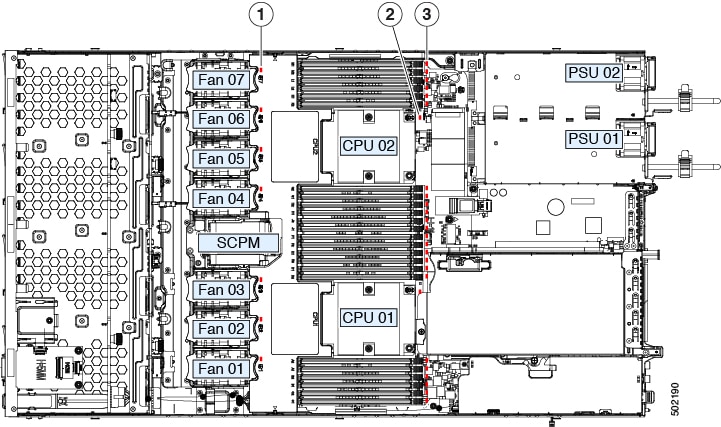

Central Processor

|

Up to two sockets for AMD EPYC processors based on the Zen 2 (Rome) or Zen 3 (Milan) architecture

|

|

Memory

|

32 DDR4 DIMMs, up to 3200 MHz with one DIMM per channel (1DPC) or 2933 MHz with two DIMMs per channel (2DPC); RDIMMs and LRDIMMs are supported
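The speed trade-off between 1DPC and 2DPC population can be made concrete with a rough peak-bandwidth calculation. This is a minimal sketch, not a Cisco tool, and it rests on stated assumptions: 8 DDR4 channels per socket (as on AMD EPYC Rome/Milan), a 64-bit data bus per channel with ECC bits ignored, and the table's "MHz" figures read as MT/s data rates. Under those assumptions, 32 DIMMs corresponds to 2 sockets x 8 channels x 2 DPC.

```python
# Hypothetical sizing sketch, not a Cisco tool. Assumptions: 8 DDR4
# channels per socket (AMD EPYC Rome/Milan), a 64-bit (8-byte) data bus
# per channel with ECC bits ignored, and the table's "MHz" figures read
# as MT/s data rates.

CHANNELS_PER_SOCKET = 8
BYTES_PER_TRANSFER = 8  # 64-bit data bus per channel

# Maximum data rate (MT/s) by DIMMs per channel (DPC), from the table above.
SPEED_BY_DPC = {1: 3200, 2: 2933}


def max_speed_mts(dimms_per_channel: int) -> int:
    """Maximum supported data rate for a given DIMMs-per-channel population."""
    if dimms_per_channel not in SPEED_BY_DPC:
        raise ValueError(f"unsupported population: {dimms_per_channel} DPC")
    return SPEED_BY_DPC[dimms_per_channel]


def peak_bandwidth_gbs(sockets: int, dimms_per_channel: int) -> float:
    """Theoretical peak bandwidth in GB/s (10**9 bytes/s) across all channels."""
    bytes_per_second = max_speed_mts(dimms_per_channel) * 1e6 * BYTES_PER_TRANSFER
    return sockets * CHANNELS_PER_SOCKET * bytes_per_second / 1e9


for dpc in (1, 2):
    dimms = 2 * CHANNELS_PER_SOCKET * dpc
    print(f"{dimms} DIMMs at {dpc} DPC: {peak_bandwidth_gbs(2, dpc):.1f} GB/s peak")
```

Under these assumptions the fully populated 32-DIMM configuration (2DPC) gives up roughly 8% of theoretical peak bandwidth in exchange for twice the DIMM count; sustained bandwidth in practice is lower than either figure.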

|

|

Multi-bit error protection

|

Multi-bit error protection is supported

|

|

Video

|

The Cisco Integrated Management Controller (CIMC) provides video using the Matrox G200e video/graphics controller:

-

Integrated 2D graphics core with hardware acceleration

-

Embedded DDR memory interface supports up to 512 MB of addressable memory (8 MB is allocated by default to video memory)

-

Supports display resolutions up to 1920 × 1200 at 16 bpp and 60 Hz

-

High-speed integrated 24-bit RAMDAC

-

Single-lane PCI Express host interface running at Gen 1 speed
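As a quick sanity check on the figures above, one uncompressed frame at the maximum resolution fits comfortably in the default video memory allocation. This sketch assumes a single, unpadded frame buffer and reads "8 MB" as 8 × 2^20 bytes; real drivers may add line-pitch padding or extra buffers.

```python
# Framebuffer check for the Matrox G200e figures above. Assumes one
# unpadded frame buffer and 8 MB = 8 * 2**20 bytes.

def framebuffer_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Size of one uncompressed frame in bytes."""
    return width * height * bits_per_pixel // 8


DEFAULT_VIDEO_MEMORY = 8 * 2**20  # default allocation, in bytes

frame = framebuffer_bytes(1920, 1200, 16)
print(f"{frame} bytes ({frame / 2**20:.2f} MiB) per frame")
print("fits in default allocation:", frame <= DEFAULT_VIDEO_MEMORY)
```

At 16 bpp a 1920 × 1200 frame needs 4,608,000 bytes (about 4.4 MiB), roughly half of the default 8 MB.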

|

|

Baseboard management

|

Baseboard management controller (BMC) running Cisco Integrated Management Controller (Cisco IMC) firmware.

Depending on your Cisco IMC settings, Cisco IMC can be accessed through the 1-Gb dedicated management port, the 1-Gb/10-Gb Ethernet LAN ports, or a Cisco virtual interface card (VIC).

|

|

Network and management I/O

|

Rear panel:

-

One 1-Gb Ethernet dedicated management port (RJ-45 connector)

-

One RS-232 serial port (RJ-45 connector)

-

One VGA video connector port (DB-15 connector)

-

Two USB 3.0 ports

-

One flexible modular LAN on motherboard (mLOM)/OCP 3.0 slot that can accommodate various interface cards

Front panel:

-

One KVM console connector (supplies two USB 2.0 connectors, one VGA DB15 video connector, and one RS-232 serial port with an RJ-45 connector)

|

|

Modular LAN on Motherboard (mLOM)/OCP 3.0 slot

|

The dedicated mLOM/OCP 3.0 slot on the motherboard can flexibly accommodate the following cards:

|

|

WoL

|

The two 1-Gb/10-Gb BASE-T Ethernet LAN ports support the wake-on-LAN (WoL) standard.
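Wake-on-LAN works by sending the target port a "magic packet": 6 bytes of 0xFF followed by the port's MAC address repeated 16 times, 102 bytes in total. The sketch below builds and broadcasts such a packet from another host. It assumes WoL is enabled for the target port, and sending over UDP broadcast to port 9 is a common convention rather than something this table specifies.

```python
import re
import socket


def magic_packet(mac: str) -> bytes:
    """Build a WoL magic packet: 6 x 0xFF, then the 6-byte MAC repeated 16 times."""
    digits = re.sub(r"[^0-9A-Fa-f]", "", mac)  # drop ':' or '-' separators
    if len(digits) != 12:
        raise ValueError(f"invalid MAC address: {mac!r}")
    return b"\xff" * 6 + bytes.fromhex(digits) * 16


def send_magic_packet(mac: str, broadcast: str = "255.255.255.255", port: int = 9) -> None:
    """Broadcast the magic packet over UDP (port 9 is a common, assumed choice)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        sock.sendto(magic_packet(mac), (broadcast, port))
```

Usage, with a placeholder MAC address: `send_magic_packet("00:11:22:33:44:55")`. The packet must reach the server's LAN segment, so a plain broadcast generally does not cross routers.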

|

|

Power

|

Up to two of the following hot-swappable power supplies:

-

770 W (AC)

-

1050 W (AC)

-

1050 W (DC)

-

1600 W (AC)

-

2300 W (AC)

One power supply is mandatory; one more can be added for 1 + 1 redundancy.
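In a 1 + 1 configuration, the load must still be carried if either supply fails, so the redundant power budget is one supply's rating, not the sum of both. The sketch below encodes that rule. The dictionary keys are illustrative labels, not Cisco product IDs, and it assumes identical supplies and treats each rating as usable output, ignoring input-voltage derating and efficiency losses.

```python
# Hypothetical sizing sketch for the 1 + 1 power configuration. Keys are
# illustrative labels, not Cisco product IDs. Assumes identical PSUs and
# ignores input-voltage derating and efficiency losses.

PSU_RATINGS_W = {
    "770W-AC": 770,
    "1050W-AC": 1050,
    "1050W-DC": 1050,
    "1600W-AC": 1600,
    "2300W-AC": 2300,
}


def survives_psu_failure(psu: str, installed: int, load_w: float) -> bool:
    """True if the load can still be carried after any one PSU fails."""
    if installed not in (1, 2):
        raise ValueError("the server accepts one or two power supplies")
    # In 1 + 1 mode one supply is effectively a spare, so only
    # (installed - 1) supplies count toward the redundant budget.
    return (installed - 1) * PSU_RATINGS_W[psu] >= load_w


print(survives_psu_failure("1050W-AC", 2, 900))   # True: one PSU carries 900 W
print(survives_psu_failure("1050W-AC", 2, 1200))  # False: exceeds one PSU
print(survives_psu_failure("1050W-AC", 1, 300))   # False: no spare installed
```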

|

|

ACPI

|

The advanced configuration and power interface (ACPI) 4.0 standard is supported.

|

|

Front Panel

|

The front-panel controller provides status indicators and control buttons.

|

|

Cooling

|

Eight hot-swappable fan modules for front-to-rear cooling.

|

|

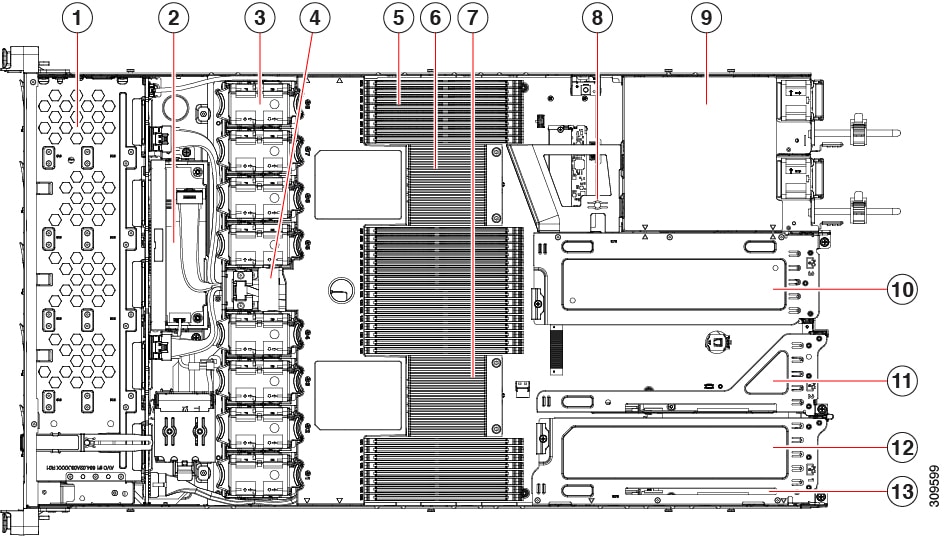

PCIe I/O

|

Horizontal PCIe expansion slots are provided by PCIe riser assemblies. The server supports one of the following configurations:

-

One half-height riser card in PCIe Riser 1

-

Three half-height riser cards in PCIe Risers 1, 2, and 3

-

Two full-height riser cards

|

|

InfiniBand

|

The PCIe bus slots in this server support the InfiniBand architecture.

|

|

Expansion Slots

|

Three half-height riser slots

-

Riser 1 (controlled by CPU 1): One x16 PCIe Gen4 slot (Cisco VIC), half-height, 3/4 length

-

Riser 2 (controlled by CPU 1): One x16 PCIe Gen4 slot, electrical x8, half-height, 3/4 length

-

Riser 3 (controlled by CPU 1): One x16 PCIe Gen4 slot (Cisco VIC), half-height, 3/4 length

Two full-height riser slots

-

Riser 1 (controlled by CPU 1): One x16 PCIe Gen4 slot (Cisco VIC), full-height, 3/4 length

-

Riser 3 (controlled by CPU 1): One x16 PCIe Gen4 slot (Cisco VIC), full-height, 3/4 length
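The lane counts above translate into approximate per-direction bandwidth once the per-lane data rate and line encoding of each PCIe generation are applied (8b/10b for Gen 1 and 2, 128b/130b for Gen 3 and 4). This sketch accounts only for line encoding and ignores packet and protocol overhead, so real throughput is lower. It also covers the Gen 1 x1 link of the integrated video controller for comparison.

```python
# Approximate one-direction PCIe bandwidth after line encoding only;
# transaction/data-link protocol overhead is ignored.

# (GT/s per lane, encoding efficiency) per PCIe generation
PCIE_GENERATIONS = {
    1: (2.5, 8 / 10),
    2: (5.0, 8 / 10),
    3: (8.0, 128 / 130),
    4: (16.0, 128 / 130),
}


def pcie_bandwidth_gbs(gen: int, lanes: int) -> float:
    """Approximate one-direction bandwidth in GB/s for a link."""
    rate_gt, efficiency = PCIE_GENERATIONS[gen]
    # GT/s x efficiency = usable Gb/s per lane; divide by 8 bits per byte.
    return rate_gt * efficiency * lanes / 8


links = [
    ("Riser 1 or 3 (x16)", 4, 16),
    ("Riser 2 (electrical x8)", 4, 8),
    ("G200e video (Gen 1 x1)", 1, 1),
]
for name, gen, lanes in links:
    print(f"{name}: ~{pcie_bandwidth_gbs(gen, lanes):.2f} GB/s per direction")
```

Under these assumptions an x16 Gen4 slot offers roughly 31.5 GB/s per direction, while Riser 2, being electrically x8, offers about half that despite its x16 physical connector.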

|

|

Interfaces

|

Rear panel:

-

One 1000BASE-T RJ-45 management port

-

One RS-232 serial port (RJ45 connector)

-

One DB15 VGA connector

-

Two USB 3.0 port connectors

-

One flexible modular LAN on motherboard (mLOM) slot that can accommodate various interface cards

Front panel:

-

One KVM console connector (supplies two USB 2.0 connectors, one VGA DB15 video connector, and one RS-232 serial port with an RJ-45 connector)

|

|

Storage, front-panel

|

Cisco APIC M4 and L4 (APIC-SERVER-M4 and APIC-SERVER-L4): the server is orderable in two versions, each with a different front-panel/drive-backplane configuration.

|

|

Storage, internal

|

The server has these internal storage options:

-

One USB port on the motherboard.

-

Mini-storage module socket, optionally with either:

-

One micro-SD card socket on PCIe riser 1.

|

|

Integrated Management Processor

|

Baseboard Management Controller (BMC) running Cisco Integrated Management Controller (CIMC) firmware.

Depending on your CIMC settings, the CIMC can be accessed through the 1GE dedicated management port, the 1GE/10GE LOM ports, or a Cisco virtual interface card (VIC).

CIMC manages certain components within the server, such as the Cisco 12G SAS HBA.

|

|

Storage Controllers

|

The Cisco 12G SAS RAID controller or the Cisco 12G SAS HBA plugs into a dedicated slot. Only one of these can be used at a time.

|

|

Modular LAN on Motherboard (mLOM) slot

|

The dedicated mLOM slot on the motherboard can flexibly accommodate the following cards:

|

|

RAID backup

|

The server has a mounting bracket near the cooling fans for the supercap unit that is used with the Cisco modular RAID controller card.

|

|

Integrated video

|

Integrated VGA video.

|

|

Intersight

|

Cisco Intersight provides server management capabilities.

|
