About PTP

The Precision Time Protocol (PTP) is a time synchronization protocol defined in IEEE 1588 for nodes distributed across a network. With PTP, you can synchronize distributed clocks with an accuracy of less than 1 microsecond using Ethernet networks. PTP's accuracy comes from the hardware support for PTP in the Cisco Application Centric Infrastructure (ACI) fabric spine and leaf switches. The hardware support allows the protocol to compensate accurately for message delays and variation across the network.

Note |

This document uses the term "client" for what the IEEE 1588-2008 standard refers to as the "slave." The exception is instances in which the word "slave" is embedded in the Cisco Application Policy Infrastructure Controller (APIC) CLI commands or GUI. |

PTP is a distributed protocol that specifies how real-time PTP clocks in the system synchronize with each other. These clocks are organized into a master-client synchronization hierarchy with the grandmaster clock, which is the clock at the top of the hierarchy, determining the reference time for the entire system. Synchronization is achieved by exchanging PTP timing messages, with the members using the timing information to adjust their clocks to the time of their master in the hierarchy. PTP operates within a logical scope called a PTP domain.

The PTP process consists of two phases: establishing the master-client hierarchy and synchronizing the clocks. Within a PTP domain, each port of an ordinary or boundary clock uses the following process to determine its state:

- Establish the master-client hierarchy using the Best Master Clock Algorithm (BMCA):
  - Examine the contents of all received Announce messages (issued by ports in the master state).
  - Compare the data sets of the foreign master (in the Announce message) and the local clock for priority, clock class, accuracy, and so on.
  - Determine its own state as either master or client.
- Synchronize the clocks:
  - Use messages, such as Sync and Delay_Req, to synchronize the clock between the master and clients.

PTP Clock Types

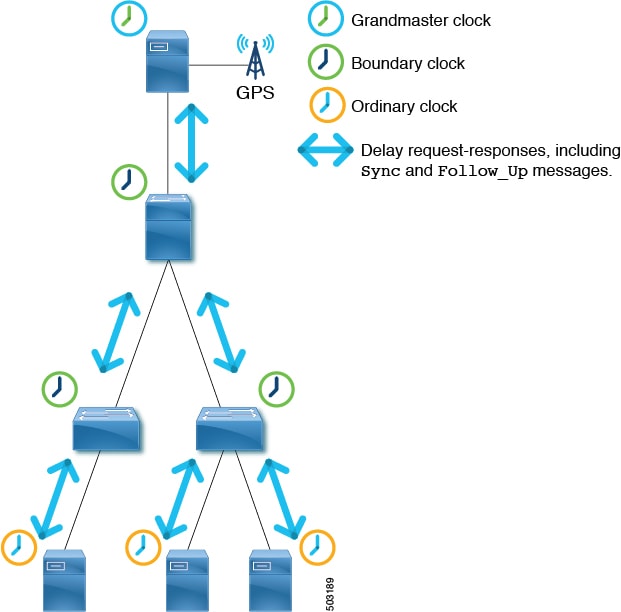

The following illustration shows the hierarchy of the PTP clock types:

PTP has the following clock types:

| Type | Description |
|---|---|
| Grandmaster Clock (GM, GMC) | The source of time for the entire PTP topology. The grandmaster clock is selected by the Best Master Clock Algorithm (BMCA). |
| Boundary Clock (BC) | A device with multiple PTP ports. A PTP boundary clock participates in the BMCA and each port has a status, such as master or client. A boundary clock synchronizes with its parent/master so that the client clocks behind it synchronize to the PTP boundary clock itself. To ensure that, a boundary clock terminates PTP messages and replies by itself instead of forwarding the messages. This eliminates the delay caused by the node forwarding PTP messages from one port to another. |
| Transparent Clock (TC) | A device with multiple PTP ports. A PTP transparent clock does not participate in the BMCA. This clock type only transparently forwards PTP messages between the master clock and client clocks so that they can synchronize directly with one another. A transparent clock appends the residence time to the PTP messages passing by so that the clients can take the forwarding delay within the transparent clock device into account. In the case of a peer-to-peer delay mechanism, a PTP transparent clock terminates PTP Pdelay_xxx messages instead of forwarding the messages. |
| Ordinary Clock (OC) | A device that may serve as a source of time as a grandmaster clock or that may synchronize to another clock (such as a master) in the role of a client (a PTP client). |

PTP Topology

Master and Client Ports

The master and client ports work as follows:

- Each PTP node directly or indirectly synchronizes its clock to the grandmaster clock that has the best source of time, such as GPS (Clock 1 in the figure).
- One grandmaster is selected for the entire PTP topology (domain) based on the Best Master Clock Algorithm (BMCA). The BMCA is calculated on each PTP node individually, but the algorithm makes sure that all nodes in the same domain select the same clock as the grandmaster.
- In each path between PTP nodes, based on the BMCA, there will be one master port and at least one client port. There will be multiple client ports if the path is point-to-multipoint, but each PTP node can have only one client port. Each PTP node uses its client port to synchronize to the master port on the other end. By repeating this, all PTP nodes eventually synchronize to the grandmaster directly or indirectly.
  - From Switch 1's point of view, Clock 1 is the master and the grandmaster.
  - From Switch 2's point of view, Switch 1 is the master and Clock 1 is the grandmaster.
- Each PTP node should have only one client port, behind which exists the grandmaster. The grandmaster can be multiple hops away.
  - The exception is a PTP transparent clock, which does not participate in the BMCA. If Switch 3 were a PTP transparent clock, it would not have a port status, such as master or client. Clock 3, Clock 4, and Switch 1 would establish a master and client relationship directly.

Passive Ports

In addition to the master and client states, the BMCA can place a PTP port in the passive state. A passive port does not generate any PTP messages, with a few exceptions such as PTP Management messages sent in response to Management messages from other nodes.

Example 1

If a PTP node has multiple ports towards the grandmaster, only one of them will be the client port. The other ports toward the grandmaster will be passive ports.

Example 2

If a PTP node detects two master-only clocks (grandmaster candidates), the port toward the candidate selected as the grandmaster becomes a client port and the other becomes a passive port. If the other clock can act as a client, the two form a master and client relationship instead, and the port does not become passive.

Example 3

If a master-only clock (grandmaster candidate) detects another master-only clock that is better than itself, the clock puts itself in a passive state. This happens when two grandmaster candidates are on the same communication path without a PTP boundary clock in between.

Announce Messages

The Announce message is used to calculate the Best Master Clock Algorithm (BMCA) and establish the PTP topology (master-client hierarchy).

The message works as follows:

- PTP master ports send PTP Announce messages to IP address 224.0.1.129 in the case of PTP over IPv4 UDP.
- Each node uses information in the PTP Announce messages to automatically establish the synchronization hierarchy (master/client relations or passive) based on the BMCA.
- Some of the information that PTP Announce messages contain is as follows:
  - Grandmaster priority 1
  - Grandmaster clock quality (class, accuracy, variance)
  - Grandmaster priority 2
  - Grandmaster identity
  - Step Removed
- PTP Announce messages are sent with an interval based on $2^{\text{logAnnounceInterval}}$ seconds.

PTP Topology With Various PTP Node Types

PTP Topology With Only End-to-End Boundary Clocks

In this topology, the boundary clock nodes terminate all multicast PTP messages, except for Management messages.

This ensures that each node processes the Sync messages from the closest parent master clock, which helps the nodes to achieve high accuracy.

PTP Topology With a Boundary Clock and End-to-End Transparent Clocks

In this topology, the boundary clock nodes terminate all multicast PTP messages, except for Management messages.

End-to-end (E2E) transparent clock nodes do not terminate PTP messages. Instead, they add the residence time (the time the packet took to go through the node) to the correction field of each PTP message as it passes by, so that clients can account for it and achieve better accuracy. However, this topology has lower scalability, because the number of PTP messages that one boundary clock node must handle increases.

PTP BMCA

PTP BMCA Parameters

Each clock has the following parameters defined in IEEE 1588-2008 that are used in the Best Master Clock Algorithm (BMCA):

| Parameter | Possible Values | Description |
|---|---|---|
| Priority 1 | 0 to 255 | A user-configurable number. The value is normally 128 or lower for grandmaster-candidate clocks (master-capable devices) and 255 for client-only devices. |
| Clock Quality - Class | 0 to 255 | Represents the status of the clock devices. For example, 6 is for devices with a primary reference time source, such as GPS. 7 is for devices that used to have a primary reference time source. 127 or lower are for master-only clocks (grandmaster candidates). 255 is for client-only devices. |
| Clock Quality - Accuracy | 0 to 255 | The accuracy of the clock. For example, 33 (0x21) means < 100 ns, while 35 (0x23) means < 1 us. |
| Clock Quality - Variance | 0 to 65535 | The precision of the timestamps encapsulated in the PTP messages. |
| Priority 2 | 0 to 255 | Another user-configurable number. This parameter is typically used when the setup has two grandmaster candidates with identical clock quality and one is a standby. |
| Clock Identity | This is an 8-byte value that is typically formed using a MAC address. | This serves as the final tie breaker, and is typically a MAC address. |

These parameters of the grandmaster clock are carried by the PTP Announce messages. Each PTP node compares these values, in the order listed in the table, across all Announce messages that the node receives and the node's own values. For all parameters, the lower number wins. Each PTP node then creates Announce messages using the parameters of the best clock among the ones the node is aware of, and sends the messages from its own master ports to the next client devices.

Note |

For more information about each parameter, see clause 7.6 in IEEE 1588-2008. |

PTP BMCA Examples

In the following example, Clock 1 and Clock 4 are the grandmaster candidates for this PTP domain:

Clock 1 has the following parameter values:

| Parameter | Value |
|---|---|
| Priority 1 | 127 |
| Clock Quality (Class) | 6 |
| Clock Quality (Accuracy) | 0x21 (< 100ns) |
| Clock Quality (Variance) | 15652 |
| Priority 2 | 128 |
| Clock Identity | 0000.1111.1111 |
| Step Removed | * |

Clock 4 has the following parameter values:

| Parameter | Value |
|---|---|
| Priority 1 | 127 |
| Clock Quality (Class) | 6 |
| Clock Quality (Accuracy) | 0x21 (< 100ns) |
| Clock Quality (Variance) | 15652 |
| Priority 2 | 129 |
| Clock Identity | 0000.1111.2222 |
| Step Removed | * |

Both clocks send PTP Announce messages, then each PTP node compares the values in the messages. In this example, because the first four parameters have the same value, Priority 2 decides the active grandmaster, which is Clock 1.

After all switches (1, 2, and 3) have recognized that Clock 1 is the best master clock (that is, Clock 1 is the grandmaster), those switches send PTP Announce messages with the parameters of Clock 1 from their master ports. On Switch 3, the port connected to Clock 4 (a grandmaster candidate) becomes a passive port because the port is receiving PTP Announce messages from a master-only clock (class 6) with parameters that are not better than those of the current grandmaster received on another port.

The Step Removed parameter indicates the number of hops (PTP boundary clock nodes) from the grandmaster. When a PTP boundary clock node sends PTP Announce messages, it increments the Step Removed value by 1 in the message. In this example, Switch 2 receives the PTP Announce message from Switch 1 with the parameters of Clock 1 and a Step Removed value of 1. Clock 2 receives the PTP Announce message with a Step Removed value of 2. This value is used only when all the other parameters in the PTP Announce messages are the same, which happens when the messages are from the same grandmaster candidate clock.
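The comparison in this example can be sketched in Python. This is a simplified illustration, not the full data set comparison algorithm from IEEE 1588-2008: it assumes that each candidate can be ranked by a tuple of the parameters in table order, with Step Removed as the last element, and that the lower value wins at every position. The `AnnounceData` class, the `best_master` function, and the `name` label are hypothetical names used only for this sketch, and the clock identities are written as integers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AnnounceData:
    """Grandmaster parameters carried in an Announce message (simplified)."""
    name: str              # label for this sketch only, not a PTP field
    priority1: int
    clock_class: int
    accuracy: int
    variance: int
    priority2: int
    clock_identity: int
    steps_removed: int = 0

    def rank(self) -> tuple:
        # Compared in table order; the lower number wins at each position.
        return (self.priority1, self.clock_class, self.accuracy,
                self.variance, self.priority2, self.clock_identity,
                self.steps_removed)


def best_master(candidates):
    """Return the candidate with the lowest rank tuple."""
    return min(candidates, key=AnnounceData.rank)


# Values from the Clock 1 and Clock 4 tables in this example.
clock1 = AnnounceData("Clock 1", 127, 6, 0x21, 15652, 128, 0x000011111111)
clock4 = AnnounceData("Clock 4", 127, 6, 0x21, 15652, 129, 0x000011112222)

winner = best_master([clock1, clock4])
print(winner.name)  # Clock 1
```

Because the first four values are identical, the tuple comparison falls through to Priority 2, where 128 beats 129. Two Announce messages that describe the same grandmaster differ only in the last element, so the one with the lower Step Removed value ranks first.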

PTP BMCA Failover

If the current active grandmaster (Clock 1) becomes unavailable, each PTP port recalculates the Best Master Clock Algorithm (BMCA).

The availability is checked using the Announce messages. Each PTP port declares an Announce timeout after Announce messages have been missing for Announce Receipt Timeout consecutive intervals, in other words, for Announce Receipt Timeout x $2^{\text{logAnnounceInterval}}$ seconds. This timeout period should be uniform throughout a PTP domain as mentioned in Clause 7.7.3 in IEEE 1588-2008. When the timeout is detected, each switch starts recalculating the BMCA on all PTP ports by sending Announce messages with the new best master clock data. The recalculation can result in a switch initially determining that the switch itself is the best master clock, because most of the switches are aware of only the previous grandmaster.

When the client port connected toward the grandmaster goes down, the node (or the ports) does not need to wait for the announce timeout and can immediately start recalculating the BMCA by sending Announce messages with the new best master clock data.

The convergence can take several seconds or more depending on the size of the topology, because each PTP port recalculates the BMCA from the beginning individually to find the new best clock. Prior to the failure of the active grandmaster, only Switch 3 knows about Clock 4, which should take over the active grandmaster role.

Also, when the port status changes to master from non-master, the port changes to the PRE_MASTER status first. The port takes Qualification Timeout seconds to become the actual master, which is typically equal to:

(Step Removed + 1) x the announce interval

This means that if the other grandmaster candidate is connected to the same switch as (or close to) the active grandmaster, the port status changes will be minimal and the convergence time will be shorter. See Clause 9.2 in IEEE 1588-2008 for details.
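As a rough worked example, the two timers described in this section can be computed as follows. The function names are hypothetical, and the input values (an Announce Receipt Timeout of 3, a logAnnounceInterval of 1, and a Step Removed value of 1) are illustrative choices rather than values taken from a particular device.

```python
def announce_interval_seconds(log_announce_interval: int) -> float:
    """Announce interval in seconds: 2 raised to logAnnounceInterval."""
    return 2.0 ** log_announce_interval


def announce_timeout_seconds(announce_receipt_timeout: int,
                             log_announce_interval: int) -> float:
    """Time without Announce messages before a port declares a timeout."""
    return announce_receipt_timeout * announce_interval_seconds(log_announce_interval)


def qualification_timeout_seconds(steps_removed: int,
                                  log_announce_interval: int) -> float:
    """Typical time a port spends in PRE_MASTER before becoming master."""
    return (steps_removed + 1) * announce_interval_seconds(log_announce_interval)


print(announce_timeout_seconds(3, 1))       # 6.0
print(qualification_timeout_seconds(1, 1))  # 4.0
```

With these inputs, a port waits 6 seconds before declaring the grandmaster lost, and a port one hop from the new grandmaster spends about 4 more seconds in PRE_MASTER, which is in line with convergence taking several seconds or more.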

PTP Clock Synchronization

The PTP master ports send PTP Sync and Follow_Up messages to IP address 224.0.1.129 in the case of PTP over IPv4 UDP.

In One-Step mode, the Sync messages carry the timestamp of when the message was sent out, so Follow_Up messages are not required. In Two-Step mode, Sync messages are sent out without a timestamp, and a Follow_Up message is sent immediately after each Sync message with the timestamp of when the Sync message was sent out. Client nodes use the timestamp in the Sync or Follow_Up messages, along with an offset calculated from meanPathDelay, to synchronize their clocks. Sync messages are sent with the interval based on $2^{\text{logSyncInterval}}$ seconds.

PTP and meanPathDelay

meanPathDelay is the mean time that PTP packets take to reach from one end of the PTP path to the other end. In the case of the E2E delay mechanism, this is the time taken to travel between a PTP master port and a client port. PTP needs to calculate meanPathDelay (Δt in the following illustration) to keep the synchronized time on each of the distributed devices accurate.

There are two mechanisms to calculate meanPathDelay:

- Delay Request-Response (E2E): End-to-end transparent clock nodes support only this mechanism.
- Peer Delay Request-Response (P2P): Peer-to-peer transparent clock nodes support only this mechanism.

Boundary clock nodes can support both mechanisms by definition. In IEEE 1588-2008, the delay mechanisms are called "Delay" or "Peer Delay." However, the Delay Request-Response mechanism is more commonly referred to as the "E2E delay mechanism," and the Peer Delay mechanism is more commonly referred to as the "P2P delay mechanism."

meanPathDelay Measurement

Delay Request-Response

The delay request-response (E2E) mechanism is initiated by a client port and the meanPathDelay is measured on the client node side. The mechanism uses Sync and Follow_Up messages, which are sent from a master port regardless of the E2E delay mechanism. The meanPathDelay value is calculated based on 4 timestamps from 4 messages.

t-ms (t2 – t1) is the delay for the master-to-client direction. t-sm (t4 – t3) is the delay for the client-to-master direction. meanPathDelay is calculated as follows:

(t-ms + t-sm) / 2

Sync is sent with the interval based on $2^{\text{logSyncInterval}}$ seconds. Delay_Req is sent with the interval based on $2^{\text{logMinDelayReqInterval}}$ seconds.

Note |

This example focuses on Two-Step mode. See Clause 9.5 from IEEE 1588-2008 for details on the transmission timing. |
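The delay request-response calculation can be sketched in Python as follows. The sketch assumes the usual timestamp assignment: t1 is when the master sends Sync, t2 is when the client receives it, t3 is when the client sends Delay_Req, and t4 is when the master receives it. The offset calculation (t2 – t1 – meanPathDelay) is not stated in this section and is added here for illustration. It holds only when the path delay is the same in both directions, and correction fields are ignored. The function names and the timestamp values are hypothetical.

```python
def mean_path_delay(t1: float, t2: float, t3: float, t4: float) -> float:
    """meanPathDelay from the four E2E timestamps."""
    t_ms = t2 - t1  # master-to-client delay (includes the clock offset)
    t_sm = t4 - t3  # client-to-master delay (includes the negated offset)
    return (t_ms + t_sm) / 2


def offset_from_master(t1: float, t2: float, delay: float) -> float:
    """How far the client clock is ahead of the master clock."""
    return (t2 - t1) - delay


# Illustrative timestamps in nanoseconds: the true one-way delay is 10 ns
# and the client clock runs 40 ns ahead of the master clock.
t1, t2, t3, t4 = 1000, 1050, 1060, 1030

delay = mean_path_delay(t1, t2, t3, t4)
offset = offset_from_master(t1, t2, delay)
print(delay, offset)  # 10.0 40.0
```

The clock offset appears with opposite signs in t-ms and t-sm, so it cancels in the average and only the path delay remains. The client would then adjust its clock by the computed offset.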

Peer Delay Request-Response

The peer delay request-response (P2P) mechanism is initiated by both the master and client ports, and the meanPathDelay is measured on the requester node side. meanPathDelay is calculated based on 4 timestamps from 3 messages dedicated to this delay mechanism.

In the two-step mode, t2 and t3 are delivered to the requester in one of the following ways:

- As (t3-t2) using Pdelay_Resp_Follow_Up
- As t2 using Pdelay_Resp and as t3 using Pdelay_Resp_Follow_Up

meanPathDelay is calculated as follows:

((t4 – t1) – (t3 – t2)) / 2

Pdelay_Req is sent with the interval based on $2^{\text{logMinPDelayReqInterval}}$ seconds.

Note |

Cisco Application Centric Infrastructure (ACI) switches do not support the peer delay request-response (P2P) mechanism. See clause 9.5 from IEEE 1588-2008 for details on the transmission timing. |
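For comparison with the E2E mechanism, the peer delay calculation can be sketched as follows. The sketch assumes that t1 is when the requester sends Pdelay_Req, t2 is when the responder receives it, t3 is when the responder sends Pdelay_Resp, and t4 is when the requester receives it. The function name and the timestamp values are hypothetical.

```python
def peer_mean_path_delay(t1: float, t2: float, t3: float, t4: float) -> float:
    """meanPathDelay for the P2P mechanism, measured by the requester."""
    round_trip = t4 - t1   # measured on the requester's clock
    turnaround = t3 - t2   # measured on the responder's clock
    return (round_trip - turnaround) / 2


# Illustrative timestamps in nanoseconds: the link delay is 10 ns each way,
# the responder holds the request for 20 ns, and the responder's clock is
# 500 ns ahead of the requester's clock.
print(peer_mean_path_delay(0, 510, 530, 40))  # 10.0
```

Each difference uses two timestamps taken on the same clock, so the offset between the two nodes does not affect the result. This is also why the responder can deliver either t2 and t3 separately or only the difference (t3 – t2).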

PTP Multicast, Unicast, and Mixed Mode

The following sections describe the different PTP modes using the delay request-response (E2E delay) mechanism.

Multicast Mode

All PTP messages are multicast. Transparent clock or PTP-unaware nodes between the master and clients result in inefficient flooding of the Delay messages. However, the flooding is efficient for Announce, Sync, and Follow_Up messages because these messages should be sent toward all client nodes.

Unicast Mode

All PTP messages are unicast, which increases the number of messages that the master must generate. Hence, the scale, such as the number of client nodes behind one master port, is impacted.

Mixed Mode

Only Delay messages are unicast, which resolves the problems that exist in multicast mode and unicast mode.

PTP Transport Protocol

The following illustration provides information about the major transport protocols that PTP supports:

Note |

Cisco Application Centric Infrastructure (ACI) switches only support IPv4 as a PTP transport protocol. |

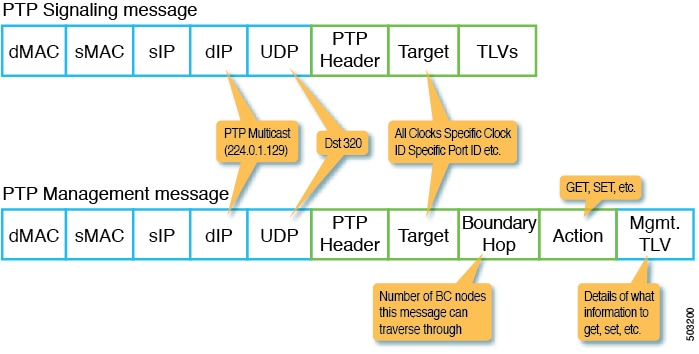

PTP Signaling and Management Messages

The following illustration shows the Signaling and Management message parameters in the header packet for PTP over IPv4 UDP:

A Management message is used to configure or collect PTP parameters, such as the current clock and offset from its master. With the message, a single PTP management node can manage and monitor PTP-related parameters without relying on an out-of-band monitoring system.

A Signaling message also provides various type, length, and value (TLV) elements to perform additional operations. Other TLVs are used by being appended to other messages. For example, the PATH_TRACE TLV as defined in clause 16.2 of IEEE 1588-2008 is appended to Announce messages to trace the path of each boundary clock node in the PTP topology.

Note |

Cisco Application Centric Infrastructure (ACI) switches do not support management, signal, or other optional TLVs. |

PTP Management Messages

PTP Management messages are used to transport management types, lengths, and values (TLVs) toward multiple PTP nodes at once or to a specific node.

The targets are specified with the targetPortIdentity (clockID and portNumber) parameter. PTP Management messages have an actionField that specifies actions such as GET, SET, and COMMAND to inform the targets of what to do with the delivered management TLV.

PTP Management messages are forwarded by the PTP boundary clock, and only to the Master, Client, Uncalibrated, or Pre_Master ports. A message is forwarded to those ports only when the message is received on a port in the Master, Client, Uncalibrated, or Pre_Master state. BoundaryHops in the message is decremented by 1 when the message is forwarded.

The SMPTE ST2059-2 profile defines that the grandmaster should send PTP Management messages using the action COMMAND with the synchronization metadata TLV that is required for the synchronization of audio/video signals.

Note |

Cisco Application Centric Infrastructure (ACI) switches do not process |

PTP Profiles

PTP has a concept called a PTP profile. A PTP profile defines various parameters that are optimized for different use cases of PTP. Those parameters include, but are not limited to, the appropriate range of PTP message intervals and the PTP transport protocols. PTP profiles are defined by many organizations and standards in different industries. For example:

- IEEE 1588-2008: This standard defines a default PTP profile called the Default Profile.
- AES67-2015: This standard defines a PTP profile for audio requirements. This profile is also called the Media Profile.
- SMPTE ST2059-2: This standard defines a PTP profile for video requirements.

The following table shows some of the parameters defined in each standard for each PTP profile:

| Profiles | logAnnounceInterval | logSyncInterval | logMinDelayReqInterval | announceReceiptTimeout | Domain Number | Mode | Transport Protocol |
|---|---|---|---|---|---|---|---|
| Default Profile | 0 to 4 (1) [= 1 to 16 sec] | -1 to +1 (0) [= 0.5 to 2 sec] | 0 to 5 (0) [= 1 to 32 sec] | 2 to 10 announce intervals (3) | 0 to 255 (0) | Multicast / Unicast | Any/IPv4 |
| AES67-2015 (Media Profile) | 0 to 4 (1) [= 1 to 16 sec] | -4 to +1 (-3) [= 1/16 to 2 sec] | -3 to +5 (0) [= 1/8 to 32 sec] Or logSyncInterval to logSyncInterval + 5 seconds | 2 to 10 announce intervals (3) | 0 to 255 (0) | Multicast / Unicast | UDP/IPv4 |
| SMPTE ST2059-2-2015 | -3 to +1 (-2) [= 1/8 to 2 sec] | -7 to -1 (-3) [= 1/128 to 1/2 sec] | logSyncInterval to logSyncInterval + 5 seconds | 2 to 10 announce intervals (3) | 0 to 127 (127) | Multicast / Unicast | UDP/IPv4 |
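The bracketed second values in the table follow from raising 2 to the power of the logarithmic value. A minimal Python sketch of that conversion is shown below. The `interval_seconds` function is a hypothetical helper, and exact fractions are used so that values such as 1/8 are printed as written in the table.

```python
from fractions import Fraction


def interval_seconds(log_interval: int) -> Fraction:
    """Convert a logarithmic PTP interval value to seconds (2 ** value)."""
    return Fraction(2) ** log_interval


# Range limits that appear in the table.
for log_value in (-7, -4, -3, -1, 0, 1, 4, 5):
    print(f"log value {log_value:+d} -> {interval_seconds(log_value)} sec")
```

For example, a logSyncInterval of -3 corresponds to a Sync message every 1/8 second, which is 8 messages per second.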
