VersaStack for Data Center with All-Flash Storage and VMware vSphere 6.0

Deploying Cisco Unified Computing System 3.1 and IBM FlashSystem V9000 with VMware vSphere 6.0 Update 1a

Last Updated: April 26, 2016

About Cisco Validated Designs

The CVD program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, visit http://www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

CCDE, CCENT, Cisco Eos, Cisco Lumin, Cisco Nexus, Cisco StadiumVision, Cisco TelePresence, Cisco WebEx, the Cisco logo, DCE, and Welcome to the Human Network are trademarks; Changing the Way We Work, Live, Play, and Learn and Cisco Store are service marks; and Access Registrar, Aironet, AsyncOS, Bringing the Meeting To You, Catalyst, CCDA, CCDP, CCIE, CCIP, CCNA, CCNP, CCSP, CCVP, Cisco, the Cisco Certified Internetwork Expert logo, Cisco IOS, Cisco Press, Cisco Systems, Cisco Systems Capital, the Cisco Systems logo, Cisco Unity, Collaboration Without Limitation, EtherFast, EtherSwitch, Event Center, Fast Step, Follow Me Browsing, FormShare, GigaDrive, HomeLink, Internet Quotient, IOS, iPhone, iQuick Study, IronPort, the IronPort logo, LightStream, Linksys, MediaTone, MeetingPlace, MeetingPlace Chime Sound, MGX, Networkers, Networking Academy, Network Registrar, PCNow, PIX, PowerPanels, ProConnect, ScriptShare, SenderBase, SMARTnet, Spectrum Expert, StackWise, The Fastest Way to Increase Your Internet Quotient, TransPath, WebEx, and the WebEx logo are registered trademarks of Cisco Systems, Inc. and/or its affiliates in the United States and certain other countries.

All other trademarks mentioned in this document or website are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (0809R)

© 2016 Cisco Systems, Inc. All rights reserved.

Table of Contents

VersaStack for Data Center Overview

Solution Design and Architecture

Cisco Nexus 9000 Initial Configuration Setup

Enable the Appropriate Cisco Nexus 9000 Features and Settings

Create VLANs for VersaStack IP Traffic

Configure Virtual Port Channel Domain

Configure Network Interfaces for the VPC Peer Links

Configure Network Interfaces to Cisco UCS Fabric Interconnect

Configure Network Interfaces to Cisco UCS Fabric Interconnect

Management Plane Access for Servers and Virtual Machines

Cisco Nexus 9000 A and B using Interface VLAN Example 1

Cisco Nexus 9000 A and B using Port Channel Example 2

Cisco MDS 9148S Initial Configuration Setup

Enable Appropriate Cisco MDS Features and Settings

Enable VSANs and Create Port Channel and Cluster Zone

Secure Web Access to the IBM FlashSystem V9000 Service and Management GUI

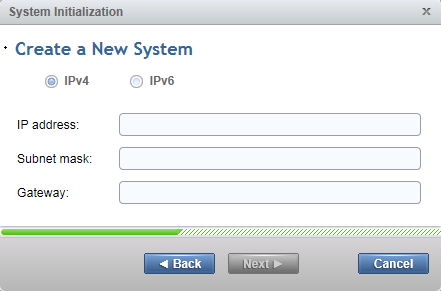

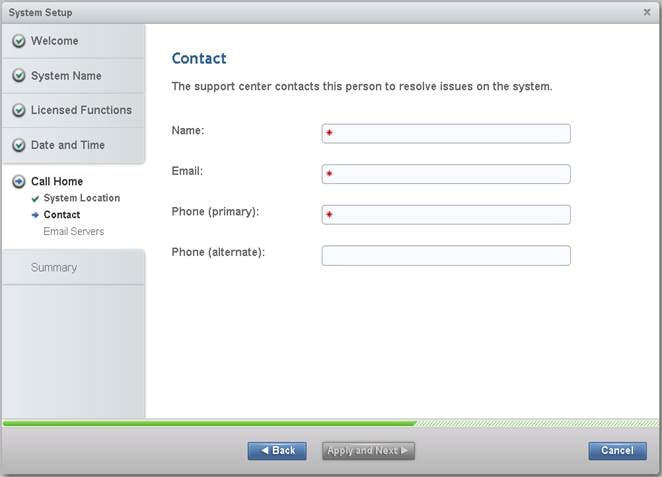

IBM FlashSystem V9000 Initial Configuration

IBM FlashSystem V9000 SSR Initialization

IBM FlashSystem V9000 Initial Configuration Setup

VersaStack Cisco UCS Initial Setup

VersaStack Cisco UCS Configuration

Cisco MDS 9148S Compute SAN Zoning

ESX and vSphere Installation and Setup

VersaStack VMware ESXi 6.0 Update 1a SAN Boot Installation

Log in to Cisco UCS 6200 Fabric Interconnect

vSphere Setup and ESXi configuration

Set Up VMkernel Ports and Virtual Switch

Mount Required VMFS Datastores

Build and Setup VMware vCenter 6.0

Install the Client Integration Plug-In

Building the VMware vCenter Server Appliance

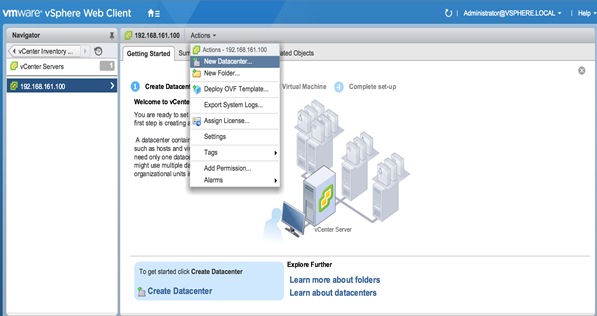

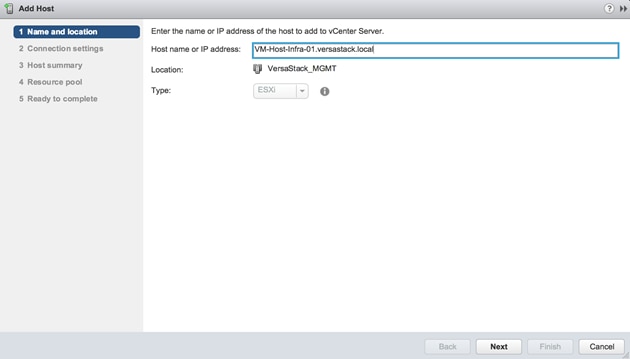

Setup vCenter Server with a Datacenter, Cluster, DRS and HA

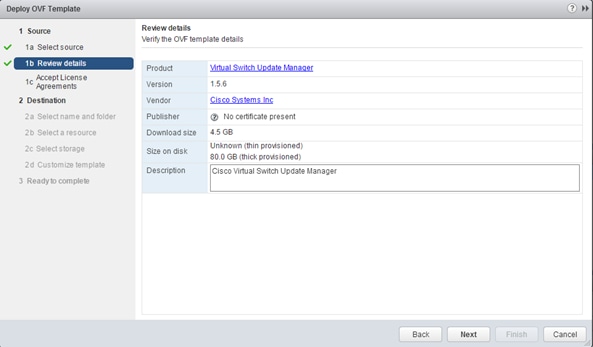

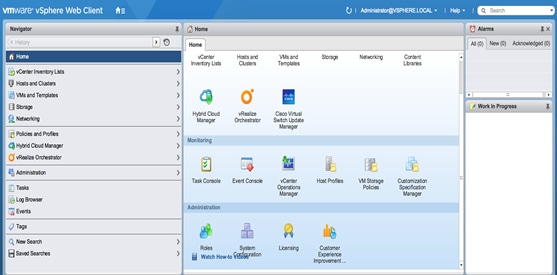

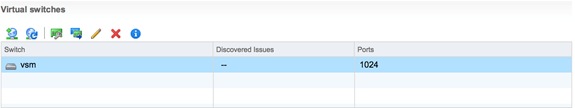

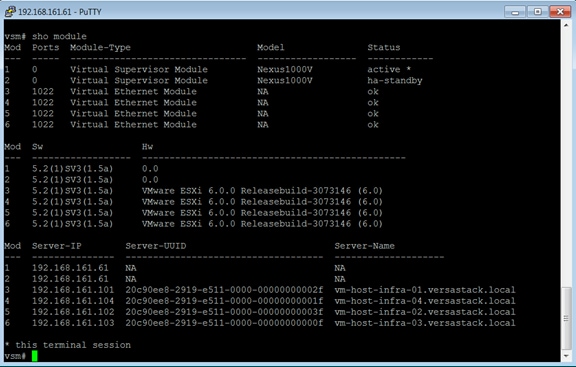

Setup the Optional Cisco Nexus 1000V Switch using Cisco Switch Update Manager

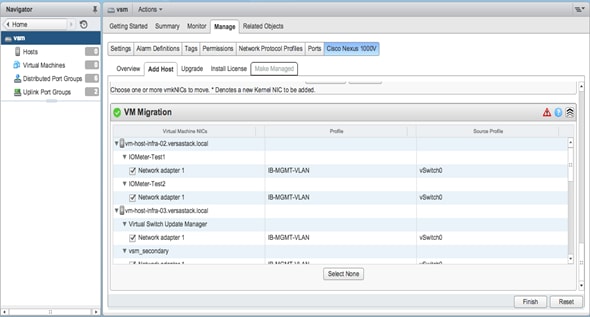

Migrate Networking Components for ESXi Hosts to Cisco Nexus 1000V

Migrate ESXi Host Redundant Network Ports to Cisco Nexus 1000V

Backup Management and other Software

Bill of Materials for VersaStack

Cisco Nexus 9000 Example Configurations

Cisco MDS Example Configurations

This deployment guide provides step-by-step instructions for deploying a VersaStack system consisting of IBM V9000 storage and Cisco UCS infrastructure for a successful VMware deployment. As an example, enterprises could deploy this solution to support environments that need extreme performance in the data center. For design guidance on which VersaStack solution best suits your requirements, please refer to the Design Zone information for VersaStack later in this document.

In today’s fast-paced IT environments there are many challenges, including:

· In a recent poll, 73% of all IT spending was used just to keep the current data center running, resulting in increased OPEX

· Rapid storage growth resulting in expensive and complex storage management

· Underutilized compute and storage resources

· IT groups are challenged to meet SLAs while dealing with complex troubleshooting

· IT groups are beset with time-consuming data migrations to manage growth and change

To overcome these issues and increase efficiency, IT departments are moving towards converged infrastructure solutions. These solutions offer many benefits, including completed integration testing and thoroughly documented deployment procedures. They also offer increased feature sets and premium support with a single point of contact. Cisco and IBM have teamed up to bring the best networking, compute, and storage together in a single solution named VersaStack. VersaStack offers customers versatility, simplicity, and great performance along with reliability. This document shows how to install an All-Flash VersaStack setup for a VMware infrastructure that is designed to increase IOPS and provide the best performance for I/O-intensive applications. VersaStack benefits that address the challenges noted above include:

· Cisco Unified Computing System Manager providing simplified management for compute and network through a consolidated management tool

· Cisco UCS Service Profiles designed to vastly reduce deployment time and provide consistency in the datacenter

· Cisco Fabric Interconnects to reduce infrastructure costs and simplify networking

· IBM Real-time compression to reduce the storage footprint and storage costs

· IBM Data-at-rest Encryption to provide Enterprise reliability

· Optional IBM Easy Tier to automate performance optimization while lowering storage costs by automatically placing infrequently accessed data on cheaper disks and frequently accessed data on faster tiers, thereby reducing costly migrations

· IBM’s FlashSystem V9000 Simplified Storage Management designed to simplify day-to-day storage tasks

VersaStack offers customers the ability to reduce OPEX while helping them meet their SLAs by simplifying many day-to-day IT tasks and by consolidating and automating others.

Introduction

The current data center trend, driven by the need to better utilize available resources, is towards virtualization on shared infrastructure. Higher levels of efficiency can be realized on integrated platforms due to the pooling of compute, network and storage resources, brought together by a pre-validated process. Validation eliminates compatibility issues and presents a platform with reliable features that can be deployed in an agile manner. This industry trend and the validation approach used to cater to it, has resulted in enterprise customers moving away from silo architectures. VersaStack serves as the foundation for a variety of workloads, enabling efficient architectural designs that can be deployed quickly and with confidence.

Audience

This document describes the architecture and deployment procedures of an infrastructure composed of Cisco®, IBM®, and VMware® virtualization that uses IBM FlashSystem V9000 block protocols. The intended audience for this document includes, but is not limited to, sales engineers, field consultants, professional services, IT managers, partner engineering, and customers who want to deploy the core VersaStack architecture with IBM FlashSystem V9000.

What’s New?

The following design elements distinguish this version of VersaStack from previous models:

· Support for the Cisco UCS 3.1(1e) release and Cisco UCS B200-M4 servers

· Support for the latest release of IBM FlashSystem V9000 software 7.6.0.4

· VMware vSphere 6.0 U1a

· Validation of the Cisco Nexus 9000 switches including an IBM FlashSystem V9000 storage array with 16G Host connectivity

· Validation of Cisco MDS 9148S Switches with 16G ports

For more information on previous VersaStack models, please refer to the VersaStack guides at:

Architecture

The VersaStack architecture is highly modular or "Pod-like". There is sufficient architectural flexibility and design options to scale as required with investment protection. The platform can be scaled up (adding resources to existing VersaStack units) and/or out (adding more VersaStack units).

Specifically, this VersaStack is a defined set of hardware and software that serves as an integrated foundation for both virtualized and non-virtualized solutions. VersaStack All-Flash includes IBM FlashSystem V9000, Cisco networking, the Cisco Unified Computing System™ (Cisco UCS®), Cisco MDS Fibre Channel switches, and VMware vSphere software in a single package. The design is flexible enough that the networking, computing, and storage can fit in one data center rack or be deployed according to a customer's data center design. Port density enables the networking components to accommodate multiple configurations.

One benefit of the VersaStack architecture is the ability to meet any customer's capacity or performance needs in a cost-effective manner. Because it is a wire-once architecture, a converged infrastructure system capable of serving multiple protocols across a single interface allows for customer choice and investment protection.

This architecture references relevant criteria pertaining to resiliency, cost benefit, and ease of deployment of all components including IBM FlashSystem V9000 storage.

Figure 1 illustrates the VMware vSphere built on VersaStack components and the network connections for a configuration with IBM FlashSystem V9000 Storage. This design uses the Cisco Nexus® 9372, and Cisco UCS B-Series with the Cisco UCS virtual interface card (VIC) and the IBM FlashSystem V9000 storage controllers connected in a highly available design using Cisco Virtual Port Channels (vPCs). This infrastructure is deployed to provide FC-booted hosts with block-level access to shared storage datastores.

Common infrastructure services such as Active Directory, DNS, DHCP, vCenter, Cisco Nexus 1000v virtual supervisor module (VSM), and Cisco UCS Performance Manager can be deployed on redundant, self-contained hardware in a Common Infrastructure Pod alongside the VersaStack Pod. At a customer's site, depending on whether this is a new data center, there may not be a need to build this infrastructure piece.

This document details the implementation and deployment of VersaStack Pod and does not cover the implementation of the Common Infrastructure Pod.

Figure 1 VersaStack Cabling Overview

The reference hardware configuration includes:

· Two Cisco Nexus 9396 or 9372 switches

· Two Cisco UCS 6248UP Fabric Interconnects

· Two Cisco MDS 9148S Fibre-Channel switches

· Support for 32 Cisco UCS C-Series servers without any additional networking components

· Support for 8 Cisco UCS B-Series servers without any additional blade server chassis

· Support for up to 160 Cisco UCS C-Series and B-Series servers by way of additional fabric extenders and blade server chassis

· Two IBM FlashSystem V9000 control enclosures and one V9000 Storage enclosure. Support for up to 12 flash modules of the same capacity within storage enclosures.

For server virtualization, the deployment includes VMware vSphere. Although this is the base design, each of the components can be scaled easily to support specific business requirements. For example, more (or different) servers or even blade chassis can be deployed to increase compute capacity, additional V9000 Storage Enclosures can be deployed to increase capacity, pairs of V9000 Control Enclosures can be deployed to improve I/O capability and throughput, and special hardware or software features can be added to introduce new features and functionality.

This document guides you through the low-level steps for deploying the base architecture. These procedures cover everything from physical cabling to network, compute and storage device configurations.

For detailed information regarding the design of VersaStack, please reference the Design guide at:

Software Revisions

The table below details the software revisions used for validating various components of the Cisco Nexus 9000 based VersaStack architecture. To validate your enic version, run the command "ethtool -i vmnic0" from the command line of the ESXi host. For more information regarding supported configurations, please reference the following interoperability links:

IBM:

http://www-03.ibm.com/systems/support/storage/ssic/interoperability.wss

Cisco:

http://www.cisco.com/web/techdoc/ucs/interoperability/matrix/matrix.html

Table 1 Software Revisions

| | Device | Image | Comments |
| --- | --- | --- | --- |
| Compute | Cisco UCS Fabric Interconnects 6200 Series Switches | 3.1(1e) | Embedded management |
| | Cisco UCS C 220 M3/M4 Server | 3.1(1e) | Software bundle release |
| | Cisco UCS B 200 M3/M4 Server | 3.1(1e) | Software bundle release |
| | Cisco ESXi enic driver | 2.3.0.7 | Ethernet driver for Cisco VIC |
| | Cisco ESXi fnic driver | 1.6.0.25 | FCoE driver for Cisco VIC |
| Network | Cisco Nexus 9372 Switches | 6.1(2)I3(5) | Operating system version |
| | Cisco MDS 9148S Switches | 6.2(13b) | FC switch firmware version |
| Storage | IBM FlashSystem V9000 Storage | 7.6.0.4 | Software version |
| Software | VMware vSphere ESXi | 6.0 update1a | Software version |
| | VMware vCenter | 6.0 | Software version |
| | Cisco Nexus 1000v Switch | 5.2(1)SV3(1.5a) | Software version |
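The enic driver check mentioned in this section can be scripted once the command output has been captured. The following is a minimal sketch, assuming the output of `ethtool -i vmnic0` is available as text in the usual `key: value` form. The helper names `parse_ethtool_info` and `driver_matches` and the sample output are illustrative assumptions and are not part of the validated procedure.

```python
# Validated enic driver version, taken from Table 1.
VALIDATED_ENIC_VERSION = "2.3.0.7"


def parse_ethtool_info(text):
    """Turn 'key: value' lines from `ethtool -i` output into a dict."""
    info = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:  # ignore lines that contain no colon
            info[key.strip()] = value.strip()
    return info


def driver_matches(text, driver="enic", version=VALIDATED_ENIC_VERSION):
    """Return True when the reported driver name and version match."""
    info = parse_ethtool_info(text)
    return info.get("driver") == driver and info.get("version") == version


# Illustrative sample only; real output comes from the ESXi host.
SAMPLE_OUTPUT = """driver: enic
version: 2.3.0.7
bus-info: 0000:06:00.0
"""

if __name__ == "__main__":
    print(driver_matches(SAMPLE_OUTPUT))
```

A mismatch only indicates that the host differs from the validated revision; the interoperability links above remain the authority on supported combinations.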

Configuration Guidelines

This document provides details on configuring a fully redundant, highly available VersaStack unit with IBM FlashSystem V9000 storage. Therefore, reference is made at each step to the component being configured as either A or B. For example, Controller-A and Controller-B are used to identify the IBM storage controllers that are provisioned within this document and Cisco Nexus A and Cisco Nexus B identifies the pair of Cisco Nexus switches that are configured. The Cisco UCS fabric Interconnects are similarly configured. Additionally, this document details the steps for provisioning multiple Cisco UCS hosts, and these are identified sequentially: VM-Host-Infra-01, VM-Host-Infra-02, and so on. Finally, to indicate that you should include information pertinent to your environment in a given step, <text> appears as part of the command structure.

This document is intended to enable you to fully configure the VersaStack Pod in the environment. Various steps require you to insert customer-specific naming conventions, IP addresses, VSAN and VLAN schemes, as well as to record appropriate MAC addresses.

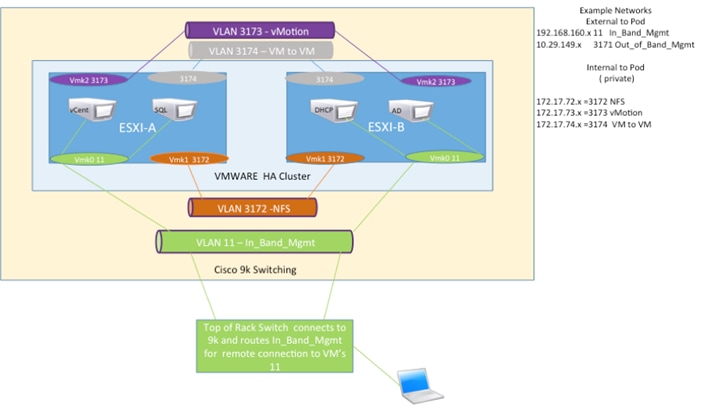

VLAN Topology

Table 2 and Table 3 describe the VLAN and VSAN IDs necessary for deployment as outlined in this guide. The virtual machines (VMs) necessary for deployment are outlined in this guide as well. Networking architectures can be unique to each environment. Since the design of this deployment is a POD, the architecture in this document leverages private networks, and only the in-band management VLAN traffic routes out through the Cisco Nexus 9000 switches. Other management traffic is routed through a separate out-of-band management switch; your architecture could vary based on the deployment objectives. An NFS VLAN is included in this document to allow connectivity to any existing NFS datastores for migration of virtual machines if required; however, NFS is not validated in the solution and is not supported on IBM FlashSystem V9000.

| VLAN Name | VLAN Purpose | ID used in this Document |
| --- | --- | --- |
| Native | VLAN to which untagged frames are assigned | 2 |
| Out of Band Mgmt | VLAN for out-of-band management interfaces | 3171 |
| NFS | VLAN for Infrastructure NFS traffic | 3172 |
| vMotion | VLAN for VMware vMotion | 3173 |
| VM-Traffic | VLAN for Production VM Interfaces | 3174 |
| In-Band Mgmt | VLAN for in-band management interfaces | 11 |

Figure 2 VLAN Logical View
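Because the VLAN IDs are site-specific, it can help to sanity-check a VLAN plan before entering it on the switches. The sketch below is one possible check, assuming the plan is held as a simple name-to-ID mapping. The function name `check_vlan_plan` is hypothetical, and the check covers only the 802.1Q range of 1 to 4094 and duplicate IDs; a given platform may reserve additional ranges that are not checked here.

```python
# VLAN IDs used in this document (see the VLAN table above).
VLANS = {
    "Native": 2,
    "Out of Band Mgmt": 3171,
    "NFS": 3172,
    "vMotion": 3173,
    "VM-Traffic": 3174,
    "In-Band Mgmt": 11,
}


def check_vlan_plan(vlans):
    """Return a list of problems: IDs outside 1-4094 or IDs used twice."""
    problems = []
    seen = {}  # VLAN ID -> first name that claimed it
    for name, vlan_id in vlans.items():
        if not 1 <= vlan_id <= 4094:
            problems.append(f"{name}: VLAN ID {vlan_id} is outside 1-4094")
        if vlan_id in seen:
            problems.append(
                f"{name}: VLAN ID {vlan_id} is already used by {seen[vlan_id]}"
            )
        else:
            seen[vlan_id] = name
    return problems


if __name__ == "__main__":
    for problem in check_vlan_plan(VLANS):
        print(problem)
```

An empty result means the plan passed both checks; substitute your own IDs for the values used in this document.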

Fibre Channel Topology

The SAN infrastructure allows storage enclosures or additional building blocks to be added non-disruptively. A pair of Cisco MDS switches provides redundant Fibre Channel connectivity. Separate fabrics have been created using VSANs on the Cisco MDS switches, providing dedicated host or server-side storage area networks (SANs) and a private fabric to support the cluster interconnects.

The logical fabric isolation provides:

· No host or server can accidentally access the storage enclosure.

· Congestion on the host or server-side SAN cannot cause performance problems for both the host or server-side SAN and the FlashSystem V9000.

Table 3 describes the VSANs necessary for deployment as outlined in this guide.

| VSAN Name | VSAN Purpose | ID Used in Validating This Document |
| --- | --- | --- |
| Host-Fabric-A | VSAN for Host connectivity | 101 |
| Host-Fabric-B | VSAN for Host connectivity | 102 |
| Cluster-Fabric-A | VSAN for Cluster connectivity | 201 |
| Cluster-Fabric-B | VSAN for Cluster connectivity | 202 |

Figure 3 Fibre Channel Topology View

Virtual Machines

This document assumes that the required infrastructure machines exist or are created during the installation. For example, some of the machines that would be necessary are listed in the table below.

Table 4 Virtual Machine List

| Virtual Machine Description | Host Name |
| --- | --- |
| Active Directory | |
| vCenter Server | |
| DHCP Server | |

Configuration Variables

The following customer implementation values for the variables below should be identified prior to starting the installation procedure.

Table 5 Customer Variables

| Variable | Description | Customer Implementation Value |
| --- | --- | --- |
| <<var_cont01_mgmt_ip>> | Out-of-band management IP for V9000 Controller 01 | |
| <<var_cont01_mgmt_mask>> | Out-of-band management network netmask | |
| <<var_cont01_mgmt_gateway>> | Out-of-band management network default gateway | |
| <<var_cont02_mgmt_ip>> | Out-of-band management IP for V9000 Controller 02 | |
| <<var_cont02_mgmt_mask>> | Out-of-band management network netmask | |
| <<var_cont02_mgmt_gateway>> | Out-of-band management network default gateway | |
| <<var_can01_srvc_ip>> | Out-of-band service IP for canister1, Storage Enclosure | |
| <<var_can02_srvc_ip>> | Out-of-band service IP for canister2, Storage Enclosure | |
| <<var_can_srvc_mask>> | Out-of-band services management network netmask | |
| <<var_can_srvc_gateway>> | Out-of-band services management network default gateway | |
| <<var_cluster_mgmt_ip>> | Out-of-band management IP for V9000 cluster | |
| <<var_cluster_mgmt_mask>> | Out-of-band management network netmask | |
| <<var_cluster_mgmt_gateway>> | Out-of-band management network default gateway | |
| <<var_password>> | Global default administrative password | |
| <<var_dns_domain_name>> | DNS domain name | |
| <<var_nameserver_ip>> | DNS server IP(s) | |
| <<var_timezone>> | VersaStack time zone (for example, America/New_York) | |
| <<var_global_ntp_server_ip>> | NTP server IP address | |
| <<var_email_contact>> | Administrator e-mail address | |
| <<var_admin_phone>> | Local contact number for support | |
| <<var_mailhost_ip>> | Mail server host IP | |
| <<var_country_code>> | Two-letter country code | |
| <<var_state>> | State or province name | |
| <<var_city>> | City name | |
| <<var_org>> | Organization or company name | |
| <<var_unit>> | Organizational unit name | |
| <<var_street_address>> | Street address for support information | |
| <<var_contact_name>> | Name of contact for support | |
| <<var_admin>> | Secondary Admin account for storage login | |
| <<var_nexus_A_hostname>> | Cisco Nexus A host name | |
| <<var_nexus_A_mgmt0_ip>> | Out-of-band Cisco Nexus A management IP address | |
| <<var_nexus_A_mgmt0_netmask>> | Out-of-band management network netmask | |
| <<var_nexus_A_mgmt0_gw>> | Out-of-band management network default gateway | |
| <<var_nexus_B_hostname>> | Cisco Nexus B host name | |
| <<var_nexus_B_mgmt0_ip>> | Out-of-band Cisco Nexus B management IP address | |
| <<var_nexus_B_mgmt0_netmask>> | Out-of-band management network netmask | |
| <<var_nexus_B_mgmt0_gw>> | Out-of-band management network default gateway | |
| <<var_ib-mgmt_vlan_id>> | In-band management network VLAN ID | |
| <<var_native_vlan_id>> | Native VLAN ID | |
| <<var_nfs_vlan_id>> | NFS VLAN ID | |
| <<var_vmotion_vlan_id>> | VMware vMotion® VLAN ID | |
| <<var_vm-traffic_vlan_id>> | VM traffic VLAN ID | |
| <<var_nexus_vpc_domain_id>> | Unique Cisco Nexus switch VPC domain ID | |
| <<var_ucs_clustername>> | Cisco UCS Manager cluster host name | |
| <<var_ucsa_mgmt_ip>> | Cisco UCS fabric interconnect (FI) A out-of-band management IP address | |
| <<var_ucsb_mgmt_ip>> | Cisco UCS fabric interconnect (FI) B out-of-band management IP address | |
| <<var_ucsa_mgmt_mask>> | Out-of-band management network netmask | |
| <<var_ucsa_mgmt_gateway>> | Out-of-band management network default gateway | |
| <<var_ucs_cluster_ip>> | Cisco UCS Manager cluster IP address | |
| <<var_cimc_mask>> | Out-of-band management network netmask | |
| <<var_cimc_gateway>> | Out-of-band management network default gateway | |
| <<var_vsm_domain_id>> | Unique Cisco Nexus 1000v virtual supervisor module (VSM) domain ID | |
| <<var_vsm_mgmt_ip>> | Cisco Nexus 1000v VSM management IP address | |
| <<var_vsm_updatemgr_mgmt_ip>> | Virtual Switch Update Manager IP address | |
| <<var_vsm_mgmt_mask>> | In-band management network netmask | |
| <<var_vsm_mgmt_gateway>> | In-band management network default gateway | |
| <<var_vsm_hostname>> | Cisco Nexus 1000v VSM host name | |
| <<var_ftp_server>> | IP address for FTP server | |
| <<var_MDS_A_hostname>> | Name for the FC Cisco MDS Switch in Fabric A | |
| <<var_MDS_A_mgmt0_ip>> | Cisco MDS switch out-of-band management IP address | |
| <<var_MDS_A_mgmt0_netmask>> | Cisco MDS switch out-of-band management IP netmask | |
| <<var_MDS_A_mgmt0_gw>> | Cisco MDS switch out-of-band management IP gateway | |
| <<var_MDS_B_hostname>> | Name for the FC Cisco MDS Switch in Fabric B | |
| <<var_MDS_B_mgmt0_ip>> | Cisco MDS switch out-of-band management IP address | |
| <<var_MDS_B_mgmt0_netmask>> | Cisco MDS switch out-of-band management IP netmask | |
| <<var_MDS_B_mgmt0_gw>> | Cisco MDS switch out-of-band management IP gateway | |
| <<var_UTC_offset>> | UTC time offset for your area | |
| <<var_vsan_a_id>> | VSAN ID for Host connectivity on Cisco MDS switch A (101 is used) | |
| <<var_vsan_B_id>> | VSAN ID for Host connectivity on Cisco MDS switch B (102 is used) | |
| <<var_vsan_a_clus_id>> | VSAN ID for V9000 Cluster connectivity on Cisco MDS switch A (201 is used) | |
| <<var_vsan_B_clus_id>> | VSAN ID for V9000 Cluster connectivity on Cisco MDS switch B (202 is used) | |
| <<var_fabric_a_fcoe_vlan_id>> | Fabric ID for Cisco MDS switch A (101 is used) | |
| <<var_fabric_b_fcoe_vlan_id>> | Fabric ID for Cisco MDS switch B (102 is used) | |
| <<var_In-band_mgmtblock_net>> | Block of IP addresses for KVM access for Cisco UCS | |
| <<var_vmhost_infra_01_ip>> | VMware ESXi host 01 in-band Mgmt IP | |
| <<var_nfs_vlan_id_ip_host-01>> | NFS VLAN IP address for ESXi host 01 | |
| <<var_nfs_vlan_id_mask_host-01>> | NFS VLAN netmask for ESXi host 01 | |
| <<var_vmotion_vlan_id_ip_host-01>> | vMotion VLAN IP address for ESXi host 01 | |
| <<var_vmotion_vlan_id_mask_host-01>> | vMotion VLAN netmask for ESXi host 01 | |

The last 5 variables should be repeated for all ESXi hosts.
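Once the customer values are recorded, the `<<var_...>>` placeholders in later command listings have to be replaced consistently. The sketch below shows one way to do that and to catch a placeholder that has no recorded value. It is an illustration under stated assumptions: the function name `render`, the sample values, and the two-line template are hypothetical and do not come from the validated configuration.

```python
import re

# Matches placeholders such as <<var_nexus_A_hostname>> or <<var_ib-mgmt_vlan_id>>.
PLACEHOLDER = re.compile(r"<<(var_[A-Za-z0-9_-]+)>>")


def render(template, values):
    """Replace every <<var_...>> placeholder; fail if any value is missing."""
    missing = sorted(
        {name for name in PLACEHOLDER.findall(template) if name not in values}
    )
    if missing:
        raise ValueError("no value recorded for: " + ", ".join(missing))
    return PLACEHOLDER.sub(lambda match: str(values[match.group(1)]), template)


# Hypothetical values and command lines, for illustration only.
VALUES = {"var_nexus_A_hostname": "nexus-a", "var_ib-mgmt_vlan_id": 11}
TEMPLATE = "hostname <<var_nexus_A_hostname>>\nvlan <<var_ib-mgmt_vlan_id>>"

if __name__ == "__main__":
    print(render(TEMPLATE, VALUES))
```

Failing on a missing value, rather than leaving the placeholder in place, keeps an unfilled `<<var_...>>` string from being pasted into a device by mistake.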

The variables below for the Fibre Channel environment are to be collected during the installation phase for subsequent use in this document.

IBM FlashSystem V9000 Storage Controllers are also referred to as AC2 and the Storage Enclosure as AE2. This document refers to the controllers as Controller A (ContA) and Controller B (ContB) and to the storage enclosure as SE.

Following are the IBM FlashSystem V9000 Storage Fibre Channel port naming conventions used on Cisco MDS switches:

· Cont – Storage Controller

· SE – Storage Enclosure

· FE – Front-end, Host connectivity

· BE – Back-end, Cluster communication

Table 6 WWPN Variables

| Source | Switch/ Port | Variable | WWPN |
| --- | --- | --- | --- |
| FC_SE-BE1-fabricA | Switch A, FC13 | <<var_wwpn_FC_SE-BE1-fabricA>> | |
| FC_SE-BE2-fabricA | Switch A, FC14 | <<var_wwpn_FC_SE-BE2-fabricA>> | |
| FC_SE-BE3-fabricA | Switch A, FC15 | <<var_wwpn_FC_SE-BE3-fabricA>> | |
| FC_SE-BE4-fabricA | Switch A, FC16 | <<var_wwpn_FC_SE-BE4-fabricA>> | |
| FC_SE-BE5-fabricB | Switch B, FC13 | <<var_wwpn_FC_SE-BE5-fabricB>> | |
| FC_SE-BE6-fabricB | Switch B, FC14 | <<var_wwpn_FC_SE-BE6-fabricB>> | |
| FC_SE-BE7-fabricB | Switch B, FC15 | <<var_wwpn_FC_SE-BE7-fabricB>> | |
| FC_SE-BE8-fabricB | Switch B, FC16 | <<var_wwpn_FC_SE-BE8-fabricB>> | |
| FC_ContA-BE1-fabricA | Switch A, FC9 | <<var_wwpn_FC_ContA-BE1-fabricA>> | |
| FC_ContA-BE2-fabricA | Switch A, FC10 | <<var_wwpn_FC_ContA-BE2-fabricA>> | |
| FC_ContB-BE1-fabricA | Switch A, FC11 | <<var_wwpn_FC_ContB-BE1-fabricA>> | |
| FC_ContB-BE2-fabricA | Switch A, FC12 | <<var_wwpn_FC_ContB-BE2-fabricA>> | |
| FC_ContA-BE3-fabricB | Switch B, FC9 | <<var_wwpn_FC_ContA-BE3-fabricB>> | |
| FC_ContA-BE4-fabricB | Switch B, FC10 | <<var_wwpn_FC_ContA-BE4-fabricB>> | |
| FC_ContB-BE3-fabricB | Switch B, FC11 | <<var_wwpn_FC_ContB-BE3-fabricB>> | |
| FC_ContB-BE4-fabricB | Switch B, FC12 | <<var_wwpn_FC_ContB-BE4-fabricB>> | |
| FC_ContA-FE1-fabricA | Switch A, FC5 | <<var_wwpn_FC_ContA-FE1-fabricA>> | |
| FC_ContA-FE2-fabricB | Switch B, FC5 | <<var_wwpn_FC_ContA-FE2-fabricB>> | |
| FC_ContA-FE3-fabricA | Switch A, FC6 | <<var_wwpn_FC_ContA-FE3-fabricA>> | |
| FC_ContA-FE4-fabricB | Switch B, FC6 | <<var_wwpn_FC_ContA-FE4-fabricB>> | |
| FC_ContB-FE1-fabricA | Switch A, FC7 | <<var_wwpn_FC_ContB-FE1-fabricA>> | |
| FC_ContB-FE2-fabricB | Switch B, FC7 | <<var_wwpn_FC_ContB-FE2-fabricB>> | |
| FC_ContB-FE3-fabricA | Switch A, FC8 | <<var_wwpn_FC_ContB-FE3-fabricA>> | |
| FC_ContB-FE4-fabricB | Switch B, FC8 | <<var_wwpn_FC_ContB-FE4-fabricB>> | |
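The port names in Table 6 encode the device, the role (FE or BE), and the fabric, so the VSAN a port is expected to belong to can be derived from its name. The sketch below illustrates this, assuming that front-end ports belong to the host VSANs and back-end ports to the cluster VSANs listed in Table 3. That mapping is an inference from the naming conventions above, and the function name `describe_port` is hypothetical; the zoning steps in this guide remain authoritative.

```python
import re

# VSAN IDs from Table 3, keyed by (role, fabric).
VSAN_BY_ROLE_AND_FABRIC = {
    ("FE", "A"): 101,  # Host-Fabric-A
    ("FE", "B"): 102,  # Host-Fabric-B
    ("BE", "A"): 201,  # Cluster-Fabric-A
    ("BE", "B"): 202,  # Cluster-Fabric-B
}

# Example: FC_ContA-FE1-fabricA -> device ContA, role FE, index 1, fabric A.
PORT_NAME = re.compile(r"^FC_(ContA|ContB|SE)-(FE|BE)(\d+)-fabric([AB])$")


def describe_port(name):
    """Split a Table 6 port name into its parts and look up the expected VSAN."""
    match = PORT_NAME.match(name)
    if match is None:
        raise ValueError(f"unrecognized port name: {name}")
    device, role, index, fabric = match.groups()
    return {
        "device": device,
        "role": role,
        "index": int(index),
        "fabric": fabric,
        "vsan": VSAN_BY_ROLE_AND_FABRIC[(role, fabric)],
    }


if __name__ == "__main__":
    print(describe_port("FC_ContA-FE1-fabricA"))
```

Running each recorded name through such a check before zoning can catch a port that was labeled for the wrong fabric.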

VersaStack Build Process

Figure 4 VersaStack Build Process Flow Chart

VersaStack Cabling

The information in this section is provided as a reference for cabling the equipment in a VersaStack environment. To simplify cabling requirements, the tables include both local and remote device and port locations.

The tables in this section contain details for the prescribed and supported configuration of the IBM FlashSystem V9000 running 7.6.0.4.

This document assumes that out-of-band management ports are plugged into an existing management infrastructure at the deployment site. These interfaces will be used in various configuration steps.

Be sure to follow the cabling directions in this section. Failure to do so will result in changes to the deployment procedures that follow because specific port locations are mentioned.

It is possible to order IBM FlashSystem V9000 systems in a different configuration from what is presented in the tables in this section. Before starting, be sure that the configuration matches the descriptions in the tables and diagrams in this section.

Figure 5 shows the cabling diagrams for VersaStack configurations using the Cisco Nexus 9000 and IBM FlashSystem V9000. For more information about FlashSystem V9000 enclosure cabling information, reference the following URL:

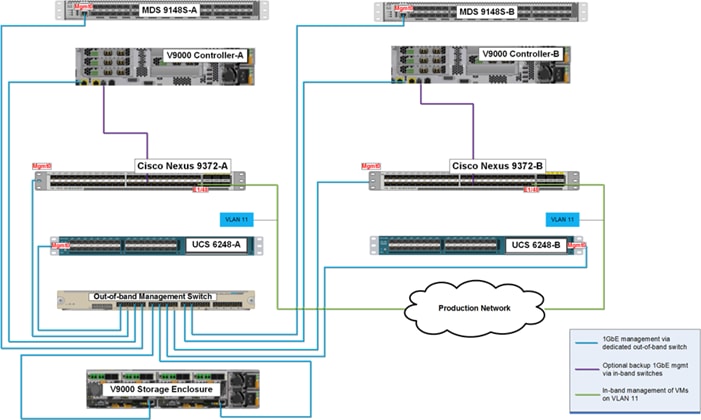

Figure 6 shows the management cabling. The V9000s have redundant management connections. One path is through the dedicated out-of-band management switch, and the secondary path is through the in-band management path going up through the Cisco Nexus 9000 to the production network.

Figure 6 VersaStack Management Cabling

The tables below provide the details of all the connections in use.

Table 7 Cisco Nexus 9000-A Cabling Information

| Local Device | Local Port | Connection | Remote Device | Remote Port |
| --- | --- | --- | --- | --- |
| Cisco Nexus 9000-A | Eth1/25 | 10GbE | Cisco UCS fabric interconnect-A | Eth1/25 |
| | Eth1/26 | 10GbE | Cisco UCS fabric interconnect-B | Eth1/26 |
| | Eth1/49 | 40GbE | Cisco Nexus 9000-B | Eth1/49 |
| | Eth1/50 | 40GbE | Cisco Nexus 9000-B | Eth1/50 |
| | Eth1/48 | GbE | GbE management switch | Any |

For devices requiring GbE connectivity, use the GbE copper SFPs (GLC-T=).

Table 8 Cisco Nexus 9000-B Cabling Information

| Local Device | Local Port | Connection | Remote Device | Remote Port |
| --- | --- | --- | --- | --- |
| Cisco Nexus 9000-B | Eth1/25 | 10GbE | Cisco UCS fabric interconnect-B | Eth1/25 |
| | Eth1/26 | 10GbE | Cisco UCS fabric interconnect-A | Eth1/26 |
| | Eth1/49 | 40GbE | Cisco Nexus 9000-A | Eth1/49 |
| | Eth1/50 | 40GbE | Cisco Nexus 9000-A | Eth1/50 |
| | Eth1/48 | GbE | GbE management switch | Any |

For devices requiring GbE connectivity, use the GbE copper SFPs (GLC-T=).

Figure 7 IBM V9000 Storage Day-0 Fibre Cabling Reference

Table 9 Cisco MDS 9148S-A Cabling Reference to Switch Port Correlation

| Port naming on Switch | Switch/ Port | Cabling reference |
| --- | --- | --- |
| FC_SE-BE1-fabricA | Switch A, FC13 | 9 |
| FC_SE-BE2-fabricA | Switch A, FC14 | 11 |
| FC_SE-BE3-fabricA | Switch A, FC15 | 13 |
| FC_SE-BE4-fabricA | Switch A, FC16 | 15 |
| FC_ContA-BE1-fabricA | Switch A, FC9 | 1 |
| FC_ContA-BE2-fabricA | Switch A, FC10 | 3 |
| FC_ContB-BE1-fabricA | Switch A, FC11 | 5 |
| FC_ContB-BE2-fabricA | Switch A, FC12 | 7 |
| FC_ContA-FE1-fabricA | Switch A, FC5 | 65 |
| FC_ContA-FE3-fabricA | Switch A, FC6 | 67 |
| FC_ContB-FE1-fabricA | Switch A, FC7 | 69 |
| FC_ContB-FE3-fabricA | Switch A, FC8 | 71 |

Table 10 Cisco Nexus MDS 9148S-B Cabling Reference Correlation

| Port naming on Switch | Switch/ Port | Cabling reference |
| --- | --- | --- |
| FC_SE-BE5-fabricB | Switch B, FC13 | 10 |
| FC_SE-BE6-fabricB | Switch B, FC14 | 12 |
| FC_SE-BE7-fabricB | Switch B, FC15 | 14 |
| FC_SE-BE8-fabricB | Switch B, FC16 | 16 |
| FC_ContA-BE3-fabricB | Switch B, FC9 | 2 |
| FC_ContA-BE4-fabricB | Switch B, FC10 | 4 |
| FC_ContB-BE3-fabricB | Switch B, FC11 | 6 |
| FC_ContB-BE4-fabricB | Switch B, FC12 | 8 |
| FC_ContA-FE2-fabricB | Switch B, FC5 | 66 |
| FC_ContA-FE4-fabricB | Switch B, FC6 | 68 |
| FC_ContB-FE2-fabricB | Switch B, FC7 | 70 |
| FC_ContB-FE4-fabricB | Switch B, FC8 | 72 |
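The cabling references in Tables 9 and 10 follow a regular pattern: fabric A uses odd numbers, fabric B uses even numbers, and a given FC port on switch B carries the reference one higher than the same port on switch A. The following Python sketch checks a transcription of the two tables against that pattern. The dictionary layout and the function name are illustrative assumptions, not part of the validated design.

```python
# Cabling reference per MDS FC port, transcribed from Tables 9 and 10.
FABRIC_A = {5: 65, 6: 67, 7: 69, 8: 71, 9: 1, 10: 3, 11: 5, 12: 7,
            13: 9, 14: 11, 15: 13, 16: 15}
FABRIC_B = {5: 66, 6: 68, 7: 70, 8: 72, 9: 2, 10: 4, 11: 6, 12: 8,
            13: 10, 14: 12, 15: 14, 16: 16}


def check_references(fabric_a, fabric_b):
    """Return a list of ports that break the odd/even pairing pattern."""
    problems = []
    for port in sorted(set(fabric_a) | set(fabric_b)):
        ref_a, ref_b = fabric_a.get(port), fabric_b.get(port)
        if ref_a is None or ref_b is None:
            problems.append(f"FC{port}: missing on one fabric")
        elif ref_a % 2 != 1 or ref_b != ref_a + 1:
            problems.append(
                f"FC{port}: expected odd A and B = A + 1, got {ref_a}/{ref_b}"
            )
    return problems


print(check_references(FABRIC_A, FABRIC_B) or "cabling references are consistent")
```

A check like this can be useful when cable labels are printed from a spreadsheet, because a swapped label then shows up before the cable is run.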

Table 11 IBM FlashSystem V9000 Controller, Cont-A Cabling Information

| Local Device | Local Port | Connection | Remote Device | Remote Port |
| --- | --- | --- | --- | --- |
| IBM FlashSystem V9000 Controller, Cont-A | E1 | GbE | GbE management switch | Any |
| | E2 (optional) | GbE | Cisco Nexus 9000-A | Any |
| | FC1 (Slot2:Port2) | 16 Gbps | Cisco MDS 9148S-A | fc1/5 |
| | FC2 (Slot2:Port4) | 16 Gbps | Cisco MDS 9148S-B | fc1/5 |
| | FC3 (Slot5:Port2) | 16 Gbps | Cisco MDS 9148S-A | fc1/6 |
| | FC4 (Slot5:Port4) | 16 Gbps | Cisco MDS 9148S-B | fc1/6 |
| | FC5 (Slot1:Port2) | 16 Gbps | Cisco MDS 9148S-A | fc1/9 |
| | FC6 (Slot1:Port4) | 16 Gbps | Cisco MDS 9148S-A | fc1/10 |
| | FC7 (Slot3:Port2) | 16 Gbps | Cisco MDS 9148S-B | fc1/9 |
| | FC8 (Slot3:Port4) | 16 Gbps | Cisco MDS 9148S-B | fc1/10 |

Table 12 IBM FlashSystem V9000 Controller, Cont-B Cabling Information

| Local Device | Local Port | Connection | Remote Device | Remote Port |
| --- | --- | --- | --- | --- |
| IBM FlashSystem V9000 Controller, Cont-B | E1 | GbE | GbE management switch | Any |
| | E2 (optional) | GbE | Cisco Nexus 9000-B | Any |
| | FC1 (Slot2:Port2) | 16 Gbps | Cisco MDS 9148S-A | fc1/7 |
| | FC2 (Slot2:Port4) | 16 Gbps | Cisco MDS 9148S-B | fc1/7 |
| | FC3 (Slot5:Port2) | 16 Gbps | Cisco MDS 9148S-A | fc1/8 |
| | FC4 (Slot5:Port4) | 16 Gbps | Cisco MDS 9148S-B | fc1/8 |
| | FC5 (Slot1:Port2) | 16 Gbps | Cisco MDS 9148S-A | fc1/11 |
| | FC6 (Slot1:Port4) | 16 Gbps | Cisco MDS 9148S-A | fc1/12 |
| | FC7 (Slot3:Port2) | 16 Gbps | Cisco MDS 9148S-B | fc1/11 |
| | FC8 (Slot3:Port4) | 16 Gbps | Cisco MDS 9148S-B | fc1/12 |

Table 13 IBM FlashSystem V9000 Storage Enclosure, SE Cabling Information

| Local Device | Local Port | Connection | Remote Device | Remote Port |
| --- | --- | --- | --- | --- |
| IBM FlashSystem V9000 Storage Enclosure, SE | E1 | GbE | GbE management switch | Any |
| | E2 | GbE | GbE management switch | Any |
| | FC1 | 16 Gbps | Cisco MDS 9148S-A | fc1/13 |
| | FC2 | 16 Gbps | Cisco MDS 9148S-A | fc1/14 |
| | FC3 | 16 Gbps | Cisco MDS 9148S-A | fc1/15 |
| | FC4 | 16 Gbps | Cisco MDS 9148S-A | fc1/16 |
| | FC5 | 16 Gbps | Cisco MDS 9148S-B | fc1/13 |
| | FC6 | 16 Gbps | Cisco MDS 9148S-B | fc1/14 |
| | FC7 | 16 Gbps | Cisco MDS 9148S-B | fc1/15 |
| | FC8 | 16 Gbps | Cisco MDS 9148S-B | fc1/16 |

Table 14 Cisco Nexus MDS 9148S-A Cabling Information

| Local Device | Local Port | Connection | Remote Device | Remote Port |
| --- | --- | --- | --- | --- |
| Cisco MDS 9148S-A | Mgmt0 | GbE | GbE management switch | Any |
| | fc1/1 | 8 Gbps | Cisco UCS Fabric Interconnect 6248-A | fc29 |
| | fc1/2 | 8 Gbps | Cisco UCS Fabric Interconnect 6248-A | fc30 |
| | fc1/3 | 8 Gbps | Cisco UCS Fabric Interconnect 6248-A | fc31 |
| | fc1/4 | 8 Gbps | Cisco UCS Fabric Interconnect 6248-A | fc32 |
| | fc1/5 | 16 Gbps | IBM controller, ContA-FE1 | FC1 |
| | fc1/6 | 16 Gbps | IBM controller, ContA-FE3 | FC3 |
| | fc1/7 | 16 Gbps | IBM controller, ContB-FE1 | FC1 |
| | fc1/8 | 16 Gbps | IBM controller, ContB-FE3 | FC3 |
| | fc1/9 | 16 Gbps | IBM controller, ContA-BE1 | FC5 |
| | fc1/10 | 16 Gbps | IBM controller, ContA-BE2 | FC6 |
| | fc1/11 | 16 Gbps | IBM controller, ContB-BE1 | FC5 |
| | fc1/12 | 16 Gbps | IBM controller, ContB-BE2 | FC6 |
| | fc1/13 | 16 Gbps | IBM Storage Enclosure, SE-BE1 | FC1 |
| | fc1/14 | 16 Gbps | IBM Storage Enclosure, SE-BE2 | FC2 |
| | fc1/15 | 16 Gbps | IBM Storage Enclosure, SE-BE3 | FC3 |
| | fc1/16 | 16 Gbps | IBM Storage Enclosure, SE-BE4 | FC4 |

Table 15 Cisco Nexus MDS 9148S-B Cabling Information

| Local Device | Local Port | Connection | Remote Device | Remote Port |
| --- | --- | --- | --- | --- |
| Cisco MDS 9148S-B | Mgmt0 | GbE | GbE management switch | Any |
| | fc1/1 | 8 Gbps | Cisco UCS Fabric Interconnect 6248-B | fc29 |
| | fc1/2 | 8 Gbps | Cisco UCS Fabric Interconnect 6248-B | fc30 |
| | fc1/3 | 8 Gbps | Cisco UCS Fabric Interconnect 6248-B | fc31 |
| | fc1/4 | 8 Gbps | Cisco UCS Fabric Interconnect 6248-B | fc32 |
| | fc1/5 | 16 Gbps | IBM controller, ContA-FE2 | FC2 |
| | fc1/6 | 16 Gbps | IBM controller, ContA-FE4 | FC4 |
| | fc1/7 | 16 Gbps | IBM controller, ContB-FE2 | FC2 |
| | fc1/8 | 16 Gbps | IBM controller, ContB-FE4 | FC4 |
| | fc1/9 | 16 Gbps | IBM controller, ContA-BE3 | FC7 |
| | fc1/10 | 16 Gbps | IBM controller, ContA-BE4 | FC8 |
| | fc1/11 | 16 Gbps | IBM controller, ContB-BE3 | FC7 |
| | fc1/12 | 16 Gbps | IBM controller, ContB-BE4 | FC8 |
| | fc1/13 | 16 Gbps | IBM Storage Enclosure, SE-BE5 | FC5 |
| | fc1/14 | 16 Gbps | IBM Storage Enclosure, SE-BE6 | FC6 |
| | fc1/15 | 16 Gbps | IBM Storage Enclosure, SE-BE7 | FC7 |
| | fc1/16 | 16 Gbps | IBM Storage Enclosure, SE-BE8 | FC8 |

Table 16 Cisco UCS Fabric Interconnect A Cabling Information

| Local Device | Local Port | Connection | Remote Device | Remote Port |
| --- | --- | --- | --- | --- |
| Cisco UCS fabric interconnect-A | Mgmt0 | GbE | GbE management switch | Any |
| | Eth1/25 | 10GbE | Cisco Nexus 9000-A | Eth1/25 |
| | Eth1/26 | 10GbE | Cisco Nexus 9000-B | Eth1/26 |
| | Eth1/1 | 10GbE | Cisco UCS Chassis FEX-A | IOM 1/1 |
| | Eth1/2 | 10GbE | Cisco UCS Chassis FEX-A | IOM 1/2 |
| | Eth1/3 | 10GbE | Cisco UCS Chassis FEX-A | IOM 1/3 |
| | Eth1/4 | 10GbE | Cisco UCS Chassis FEX-A | IOM 1/4 |
| | fc29 | 8 Gbps | Cisco MDS 9148S-A | fc1/1 |
| | fc30 | 8 Gbps | Cisco MDS 9148S-A | fc1/2 |
| | fc31 | 8 Gbps | Cisco MDS 9148S-A | fc1/3 |
| | fc32 | 8 Gbps | Cisco MDS 9148S-A | fc1/4 |
| | L1 | GbE | Cisco UCS fabric interconnect-B | L1 |
| | L2 | GbE | Cisco UCS fabric interconnect-B | L2 |

Table 17 Cisco UCS Fabric Interconnect B Cabling Information

| Local Device | Local Port | Connection | Remote Device | Remote Port |
| --- | --- | --- | --- | --- |
| Cisco UCS fabric interconnect-B | Mgmt0 | GbE | GbE management switch | Any |
| | Eth1/25 | 10GbE | Cisco Nexus 9000-B | Eth1/25 |
| | Eth1/26 | 10GbE | Cisco Nexus 9000-A | Eth1/26 |
| | Eth1/1 | 10GbE | Cisco UCS Chassis FEX-B | IOM 1/1 |
| | Eth1/2 | 10GbE | Cisco UCS Chassis FEX-B | IOM 1/2 |
| | Eth1/3 | 10GbE | Cisco UCS Chassis FEX-B | IOM 1/3 |
| | Eth1/4 | 10GbE | Cisco UCS Chassis FEX-B | IOM 1/4 |
| | fc29 | 8 Gbps | Cisco MDS 9148S-B | fc1/1 |
| | fc30 | 8 Gbps | Cisco MDS 9148S-B | fc1/2 |
| | fc31 | 8 Gbps | Cisco MDS 9148S-B | fc1/3 |
| | fc32 | 8 Gbps | Cisco MDS 9148S-B | fc1/4 |
| | L1 | GbE | Cisco UCS fabric interconnect-A | L1 |
| | L2 | GbE | Cisco UCS fabric interconnect-A | L2 |

Cisco UCS C-Series servers can be connected to the FI directly or through a FEX; the system validation included Cisco UCS C-Series servers directly connected to the Fabric Interconnects.

Table 18 Connectivity with Direct Connect to FI

| Local Device | Local Port | Connection | Remote Device | Remote Port |
| --- | --- | --- | --- | --- |
| Cisco UCS C-Series Server 1 with Cisco VIC | Port 0 | 10GbE | Cisco UCS Fabric Interconnect 6248-A | Port 5 |
| | Port 1 | 10GbE | Cisco UCS Fabric Interconnect 6248-B | Port 6 |
| Cisco UCS C-Series Server 2 with Cisco VIC | Port 0 | 10GbE | Cisco UCS Fabric Interconnect 6248-A | Port 7 |
| | Port 1 | 10GbE | Cisco UCS Fabric Interconnect 6248-B | Port 8 |

Table 19 Cisco Nexus Rack FEX A Example Connectivity Option

| Local Device | Local Port | Connection | Remote Device | Remote Port |
| --- | --- | --- | --- | --- |
| Cisco Nexus 2232PP FEX A | Fabric Port 1/1 | 10GbE | Cisco UCS fabric interconnect A | Port 5 |
| | Fabric Port 1/2 | 10GbE | Cisco UCS fabric interconnect A | Port 6 |

Table 20 Cisco Nexus Rack FEX B

| Local Device | Local Port | Connection | Remote Device | Remote Port |
| --- | --- | --- | --- | --- |
| Cisco Nexus 2232PP FEX B | Fabric Port 1/1 | 10GbE | Cisco UCS fabric interconnect B | Port 5 |
| | Fabric Port 1/2 | 10GbE | Cisco UCS fabric interconnect B | Port 6 |
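Because the cabling tables list each link once from each end, a link that appears in one table should appear mirrored in the table for the remote device. The following Python sketch illustrates that cross-check for the Ethernet links between the Cisco Nexus 9000 switches and the fabric interconnects (Tables 7, 8, 16, and 17). The short device names (`N9K-A`, `FI-A`, and so on) and the function name are assumptions made for this example.

```python
# (local device, local port) -> (remote device, remote port),
# transcribed from Tables 7, 8, 16 and 17. Device names are shorthand.
LINKS = {
    ("N9K-A", "Eth1/25"): ("FI-A", "Eth1/25"),
    ("N9K-A", "Eth1/26"): ("FI-B", "Eth1/26"),
    ("N9K-A", "Eth1/49"): ("N9K-B", "Eth1/49"),
    ("N9K-A", "Eth1/50"): ("N9K-B", "Eth1/50"),
    ("N9K-B", "Eth1/25"): ("FI-B", "Eth1/25"),
    ("N9K-B", "Eth1/26"): ("FI-A", "Eth1/26"),
    ("N9K-B", "Eth1/49"): ("N9K-A", "Eth1/49"),
    ("N9K-B", "Eth1/50"): ("N9K-A", "Eth1/50"),
    ("FI-A", "Eth1/25"): ("N9K-A", "Eth1/25"),
    ("FI-A", "Eth1/26"): ("N9K-B", "Eth1/26"),
    ("FI-B", "Eth1/25"): ("N9K-B", "Eth1/25"),
    ("FI-B", "Eth1/26"): ("N9K-A", "Eth1/26"),
}


def asymmetric_links(links):
    """Return every entry whose remote end does not point back at it."""
    return sorted(
        (local, remote)
        for local, remote in links.items()
        if links.get(remote) != local
    )


print(asymmetric_links(LINKS) or "every link is listed consistently from both ends")
```

The same structure could be extended with the Fibre Channel links from Tables 11 through 17 if those tables are transcribed in the same form.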

Cisco Nexus 9000 Initial Configuration Setup

This section provides the details for the initial Cisco Nexus 9000 Switch setup.

Cisco Nexus A

To set up the initial configuration for the first Cisco Nexus switch complete the following steps:

On initial boot and connection to the serial or console port of the switch, the NX-OS setup should automatically start and attempt to enter Power on Auto Provisioning.

Abort Auto Provisioning and continue with normal setup ?(yes/no)[n]: y

---- System Admin Account Setup ----

Do you want to enforce secure password standard (yes/no) [y]:

Enter the password for "admin":

Confirm the password for "admin":

---- Basic System Configuration Dialog VDC: 1 ----

This setup utility will guide you through the basic configuration of the system. Setup configures only enough connectivity for management of the system.

Please register Cisco Nexus9000 Family devices promptly with your supplier. Failure to register may affect response times for initial service calls. Nexus9000 devices must be registered to receive entitled support services.

Press Enter at anytime to skip a dialog. Use ctrl-c at anytime to skip the remaining dialogs.

Would you like to enter the basic configuration dialog (yes/no): y

Create another login account (yes/no) [n]: n

Configure read-only SNMP community string (yes/no) [n]:

Configure read-write SNMP community string (yes/no) [n]:

Enter the switch name : <<var_nexus_A_hostname>>

Continue with Out-of-band (mgmt0) management configuration? (yes/no) [y]:

Mgmt0 IPv4 address : <<var_nexus_A_mgmt0_ip>>

Mgmt0 IPv4 netmask : <<var_nexus_A_mgmt0_netmask>>

Configure the default gateway? (yes/no) [y]:

IPv4 address of the default gateway : <<var_nexus_A_mgmt0_gw>>

Configure advanced IP options? (yes/no) [n]:

Enable the telnet service? (yes/no) [n]:

Enable the ssh service? (yes/no) [y]:

Type of ssh key you would like to generate (dsa/rsa) [rsa]:

Number of rsa key bits <1024-2048> [1024]: 2048

Configure the ntp server? (yes/no) [n]: y

NTP server IPv4 address : <<var_global_ntp_server_ip>>

Configure default interface layer (L3/L2) [L2]:

Configure default switchport interface state (shut/noshut) [noshut]:

Configure CoPP system profile (strict/moderate/lenient/dense/skip) [strict]:

The following configuration will be applied:

password strength-check

switchname <<var_nexus_A_hostname>>

vrf context management

ip route 0.0.0.0/0 <<var_nexus_A_mgmt0_gw>>

exit

no feature telnet

ssh key rsa 2048 force

feature ssh

ntp server <<var_global_ntp_server_ip>>

system default switchport

no system default switchport shutdown

copp profile strict

interface mgmt0

ip address <<var_nexus_A_mgmt0_ip>> <<var_nexus_A_mgmt0_netmask>>

no shutdown

Would you like to edit the configuration? (yes/no) [n]:

Use this configuration and save it? (yes/no) [y]:

[########################################] 100% Copy complete.

Cisco Nexus B

To set up the initial configuration for the second Cisco Nexus switch complete the following steps:

On initial boot and connection to the serial or console port of the switch, the NX-OS setup should automatically start and attempt to enter Power on Auto Provisioning.

Abort Auto Provisioning and continue with normal setup ?(yes/no)[n]: y

---- System Admin Account Setup ----

Do you want to enforce secure password standard (yes/no) [y]:

Enter the password for "admin":

Confirm the password for "admin":

---- Basic System Configuration Dialog VDC: 1 ----

This setup utility will guide you through the basic configuration of the system. Setup configures only enough connectivity for management of the system.

Please register Cisco Nexus9000 Family devices promptly with your supplier. Failure to register may affect response times for initial service calls. Nexus9000 devices must be registered to receive entitled support services.

Press Enter at anytime to skip a dialog. Use ctrl-c at anytime to skip the remaining dialogs.

Would you like to enter the basic configuration dialog (yes/no): y

Create another login account (yes/no) [n]: n

Configure read-only SNMP community string (yes/no) [n]:

Configure read-write SNMP community string (yes/no) [n]:

Enter the switch name : <<var_nexus_B_hostname>>

Continue with Out-of-band (mgmt0) management configuration? (yes/no) [y]:

Mgmt0 IPv4 address : <<var_nexus_B_mgmt0_ip>>

Mgmt0 IPv4 netmask : <<var_nexus_B_mgmt0_netmask>>

Configure the default gateway? (yes/no) [y]:

IPv4 address of the default gateway : <<var_nexus_B_mgmt0_gw>>

Configure advanced IP options? (yes/no) [n]:

Enable the telnet service? (yes/no) [n]:

Enable the ssh service? (yes/no) [y]:

Type of ssh key you would like to generate (dsa/rsa) [rsa]:

Number of rsa key bits <1024-2048> [1024]: 2048

Configure the ntp server? (yes/no) [n]: y

NTP server IPv4 address : <<var_global_ntp_server_ip>>

Configure default interface layer (L3/L2) [L2]:

Configure default switchport interface state (shut/noshut) [noshut]:

Configure CoPP system profile (strict/moderate/lenient/dense/skip) [strict]:

The following configuration will be applied:

password strength-check

switchname <<var_nexus_B_hostname>>

vrf context management

ip route 0.0.0.0/0 <<var_nexus_B_mgmt0_gw>>

exit

no feature telnet

ssh key rsa 2048 force

feature ssh

ntp server <<var_global_ntp_server_ip>>

system default switchport

no system default switchport shutdown

copp profile strict

interface mgmt0

ip address <<var_nexus_B_mgmt0_ip>> <<var_nexus_B_mgmt0_netmask>>

no shutdown

Would you like to edit the configuration? (yes/no) [n]:

Use this configuration and save it? (yes/no) [y]:

[########################################] 100% Copy complete.
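The `<<var_...>>` placeholders in the dialogs above stand for site-specific values. If the applied configuration is kept as a text template, a small script can fill in the placeholders and refuse to continue when a value is missing. The following Python sketch shows one way to do that. The function name, the template excerpt, and all example values (documentation-range addresses and a made-up hostname) are assumptions for illustration only.

```python
import re

# Matches placeholders written as <<var_name>>, as used in this guide.
PLACEHOLDER = re.compile(r"<<(var_[A-Za-z0-9_-]+)>>")


def render(template, values):
    """Replace every <<var_name>> placeholder; fail loudly on a missing value."""
    missing = sorted(set(PLACEHOLDER.findall(template)) - set(values))
    if missing:
        raise KeyError("no value for: " + ", ".join(missing))
    return PLACEHOLDER.sub(lambda match: values[match.group(1)], template)


# Excerpt of the configuration summary shown by the setup dialog.
TEMPLATE = """\
switchname <<var_nexus_A_hostname>>
vrf context management
ip route 0.0.0.0/0 <<var_nexus_A_mgmt0_gw>>
ntp server <<var_global_ntp_server_ip>>
interface mgmt0
ip address <<var_nexus_A_mgmt0_ip>> <<var_nexus_A_mgmt0_netmask>>
no shutdown
"""

# Illustrative values only; none of these come from the validated design.
VALUES = {
    "var_nexus_A_hostname": "n9k-a",
    "var_nexus_A_mgmt0_ip": "192.0.2.11",
    "var_nexus_A_mgmt0_netmask": "255.255.255.0",
    "var_nexus_A_mgmt0_gw": "192.0.2.1",
    "var_global_ntp_server_ip": "192.0.2.123",
}

print(render(TEMPLATE, VALUES))
```

Raising an error on a missing value, rather than leaving the placeholder in place, avoids pasting a literal `<<var_...>>` string into a switch.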

Enable the Appropriate Cisco Nexus 9000 Features and Settings

Cisco Nexus 9000 A and Cisco Nexus 9000 B

To enable the IP switching features and set the default spanning tree behaviors, complete the following steps:

1. On each Cisco Nexus 9000, enter configuration mode:

config terminal

2. Use the following commands to enable the necessary features:

feature udld

feature lacp

feature vpc

3. Configure spanning tree and save the running configuration to start-up:

spanning-tree port type network default

spanning-tree port type edge bpduguard default

spanning-tree port type edge bpdufilter default

copy run start

Create VLANs for VersaStack IP Traffic

Cisco Nexus 9000 A and Cisco Nexus 9000 B

To create the necessary virtual local area networks (VLANs), complete the following step on both switches:

From the configuration mode, run the following commands:

vlan <<var_ib-mgmt_vlan_id>>

name IB-MGMT-VLAN

vlan <<var_native_vlan_id>>

name Native-VLAN

vlan <<var_nfs_vlan_id>>

name NFS-VLAN

vlan <<var_vmotion_vlan_id>>

name vMotion-VLAN

vlan <<var_vm-traffic_vlan_id>>

name VM-Traffic-VLAN

exit

copy run start
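The VLAN stanza above repeats the same two-line pattern for each VLAN, so it can be generated from a single name-to-ID mapping that is then reused on both switches. The following Python sketch is one minimal way to do this. The function name and the example VLAN IDs are assumptions; the range check covers only the 802.1Q limit of 1 to 4094 and does not know about platform-reserved VLANs.

```python
def vlan_config(vlans):
    """Build 'vlan <id>' / 'name <name>' lines from a name -> ID mapping."""
    ids = list(vlans.values())
    if len(ids) != len(set(ids)):
        raise ValueError("two VLAN names share the same ID")
    lines = []
    for name, vlan_id in vlans.items():
        if not 1 <= vlan_id <= 4094:
            raise ValueError(f"{name}: VLAN ID {vlan_id} is outside 1-4094")
        lines += [f"vlan {vlan_id}", f"name {name}"]
    return lines


# Example IDs are placeholders chosen for this sketch.
VLANS = {
    "IB-MGMT-VLAN": 11,
    "Native-VLAN": 2,
    "NFS-VLAN": 3172,
    "vMotion-VLAN": 3173,
    "VM-Traffic-VLAN": 3174,
}

print("\n".join(vlan_config(VLANS)))
```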

Configure Virtual Port Channel Domain

Cisco Nexus 9000 A

1. From the global configuration mode, create a new vPC domain:

vpc domain <<var_nexus_vpc_domain_id>>

2. Make Cisco Nexus 9000A the primary vPC peer by defining a low priority value:

role priority 10

3. Use the management interfaces on the supervisors of the Cisco Nexus 9000s to establish a keepalive link:

peer-keepalive destination <<var_nexus_B_mgmt0_ip>> source <<var_nexus_A_mgmt0_ip>>

4. Enable the following features for this vPC domain:

peer-switch

delay restore 150

peer-gateway

ip arp synchronize

auto-recovery

copy run start

Cisco Nexus 9000 B

To configure vPCs for switch B, complete the following steps:

1. From the global configuration mode, create a new vPC domain:

vpc domain <<var_nexus_vpc_domain_id>>

2. Make Cisco Nexus 9000 B the secondary vPC peer by defining a higher priority value than on Cisco Nexus 9000 A:

role priority 20

3. Use the management interfaces on the supervisors of the Cisco Nexus 9000s to establish a keepalive link:

peer-keepalive destination <<var_nexus_A_mgmt0_ip>> source <<var_nexus_B_mgmt0_ip>>

4. Enable the following features for this vPC domain:

peer-switch

delay restore 150

peer-gateway

ip arp synchronize

auto-recovery

copy run start
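The two vPC domain stanzas differ only in the role priority and in the direction of the peer-keepalive link, where each switch names its own mgmt0 address as the source and the peer's address as the destination. The following Python sketch generates both stanzas from one function so that the mirrored addresses cannot drift apart. The function name, the domain ID, and the addresses are illustrative assumptions.

```python
# Domain-level features enabled on both peers in the steps above.
VPC_FEATURES = [
    "peer-switch",
    "delay restore 150",
    "peer-gateway",
    "ip arp synchronize",
    "auto-recovery",
]


def vpc_domain(domain_id, role_priority, local_ip, peer_ip):
    """Return the vPC domain commands for one peer."""
    lines = [
        f"vpc domain {domain_id}",
        f"role priority {role_priority}",
        f"peer-keepalive destination {peer_ip} source {local_ip}",
    ]
    return lines + VPC_FEATURES


# Example mgmt0 addresses from the documentation range, not from the guide.
MGMT_A, MGMT_B = "192.0.2.11", "192.0.2.12"

config_a = vpc_domain(10, 10, MGMT_A, MGMT_B)  # lower value: primary peer
config_b = vpc_domain(10, 20, MGMT_B, MGMT_A)  # higher value: secondary peer

print("\n".join(config_a))
print()
print("\n".join(config_b))
```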

Configure Network Interfaces for the VPC Peer Links

Cisco Nexus 9000 A

1. Define a port description for the interfaces connecting to VPC Peer <<var_nexus_B_hostname>>.

interface Eth1/49

description VPC Peer <<var_nexus_B_hostname>>:1/49

interface Eth1/50

description VPC Peer <<var_nexus_B_hostname>>:1/50

2. Apply a port channel to both VPC Peer links and bring up the interfaces.

interface Eth1/49,Eth1/50

channel-group 10 mode active

no shutdown

3. Define a description for the port-channel connecting to <<var_nexus_B_hostname>>.

interface Po10

description vPC peer-link

4. Make the port-channel a switchport, and configure a trunk that allows the in-band management, NFS, vMotion, and VM traffic VLANs and sets the native VLAN.

switchport

switchport mode trunk

switchport trunk native vlan <<var_native_vlan_id>>

switchport trunk allowed vlan <<var_ib-mgmt_vlan_id>>,<<var_nfs_vlan_id>>,<<var_vmotion_vlan_id>>,<<var_vm-traffic_vlan_id>>

5. Make this port-channel the VPC peer link and bring it up.

vpc peer-link

no shutdown

copy run start

Cisco Nexus 9000 B

1. Define a port description for the interfaces connecting to VPC Peer <<var_nexus_A_hostname>>.

interface Eth1/49

description VPC Peer <<var_nexus_A_hostname>>:1/49

interface Eth1/50

description VPC Peer <<var_nexus_A_hostname>>:1/50

2. Apply a port channel to both VPC Peer links and bring up the interfaces.

interface Eth1/49,Eth1/50

channel-group 10 mode active

no shutdown

3. Define a description for the port-channel connecting to <<var_nexus_A_hostname>>.

interface Po10

description vPC peer-link

4. Make the port-channel a switchport, and configure a trunk that allows the in-band management, NFS, vMotion, and VM traffic VLANs and sets the native VLAN.

switchport

switchport mode trunk

switchport trunk native vlan <<var_native_vlan_id>>

switchport trunk allowed vlan <<var_ib-mgmt_vlan_id>>,<<var_nfs_vlan_id>>,<<var_vmotion_vlan_id>>,<<var_vm-traffic_vlan_id>>

5. Make this port-channel the VPC peer link and bring it up.

vpc peer-link

no shutdown

copy run start
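The allowed-VLAN list on the peer link must match on both switches, and it is easy to introduce a stray separator or a duplicate when the list is typed by hand. The following Python sketch builds the command from a list of VLAN IDs. The function name and the example IDs are assumptions for illustration.

```python
def allowed_vlan_command(vlan_ids):
    """Return one 'switchport trunk allowed vlan' line with a clean ID list."""
    unique = sorted(set(vlan_ids))
    if not unique:
        raise ValueError("at least one VLAN ID is required")
    return "switchport trunk allowed vlan " + ",".join(str(v) for v in unique)


# Example IDs only; a duplicate is included to show that it is dropped.
print(allowed_vlan_command([11, 3172, 3173, 3174, 11]))
```

Generating the line once and pasting it on both switches keeps the two ends of the peer link identical.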

Configure Network Interfaces to Cisco UCS Fabric Interconnect

Cisco Nexus 9000 A

1. Define a description for the port-channel connecting to <<var_ucs_clustername>>-A.

interface Po13

description <<var_ucs_clustername>>-A

2. Make the port-channel a switchport, and configure a trunk that allows the in-band management, NFS, vMotion, and VM traffic VLANs and sets the native VLAN.

switchport

switchport mode trunk

switchport trunk native vlan <<var_native_vlan_id>>

switchport trunk allowed vlan <<var_ib-mgmt_vlan_id>>,<<var_nfs_vlan_id>>,<<var_vmotion_vlan_id>>,<<var_vm-traffic_vlan_id>>

3. Make the port channel and associated interfaces spanning tree edge ports.

spanning-tree port type edge trunk

4. Set the MTU to be 9216 to support jumbo frames.

mtu 9216

5. Make this a VPC port-channel and bring it up.

vpc 13

no shutdown

6. Define a port description for the interface connecting to <<var_ucs_clustername>>-A.

interface Eth1/25

description <<var_ucs_clustername>>-A:1/25

7. Apply it to a port channel and bring up the interface.

channel-group 13 force mode active

no shutdown

8. Define a description for the port-channel connecting to <<var_ucs_clustername>>-B

interface Po14

description <<var_ucs_clustername>>-B

9. Make the port-channel a switchport, and configure a trunk that allows the in-band management, NFS, vMotion, and VM traffic VLANs and sets the native VLAN.

switchport

switchport mode trunk

switchport trunk native vlan <<var_native_vlan_id>>

switchport trunk allowed vlan <<var_ib-mgmt_vlan_id>>,<<var_nfs_vlan_id>>,<<var_vmotion_vlan_id>>,<<var_vm-traffic_vlan_id>>

10. Make the port channel and associated interfaces spanning tree edge ports.

spanning-tree port type edge trunk

11. Set the MTU to be 9216 to support jumbo frames.

mtu 9216

12. Make this a VPC port-channel and bring it up.

vpc 14

no shutdown

13. Define a port description for the interface connecting to <<var_ucs_clustername>>-B

interface Eth1/26

description <<var_ucs_clustername>>-B:1/26

14. Apply it to a port channel and bring up the interface.

channel-group 14 force mode active

no shutdown

copy run start

Configure Network Interfaces to Cisco UCS Fabric Interconnect

Cisco Nexus 9000 B

1. Define a description for the port-channel connecting to <<var_ucs_clustername>>-B

interface Po14

description <<var_ucs_clustername>>-B

2. Make the port-channel a switchport, and configure a trunk that allows the in-band management, NFS, vMotion, and VM traffic VLANs and sets the native VLAN.

switchport

switchport mode trunk

switchport trunk native vlan <<var_native_vlan_id>>

switchport trunk allowed vlan <<var_ib-mgmt_vlan_id>>,<<var_nfs_vlan_id>>,<<var_vmotion_vlan_id>>,<<var_vm-traffic_vlan_id>>

3. Make the port channel and associated interfaces spanning tree edge ports.

spanning-tree port type edge trunk

4. Set the MTU to be 9216 to support jumbo frames.

mtu 9216

5. Make this a VPC port-channel and bring it up.

vpc 14

no shutdown

6. Define a port description for the interface connecting to <<var_ucs_clustername>>-B

interface Eth1/25

description <<var_ucs_clustername>>-B:1/25

7. Apply it to a port channel and bring up the interface.

channel-group 14 force mode active

no shutdown

8. Define a description for the port-channel connecting to <<var_ucs_clustername>>-A

interface Po13

description <<var_ucs_clustername>>-A

9. Make the port-channel a switchport, and configure a trunk that allows the in-band management, NFS, vMotion, and VM traffic VLANs and sets the native VLAN.

switchport

switchport mode trunk

switchport trunk native vlan <<var_native_vlan_id>>

switchport trunk allowed vlan <<var_ib-mgmt_vlan_id>>,<<var_nfs_vlan_id>>,<<var_vmotion_vlan_id>>,<<var_vm-traffic_vlan_id>>

10. Make the port channel and associated interfaces spanning tree edge ports.

spanning-tree port type edge trunk

11. Set the MTU to be 9216 to support jumbo frames.

mtu 9216

12. Make this a VPC port-channel and bring it up.

vpc 13

no shutdown

13. Define a port description for the interface connecting to <<var_ucs_clustername>>-A

interface Eth1/26

description <<var_ucs_clustername>>-A:1/26

14. Apply it to a port channel and bring up the interface.

channel-group 13 force mode active

no shutdown

copy run start
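In the steps above, vPC 13 always leads to fabric interconnect A and vPC 14 to fabric interconnect B, but the member interface differs per switch: Eth1/25 is the "straight" link and Eth1/26 is the "crossed" link. The following Python sketch captures that mapping in two small tables and generates the per-switch description and channel-group lines from it. The table names, the function name, and the cluster name are assumptions; the trunk, spanning-tree, and MTU lines are intentionally left out.

```python
# vPC / port-channel ID -> fabric interconnect it serves (same on both switches).
VPC_TO_FI = {13: "A", 14: "B"}

# Member interface per switch and vPC ID, taken from the steps above.
MEMBERS = {
    "A": {13: "Eth1/25", 14: "Eth1/26"},
    "B": {14: "Eth1/25", 13: "Eth1/26"},
}


def uplink_config(switch, cluster):
    """Return description and channel-group lines for one Nexus switch."""
    lines = []
    for vpc_id, member in sorted(MEMBERS[switch].items()):
        fi = VPC_TO_FI[vpc_id]
        port = member.replace("Eth", "", 1)  # "Eth1/25" -> "1/25"
        lines += [
            f"interface Po{vpc_id}",
            f"description {cluster}-{fi}",
            f"vpc {vpc_id}",
            f"interface {member}",
            f"description {cluster}-{fi}:{port}",
            f"channel-group {vpc_id} force mode active",
            "no shutdown",
        ]
    return lines


# "ucs" is a made-up cluster name standing in for <<var_ucs_clustername>>.
for switch in ("A", "B"):
    print(f"! Cisco Nexus 9000 {switch}")
    print("\n".join(uplink_config(switch, "ucs")))
```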

Management Plane Access for Servers and Virtual Machines

There are multiple ways to configure the uplink from the Cisco Nexus 9000 switches to your separate management switch. The two examples below show how the configuration could be set up; however, because networking configurations vary, we recommend that you consult your local network personnel for the optimal configuration.

In the first example, a single top-of-rack switch is used, and both Cisco Nexus 9000 Series switches connect to it through their Eth1/48 ports. The Cisco Nexus 9000 Series switches use a 1 GbE SFP to terminate the Cat-5 copper cable from the top-of-rack switch; note that connection types can vary. The Cisco Nexus 9000 switches are configured with the interface-VLAN option, and each switch has a unique IP address for its VLAN interface. The traffic to be routed from the Cisco Nexus 9000 is the in-band management traffic, so the example uses VLAN 11 and sets the port to access mode. The top-of-rack switch also has its ports set to access mode.

In the second example, the top-of-rack switch has a port channel configured, and the Cisco Nexus 9000 switches use a port channel to maximize upstream connectivity.

Cisco Nexus 9000 A and B using Interface VLAN Example 1

On each Cisco Nexus switch, enter the commands shown for that switch. Note that the VLAN interface IP address is different on each switch.

Cisco Nexus 9000 A

int Eth1/48

description IB-management-access

switchport mode access

spanning-tree port type network

switchport access vlan <<var_ib-mgmt_vlan_id>>

no shut

feature interface-vlan

int Vlan <<var_ib-mgmt_vlan_id>>

ip address <<var_switch_A_inband_mgmt_ip_address>>/<<var_inband_mgmt_netmask>>

no shut

ip route 0.0.0.0/0 <<var_inband_mgmt_gateway>>

copy run start

Cisco Nexus 9000 B

int Eth1/48

description IB-management-access

switchport mode access

spanning-tree port type network

switchport access vlan <<var_ib-mgmt_vlan_id>>

no shut

feature interface-vlan

int Vlan <<var_ib-mgmt_vlan_id>>

ip address <<var_switch_B_inband_mgmt_ip_address>>/<<var_inband_mgmt_netmask>>

no shut

ip route 0.0.0.0/0 <<var_inband_mgmt_gateway>>

copy run start
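Example 1 depends on each switch having its own VLAN interface address in the same in-band management subnet as the default gateway. The following Python sketch uses the standard `ipaddress` module to check a planned set of addresses before they are entered. The function name and the addresses are illustrative assumptions.

```python
import ipaddress


def check_svi_plan(svi_a, svi_b, gateway):
    """Return a list of problems with the planned VLAN interface addresses."""
    a = ipaddress.ip_interface(svi_a)
    b = ipaddress.ip_interface(svi_b)
    gw = ipaddress.ip_address(gateway)
    problems = []
    if a.ip == b.ip:
        problems.append("both switches use the same VLAN interface address")
    if a.network != b.network:
        problems.append("the VLAN interfaces are in different subnets")
    if gw not in a.network:
        problems.append("the gateway is outside the VLAN interface subnet")
    if gw in (a.ip, b.ip):
        problems.append("the gateway address collides with a VLAN interface")
    return problems


# Documentation-range addresses, used here only as an example.
print(check_svi_plan("192.0.2.11/24", "192.0.2.12/24", "192.0.2.1") or "plan looks consistent")
```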

Cisco Nexus 9000 A and B using Port Channel Example 2

To enable management access across the IP switching environment leveraging port channel in config mode, complete the following steps:

1. Define a description for the port-channel connecting to management switch.

interface po11

description IB-MGMT

2. Configure the port channel as an access port carrying the in-band management VLAN traffic.

switchport

switchport mode access

switchport access vlan <<var_ib-mgmt_vlan_id>>

3. Make the port channel and associated interfaces normal spanning tree ports.

spanning-tree port type normal

4. Make this a VPC port-channel and bring it up.

vpc 11

no shutdown

5. Define a port description for the interface connecting to the management plane.

interface Eth1/48

description IB-MGMT-SWITCH_uplink

6. Apply it to a port channel and bring up the interface.

channel-group 11 force mode active

no shutdown

7. Save the running configuration to start-up on both Cisco Nexus 9000s, and run the following commands to check the port and port-channel status.

copy run start

sh int eth1/48 br

sh port-channel summary

Cisco MDS 9148S Initial Configuration Setup

These steps provide the details of the initial Cisco MDS Fibre Channel Switch setup. We are creating a cluster zone to enable FlashSystem V9000 Controllers (AC2) to Storage Enclosure (AE2) communication.

Cisco MDS A

To set up the initial configuration for the first Cisco MDS switch complete the following step:

On initial boot and connection to the serial or console port of the switch, the Cisco MDS setup should automatically start and attempt to enter Power on Auto Provisioning.

Abort Auto Provisioning and continue with normal setup ?(yes/no)[n]: y

---- System Admin Account Setup ----

Do you want to enforce secure password standard (yes/no) [y]:

Enter the password for "admin":

Confirm the password for "admin":

---- Basic System Configuration Dialog ----

This setup utility will guide you through the basic configuration of the system. Setup configures only enough connectivity for management of the system.

Please register Cisco MDS 9000 Family devices promptly with your supplier. Failure to register may affect response times for initial service calls. MDS devices must be registered to receive entitled support services.

Press Enter at anytime to skip a dialog. Use ctrl-c at anytime to skip the remaining dialogs.

Would you like to enter the basic configuration dialog (yes/no): y

Create another login account (yes/no) [n]:

Configure read-only SNMP community string (yes/no) [n]:

Configure read-write SNMP community string (yes/no) [n]:

Enter the switch name : <<var_MDS_A_hostname>>

Continue with Out-of-band (mgmt0) management configuration? (yes/no) [y]:

Mgmt0 IPv4 address : <<var_MDS_A_mgmt0_ip>>

Mgmt0 IPv4 netmask : <<var_MDS_A_mgmt0_netmask>>

Configure the default gateway? (yes/no) [y]:

IPv4 address of the default gateway : <<var_MDS_A_mgmt0_gw>>

Configure advanced IP options? (yes/no) [n]:

Enable the ssh service? (yes/no) [y]:

Type of ssh key you would like to generate (dsa/rsa) [rsa]:

Number of rsa key bits <1024-2048> [1024]: 2048

Enable the telnet service? (yes/no) [n]:

Configure congestion/no_credit drop for fc interfaces? (yes/no) [y]:

Enter the type of drop to configure congestion/no_credit drop? (con/no) [c]:

Enter milliseconds in multiples of 10 for congestion-drop for port mode F in range (<100-500>/default), where default is 500. [d]:

Congestion-drop for port mode E must be greater than or equal to Congestion-drop for port mode F. Hence, Congestion drop for port mode E will be set as default.

Enable the http-server? (yes/no) [y]:

Configure clock? (yes/no) [n]:

Configure timezone? (yes/no) [n]: y

Enter timezone config [PST/MST/CST/EST] : <<var_timezone>>

Enter Hrs offset from UTC [-23:+23] : <<var_UTC_offset>>

Enter Minutes offset from UTC [0-59] :

Configure summertime? (yes/no) [n]:

Configure the ntp server? (yes/no) [n]: y

NTP server IPv4 address : <<var_global_ntp_server_ip>>

Configure default switchport interface state (shut/noshut) [shut]:

Configure default switchport trunk mode (on/off/auto) [on]:

Configure default switchport port mode F (yes/no) [n]:

Configure default zone policy (permit/deny) [deny]:

Enable full zoneset distribution? (yes/no) [n]:

Configure default zone mode (basic/enhanced) [basic]:

The following configuration will be applied:

password strength-check

switchname <<var_MDS_A_hostname>>

interface mgmt0

ip address <<var_MDS_A_mgmt0_ip>> <<var_MDS_A_mgmt0_netmask>>

no shutdown

ip default-gateway <<var_MDS_A_mgmt0_gw>>

ssh key rsa 2048 force

feature ssh

no feature telnet

system timeout congestion-drop default mode F

system timeout congestion-drop default mode E

feature http-server

clock timezone PST 0 0

ntp server <<var_global_ntp_server_ip>>

system default switchport shutdown

system default switchport trunk mode on

no system default zone default-zone permit

no system default zone distribute full

no system default zone mode enhanced

Would you like to edit the configuration? (yes/no) [n]:

Use this configuration and save it? (yes/no) [y]:

[########################################] 100% Copy complete.

Cisco MDS B

To set up the initial configuration for the second Cisco MDS switch complete the following step:

On initial boot and connection to the serial or console port of the switch, the Cisco MDS setup should automatically start and attempt to enter Power on Auto Provisioning.

Abort Auto Provisioning and continue with normal setup ?(yes/no)[n]: y

---- System Admin Account Setup ----

Do you want to enforce secure password standard (yes/no) [y]:

Enter the password for "admin":

Confirm the password for "admin":

---- Basic System Configuration Dialog ----

This setup utility will guide you through the basic configuration of the system. Setup configures only enough connectivity for management of the system.

Please register Cisco MDS 9000 Family devices promptly with your supplier. Failure to register may affect response times for initial service calls. MDS devices must be registered to receive entitled support services.

Press Enter at anytime to skip a dialog. Use ctrl-c at anytime to skip the remaining dialogs.

Would you like to enter the basic configuration dialog (yes/no): y

Create another login account (yes/no) [n]:

Configure read-only SNMP community string (yes/no) [n]:

Configure read-write SNMP community string (yes/no) [n]:

Enter the switch name : <<var_MDS_B_hostname>>

Continue with Out-of-band (mgmt0) management configuration? (yes/no) [y]:

Mgmt0 IPv4 address : <<var_MDS_B_mgmt0_ip>>

Mgmt0 IPv4 netmask : <<var_MDS_B_mgmt0_netmask>>

Configure the default gateway? (yes/no) [y]:

IPv4 address of the default gateway : <<var_MDS_B_mgmt0_gw>>

Configure advanced IP options? (yes/no) [n]:

Enable the ssh service? (yes/no) [y]:

Type of ssh key you would like to generate (dsa/rsa) [rsa]:

Number of rsa key bits <1024-2048> [1024]: 2048

Enable the telnet service? (yes/no) [n]:

Configure congestion/no_credit drop for fc interfaces? (yes/no) [y]:

Enter the type of drop to configure congestion/no_credit drop? (con/no) [c]:

Enter milliseconds in multiples of 10 for congestion-drop for port mode F in range (<100-500>/default), where default is 500. [d]:

Congestion-drop for port mode E must be greater than or equal to Congestion-drop for port mode F. Hence, Congestion drop for port mode E will be set as default.

Enable the http-server? (yes/no) [y]:

Configure clock? (yes/no) [n]:

Configure timezone? (yes/no) [n]: y

Enter timezone config [PST/MST/CST/EST] : <<var_timezone>>

Enter Hrs offset from UTC [-23:+23] : <<var_UTC_offset>>

Enter Minutes offset from UTC [0-59] :

Configure summertime? (yes/no) [n]:

Configure the ntp server? (yes/no) [n]: y

NTP server IPv4 address : <<var_global_ntp_server_ip>>

Configure default switchport interface state (shut/noshut) [shut]:

Configure default switchport trunk mode (on/off/auto) [on]:

Configure default switchport port mode F (yes/no) [n]:

Configure default zone policy (permit/deny) [deny]:

Enable full zoneset distribution? (yes/no) [n]:

Configure default zone mode (basic/enhanced) [basic]:

The following configuration will be applied:

password strength-check

switchname <<var_MDS_B_hostname>>

interface mgmt0

ip address <<var_MDS_B_mgmt0_ip>> <<var_MDS_B_mgmt0_netmask>>

no shutdown

ip default-gateway <<var_MDS_B_mgmt0_gw>>

ssh key rsa 2048 force

feature ssh

no feature telnet

system timeout congestion-drop default mode F

system timeout congestion-drop default mode E

feature http-server

clock timezone PST 0 0

ntp server <<var_global_ntp_server_ip>>

system default switchport shutdown

system default switchport trunk mode on

no system default zone default-zone permit

no system default zone distribute full

no system default zone mode enhanced

Would you like to edit the configuration? (yes/no) [n]:

Use this configuration and save it? (yes/no) [y]:

[########################################] 100% Copy complete.
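Before entering the mgmt0 address, netmask, and default gateway in the setup dialog, it can help to confirm that the three values are mutually consistent. The sketch below uses Python's standard `ipaddress` module for that check. The function name `check_mgmt0` and the example addresses are assumptions for illustration and do not come from the validated design.

```python
import ipaddress


def check_mgmt0(ip: str, netmask: str, gateway: str) -> ipaddress.IPv4Interface:
    """Validate a mgmt0 address/netmask pair against its default gateway."""
    iface = ipaddress.IPv4Interface(f"{ip}/{netmask}")
    gw = ipaddress.IPv4Address(gateway)
    net = iface.network
    if gw not in net:
        raise ValueError(f"gateway {gw} is outside the mgmt0 subnet {net}")
    if gw == iface.ip:
        raise ValueError("gateway and mgmt0 address must differ")
    if iface.ip in (net.network_address, net.broadcast_address):
        raise ValueError(f"{iface.ip} is the network or broadcast address of {net}")
    return iface


if __name__ == "__main__":
    # Hypothetical values standing in for <<var_MDS_B_mgmt0_ip>>,
    # <<var_MDS_B_mgmt0_netmask>>, and <<var_MDS_B_mgmt0_gw>>.
    print(check_mgmt0("192.0.2.12", "255.255.255.0", "192.0.2.1"))
```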

Enable Appropriate Cisco MDS Features and Settings

Cisco MDS A and B

To enable the required features on both switches, enter the following commands:

Config

feature npiv

feature fport-channel-trunk

Enable VSANs and Create Port Channel and Cluster Zone

Cisco MDS A

1. Create the port channel that will be uplinked to the fabric interconnect.

interface port-channel 1

2. Create a VSAN for host connectivity and assign interfaces to it. Ports assigned to the port channel will also be in this VSAN. Bring the ports up.

vsan database

vsan <<var_vsan_a_id>>

vsan <<var_vsan_a_id>> interface fc1/5-8

vsan <<var_vsan_a_id>> interface po1

interface fc1/5-8

no shut

3. Create a VSAN for Cluster Interconnect and assign interfaces to it.

vsan database

vsan <<var_vsan_a_clus_id>>

vsan <<var_vsan_a_clus_id>> interface fc1/9-16

interface fc1/9-16

no shut
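Because a Fibre Channel interface has a single port VSAN, the interface ranges given to the host VSAN and to the cluster VSAN should not share a port; a later `vsan ... interface` assignment would move a shared port out of the earlier VSAN. The following sketch checks two ranges for overlap before they are entered. The helper names `expand` and `overlap` are hypothetical, and the parser only handles the simple `fcS/A-B` form used in this guide.

```python
import re

# Accepts "fc1/5" or "fc1/5-8"; other NX-OS range syntaxes are not handled here.
RANGE = re.compile(r"^fc(\d+)/(\d+)(?:-(\d+))?$")


def expand(port_range: str) -> set:
    """Expand 'fc1/5-8' into {'fc1/5', 'fc1/6', 'fc1/7', 'fc1/8'}."""
    m = RANGE.match(port_range.strip())
    if not m:
        raise ValueError(f"unsupported interface range: {port_range!r}")
    slot, first = int(m.group(1)), int(m.group(2))
    last = int(m.group(3)) if m.group(3) else first
    if last < first:
        raise ValueError(f"range end precedes start: {port_range!r}")
    return {f"fc{slot}/{n}" for n in range(first, last + 1)}


def overlap(range_a: str, range_b: str) -> list:
    """Return the interfaces that appear in both ranges, in port order."""
    shared = expand(range_a) & expand(range_b)
    return sorted(shared, key=lambda p: [int(x) for x in re.findall(r"\d+", p)])


if __name__ == "__main__":
    print(overlap("fc1/5-8", "fc1/9-16"))   # disjoint ranges
    print(overlap("fc1/5-8", "fc1/8-16"))   # fc1/8 would be claimed twice
```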

4. Activate the port channel.

The port channel ports will not be connected until the Fabric Interconnect is configured.

interface port-channel 1

channel mode active

switchport rate-mode dedicated

5. Assign interfaces to the port channel and save the config.

interface fc1/1-4

port-license acquire

channel-group 1 force

no shutdown

exit

copy run start

You can run “show int br” to validate that interfaces fc1/1-4 are in the proper VSAN.

6. Run show flogi database to obtain the WWPNs for the FlashSystem V9000 ports in the cluster VSAN. Copy the 8 WWPNs for the IBM FlashSystem V9000 system to create a zone for the cluster in Step 9.

VersaStack-MDS-A# sh flogi database vsan 201

--------------------------------------------------------------------------------

INTERFACE VSAN FCID PORT NAME NODE NAME

--------------------------------------------------------------------------------

fc1/9 201 0x940000 50:05:07:68:0c:11:22:71 50:05:07:68:0c:00:22:71

fc1/10 201 0x940300 50:05:07:68:0c:31:22:71 50:05:07:68:0c:00:22:71

fc1/11 201 0x940100 50:05:07:68:0c:11:22:67 50:05:07:68:0c:00:22:67

fc1/12 201 0x940200 50:05:07:68:0c:31:22:67 50:05:07:68:0c:00:22:67

fc1/13 201 0x940400 50:05:07:60:5e:83:cc:81 50:05:07:60:5e:83:cc:80

fc1/14 201 0x940600 50:05:07:60:5e:83:cc:91 50:05:07:60:5e:83:cc:80

fc1/15 201 0x940500 50:05:07:60:5e:83:cc:a1 50:05:07:60:5e:83:cc:bf

fc1/16 201 0x940700 50:05:07:60:5e:83:cc:b1 50:05:07:60:5e:83:cc:bf

Total number of flogi = 8.
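Copying eight WWPNs by hand is error-prone, so the port names can instead be pulled out of saved `show flogi database` output. The sketch below is one way to do that in Python, assuming the output has been captured to text and that each row is whitespace-separated in the column order shown above (interface, VSAN, FCID, port name, node name). The function name `parse_flogi` is hypothetical, and the sample rows are taken from the output in this step.

```python
import re

WWN = r"(?:[0-9a-fA-F]{2}:){7}[0-9a-fA-F]{2}"
# One data row: interface, VSAN, FCID, port name (WWPN), node name (WWNN).
ROW = re.compile(
    rf"^(fc\d+/\d+)\s+(\d+)\s+(0x[0-9a-fA-F]{{6}})\s+({WWN})\s+({WWN})$"
)


def parse_flogi(text: str) -> list:
    """Return one dict per FLOGI row; headers and separators are skipped."""
    rows = []
    for line in text.splitlines():
        m = ROW.match(line.strip())
        if m:
            iface, vsan, fcid, pwwn, nwwn = m.groups()
            rows.append({
                "interface": iface,
                "vsan": int(vsan),
                "fcid": fcid,
                "port_name": pwwn.lower(),
                "node_name": nwwn.lower(),
            })
    return rows


SAMPLE = """\
INTERFACE VSAN FCID PORT NAME NODE NAME
--------------------------------------------------------------------------------
fc1/9 201 0x940000 50:05:07:68:0c:11:22:71 50:05:07:68:0c:00:22:71
fc1/13 201 0x940400 50:05:07:60:5e:83:cc:81 50:05:07:60:5e:83:cc:80
Total number of flogi = 8.
"""

if __name__ == "__main__":
    for row in parse_flogi(SAMPLE):
        print(row["interface"], row["port_name"])
```

Keeping the interface alongside each WWPN preserves the switch-port-to-storage-port mapping needed when the WWPNs are recorded.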

7. Record all FlashSystem V9000 Fabric A WWPNs that belong to the host and cluster VSANs in the variable table below. The switch port on which each WWPN is logged in identifies the corresponding FlashSystem V9000 Fibre Channel port.

Table 21 V9000 Fabric A WWPNs

| Source | Switch/Port | Variable | WWPN |

| FC_SE-BE1-fabricA |

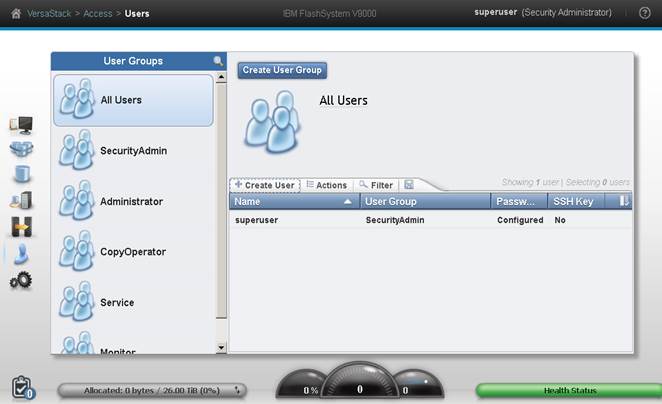

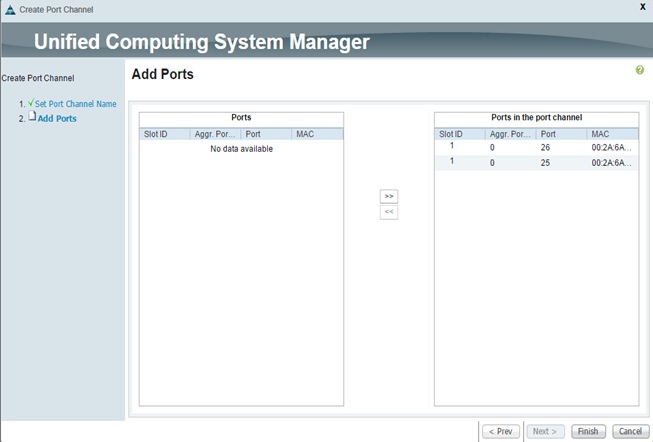

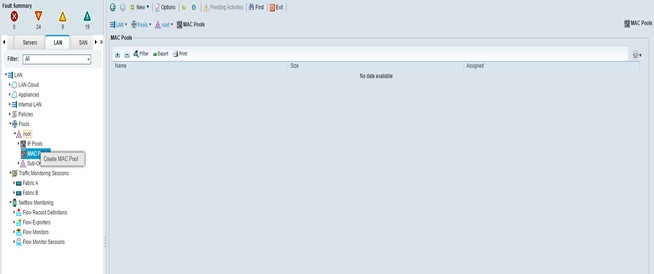

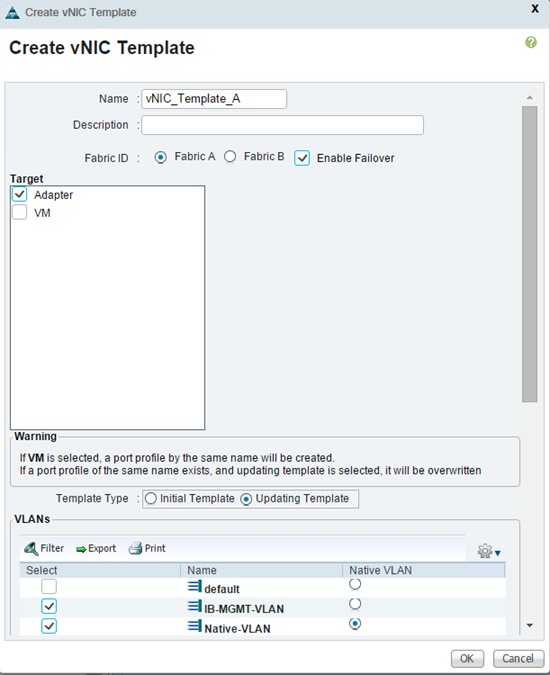

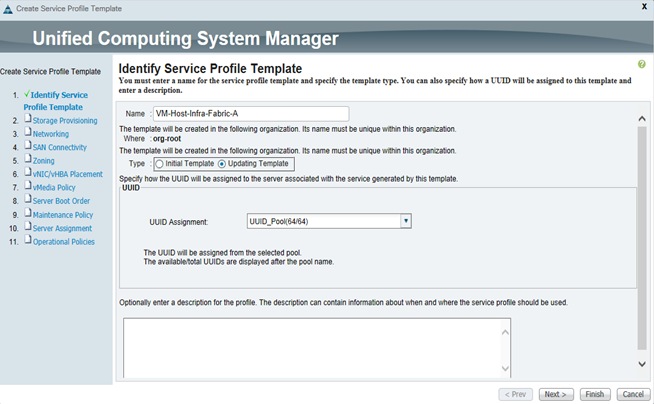

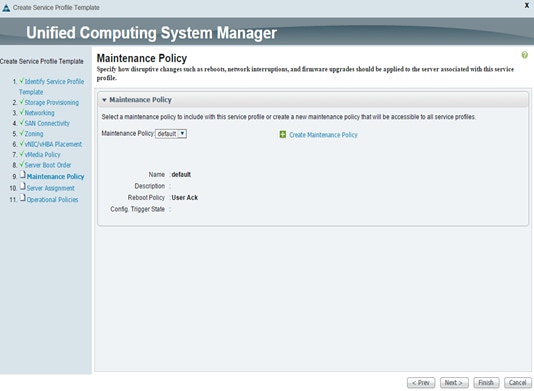

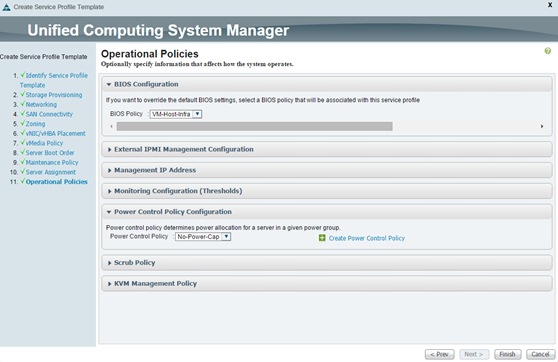

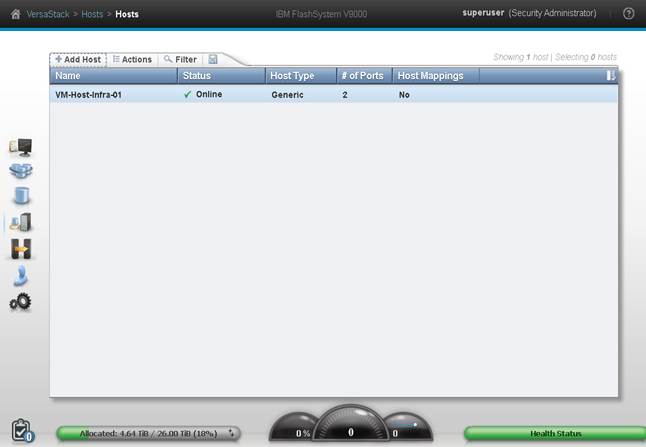

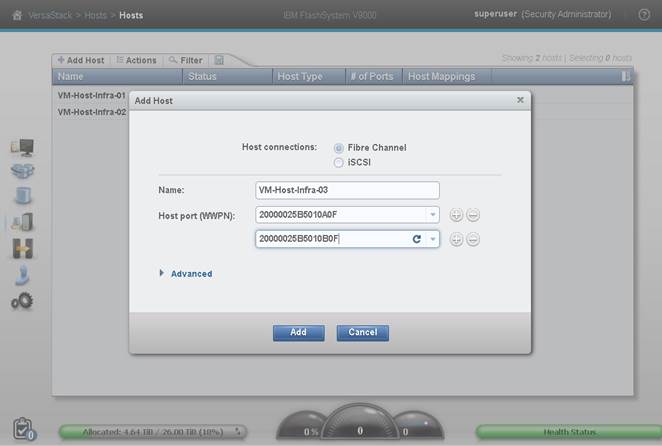

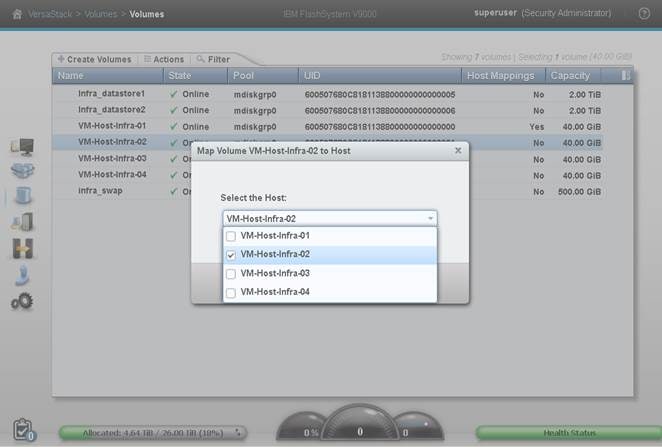

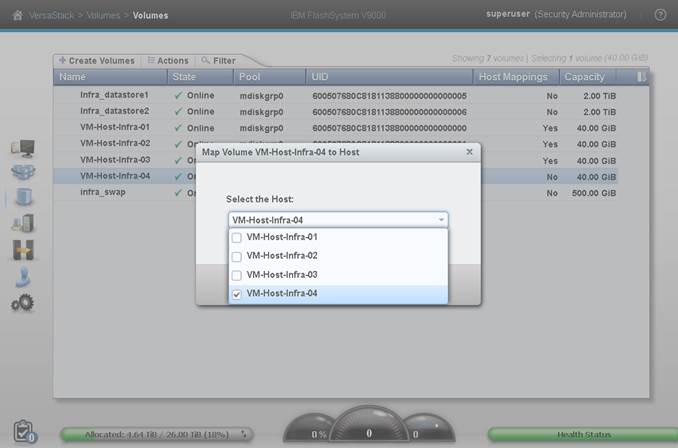

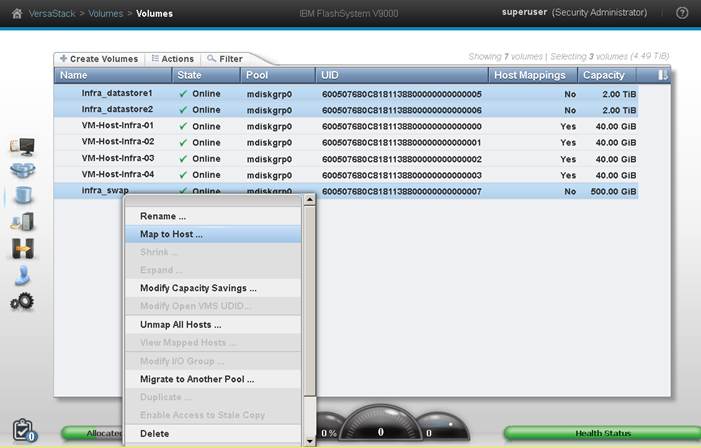

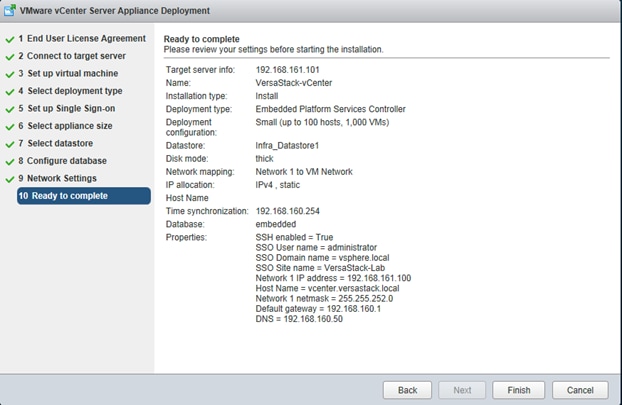

Switch A FC13 |