Guidelines for using RoCEv2 Protocol in the Native ENIC driver on ESXi

General Guidelines and Limitations:

- Cisco UCS Manager release 4.2(3b) supports RoCEv2 only on ESXi 7.0 U3.

-

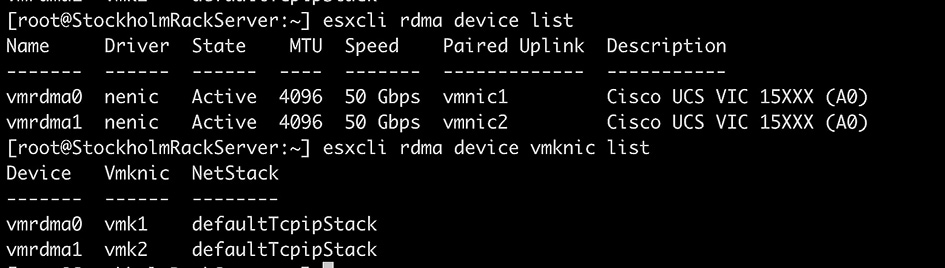

Cisco recommends you check UCS Hardware and Software Compatibility specific to your UCS Manager release to determine support for ESXi. RoCEv2 on ESXi is supported on UCS B-Series and C-Series servers with Cisco UCS VIC 15000 Series and later adapters.

- RoCEv2 on ESXi is not supported on UCS VIC 1200, 1300, and 1400 Series adapters.

- RDMA on ESXi nENIC currently supports only ESXi NVMe that is part of the ESXi kernel. The current implementation does not support ESXi user-space RDMA applications.

- Multiple MAC addresses and multiple VLANs are supported only on VIC 15000 Series adapters.

- RoCEv2 supports a maximum of two RoCEv2-enabled interfaces per adapter.

- PVRDMA, vSAN over RDMA, and iSER are not supported.

- The CoS setting is not supported on UCS Manager.
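The guidelines above can be expressed as a simple pre-deployment check. The following Python sketch is illustrative only: the `AdapterPlan` data model, the `check_plan` function, and the feature names are hypothetical and are not part of any Cisco or VMware tool. It assumes that VIC series can be compared numerically, and that the ESXi 7.0 U3 restriction applies because the target release is UCS Manager 4.2(3b).

```python
from dataclasses import dataclass
from typing import List

# Limits taken from the guidelines above. Everything else here is hypothetical.
SUPPORTED_ESXI = "7.0 U3"              # applies to UCS Manager release 4.2(3b)
MIN_SUPPORTED_VIC_SERIES = 15000       # VIC 15000 Series and later
MAX_ROCE_INTERFACES_PER_ADAPTER = 2
UNSUPPORTED_FEATURES = {"pvrdma", "vsan over rdma", "iser"}


@dataclass
class AdapterPlan:
    """Hypothetical description of one adapter in a planned deployment."""
    vic_series: int                    # for example 15000 or 1400
    esxi_version: str                  # for example "7.0 U3"
    roce_interfaces: int               # number of RoCEv2-enabled interfaces
    features: frozenset = frozenset()  # planned RDMA features, free-form names


def check_plan(plan: AdapterPlan) -> List[str]:
    """Return a list of guideline violations; an empty list means none found."""
    problems = []
    if plan.esxi_version != SUPPORTED_ESXI:
        problems.append(f"ESXi {plan.esxi_version} is not {SUPPORTED_ESXI}")
    if plan.vic_series < MIN_SUPPORTED_VIC_SERIES:
        problems.append(f"VIC {plan.vic_series} Series is not supported")
    if plan.roce_interfaces > MAX_ROCE_INTERFACES_PER_ADAPTER:
        problems.append(
            f"{plan.roce_interfaces} RoCEv2 interfaces exceed the limit of "
            f"{MAX_ROCE_INTERFACES_PER_ADAPTER} per adapter"
        )
    # Compare feature names case-insensitively against the unsupported set.
    for feature in sorted(plan.features):
        if feature.lower() in UNSUPPORTED_FEATURES:
            problems.append(f"{feature} is not supported")
    return problems


if __name__ == "__main__":
    plan = AdapterPlan(vic_series=1400, esxi_version="7.0 U3", roce_interfaces=3)
    for problem in check_plan(plan):
        print(problem)
```

A check like this does not replace the UCS Hardware and Software Compatibility lookup. It only encodes the limits listed in this section.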

Downgrade Limitations:

- Cisco recommends that you remove the RoCEv2 configuration before downgrading to any release that does not support RoCEv2.
