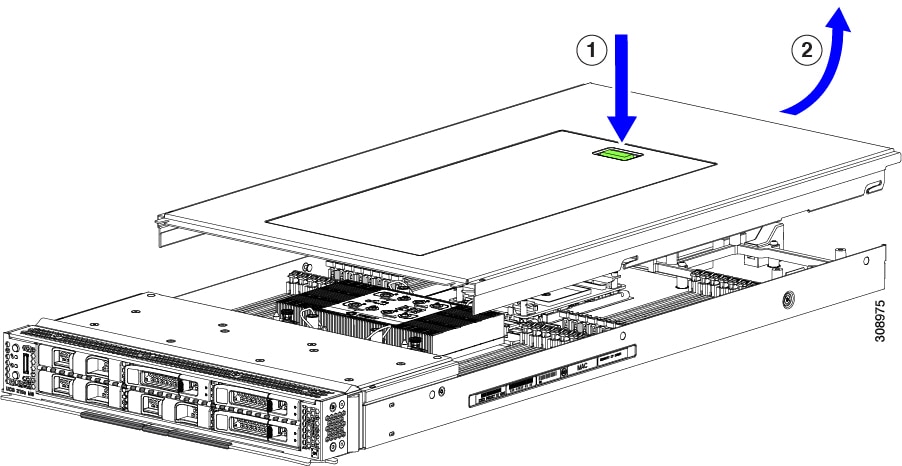

Removing and Installing the Compute Node Cover

The top cover for the Cisco UCS X210c M7 compute node can be removed to allow access to internal components, some of which are field-replaceable. The green button on the top cover releases the cover so that it can be removed from the compute node.

Removing a Compute Node Cover

To remove the cover of the UCS X210c M7 compute node, follow these steps:

Procedure

Step 1: Press and hold the button down (1, in the figure below).

Step 2: While holding the back end of the cover, slide it back, then pull it up (2). By sliding the cover back, you enable the front edge to clear the metal lip on the rear of the front mezzanine module.

Installing a Compute Node Cover

Use this task to install a removed top cover for the UCS X210c M7 compute node.

Procedure

Step 1: Insert the cover at an angle so that it hits the stoppers on the base.

Step 2: Lower the compute node's cover until it reaches the bottom.

Step 3: Keeping the compute node's cover flat, slide it forward until the release button clicks.
