Segment Routing Flexible Algorithm (Flex Algo) is a traffic engineering solution that is part of the SR architecture. It allows for user-defined algorithms, where the IGP computes paths for unicast traffic based on a user-defined combination of metric type and constraints (the FA definition).
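As a rough illustration of what an FA definition does, the sketch below prunes links that violate a constraint and then runs a shortest-path computation on the selected metric type. It is a minimal sketch under stated assumptions, not the IGP's implementation: the `FlexAlgoDefinition` and `Link` classes, the affinity name `red`, and the three-node topology are hypothetical, links are modeled as unidirectional, and per-node Flex Algo participation is left out.

```python
import heapq
from dataclasses import dataclass


@dataclass(frozen=True)
class FlexAlgoDefinition:
    """Hypothetical model of an FA definition: metric type plus a constraint."""
    algo: int                                   # user-defined algorithm number
    metric_type: str                            # key into Link.metrics, e.g. "latency"
    exclude_affinities: frozenset = frozenset() # links with any of these are pruned


@dataclass
class Link:
    """Hypothetical unidirectional link with per-metric-type costs."""
    src: str
    dst: str
    metrics: dict
    affinities: frozenset = frozenset()


def flex_algo_spf(links, fad, root):
    """Dijkstra over the links allowed by `fad`, costed by fad.metric_type."""
    adjacency = {}
    for link in links:
        if link.affinities & fad.exclude_affinities:
            continue  # constraint violated: link is not in this topology
        if fad.metric_type not in link.metrics:
            continue  # link does not advertise the required metric
        cost = link.metrics[fad.metric_type]
        adjacency.setdefault(link.src, []).append((link.dst, cost))

    distance = {root: 0}
    heap = [(0, root)]
    while heap:
        dist, node = heapq.heappop(heap)
        if dist > distance.get(node, float("inf")):
            continue  # stale heap entry
        for neighbor, cost in adjacency.get(node, []):
            candidate = dist + cost
            if candidate < distance.get(neighbor, float("inf")):
                distance[neighbor] = candidate
                heapq.heappush(heap, (candidate, neighbor))
    return distance


# Hypothetical topology: the direct R1->R3 link is fast but colored "red".
links = [
    Link("R1", "R2", {"igp": 10, "latency": 30}),
    Link("R2", "R3", {"igp": 10, "latency": 30}),
    Link("R1", "R3", {"igp": 10, "latency": 5}, frozenset({"red"})),
]
lowest_latency = FlexAlgoDefinition(128, "latency")
lowest_latency_no_red = FlexAlgoDefinition(129, "latency", frozenset({"red"}))

print(flex_algo_spf(links, lowest_latency, "R1"))
print(flex_algo_spf(links, lowest_latency_no_red, "R1"))
```

The two definitions share a metric type but differ in the constraint, so the same physical network yields two different logical topologies and two different costs to R3.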

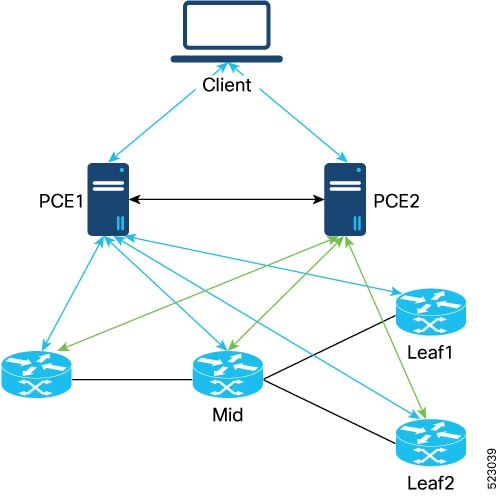

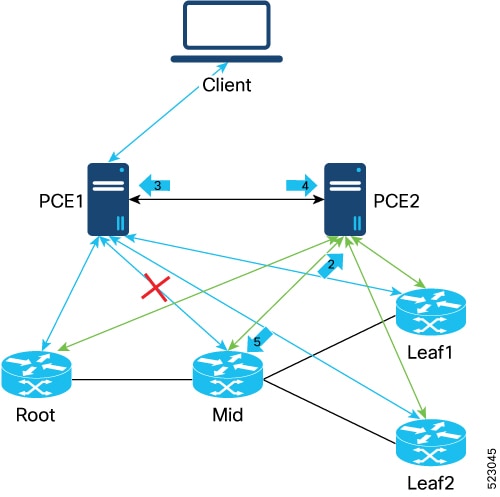

The Flexible Algorithm Constraint for Tree-SID Path Computation feature expands the traffic engineering options for multicast transport. It allows the SR-PCE to compute a P2MP tree that adheres to the definition and topology of a user-defined Flex Algo.
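One way to picture a P2MP tree that follows a Flex Algo topology is as the union of the Flex Algo shortest paths from the root to each leaf. The sketch below assumes that view and is not a description of the SR-PCE's actual algorithm. The function names and the `predecessor` map are hypothetical; the map stands in for the result of a Flex Algo shortest-path computation, and its values mirror the tree rooted at R1 in the verification output of this section (R1 to R3, then R3 to R4 and R5).

```python
def build_replication_tree(root, leaves, predecessor):
    """Merge the root-to-leaf paths into a parent -> children replication map.

    `predecessor[node]` is the upstream neighbor of `node` on the shortest
    path from `root`; a missing entry raises KeyError (leaf unreachable).
    """
    children = {}
    for leaf in leaves:
        node = leaf
        while node != root:
            parent = predecessor[node]
            branches = children.setdefault(parent, [])
            if node not in branches:
                branches.append(node)  # shared segments are added only once
            node = parent
    return children


def node_roles(root, children):
    """Classify each node on the tree as Ingress, Transit, or Egress."""
    nodes = {root}
    for parent, branches in children.items():
        nodes.add(parent)
        nodes.update(branches)
    roles = {}
    for node in nodes:
        if node == root:
            roles[node] = "Ingress"
        elif node in children:
            roles[node] = "Transit"   # replicates toward at least one branch
        else:
            roles[node] = "Egress"
    return roles


# Assumed shortest-path predecessors on the Flex Algo 128 topology.
predecessor = {"R3": "R1", "R4": "R3", "R5": "R3"}
tree = build_replication_tree("R1", ["R4", "R5"], predecessor)
roles = node_roles("R1", tree)
print(tree)
print(roles)
```

R3 appears once as a child of R1 even though both leaves are reached through it, which is why it becomes the single replication point for R4 and R5.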

In addition, a tree based on a Flex Algo constraint provides link-level fast re-route (FRR), guaranteeing that the primary and backup paths chosen by a node along the tree follow the same traffic engineering constraints specified by the Flex Algo.

The signaling includes Flex Algo information to enable FRR. The SR-PCE uses the Central Controller Instructions (CCI) object format defined in RFC 9050 to signal both the link or node address and the Flex Algo node-SID. This allows a node in the tree to program, as its backup, the backup path associated with the Flex Algo node-SID.
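The effect of signaling the node-SID alongside the next-hop address can be sketched as a lookup: the node resolves each replication hop through the forwarding entry of the Flex Algo node-SID and inherits that entry's primary and backup interfaces. The sketch below is illustrative only. The `FibEntry` and `ReplicationHop` classes and the `resolve_hop` function are hypothetical names, not the CCI object encoding or any router API; the sample values are taken from the PE node 1 verification output in this section.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class FibEntry:
    """Hypothetical view of a unicast entry for a Flex Algo node-SID label."""
    primary_if: str
    primary_nh: str
    backup_if: Optional[str] = None
    backup_nh: Optional[str] = None


@dataclass(frozen=True)
class ReplicationHop:
    """Hypothetical view of one outgoing branch signaled by the SR-PCE."""
    out_label: int   # Tree-SID label to push or swap
    next_hop: str    # downstream node address
    node_sid: int    # Flex Algo node-SID label of the downstream node


def resolve_hop(hop, fib):
    """Resolve a branch through the node-SID entry, reusing its backup path."""
    entry = fib[hop.node_sid]
    return {
        "out_label": hop.out_label,
        "next_hop": hop.next_hop,
        "primary_if": entry.primary_if,
        "backup_if": entry.backup_if,
        "frr_ready": entry.backup_if is not None,
    }


# Values as shown for label 128103 and Tree-SID label 15600 on PE node 1.
fib = {
    128103: FibEntry("TenGigE0/0/0/1", "20.1.3.3", "TenGigE0/0/0/0", "20.1.2.2"),
}
hop = ReplicationHop(out_label=15600, next_hop="1.1.1.103", node_sid=128103)
resolved = resolve_hop(hop, fib)
print(resolved)
```

Because both interfaces come from the Flex Algo 128 entry, the backup chosen for the tree is the one the IGP computed inside that Flex Algo topology.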

SR-PCE computes the P2MP tree with an associated Flex-Algo constraint as described below:

Verification

SR-PCE

The following output shows the paths calculated at the SR-PCE for the tree rooted at PE node 1, with leaf nodes at 4 and 5:

RP/0/RP0/CPU0:R6# show pce lsp p2mp root ipv4 1.1.1.101

Tree: sr_p2mp_root_1.1.1.101_tree_id_524289, Root: 1.1.1.101 ID: 524289

PCC: 1.1.1.101

Label: 15600

Operational: up Admin: up Compute: Yes

Local LFA FRR: Enabled

Flex-Algo: 128 Metric Type: LATENCY

Metric Type: TE

Transition count: 1

Uptime: 00:47:27 (since Wed Feb 07 12:50:52 PST 2024)

Destinations: 1.1.1.104, 1.1.1.105

Nodes:

Node[0]: 1.1.1.101 (R1)

Delegation: PCC

PLSP-ID: 6

Role: Ingress

Hops:

Incoming: 15600 CC-ID: 2

Outgoing: 15600 CC-ID: 2 (1.1.1.103!) N-SID: 128103 [R3]

Node[1]: 1.1.1.103 (R3)

Delegation: PCC

PLSP-ID: 1

Role: Transit

Hops:

Incoming: 15600 CC-ID: 1

Outgoing: 15600 CC-ID: 1 (1.1.1.104!) N-SID: 128104 [R4]

Outgoing: 15600 CC-ID: 1 (1.1.1.105!) N-SID: 128105 [R5]

Node[2]: 1.1.1.104 (R4)

Delegation: PCC

PLSP-ID: 2

Role: Egress

Hops:

Incoming: 15600 CC-ID: 3

Node[3]: 1.1.1.105 (R5)

Delegation: PCC

PLSP-ID: 3

Role: Egress

Hops:

Incoming: 15600 CC-ID: 4

Tree: sr_p2mp_root_1.1.1.101_tree_id_524290, Root: 1.1.1.101 ID: 524290

PCC: 1.1.1.101

Label: 15599

Operational: up Admin: up Compute: Yes

Local LFA FRR: Enabled

Flex-Algo: 128 Metric Type: LATENCY

Metric Type: TE

Transition count: 1

Uptime: 00:47:27 (since Wed Feb 07 12:50:52 PST 2024)

Destinations: 1.1.1.104, 1.1.1.105

Nodes:

Node[0]: 1.1.1.101 (R1)

Delegation: PCC

PLSP-ID: 7

Role: Ingress

Hops:

Incoming: 15599 CC-ID: 2

Outgoing: 15599 CC-ID: 2 (1.1.1.103!) N-SID: 128103 [R3]

Node[1]: 1.1.1.103 (R3)

Delegation: PCC

PLSP-ID: 2

Role: Transit

Hops:

Incoming: 15599 CC-ID: 1

Outgoing: 15599 CC-ID: 1 (1.1.1.104!) N-SID: 128104 [R4]

Outgoing: 15599 CC-ID: 1 (1.1.1.105!) N-SID: 128105 [R5]

Node[2]: 1.1.1.104 (R4)

Delegation: PCC

PLSP-ID: 3

Role: Egress

Hops:

Incoming: 15599 CC-ID: 3

Node[3]: 1.1.1.105 (R5)

Delegation: PCC

PLSP-ID: 4

Role: Egress

Hops:

Incoming: 15599 CC-ID: 4

The following output shows the paths calculated at the SR-PCE for the tree rooted at PE node 4, with leaf nodes at 1 and 5:

RP/0/RP0/CPU0:R6# show pce lsp p2mp root ipv4 1.1.1.104

Tree: sr_p2mp_root_1.1.1.104_tree_id_524289, Root: 1.1.1.104 ID: 524289

PCC: 1.1.1.104

Label: 15598

Operational: up Admin: up Compute: Yes

Local LFA FRR: Enabled

Flex-Algo: 128 Metric Type: LATENCY

Metric Type: TE

Transition count: 1

Uptime: 00:47:03 (since Wed Feb 07 12:51:20 PST 2024)

Destinations: 1.1.1.101, 1.1.1.105

Nodes:

Node[0]: 1.1.1.104 (R4)

Delegation: PCC

PLSP-ID: 1

Role: Ingress

Hops:

Incoming: 15598 CC-ID: 1

Outgoing: 15598 CC-ID: 1 (1.1.1.105!) N-SID: 128105 [R5]

Outgoing: 15598 CC-ID: 1 (1.1.1.102!) N-SID: 128102 [R2]

Node[1]: 1.1.1.105 (R5)

Delegation: PCC

PLSP-ID: 5

Role: Egress

Hops:

Incoming: 15598 CC-ID: 2

Node[2]: 1.1.1.102 (R2)

Delegation: PCC

PLSP-ID: 1

Role: Transit

Hops:

Incoming: 15598 CC-ID: 3

Outgoing: 15598 CC-ID: 3 (1.1.1.101!) N-SID: 128101 [R1]

Node[3]: 1.1.1.101 (R1)

Delegation: PCC

PLSP-ID: 8

Role: Egress

Hops:

Incoming: 15598 CC-ID: 4

The following output shows the paths calculated at the SR-PCE for the tree rooted at PE node 5, with leaf nodes at 1 and 4:

RP/0/RP0/CPU0:R6# show pce lsp p2mp root ipv4 1.1.1.105

Tree: sr_p2mp_root_1.1.1.105_tree_id_524289, Root: 1.1.1.105 ID: 524289

PCC: 1.1.1.105

Label: 15597

Operational: up Admin: up Compute: Yes

Local LFA FRR: Enabled

Flex-Algo: 128 Metric Type: LATENCY

Metric Type: TE

Transition count: 1

Uptime: 00:46:58 (since Wed Feb 07 12:51:28 PST 2024)

Destinations: 1.1.1.101, 1.1.1.104

Nodes:

Node[0]: 1.1.1.105 (R5)

Delegation: PCC

PLSP-ID: 2

Role: Ingress

Hops:

Incoming: 15597 CC-ID: 1

Outgoing: 15597 CC-ID: 1 (1.1.1.104!) N-SID: 128104 [R4]

Outgoing: 15597 CC-ID: 1 (1.1.1.103!) N-SID: 128103 [R3]

Node[1]: 1.1.1.104 (R4)

Delegation: PCC

PLSP-ID: 4

Role: Egress

Hops:

Incoming: 15597 CC-ID: 2

Node[2]: 1.1.1.103 (R3)

Delegation: PCC

PLSP-ID: 3

Role: Transit

Hops:

Incoming: 15597 CC-ID: 3

Outgoing: 15597 CC-ID: 3 (1.1.1.101!) N-SID: 128101 [R1]

Node[3]: 1.1.1.101 (R1)

Delegation: PCC

PLSP-ID: 9

Role: Egress

Hops:

Incoming: 15597 CC-ID: 4
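When checking several trees, it can help to reduce this output to a list of hops. The sketch below is one possible way to do that and rests on assumptions: the regular expressions are derived only from the output format shown above and may not match other releases, and the function name `parse_tree_hops` is hypothetical. The `!` suffix on the next-hop address is read as the fast re-route marker, following the legend printed by the SR-TE P2MP policy output in this section.

```python
import re

# Patterns inferred from the sample output in this section (an assumption).
NODE_RE = re.compile(r"Node\[\d+\]:\s+(\S+)\s+\((\S+)\)")
OUT_RE = re.compile(
    r"Outgoing:\s+(\d+)\s+CC-ID:\s+\d+\s+\((\S+?)(!?)\)"
    r"\s+N-SID:\s+(\d+)\s+\[(\S+)\]"
)


def parse_tree_hops(text):
    """Return one dict per 'Outgoing' line, tagged with the node it belongs to."""
    hops = []
    current_node = None
    for line in text.splitlines():
        node_match = NODE_RE.search(line)
        if node_match:
            current_node = node_match.group(2)  # hostname in parentheses
            continue
        out_match = OUT_RE.search(line)
        if out_match and current_node is not None:
            hops.append({
                "from": current_node,
                "to": out_match.group(5),
                "label": int(out_match.group(1)),
                "node_sid": int(out_match.group(4)),
                "frr": out_match.group(3) == "!",
            })
    return hops


SAMPLE = """
Node[0]: 1.1.1.101 (R1)
Role: Ingress
Incoming: 15600 CC-ID: 2
Outgoing: 15600 CC-ID: 2 (1.1.1.103!) N-SID: 128103 [R3]
Node[1]: 1.1.1.103 (R3)
Role: Transit
Incoming: 15600 CC-ID: 1
Outgoing: 15600 CC-ID: 1 (1.1.1.104!) N-SID: 128104 [R4]
Outgoing: 15600 CC-ID: 1 (1.1.1.105!) N-SID: 128105 [R5]
Node[2]: 1.1.1.104 (R4)
Role: Egress
Incoming: 15600 CC-ID: 3
"""

hops = parse_tree_hops(SAMPLE)
for hop in hops:
    print(hop)
```

Egress nodes contribute no entries because they have no `Outgoing` lines, so the result lists only the replication branches.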

PE Node 1 (Root)

The following output from PE node 1 shows the mVPN routes that are learned in the VRF sample-mvpn1:

RP/0/RP0/CPU0:R1# show bgp vrf sample-mvpn1 ipv4 mvpn

BGP VRF sample-mvpn1, state: Active

BGP Route Distinguisher: 1.1.1.1:2

VRF ID: 0x6000000a

BGP router identifier 1.1.1.1, local AS number 64000

Non-stop routing is enabled

BGP table state: Active

Table ID: 0x0 RD version: 14

BGP table nexthop route policy:

BGP main routing table version 14

BGP NSR Initial initsync version 2 (Reached)

BGP NSR/ISSU Sync-Group versions 0/0

Status codes: s suppressed, d damped, h history, * valid, > best

i - internal, r RIB-failure, S stale, N Nexthop-discard

Origin codes: i - IGP, e - EGP, ? - incomplete

Network Next Hop Metric LocPrf Weight Path

Route Distinguisher: 1.1.1.1:2 (default for vrf sample-mvpn1)

Route Distinguisher Version: 14

*> [1][1.1.1.101]/40 0.0.0.0 0 i

*>i[1][1.1.1.104]/40 1.1.1.104 100 0 i

*>i[1][1.1.1.105]/40 1.1.1.105 100 0 i

*> [3][32][3.3.3.1][32][232.0.0.1][1.1.1.101]/120

0.0.0.0 0 i

*>i[4][3][1.1.1.1:2][32][3.3.3.1][32][232.0.0.1][1.1.1.101][1.1.1.104]/224

1.1.1.104 100 0 i

*>i[4][3][1.1.1.1:2][32][3.3.3.1][32][232.0.0.1][1.1.1.101][1.1.1.105]/224

1.1.1.105 100 0 i

*>i[7][1.1.1.1:2][64000][32][3.3.3.1][32][232.0.0.1]/184

1.1.1.104 100 0 i

Processed 7 prefixes, 7 paths

The following output from PE node 1 shows the mVPN context information for VRF sample-mvpn1:

RP/0/RP0/CPU0:R1# show mvpn vrf sample-mvpn1 context

MVPN context information for VRF sample-mvpn1 (0x55842fbf9bd0)

RD: 1.1.1.1:2 (Valid, IID 0x1), VPN-ID: 0:0

Import Route-targets : 2

RT:1.1.1.101:0, BGP-AD

RT:1.1.1.101:10, BGP-AD

BGP Auto-Discovery Enabled (I-PMSI added)

SR P2MP Core-tree data:

MDT Name: TRmdtsample-mvpn1, Handle: 0x20008044, idb: 0x55842fc0e240

MTU: 1376, MaxAggr: 255, SW_Int: 30, AN_Int: 60

RPF-ID: 1, C:0, O:1, D:0, CP:0

Static Type : - / -

Def MDT ID: 524289 (0x55842f9ad558), added: 1, HLI: 0x80001, Cfg: 1/0

Part MDT ID: 0 (0x0), added: 0, HLI: 0x00000, Cfg: 0/0

Ctrl Trees : 0/0/0, Ctrl ID: 0 (0x0), Ctrl HLI: 0x00000

The following output from PE node 1 shows the SR ODN information for VRF sample-mvpn1:

RP/0/RP0/CPU0:R1# show mvpn vrf sample-mvpn1 database segment-routing

* - LFA protected MDT

Core Type Core Tree Core State On-demand

Source Information Color

Default* 1.1.1.101 524289 (0x80001) Up 128

I-PMSI Leg: 1.1.1.104

1.1.1.105

Part* 0.0.0.0 0 (0x00000) Down 128

Control* 0.0.0.0 0 (0x00000) Down 128

The following output from PE node 1 shows the mVPN PE information of nodes 4 and 5 (leaf nodes) for VRF sample-mvpn1:

RP/0/RP0/CPU0:R1# show mvpn vrf sample-mvpn1 pe

MVPN Provider Edge Router information

VRF : sample-mvpn1

PE Address : 1.1.1.104 (0x55842fc10cc0)

RD: 0:0:0 (null), RIB_HLI 0, RPF-ID 3, Remote RPF-ID 0, State: 0, S-PMSI: 0

PPMP_LABEL: 0, MS_PMSI_HLI: 0x00000, Bidir_PMSI_HLI: 0x00000, MLDP-added: [RD 0, ID 0, Bidir ID 0, Remote Bidir ID 0], Counts(SHR/SRC/DM/DEF-MD/SR-POL): 0, 0, 0, 0, 0 Bidir: GRE RP Count 0, MPLS RP Count 0

RSVP-TE added: [Leg 0, Ctrl Leg 0, Part tail 0 Def Tail 0, IR added: [Def Leg 0, Ctrl Leg 0, Part Leg 0, Part tail 0, Part IR Tail Label 0

Tree-SID Added: [Def/Part Leaf 1, Def Egress 1, Part Egress 0, Ctrl Leaf 0]

bgp_i_pmsi: 1,0/0 , bgp_ms_pmsi/Leaf-ad: 0/0, bgp_bidir_pmsi: 0, remote_bgp_bidir_pmsi: 0, PMSIs: I 0x55842fc0b040, 0x0, MS 0x0, Bidir Local: 0x0, Remote: 0x0, BSR/Leaf-ad 0x0/0, Autorp-disc/Leaf-ad 0x0/0, Autorp-ann/Leaf-ad 0x0/0

IIDs: I/6: 0x1/0x0, B/R: 0x0/0x0, MS: 0x0, B/A/A: 0x0/0x0/0x0

Bidir RPF-ID: 4, Remote Bidir RPF-ID: 0

I-PMSI: Tree-SID [524289, 1.1.1.104] (0x55842fc0b040)

I-PMSI rem: (0x0)

MS-PMSI: (0x0)

Bidir-PMSI: (0x0)

Remote Bidir-PMSI: (0x0)

BSR-PMSI: (0x0)

A-Disc-PMSI: (0x0)

A-Ann-PMSI: (0x0)

RIB Dependency List: 0x0

Bidir RIB Dependency List: 0x0

Sources: 0, RPs: 0, Bidir RPs: 0

PE Address : 1.1.1.105 (0x55842fc11130)

RD: 0:0:0 (null), RIB_HLI 0, RPF-ID 5, Remote RPF-ID 0, State: 0, S-PMSI: 0

PPMP_LABEL: 0, MS_PMSI_HLI: 0x00000, Bidir_PMSI_HLI: 0x00000, MLDP-added: [RD 0, ID 0, Bidir ID 0, Remote Bidir ID 0], Counts(SHR/SRC/DM/DEF-MD/SR-POL): 0, 0, 0, 0, 0 Bidir: GRE RP Count 0, MPLS RP Count 0

RSVP-TE added: [Leg 0, Ctrl Leg 0, Part tail 0 Def Tail 0, IR added: [Def Leg 0, Ctrl Leg 0, Part Leg 0, Part tail 0, Part IR Tail Label 0

Tree-SID Added: [Def/Part Leaf 1, Def Egress 1, Part Egress 0, Ctrl Leaf 0]

bgp_i_pmsi: 1,0/0 , bgp_ms_pmsi/Leaf-ad: 0/0, bgp_bidir_pmsi: 0, remote_bgp_bidir_pmsi: 0, PMSIs: I 0x55842fbf0670, 0x0, MS 0x0, Bidir Local: 0x0, Remote: 0x0, BSR/Leaf-ad 0x0/0, Autorp-disc/Leaf-ad 0x0/0, Autorp-ann/Leaf-ad 0x0/0

IIDs: I/6: 0x1/0x0, B/R: 0x0/0x0, MS: 0x0, B/A/A: 0x0/0x0/0x0

Bidir RPF-ID: 6, Remote Bidir RPF-ID: 0

I-PMSI: Tree-SID [524289, 1.1.1.105] (0x55842fbf0670)

I-PMSI rem: (0x0)

MS-PMSI: (0x0)

Bidir-PMSI: (0x0)

Remote Bidir-PMSI: (0x0)

BSR-PMSI: (0x0)

A-Disc-PMSI: (0x0)

A-Ann-PMSI: (0x0)

RIB Dependency List: 0x0

Bidir RIB Dependency List: 0x0

Sources: 0, RPs: 0, Bidir RPs: 0

The following output from PE node 1 shows the Protocol Independent Multicast (PIM) data MDT cache information for VRF sample-mvpn1:

RP/0/RP0/CPU0:R1# show pim vrf sample-mvpn1 mdt sr-p2mp cache

Core Source Cust (Source, Group) Core Data Expires Name

1.1.1.101 (3.3.3.1, 232.0.0.1) [tree-id 524290] never

Leaf AD: 1.1.1.105

1.1.1.104

The following output from PE node 1 shows the local data MDT information on the root PE for VRF sample-mvpn1:

RP/0/RP0/CPU0:R1# show pim vrf sample-mvpn1 mdt sr-p2mp local

Tree MDT Cache DIP Local VRF Routes On-demand Name

Identifier Source Count Entry Using Cache Color

[tree-id 524290 (0x80002)] *1.1.1.101 1 N Y 1 128

Tree-SID Leaf: 1.1.1.104

1.1.1.105

The following output from PE node 1 shows the PIM topology table information for VRF sample-mvpn1:

RP/0/RP0/CPU0:R1# show pim vrf sample-mvpn1 topology

IP PIM Multicast Topology Table

Entry state: (*/S,G)[RPT/SPT] Protocol Uptime Info

Entry flags: KAT - Keep Alive Timer, AA - Assume Alive, PA - Probe Alive

RA - Really Alive, IA - Inherit Alive, LH - Last Hop

DSS - Don't Signal Sources, RR - Register Received

SR - Sending Registers, SNR - Sending Null Registers

E - MSDP External, EX - Extranet

MFA - Mofrr Active, MFP - Mofrr Primary, MFB - Mofrr Backup

DCC - Don't Check Connected, ME - MDT Encap, MD - MDT Decap

MT - Crossed Data MDT threshold, MA - Data MDT Assigned

SAJ - BGP Source Active Joined, SAR - BGP Source Active Received,

SAS - BGP Source Active Sent, IM - Inband mLDP, X - VxLAN

Interface state: Name, Uptime, Fwd, Info

Interface flags: LI - Local Interest, LD - Local Dissinterest,

II - Internal Interest, ID - Internal Dissinterest,

LH - Last Hop, AS - Assert, AB - Admin Boundary, EX - Extranet,

BGP - BGP C-Multicast Join, BP - BGP Source Active Prune,

MVS - MVPN Safi Learned, MV6S - MVPN IPv6 Safi Learned

(3.3.3.1,232.0.0.1)SPT SSM Up: 01:56:39

JP: Join(00:00:12) RPF: Loopback200,3.3.3.1* Flags: MT MA

TRmdtsample-mvpn1 01:56:39 fwd BGP

The following output from PE node 1 shows all Multicast Routing Information Base (MRIB) information for VRF sample-mvpn1:

RP/0/RP0/CPU0:R1# show mrib vrf sample-mvpn1 route

IP Multicast Routing Information Base

Entry flags: L - Domain-Local Source, E - External Source to the Domain,

C - Directly-Connected Check, S - Signal, IA - Inherit Accept,

IF - Inherit From, D - Drop, ME - MDT Encap, EID - Encap ID,

MD - MDT Decap, MT - MDT Threshold Crossed, MH - MDT interface handle

CD - Conditional Decap, MPLS - MPLS Decap, EX - Extranet

MoFE - MoFRR Enabled, MoFS - MoFRR State, MoFP - MoFRR Primary

MoFB - MoFRR Backup, RPFID - RPF ID Set, X - VXLAN

Interface flags: F - Forward, A - Accept, IC - Internal Copy,

NS - Negate Signal, DP - Don't Preserve, SP - Signal Present,

II - Internal Interest, ID - Internal Disinterest, LI - Local Interest,

LD - Local Disinterest, DI - Decapsulation Interface

EI - Encapsulation Interface, MI - MDT Interface, LVIF - MPLS Encap,

EX - Extranet, A2 - Secondary Accept, MT - MDT Threshold Crossed,

MA - Data MDT Assigned, LMI - mLDP MDT Interface, TMI - P2MP-TE MDT Interface

IRMI - IR MDT Interface, TRMI - TREE SID MDT Interface, MH - Multihome Interface

(*,224.0.0.0/24) Flags: D P

Up: 02:26:26

(*,224.0.1.39) Flags: S P

Up: 02:26:26

(*,224.0.1.40) Flags: S P

Up: 02:26:26

(*,232.0.0.0/8) Flags: D P

Up: 02:26:26

(3.3.3.1,232.0.0.1) RPF nbr: 3.3.3.1 Flags: RPF

Up: 02:12:57

Incoming Interface List

Loopback200 Flags: A, Up: 02:12:57

Outgoing Interface List

TRmdtsample-mvpn1 Flags: F MA TRMI, Up: 02:12:57

The following output from PE node 1 shows the MRIB information for multicast group 232.0.0.1 in VRF sample-mvpn1, with Loopback200 as the incoming interface:

RP/0/RP0/CPU0:R1# show mrib vrf sample-mvpn1 route 232.0.0.1

IP Multicast Routing Information Base

Entry flags: L - Domain-Local Source, E - External Source to the Domain,

C - Directly-Connected Check, S - Signal, IA - Inherit Accept,

IF - Inherit From, D - Drop, ME - MDT Encap, EID - Encap ID,

MD - MDT Decap, MT - MDT Threshold Crossed, MH - MDT interface handle

CD - Conditional Decap, MPLS - MPLS Decap, EX - Extranet

MoFE - MoFRR Enabled, MoFS - MoFRR State, MoFP - MoFRR Primary

MoFB - MoFRR Backup, RPFID - RPF ID Set, X - VXLAN

Interface flags: F - Forward, A - Accept, IC - Internal Copy,

NS - Negate Signal, DP - Don't Preserve, SP - Signal Present,

II - Internal Interest, ID - Internal Disinterest, LI - Local Interest,

LD - Local Disinterest, DI - Decapsulation Interface

EI - Encapsulation Interface, MI - MDT Interface, LVIF - MPLS Encap,

EX - Extranet, A2 - Secondary Accept, MT - MDT Threshold Crossed,

MA - Data MDT Assigned, LMI - mLDP MDT Interface, TMI - P2MP-TE MDT Interface

IRMI - IR MDT Interface, TRMI - TREE SID MDT Interface, MH - Multihome Interface

(3.3.3.1,232.0.0.1) RPF nbr: 3.3.3.1 Flags: RPF

Up: 02:13:03

Incoming Interface List

Loopback200 Flags: A, Up: 02:13:03

Outgoing Interface List

TRmdtsample-mvpn1 Flags: F MA TRMI, Up: 02:13:03

The following output from PE node 1 shows the SR-TE P2MP policy information for the trees rooted at node 1, namely the default MDT (524289) and the data MDT (524290):

RP/0/RP0/CPU0:R1# show segment-routing traffic-eng p2mp policy root ipv4 1.1.1.101

SR-TE P2MP policy database:

----------------------

! - Replications with Fast Re-route, * - Stale dynamic policies/endpoints

Policy: sr_p2mp_root_1.1.1.101_tree_id_524289 (IPv4) LSM-ID: 0x80001 (PCC-initiated)

Root: 1.1.1.101, ID: 524289

Color: 128

Local LFA FRR: Enabled

PCE Group: not-configured

Flex-Algo: 128

Delegated PCE: 1.1.1.106 (Feb 7 12:50:52.204)

Delegated Conn: 1.1.1.106 (0x2)

PCC info:

Symbolic name: sr_p2mp_root_1.1.1.101_tree_id_524289

PLSP-ID: 6

Role: Root

Replication:

Incoming label: 15600 CC-ID: 2

Interface: None [1.1.1.103!] Outgoing label: 15600 N-SID: 128103 CC-ID: 2

Endpoints:

1.1.1.104, 1.1.1.105

Policy: sr_p2mp_root_1.1.1.101_tree_id_524290 (IPv4) LSM-ID: 0x80002 (PCC-initiated)

Root: 1.1.1.101, ID: 524290

Color: 128

Local LFA FRR: Enabled

PCE Group: not-configured

Flex-Algo: 128

Delegated PCE: 1.1.1.106 (Feb 7 12:50:52.204)

Delegated Conn: 1.1.1.106 (0x2)

PCC info:

Symbolic name: sr_p2mp_root_1.1.1.101_tree_id_524290

PLSP-ID: 7

Role: Root

Replication:

Incoming label: 15599 CC-ID: 2

Interface: None [1.1.1.103!] Outgoing label: 15599 N-SID: 128103 CC-ID: 2

Endpoints:

1.1.1.104, 1.1.1.105

The following output from PE node 1 shows the primary and backup interfaces for Flex Algo 128 to P node 3 (1.1.1.103):

RP/0/RP0/CPU0:R1# show isis fast-reroute flex-algo 128 1.1.1.103/32

L2 1.1.1.103/32 [12/115]

via 20.1.3.3, TenGigE0/0/0/1, R3, SRGB Base: 100000, Weight: 0

Backup path: LFA, via 20.1.2.2, TenGigE0/0/0/0, R2, SRGB Base: 100000, Weight: 0, Metric: 24

The following output from PE node 1 shows the primary and backup interfaces used to reach P node 3 with its Flex Algo label (128103):

RP/0/RP0/CPU0:R1# show mpls forwarding labels 128103

Local Outgoing Prefix Outgoing Next Hop Bytes

Label Label or ID Interface Switched

------ ----------- ------------------ ------------ --------------- ------------

128103 Pop SR Pfx (idx 28103) Te0/0/0/1 20.1.3.3 0

128103 SR Pfx (idx 28103) Te0/0/0/0 20.1.2.2 0 (!)
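The label in this output follows from the values shown elsewhere in this section: the IS-IS fast-reroute output reports an SRGB base of 100000, and the forwarding entry reports a prefix-SID index of 28103. A minimal sketch of that arithmetic follows. The function name `prefix_sid_label` is hypothetical, and the optional `srgb_size` check is an assumption, since the SRGB size is not shown in the output.

```python
from typing import Optional


def prefix_sid_label(srgb_base: int, sid_index: int,
                     srgb_size: Optional[int] = None) -> int:
    """Return the MPLS label for a prefix-SID index within an SRGB."""
    if sid_index < 0:
        raise ValueError("SID index must be non-negative")
    if srgb_size is not None and sid_index >= srgb_size:
        raise ValueError("SID index falls outside the SRGB")
    return srgb_base + sid_index


# SRGB base and indexes as they appear in the outputs of this section.
for router, index in (("R3", 28103), ("R4", 28104), ("R5", 28105)):
    print(router, prefix_sid_label(100000, index))
```

The same calculation gives 128104 and 128105 for PE nodes 4 and 5, matching the N-SID values reported by the SR-PCE.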

The following output from PE node 1 shows the MRIB MPLS forwarding entry for Tree SID label 15599, including the primary and backup interfaces:

RP/0/RP0/CPU0:R1# show mrib mpls forwarding labels 15599

LSP information (XTC) :

LSM-ID: 0x00000, Role: Head, Head LSM-ID: 0x80002

Incoming Label : (15599)

Transported Protocol : <unknown>

Explicit Null : None

IP lookup : disabled

Outsegment Info #1 [H/Push, Recursive]:

OutLabel: 15599, NH: 1.1.1.103, SID: 128103, Sel IF: TenGigE0/0/0/1

Backup Tunnel: Un:0x0 Backup State: Ready, NH: 0.0.0.0, MP Label: 0

Backup Sel IF: TenGigE0/0/0/0

The following output from PE node 1 shows the MPLS forwarding entry for Tree SID label 15599, including the primary interface to P node 3:

RP/0/RP0/CPU0:R1# show mpls forwarding labels 15599

Local Outgoing Prefix Outgoing Next Hop Bytes

Label Label or ID Interface Switched

------ ----------- ------------------ ------------ --------------- ------------

15599 15599 mLDP/IR: 0x00000 Te0/0/0/1 20.1.3.3 0

The following output from PE node 1 shows the MRIB MPLS forwarding entry for Tree SID label 15600, including the primary and backup interfaces:

RP/0/RP0/CPU0:R1# show mrib mpls forwarding labels 15600

LSP information (XTC) :

LSM-ID: 0x00000, Role: Head, Head LSM-ID: 0x80001

Incoming Label : (15600)

Transported Protocol : <unknown>

Explicit Null : None

IP lookup : disabled

Outsegment Info #1 [H/Push, Recursive]:

OutLabel: 15600, NH: 1.1.1.103, SID: 128103, Sel IF: TenGigE0/0/0/1

Backup Tunnel: Un:0x0 Backup State: Ready, NH: 0.0.0.0, MP Label: 0

Backup Sel IF: TenGigE0/0/0/0

The following output from PE node 1 shows the MPLS forwarding entry for Tree SID label 15600, including the primary interface:

RP/0/RP0/CPU0:R1# show mpls forwarding labels 15600

Local Outgoing Prefix Outgoing Next Hop Bytes

Label Label or ID Interface Switched

------ ----------- ------------------ ------------ --------------- ------------

15600 15600 mLDP/IR: 0x00000 Te0/0/0/1 20.1.3.3 0

P Node 3 (Transit)

The following output from P node 3 (transit) shows the SR-TE P2MP policy information for the trees rooted at node 1, namely the default MDT (524289) and the data MDT (524290):

RP/0/RP0/CPU0:R3# show segment-routing traffic-eng p2mp policy root ipv4 1.1.1.101

SR-TE P2MP policy database:

----------------------

! - Replications with Fast Re-route, * - Stale dynamic policies/endpoints

Policy: sr_p2mp_root_1.1.1.101_tree_id_524289 LSM-ID: 0x40001

Root: 1.1.1.101, ID: 524289

PCE Group: not-configured

Flex-Algo: 128

Creator PCE: 1.1.1.106 (Feb 7 12:50:52.408)

Delegated PCE: 1.1.1.106 (Feb 7 12:51:14.098)

Delegated Conn: 1.1.1.106 (0x2)

Creator Conn: 1.1.1.106 (0x2)

PCC info:

Symbolic name: sr_p2mp_root_1.1.1.101_tree_id_524289

PLSP-ID: 1

Role: Transit

Replication:

Incoming label: 15600 CC-ID: 1

Interface: None [1.1.1.104!] Outgoing label: 15600 N-SID: 128104 CC-ID: 1

Interface: None [1.1.1.105!] Outgoing label: 15600 N-SID: 128105 CC-ID: 1

Policy: sr_p2mp_root_1.1.1.101_tree_id_524290 LSM-ID: 0x40002

Root: 1.1.1.101, ID: 524290

PCE Group: not-configured

Flex-Algo: 128

Creator PCE: 1.1.1.106 (Feb 7 12:50:52.411)

Delegated PCE: 1.1.1.106 (Feb 7 12:51:14.100)

Delegated Conn: 1.1.1.106 (0x2)

Creator Conn: 1.1.1.106 (0x2)

PCC info:

Symbolic name: sr_p2mp_root_1.1.1.101_tree_id_524290

PLSP-ID: 2

Role: Transit

Replication:

Incoming label: 15599 CC-ID: 1

Interface: None [1.1.1.104!] Outgoing label: 15599 N-SID: 128104 CC-ID: 1

Interface: None [1.1.1.105!] Outgoing label: 15599 N-SID: 128105 CC-ID: 1

The following output from P node 3 (transit) shows the MRIB MPLS forwarding entries for Tree SID labels 15597, 15599, and 15600, including the primary and backup interfaces:

RP/0/RP0/CPU0:R3# show mrib mpls forwarding

LSP information (XTC) :

LSM-ID: 0x00000, Role: Mid

Incoming Label : 15597

Transported Protocol : <unknown>

Explicit Null : None

IP lookup : disabled

Outsegment Info #1 [M/Swap, Recursive]:

OutLabel: 15597, NH: 1.1.1.101, SID: 128101, Sel IF: TenGigE0/0/0/0

Backup Tunnel: Un:0x0 Backup State: Ready, NH: 0.0.0.0, MP Label: 0

Backup Sel IF: TenGigE0/0/0/3

LSP information (XTC) :

LSM-ID: 0x00000, Role: Mid

Incoming Label : 15599

Transported Protocol : <unknown>

Explicit Null : None

IP lookup : disabled

Outsegment Info #1 [M/Swap, Recursive]:

OutLabel: 15599, NH: 1.1.1.104, SID: 128104, Sel IF: TenGigE0/0/0/2

Backup Tunnel: Un:0x0 Backup State: Ready, NH: 0.0.0.0, MP Label: 0

Backup Sel IF: TenGigE0/0/0/1

Outsegment Info #2 [M/Swap, Recursive]:

OutLabel: 15599, NH: 1.1.1.105, SID: 128105, Sel IF: TenGigE0/0/0/1

Backup Tunnel: Un:0x0 Backup State: Ready, NH: 0.0.0.0, MP Label: 0

Backup Sel IF: TenGigE0/0/0/2

LSP information (XTC) :

LSM-ID: 0x00000, Role: Mid

Incoming Label : 15600

Transported Protocol : <unknown>

Explicit Null : None

IP lookup : disabled

Outsegment Info #1 [M/Swap, Recursive]:

OutLabel: 15600, NH: 1.1.1.104, SID: 128104, Sel IF: TenGigE0/0/0/2

Backup Tunnel: Un:0x0 Backup State: Ready, NH: 0.0.0.0, MP Label: 0

Backup Sel IF: TenGigE0/0/0/1

Outsegment Info #2 [M/Swap, Recursive]:

OutLabel: 15600, NH: 1.1.1.105, SID: 128105, Sel IF: TenGigE0/0/0/1

Backup Tunnel: Un:0x0 Backup State: Ready, NH: 0.0.0.0, MP Label: 0

Backup Sel IF: TenGigE0/0/0/2

The following output from P node 3 shows the primary and backup interfaces to connect to PE node 4 using the Flex Algo label of PE node 4 (128104):

RP/0/RP0/CPU0:R3# show mpls forwarding labels 128104

Local Outgoing Prefix Outgoing Next Hop Bytes

Label Label or ID Interface Switched

------ ----------- ------------------ ------------ --------------- ------------

128104 Pop SR Pfx (idx 28104) Te0/0/0/2 20.3.4.4 0

128104 SR Pfx (idx 28104) Te0/0/0/1 20.3.5.5 0 (!)

The following output from P node 3 shows the primary and backup interfaces to connect to PE node 5 using the Flex Algo label of PE node 5 (128105):

RP/0/RP0/CPU0:R3# show mpls for labels 128105

Local Outgoing Prefix Outgoing Next Hop Bytes

Label Label or ID Interface Switched

------ ----------- ------------------ ------------ --------------- ------------

128105 Pop SR Pfx (idx 28105) Te0/0/0/1 20.3.5.5 0

128105 SR Pfx (idx 28105) Te0/0/0/2 20.3.4.4 0 (!)

PE Node 4 (Leaf)

The following output from PE node 4 shows the mVPN routes that are learned in the VRF sample-mvpn1:

RP/0/RP0/CPU0:R4# show bgp vrf sample-mvpn1 ipv4 mvpn

BGP VRF sample-mvpn1, state: Active

BGP Route Distinguisher: 4.4.4.4:0

VRF ID: 0x60000005

BGP router identifier 4.4.4.4, local AS number 64000

Non-stop routing is enabled

BGP table state: Active

Table ID: 0x0 RD version: 11

BGP table nexthop route policy:

BGP main routing table version 11

BGP NSR Initial initsync version 4 (Reached)

BGP NSR/ISSU Sync-Group versions 0/0

Status codes: s suppressed, d damped, h history, * valid, > best

i - internal, r RIB-failure, S stale, N Nexthop-discard

Origin codes: i - IGP, e - EGP, ? - incomplete

Network Next Hop Metric LocPrf Weight Path

Route Distinguisher: 4.4.4.4:0 (default for vrf sample-mvpn1)

Route Distinguisher Version: 11

*>i[1][1.1.1.101]/40 1.1.1.101 100 0 i

*> [1][1.1.1.104]/40 0.0.0.0 0 i

*>i[1][1.1.1.105]/40 1.1.1.105 100 0 i

*>i[3][32][3.3.3.1][32][232.0.0.1][1.1.1.101]/120

1.1.1.101 100 0 i

*> [4][3][1.1.1.1:2][32][3.3.3.1][32][232.0.0.1][1.1.1.101][1.1.1.104]/224

0.0.0.0 0 i

*> [7][1.1.1.1:2][64000][32][3.3.3.1][32][232.0.0.1]/184

0.0.0.0 0 i

Processed 6 prefixes, 6 paths

The following output from PE node 4 shows the unicast routes that are learned in the VRF sample-mvpn1:

RP/0/RP0/CPU0:R4# show bgp vrf sample-mvpn1

BGP VRF sample-mvpn1, state: Active

BGP Route Distinguisher: 4.4.4.4:0

VRF ID: 0x60000005

BGP router identifier 4.4.4.4, local AS number 64000

Non-stop routing is enabled

BGP table state: Active

Table ID: 0xe0000005 RD version: 74

BGP table nexthop route policy:

BGP main routing table version 75

BGP NSR Initial initsync version 31 (Reached)

BGP NSR/ISSU Sync-Group versions 0/0

Status codes: s suppressed, d damped, h history, * valid, > best

i - internal, r RIB-failure, S stale, N Nexthop-discard

Origin codes: i - IGP, e - EGP, ? - incomplete

Network Next Hop Metric LocPrf Weight Path

Route Distinguisher: 4.4.4.4:0 (default for vrf sample-mvpn1)

Route Distinguisher Version: 74

*>i3.3.3.1/32 1.1.1.101 0 100 0 ?

*> 3.3.3.4/32 0.0.0.0 0 32768 ?

*>i3.3.3.5/32 1.1.1.105 0 100 0 ?

Processed 3 prefixes, 3 paths

The following output from PE node 4 shows the remote data MDT information, learned from the root PE, for VRF sample-mvpn1:

RP/0/RP0/CPU0:R4# show pim vrf sample-mvpn1 mdt sr-p2mp remote

Tree MDT Cache DIP Local VRF Routes On-demand Name

Identifier Source Count Entry Using Cache Color

[tree-id 524290 (0x80002)] 1.1.1.101 1 N N 1 0

The following output from PE node 4 shows the PIM topology table information for VRF sample-mvpn1:

RP/0/RP0/CPU0:R4# show pim vrf sample-mvpn1 topology

IP PIM Multicast Topology Table

Entry state: (*/S,G)[RPT/SPT] Protocol Uptime Info

Entry flags: KAT - Keep Alive Timer, AA - Assume Alive, PA - Probe Alive

RA - Really Alive, IA - Inherit Alive, LH - Last Hop

DSS - Don't Signal Sources, RR - Register Received

SR - Sending Registers, SNR - Sending Null Registers

E - MSDP External, EX - Extranet

MFA - Mofrr Active, MFP - Mofrr Primary, MFB - Mofrr Backup

DCC - Don't Check Connected, ME - MDT Encap, MD - MDT Decap

MT - Crossed Data MDT threshold, MA - Data MDT Assigned

SAJ - BGP Source Active Joined, SAR - BGP Source Active Received,

SAS - BGP Source Active Sent, IM - Inband mLDP, X - VxLAN

Interface state: Name, Uptime, Fwd, Info

Interface flags: LI - Local Interest, LD - Local Dissinterest,

II - Internal Interest, ID - Internal Dissinterest,

LH - Last Hop, AS - Assert, AB - Admin Boundary, EX - Extranet,

BGP - BGP C-Multicast Join, BP - BGP Source Active Prune,

MVS - MVPN Safi Learned, MV6S - MVPN IPv6 Safi Learned

(3.3.3.1,232.0.0.1)SPT SSM Up: 01:59:13

JP: Join(BGP) RPF: TRmdtsample-mvpn1,1.1.1.101 Flags:

Loopback200 01:59:13 fwd LI LH

The following output from PE node 4 shows the SR-TE P2MP policy information for the trees rooted at node 1, namely the default MDT (524289) and the data MDT (524290):

RP/0/RP0/CPU0:R4# show segment-routing traffic-eng p2mp policy root ipv4 1.1.1.101

SR-TE P2MP policy database:

----------------------

! - Replications with Fast Re-route, * - Stale dynamic policies/endpoints

Policy: sr_p2mp_root_1.1.1.101_tree_id_524289 LSM-ID: 0x40001

Root: 1.1.1.101, ID: 524289

PCE Group: not-configured

Flex-Algo: 128

Creator PCE: 1.1.1.106 (Feb 7 11:35:30.279)

Delegated PCE: 1.1.1.106 (Feb 7 11:35:58.546)

Delegated Conn: 1.1.1.106 (0x3)

Creator Conn: 1.1.1.106 (0x3)

PCC info:

Symbolic name: sr_p2mp_root_1.1.1.101_tree_id_524289

PLSP-ID: 2

Role: Leaf

Replication:

Incoming label: 15600 CC-ID: 3

Policy: sr_p2mp_root_1.1.1.101_tree_id_524290 LSM-ID: 0x40002

Root: 1.1.1.101, ID: 524290

PCE Group: not-configured

Flex-Algo: 128

Creator PCE: 1.1.1.106 (Feb 7 11:35:30.281)

Delegated PCE: 1.1.1.106 (Feb 7 11:35:58.547)

Delegated Conn: 1.1.1.106 (0x3)

Creator Conn: 1.1.1.106 (0x3)

PCC info:

Symbolic name: sr_p2mp_root_1.1.1.101_tree_id_524290

PLSP-ID: 3

Role: Leaf

Replication:

Incoming label: 15599 CC-ID: 3

PE Node 5 (Leaf)

The following output from PE node 5 shows the mVPN routes that are learned in the VRF sample-mvpn1:

RP/0/RP0/CPU0:R5# show bgp vrf sample-mvpn1 ipv4 mvpn

BGP VRF sample-mvpn1, state: Active

BGP Route Distinguisher: 5.5.5.5:0

VRF ID: 0x60000003

BGP router identifier 5.5.5.5, local AS number 64000

Non-stop routing is enabled

BGP table state: Active

Table ID: 0x0 RD version: 11

BGP table nexthop route policy:

BGP main routing table version 11

BGP NSR Initial initsync version 8 (Reached)

BGP NSR/ISSU Sync-Group versions 0/0

Status codes: s suppressed, d damped, h history, * valid, > best

i - internal, r RIB-failure, S stale, N Nexthop-discard

Origin codes: i - IGP, e - EGP, ? - incomplete

Network Next Hop Metric LocPrf Weight Path

Route Distinguisher: 5.5.5.5:0 (default for vrf sample-mvpn1)

Route Distinguisher Version: 11

*>i[1][1.1.1.101]/40 1.1.1.101 100 0 i

*>i[1][1.1.1.104]/40 1.1.1.104 100 0 i

*> [1][1.1.1.105]/40 0.0.0.0 0 i

*>i[3][32][3.3.3.1][32][232.0.0.1][1.1.1.101]/120

1.1.1.101 100 0 i

*> [4][3][1.1.1.1:2][32][3.3.3.1][32][232.0.0.1][1.1.1.101][1.1.1.105]/224

0.0.0.0 0 i

*> [7][1.1.1.1:2][64000][32][3.3.3.1][32][232.0.0.1]/184

0.0.0.0 0 i

Processed 6 prefixes, 6 paths

The following output from PE node 5 shows the remote data MDT information, learned from the root PE, for VRF sample-mvpn1:

RP/0/RP0/CPU0:R5# show pim vrf sample-mvpn1 mdt sr-p2mp remote

Tree MDT Cache DIP Local VRF Routes On-demand Name

Identifier Source Count Entry Using Cache Color

[tree-id 524290 (0x80002)] 1.1.1.101 1 N N 1 0

The following output from PE node 5 shows the SR-TE P2MP policy information for the trees rooted at node 1, namely the default MDT (524289) and the data MDT (524290):

RP/0/RP0/CPU0:R5# show segment-routing traffic-eng p2mp policy root ipv4 1.1.1.101

SR-TE P2MP policy database:

----------------------

! - Replications with Fast Re-route, * - Stale dynamic policies/endpoints

Policy: sr_p2mp_root_1.1.1.101_tree_id_524289 LSM-ID: 0x40001

Root: 1.1.1.101, ID: 524289

PCE Group: not-configured

Flex-Algo: 128

Creator PCE: 1.1.1.106 (Feb 7 11:58:58.821)

Delegated PCE: 1.1.1.106 (Feb 7 11:59:34.323)

Delegated Conn: 1.1.1.106 (0x2)

Creator Conn: 1.1.1.106 (0x2)

PCC info:

Symbolic name: sr_p2mp_root_1.1.1.101_tree_id_524289

PLSP-ID: 3

Role: Leaf

Replication:

Incoming label: 15600 CC-ID: 4

Policy: sr_p2mp_root_1.1.1.101_tree_id_524290 LSM-ID: 0x40002

Root: 1.1.1.101, ID: 524290

PCE Group: not-configured

Flex-Algo: 128

Creator PCE: 1.1.1.106 (Feb 7 11:58:58.823)

Delegated PCE: 1.1.1.106 (Feb 7 11:59:34.524)

Delegated Conn: 1.1.1.106 (0x2)

Creator Conn: 1.1.1.106 (0x2)

PCC info:

Symbolic name: sr_p2mp_root_1.1.1.101_tree_id_524290

PLSP-ID: 4

Role: Leaf

Replication:

Incoming label: 15599 CC-ID: 4
