Table Of Contents

Oracle JD Edwards on Cisco Unified Computing System with EMC VNX Storage

Cisco Unified Computing System

Design Considerations for Oracle JD Edwards Implementation on Cisco Unified Computing System

Scalable Architecture Using Cisco UCS Servers

EMC VNX5300—Block Storage for Oracle JD Edwards

Sizing Guidelines for Oracle JD Edwards

Oracle JD Edwards Deployment Architecture on Cisco Unified Computing System

Cisco Unified Computing System Configuration

Creating Uplink Ports Channels

Cisco UCS Service Profile Configuration

Creating Service Profile Templates

Creating a Service Profile From a Template and Associating it to a Cisco UCS Server Blade

Creating Storage Pools and RAID Groups

Configuring the Nexus Switches

Enabling Nexus 5548 Switch Licensing

Creating VSAN and Adding FC Interfaces

Configuring Ports 21-24 as FC Ports

Creating VLANs and Managing Traffic

Creating and Configuring Virtual Port Channel (VPC)

Modifying Service Profile for Boot Policy

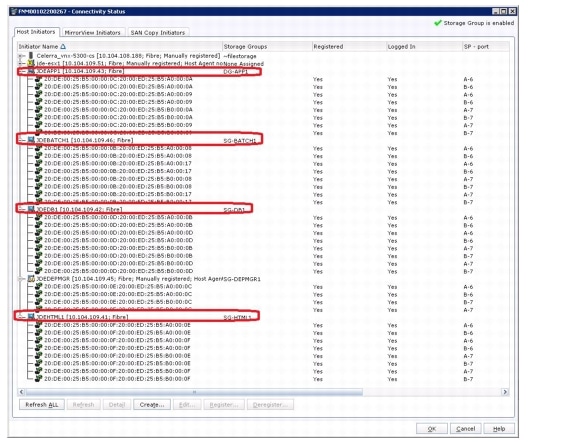

Creating the Zoneset and Zones on Nexus 5548

Microsoft Windows 2008 R2 Installation

Oracle JD Edwards Installation

General Installation Requirements

JD Edwards Specific Installation Requirements

JD Edwards Deployment Server Installation Requirements

JD Edwards Enterprise Server Installation Requirements

JD Edwards Database Server Installation Requirements

JD Edwards HTML Server Installation Requirements

JD Edwards Port Numbers Installation

JD Edwards Deployment Server Install

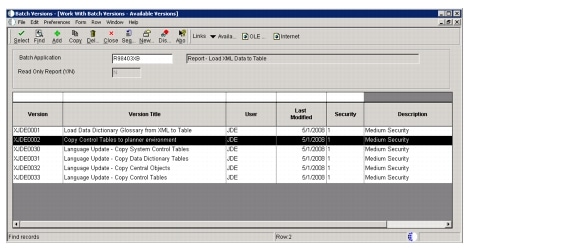

Tools Upgrade on the Deployment Server

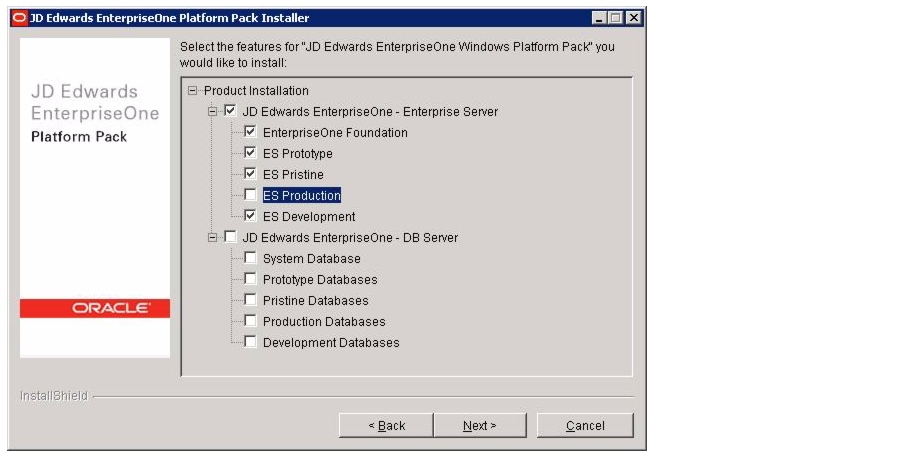

Installing the Enterprise Server

Installing the Database Server

Oracle HTTP Server Installation

Installing the OneWorld Client

Oracle JD Edwards Performance and Scalability

Interactive with Batch Test Scenario

Interactive with Batch on same Physical Server

Interactive with Batch on Separate Physical Server

Best Practices and Tuning Recommendations

Microsoft SQL Server 2008 R2 Configuration

JD Edwards Enterprise Server Configuration

Appendix A—Workload Mix for Batch and Interactive Test

Appendix B—Reference Documents

About Cisco Validated Design (CVD) Program

Oracle JD Edwards on Cisco Unified Computing System with EMC VNX Storage

Executive Summary

Oracle JD Edwards is a suite of products from Oracle that addresses the Enterprise Resource Planning (ERP) requirements of an organization. Oracle has three flagship ERP applications: Oracle E-Business Suite, PeopleSoft, and JD Edwards. ERP applications have been improving the productivity of organizations for a couple of decades. With growing complexity and demanding performance requirements, however, customers are constantly looking for better infrastructure to host and run these applications.

This Cisco Validated Design presents a differentiated solution that validates an Oracle JD Edwards environment on Cisco Unified Computing System (UCS), running Microsoft SQL Server on the Windows operating system, with Cisco UCS Blade Servers, Nexus 5548 switches, the Cisco UCS management system, and the EMC VNX5300 storage platform. The Cisco Oracle Competency Center tested, validated, benchmarked, and showcased the Oracle JD Edwards ERP application using the Oracle Day in the Life (DIL) kit.

Target Audience

This Cisco Validated Design is intended to assist solution architects, JDE project managers, infrastructure managers, sales engineers, field engineers, and consultants in planning, designing, and deploying Oracle JD Edwards hosted on Cisco Unified Computing System (UCS) servers. This document assumes that the reader has an architectural understanding of Cisco UCS servers, the Nexus 5548 switch, Oracle JD Edwards, the EMC® VNX5300™ storage array, and related software.

Purpose of this Guide

This Cisco Validated Design showcases Oracle JD Edwards performance and scalability on the Cisco UCS platform and shows how enterprises can apply best practices for the Cisco Unified Computing System, the Cisco Nexus family of switches, and the EMC VNX5300 storage platform when deploying the Oracle JD Edwards application.

Design validation was achieved by executing the Oracle JD Edwards Day in the Life (DIL) kit on the Cisco UCS platform and benchmarking various application workloads using HP's LoadRunner tool. The Oracle JDE DIL kit comprises interactive application workloads and batch workloads, the Universal Batch Engine processes (UBEs). The interactive application users were validated and benchmarked by scaling from 500 to 7500 concurrent users, and various sets of UBEs were also executed concurrently with loads of 1000 and 5000 concurrent interactive users. Achieving sub-second response times for various JDE application workloads, with a large variation of interactive applications and UBEs, clearly demonstrates the suitability of Cisco UCS servers for small to large Oracle JD Edwards deployments and helps customers make an informed decision when choosing Cisco UCS for their JD Edwards implementation.

Business Needs

Customers constantly look for value for money when they transition from one platform to another or migrate from proprietary systems to commodity hardware platforms; they endeavor to improve operational efficiency and optimize resource utilization.

Other important aspects are management and maintenance. ERP applications are business critical and need to be up and running all the time. The need for ease of maintenance and efficient management with minimal staff and reduced budgets is pushing infrastructure managers to balance uptime and ROI.

Server sprawl and old technologies that consume precious real estate and power, along with increased cooling requirements, have pushed customers to look for innovative technologies that can address some of these challenges.

Solution Overview

The solution in this design guide demonstrates the deployment of Oracle JD Edwards on Cisco Unified Computing System with EMC VNX5300 as the storage system. The Oracle JD Edwards solution architecture is designed to run on multiple platforms and on multiple databases. In this deployment, Oracle JD Edwards EnterpriseOne (JDE E1) Release 9.0.2 was deployed on Microsoft Windows 2008 R2. The JDE E1 database was hosted on Microsoft SQL Server 2008 R2, and the JDE HTML server ran on Oracle WebLogic Server Release 10.3.5.

The deployment and testing were conducted in a Cisco® test and development environment to measure the performance of Oracle's JDE E1 Release 9.0.2 with Oracle's JDE E1 Day in the Life (DIL) test kit. The JDE E1 DIL kit is a suite of 17 test scripts that exercise representative transactions of the most popular JDE E1 applications, including SCM, SRM, HCM, CRM, and Financial Management. This complex mix of applications simulates workloads that more closely reflect customer environments.

The solution describes the following aspects of Oracle JD Edwards deployment on Cisco Unified Computing System:

•

Sizing and Design guidelines for Oracle JD Edwards using JDE E1 DIL kit for both interactive and batch processes.

•

Configuring Cisco UCS for Oracle JD Edwards

–

Configuring Fabric Interconnect

–

Configuring Cisco UCS Blades

•

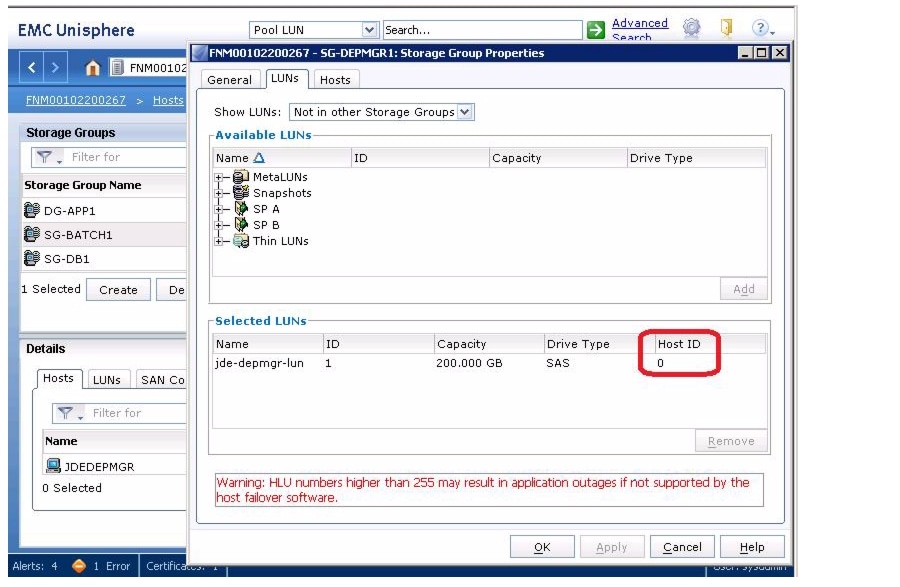

Configuring EMC VNX5300 storage system for Oracle JD Edwards

–

Configuring the storage and creating LUNs

–

Associating LUNs with the hosts

•

Installing and configuring JDE E1 release 9.02 with Tool update 8.98.4.6

–

Provisioning the required server resource

–

Installing and Configuring JDE HTML Server, JDE Enterprise Server and Microsoft SQL Server 2008R2 on Windows 2008R2

•

Performance characterization of JD Edwards Day in the life Kit (DIL Kit)

–

Performance and Scaling analysis for JDE E1 interactive Apps

–

Performance Analysis of JDE batch processes (UBEs)

–

Performance and Scaling Analysis for interactive and UBEs executed concurrently on same server

–

Split Configuration Scaling: Performance Analysis of interactive and UBEs executed on separate server.

•

Best Practices and Tuning Recommendations to deploy Oracle JD Edward son Cisco Unified Computing System

Figure 1 elaborates on the components of JD Edwards using Cisco UCS Servers.

Figure 1 Deployment Overview of JD Edwards Using Cisco UCS Servers

Technology Overview

Cisco Unified Computing System

The Cisco Unified Computing System is a next-generation data center platform that unites compute, network, and storage access. The platform, optimized for virtual environments, is designed using open industry-standard technologies and aims to reduce total cost of ownership (TCO) and increase business agility. The system integrates a low-latency, lossless 10 Gigabit Ethernet unified network fabric with enterprise-class, x86-architecture servers. It is an integrated, scalable, multi-chassis platform in which all resources participate in a unified management domain.

The main components of Cisco Unified Computing System are:

• Computing: The system is based on an entirely new class of computing system that incorporates blade servers based on Intel Xeon 5500/5600 Series Processors. Selected Cisco UCS blade servers offer the patented Cisco Extended Memory Technology to support applications with large datasets and allow more virtual machines per server.

• Network: The system is integrated onto a low-latency, lossless, 10-Gbps unified network fabric. This network foundation consolidates LANs, SANs, and high-performance computing networks, which are separate networks today. The unified fabric lowers costs by reducing the number of network adapters, switches, and cables, and by decreasing the power and cooling requirements.

• Virtualization: The system unleashes the full potential of virtualization by enhancing the scalability, performance, and operational control of virtual environments. Cisco security, policy enforcement, and diagnostic features are now extended into virtualized environments to better support changing business and IT requirements.

• Storage access: The system provides consolidated access to both SAN storage and Network Attached Storage (NAS) over the unified fabric. By unifying the storage access, the Cisco Unified Computing System can access storage over Ethernet, Fibre Channel, Fibre Channel over Ethernet (FCoE), and iSCSI. This provides customers with a choice for storage access and investment protection. In addition, server administrators can pre-assign storage-access policies for system connectivity to storage resources, simplifying storage connectivity and management for increased productivity.

• Management: The system uniquely integrates all system components, which enables the entire solution to be managed as a single entity by the Cisco UCS Manager. The Cisco UCS Manager has an intuitive graphical user interface (GUI), a command-line interface (CLI), and a robust application programming interface (API) to manage all system configuration and operations.

The Cisco Unified Computing System is designed to deliver:

• A reduced Total Cost of Ownership and increased business agility.

• Increased IT staff productivity through just-in-time provisioning and mobility support.

• A cohesive, integrated system which unifies the technology in the data center. The system is managed, serviced and tested as a whole.

• Scalability through a design for hundreds of discrete servers and thousands of virtual machines and the capability to scale I/O bandwidth to match demand.

• Industry standards supported by a partner ecosystem of industry leaders.

Cisco Unified Computing System is designed from the ground up to be programmable and self-integrating. A server's entire hardware stack, ranging from server firmware and settings to network profiles, is configured through model-based management. With Cisco virtual interface cards, even the number and type of I/O interfaces are programmed dynamically, making every server ready to power any workload at any time.

With model-based management, administrators manipulate a model of a desired system configuration, associate a model's service profile with the hardware components, and the system configures automatically to match the model. This automation speeds provisioning and workload migration with accurate and rapid scalability. The result is increased IT staff productivity, improved compliance, and reduced risk of failures due to inconsistent configurations.

Cisco Fabric Extender technology reduces the number of system components to purchase, configure, manage, and maintain by condensing three network layers into one. It eliminates both blade server and hypervisor-based switches by connecting fabric interconnect ports directly to individual blade servers and virtual machines. Virtual networks are now managed exactly as physical networks are, but with massive scalability. This represents a radical simplification over traditional systems, reducing capital and operating costs while increasing business agility, simplifying and speeding deployment, and improving performance. Figure 2 shows the Cisco Unified Computing System components.

Figure 2 Cisco Unified Computing System Components

Fabric Interconnect

The Cisco® UCS 6200 Series Fabric Interconnect is a core part of the Cisco Unified Computing System, providing both network connectivity and management capabilities for the system. The Cisco UCS 6200 Series offers line-rate, low-latency, lossless 10 Gigabit Ethernet, Fibre Channel over Ethernet (FCoE) and Fibre Channel functions.

The Cisco UCS 6200 Series provides the management and communication backbone for the Cisco UCS B-Series Blade Servers and Cisco UCS 5100 Series Blade Server Chassis. All chassis, and therefore all blades, attached to the Cisco UCS 6200 Series Fabric Interconnects become part of a single, highly available management domain. In addition, by supporting unified fabric, the Cisco UCS 6200 Series provides both the LAN and SAN connectivity for all blades within its domain.

From a networking perspective, the Cisco UCS 6200 Series uses a cut-through architecture, supporting deterministic, low-latency, line-rate 10 Gigabit Ethernet on all ports, 1Tb switching capacity, 160 Gbps bandwidth per chassis, independent of packet size and enabled services. The product family supports Cisco low-latency, lossless 10 Gigabit Ethernet unified network fabric capabilities, which increase the reliability, efficiency, and scalability of Ethernet networks. The Fabric Interconnect supports multiple traffic classes over a lossless Ethernet fabric from a blade server through an interconnect. Significant TCO savings come from an FCoE-optimized server design in which network interface cards (NICs), host bus adapters (HBAs), cables, and switches can be consolidated. The Cisco Fabric Interconnect is shown in Figure 3.

Figure 3 Cisco UCS 6200 Series Fabric Interconnect

The following are the different types of Cisco Fabric Interconnects:

• Cisco UCS 6296UP Fabric Interconnect

• Cisco UCS 6248UP Fabric Interconnect

• Cisco UCS U6120XP 20-Port Fabric Interconnect

• Cisco UCS U6140XP 40-Port Fabric Interconnect

Cisco UCS 6296UP Fabric Interconnect

The Cisco UCS 6296UP 96-Port Fabric Interconnect is a two-rack-unit (2RU) 10 Gigabit Ethernet, FCoE, and native Fibre Channel switch offering up to 1920-Gbps throughput and up to 96 ports. The switch has 48 1/10-Gbps fixed Ethernet, FCoE, and Fibre Channel ports and three expansion slots. It doubles the switching capacity of the data center fabric from 960 Gbps to 1.92 Tbps to improve workload density, reduces end-to-end latency by 40 percent to improve application performance, and provides flexible unified ports to improve infrastructure agility and ease the transition to a fully converged fabric.

Cisco UCS 6248UP Fabric Interconnect

The Cisco UCS 6248UP 48-Port Fabric Interconnect is a one-rack-unit (1RU) 10 Gigabit Ethernet, FCoE, and Fibre Channel switch offering up to 960-Gbps throughput and up to 48 ports. The switch has 32 1/10-Gbps fixed Ethernet, FCoE, and Fibre Channel ports and one expansion slot.
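The throughput figures quoted for the two fabric interconnects are consistent with counting every port at 10 Gbps in both directions. The following is a minimal arithmetic sketch under that assumption; the full-duplex counting convention is not stated in this guide, and the function name is purely illustrative.

```python
def aggregate_throughput_gbps(ports: int, port_speed_gbps: int = 10,
                              full_duplex: bool = True) -> int:
    """Return aggregate throughput in Gbps for a switch with `ports` ports.

    Assumption: throughput is counted in both directions (full duplex),
    which is how 96 ports at 10 Gbps add up to the quoted 1920 Gbps.
    """
    directions = 2 if full_duplex else 1
    return ports * port_speed_gbps * directions


# Port counts taken from the descriptions above.
ucs_6296up_gbps = aggregate_throughput_gbps(96)
ucs_6248up_gbps = aggregate_throughput_gbps(48)

print(f"6296UP: {ucs_6296up_gbps} Gbps, 6248UP: {ucs_6248up_gbps} Gbps")
```

Under this reading, the 6296UP figure is exactly twice the 6248UP figure, which matches the statement that it doubles the switching capacity from 960 Gbps to 1.92 Tbps.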

Cisco UCS 2100 and 2200 Series Fabric Extenders

The Cisco UCS 2100 and 2200 Series Fabric Extenders multiplex and forward all traffic from blade servers in a chassis to a parent Cisco UCS fabric interconnect over 10-Gbps unified fabric links. All traffic, even traffic between blades on the same chassis or virtual machines on the same blade, is forwarded to the parent interconnect, where network profiles are managed efficiently and effectively by the fabric interconnect. At the core of the Cisco UCS fabric extender are application-specific integrated circuit (ASIC) processors developed by Cisco that multiplex all traffic.

Up to two fabric extenders can be placed in a blade chassis.

• The Cisco UCS 2104XP Fabric Extender has eight 10GBASE-KR connections to the blade chassis midplane, with one connection per fabric extender for each of the chassis' eight half slots. This configuration gives each half-slot blade server access to each of two 10-Gbps unified fabric-based networks through SFP+ sockets for both throughput and redundancy. It has four ports connecting to the fabric interconnect.

• The Cisco UCS 2208XP is the first product in the Cisco UCS 2200 Series. It has eight 10 Gigabit Ethernet, FCoE-capable, Enhanced Small Form-Factor Pluggable (SFP+) ports that connect the blade chassis to the fabric interconnect. Each Cisco UCS 2208XP has thirty-two 10 Gigabit Ethernet ports connected through the midplane to each half-width slot in the chassis. Typically configured in pairs for redundancy, two fabric extenders provide up to 160 Gbps of I/O to the chassis.
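The 160-Gbps chassis figure follows directly from the uplink counts given above: eight 10-Gbps uplinks per Cisco UCS 2208XP, with two fabric extenders per chassis. The sketch below works through that arithmetic; the helper name is illustrative, and the value computed for a pair of 2104XP fabric extenders is derived from the four uplink ports mentioned above rather than quoted from this guide.

```python
def chassis_io_gbps(uplinks_per_fex: int, fex_count: int = 2,
                    link_gbps: int = 10) -> int:
    """Return total uplink I/O bandwidth from a chassis to the fabric interconnects."""
    return uplinks_per_fex * fex_count * link_gbps


# Two 2208XP fabric extenders, eight 10-Gbps uplinks each.
io_2208xp = chassis_io_gbps(uplinks_per_fex=8)

# Two 2104XP fabric extenders, four 10-Gbps uplinks each (derived, not quoted).
io_2104xp = chassis_io_gbps(uplinks_per_fex=4)

print(f"2208XP pair: {io_2208xp} Gbps, 2104XP pair: {io_2104xp} Gbps")
```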

Cisco UCS Blade Chassis

The Cisco UCS 5100 Series Blade Server Chassis is a crucial building block of the Cisco Unified Computing System, delivering a scalable and flexible blade server chassis.

The Cisco UCS 5108 Blade Server Chassis is six rack units (6RU) high and can mount in an industry-standard 19-inch rack. A single chassis can house up to eight half-width Cisco UCS B-Series Blade Servers and can accommodate both half-width and full-width blade form factors.

Four single-phase, hot-swappable power supplies are accessible from the front of the chassis. These power supplies are 92 percent efficient and can be configured to support non-redundant, N+1 redundant, and grid-redundant configurations. The rear of the chassis contains eight hot-swappable fans, four power connectors (one per power supply), and two I/O bays for Cisco UCS 2104XP Fabric Extenders.

A passive mid-plane provides up to 20 Gbps of I/O bandwidth per server slot and up to 40 Gbps of I/O bandwidth for two slots. The chassis is capable of supporting future 40 Gigabit Ethernet standards. The Cisco UCS Blade Server Chassis is shown in Figure 4.

Figure 4 Cisco Blade Server Chassis (Front and Back View)

Cisco UCS Manager

Cisco Unified Computing System (Cisco UCS) Manager provides unified, embedded management of all software and hardware components of the Cisco UCS through an intuitive GUI, a command-line interface (CLI), or an XML API. The Cisco UCS Manager provides a unified management domain with centralized management capabilities and controls multiple chassis and thousands of virtual machines.

Cisco UCS Blade Server Types

The following are the different types of Cisco Blade Servers:

• Cisco UCS B200 M3 Servers

• Cisco UCS B200 M2 Servers

• Cisco UCS B250 M2 Extended Memory Blade Servers

• Cisco UCS B230 M2 Blade Servers

• Cisco UCS B440 M2 High-Performance Blade Servers

Cisco UCS B200 M3 Server

Delivering performance, versatility and density without compromise, the Cisco UCS B200 M3 Blade Server addresses the broadest set of workloads, from IT and Web Infrastructure through distributed database.

Building on the success of the Cisco UCS B200 M2 Blade Servers, the enterprise-class Cisco UCS B200 M3 further extends the capabilities of the Cisco Unified Computing System portfolio in a half-blade form factor. The Cisco UCS B200 M3 Server harnesses the power of the Intel® Xeon® E5-2600 processor product family, up to 384 GB of RAM, two hard drives, and up to 8 x 10GE to deliver exceptional levels of performance, memory expandability, and I/O throughput for nearly all applications. The Cisco UCS B200 M3 Server is shown in Figure 5.

Figure 5 Cisco UCS B200 M3 Blade Server

Cisco UCS B200 M2 Server

The Cisco UCS B200 M2 Blade Server is a half-width, two-socket blade server. The system uses two Intel Xeon 5600 Series Processors, up to 96 GB of DDR3 memory, two optional hot-swappable small form factor (SFF) serial attached SCSI (SAS) disk drives, and a single mezzanine connector for up to 20 Gbps of I/O throughput. The server balances simplicity, performance, and density for production-level virtualization and other mainstream data center workloads. The Cisco UCS B200 M2 Server is shown in Figure 6.

Figure 6 Cisco UCS B200 M2 Blade Server

Cisco UCS B250 M2 Extended Memory Blade Server

The Cisco UCS B250 M2 Extended Memory Blade Server is a full-width, two-socket blade server featuring Cisco Extended Memory Technology. The system supports two Intel Xeon 5600 Series Processors, up to 384 GB of DDR3 memory, two optional SFF SAS disk drives, and two mezzanine connections for up to 40 Gbps of I/O throughput. The server increases performance and capacity for demanding virtualization and large-data-set workloads with greater memory capacity and throughput. The Cisco UCS Extended Memory Blade Server is shown in Figure 7.

Figure 7 Cisco UCS B250 M2 Extended Memory Blade Server

Cisco UCS B230 M2 Blade Servers

The Cisco UCS B230 M2 Blade Server is a half-width, 2-socket blade server offering the performance and reliability of the Intel Xeon processor E7-2800 product family and up to 32 DIMM slots, which support up to 512 GB of memory. The Cisco UCS B230 M2 supports two SSD drives and one CNA mezzanine slot for up to 20 Gbps of I/O throughput. The Cisco UCS B230 M2 Blade Server platform delivers outstanding performance, memory, and I/O capacity to meet the diverse needs of virtualized environments with advanced reliability and exceptional scalability for the most demanding applications.

Cisco UCS B440 M2 High-Performance Blade Servers

The Cisco UCS B440 M2 High-Performance Blade Server is a full-width, 4-socket blade server offering the performance and reliability of the Intel Xeon processor E7-4800 product family and up to 512 GB of memory. The Cisco UCS B440 M2 supports four SFF SAS/SSD drives and two CNA mezzanine slots for up to 40 Gbps of I/O throughput. The Cisco UCS B440 M2 blade server extends Cisco UCS by offering increased levels of performance, scalability, and reliability for mission-critical workloads.

Cisco UCS Service Profiles

Programmatically Deploying Server Resources

Cisco UCS Manager provides centralized management capabilities, creates a unified management domain, and serves as the central nervous system of the Cisco Unified Computing System. Cisco UCS Manager is embedded device management software that manages the system from end-to-end as a single logical entity through an intuitive GUI, CLI, or XML API. Cisco UCS Manager implements role- and policy-based management using service profiles and templates. This construct improves IT productivity and business agility. Now infrastructure can be provisioned in minutes instead of days, shifting IT's focus from maintenance to strategic initiatives.

Dynamic Provisioning with Service Profiles

Cisco UCS resources are abstract in the sense that their identity, I/O configuration, MAC addresses and WWNs, firmware versions, BIOS boot order, and network attributes (including QoS settings, ACLs, pin groups, and threshold policies) all are programmable using a just-in-time deployment model. The manager stores this identity, connectivity, and configuration information in service profiles that reside on the Cisco UCS 6200 Series Fabric Interconnect. A service profile can be applied to any blade server to provision it with the characteristics required to support a specific software stack. A service profile allows server and network definitions to move within the management domain, enabling flexibility in the use of system resources. Service profile templates allow different classes of resources to be defined and applied to a number of resources, each with its own unique identities assigned from predetermined pools.

Service Profiles and Templates

A service profile contains configuration information about the server hardware, interfaces, fabric connectivity, and server and network identity. The Cisco UCS Manager provisions servers using service profiles, and it implements role-based and policy-based management built around service profiles and templates. A service profile can be applied to any blade server to provision it with the characteristics required to support a specific software stack. A service profile allows server and network definitions to move within the management domain, enabling flexibility in the use of system resources.

Service profile templates are stored in the Cisco UCS 6200 Series Fabric Interconnects for reuse by server, network, and storage administrators. Service profile templates consist of server requirements and the associated LAN and SAN connectivity. Service profile templates allow different classes of resources to be defined and applied to a number of resources, each with its own unique identities assigned from predetermined pools.

The Cisco UCS Manager can deploy the service profile on any physical server at any time. When a service profile is deployed to a server, the Cisco UCS Manager automatically configures the server, adapters, Fabric Extenders, and Fabric Interconnects to match the configuration specified in the service profile. A service profile template parameterizes the UIDs that differentiate between server instances.
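The relationship between templates, identity pools, and service profiles described above can be pictured with a small data model. The following is a conceptual sketch only: the class names, fields, pool contents, and blade labels are hypothetical illustrations and do not represent the Cisco UCS Manager API or its object model.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class IdentityPool:
    """A predetermined pool of unique identities (for example MACs or WWPNs)."""
    name: str
    free: List[str]

    def allocate(self) -> str:
        if not self.free:
            raise RuntimeError(f"pool {self.name!r} is exhausted")
        return self.free.pop(0)


@dataclass
class ServiceProfile:
    """Abstracted server state: identity and configuration, independent of hardware."""
    name: str
    boot_order: List[str]
    identities: Dict[str, str]
    blade: str = ""  # empty until the profile is associated with a blade

    def associate(self, blade: str) -> None:
        # The identities stay with the profile, so they move with it.
        self.blade = blade


@dataclass
class ServiceProfileTemplate:
    """Shared settings plus the pools from which per-profile identities are drawn."""
    name: str
    boot_order: List[str]
    pools: Dict[str, IdentityPool] = field(default_factory=dict)

    def instantiate(self, profile_name: str) -> ServiceProfile:
        identities = {kind: pool.allocate() for kind, pool in self.pools.items()}
        return ServiceProfile(profile_name, list(self.boot_order), identities)


# Example values below are made up for illustration.
mac_pool = IdentityPool("mac", ["00:25:B5:00:00:01", "00:25:B5:00:00:02"])
wwpn_pool = IdentityPool("wwpn", ["20:00:00:25:B5:00:00:01", "20:00:00:25:B5:00:00:02"])
template = ServiceProfileTemplate("jde-template", ["san", "lan"],
                                  {"mac": mac_pool, "wwpn": wwpn_pool})

profile_a = template.instantiate("jde-enterprise-1")
profile_b = template.instantiate("jde-database-1")

profile_a.associate("chassis-1/blade-1")
mac_before_move = profile_a.identities["mac"]
profile_a.associate("chassis-1/blade-3")  # move the profile to another blade

print(profile_a)
print(profile_b)
```

In this model, both profiles share the template's boot order but receive different identities from the pools, and re-associating a profile with another blade leaves its identities unchanged, which mirrors the stateless-server idea described in this section.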

This automation of device configuration reduces the number of manual steps required to configure servers, Network Interface Cards (NICs), Host Bus Adapters (HBAs), and LAN and SAN switches. Figure 8 shows the Service profile which contains abstracted server state information, creating an environment to store unique information about a server.

Figure 8 Service Profiles

Cisco Nexus 5548UP Switch

The Cisco Nexus 5548UP is a 1RU 1 Gigabit and 10 Gigabit Ethernet switch offering up to 960 gigabits per second of throughput and scaling up to 48 ports. It offers 32 fixed unified ports, each usable as 1/10 Gigabit Ethernet enhanced Small Form-Factor Pluggable (SFP+) Ethernet/FCoE or as 1/2/4/8-Gbps native FC, and one expansion slot. The expansion slot supports a combination of Ethernet/FCoE and native FC ports. The Cisco Nexus 5548UP switch is shown in Figure 9.

Figure 9 Cisco Nexus 5548UP Switch

I/O Adapters

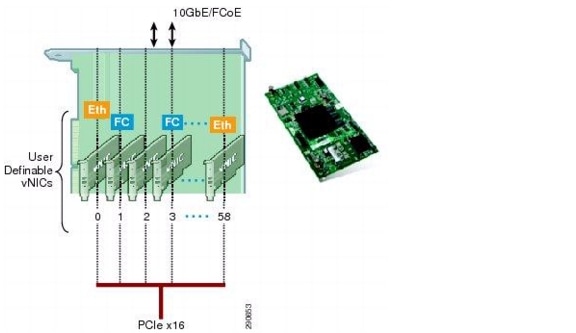

The Cisco UCS blade server has various Converged Network Adapters (CNA) options. The Cisco UCS M81KR Virtual Interface Card (VIC) option is used in this Cisco Validated Design.

The Cisco UCS M81KR VIC, also known as the Palo card, is unique to the Cisco UCS blade system. This mezzanine card adapter is designed around a custom ASIC that is specifically intended for VMware-based virtualized systems. It uses custom drivers for the virtualized HBA and 10-GE network interface card. As is the case with the other Cisco CNAs, the Cisco UCS M81KR VIC encapsulates Fibre Channel traffic within the 10-GE packets for delivery to the Fabric Extender and the Fabric Interconnect.

The Cisco UCS M81KR VIC provides the capability to create multiple VNICs (up to 128 in version 1.4) on the CNA. This allows complete I/O configurations to be provisioned in virtualized or non-virtualized environments using just-in-time provisioning, providing tremendous system flexibility and allowing consolidation of multiple physical adapters.

System security and manageability are improved by providing visibility and portability of network policies and security all the way to the virtual machines. Additional M81KR features, such as VN-Link technology and pass-through switching, minimize implementation overhead and complexity. The Cisco UCS M81KR VIC is shown in Figure 10.

Figure 10 Cisco UCS M81KR Virtual Interface Card

Cisco UCS Virtual Interface Card 1240

The Cisco UCS Virtual Interface Card (VIC) 1240 is a 4-port 10 Gigabit Ethernet, Fibre Channel over Ethernet (FCoE)-capable modular LAN on motherboard (mLOM) designed exclusively for the M3 generation of Cisco UCS B-Series Blade Servers. When used in combination with an optional Port Expander, the Cisco UCS VIC 1240 capabilities can be expanded to eight ports of 10 Gigabit Ethernet. The Cisco UCS VIC 1240 enables a policy-based, stateless, agile server infrastructure that can present up to 256 PCIe standards-compliant interfaces to the host that can be dynamically configured as either network interface cards (NICs) or host bus adapters (HBAs). In addition, the Cisco UCS VIC 1240 supports Cisco Data Center Virtual Machine Fabric Extender (VM-FEX) technology, which extends the Cisco UCS fabric interconnect ports to virtual machines, simplifying server virtualization deployment.

Cisco UCS Virtual Interface Card 1280

The Cisco UCS Virtual Interface Card (VIC) 1280 is an eight-port 10 Gigabit Ethernet, Fibre Channel over Ethernet (FCoE)-capable mezzanine card designed exclusively for Cisco UCS B-Series Blade Servers. The card enables a policy-based, stateless, agile server infrastructure that can present up to 256 PCIe standards-compliant interfaces to the host that can be dynamically configured as either network interface cards (NICs) or host bus adapters (HBAs). In addition, the Cisco UCS VIC 1280 supports Cisco Virtual Machine Fabric Extender (VM-FEX) technology, which extends the Cisco UCS Fabric Interconnect ports to virtual machines, simplifying server virtualization deployment.

EMC VNX Storage Family

The EMC VNX family of storage systems represents EMC's next generation of unified storage, optimized for virtual environments, while offering a cost-effective choice for deploying mission-critical enterprise applications such as Oracle JD Edwards. The massive virtualization and consolidation trends with servers demand a new storage technology that is dynamic and scalable. The EMC VNX series meets these requirements and offers several software and hardware features for optimally deploying enterprise applications. The EMC VNX family is shown in Figure 11.

Figure 11 The EMC VNX Family of Unified Storage Platforms

A key distinction of the VNX Series is support for both block and file-based external storage access over a variety of access protocols, including Fibre Channel (FC), iSCSI, FCoE, NFS, and CIFS network shared file access. Furthermore, data stored in one of these systems, whether accessed as block or file-based storage objects, is managed uniformly via Unisphere®, a web-based interface window. Additional information on Unisphere can be found on emc.com in the white paper titled: Introducing EMC Unisphere: A Common Midrange Element Manager.

EMC VNX Storage Platforms

The EMC VNX Series continues the EMC tradition of providing some of the highest data reliability and availability in the industry. In addition, its design includes a boost in performance and bandwidth to address sustained data access bandwidth rates. The new system design also places heavy emphasis on storage efficiency and density, as well as crucial green storage factors, such as a smaller data center footprint, lower power consumption, and improvements in power reporting.

The VNX has many features that help improve availability. Data protection is heightened by the lack of any single point of failure from the network to the actual disk drive in which the data is stored. The data resides on the VNX for block storage system, which delivers data availability, protection, and performance. The VNX uses RAID technology to protect the data at the drive level. All data paths to and from the network are redundant.

The basic design principle for the VNX Series storage platform includes the VNX for file front end and the VNX for block hardware for the storage processors (SPs) on the back end. The control flow is handled by the storage processors in block-only systems and by the control station in file-enabled systems. The VNX OE for block software has been designed to ensure that I/O is well balanced between the two SPs. At the time of provisioning, the odd-numbered LUNs are owned by one SP and the even-numbered LUNs are owned by the other. This results in LUNs being evenly distributed between the two SPs. If a failover occurs, LUNs trespass over to the alternate path/SP. EMC PowerPath® restores the "default" path once the error condition is recovered. This brings the LUNs back into a balanced state between the SPs. For more information on the VNX Series, see: http://www.emc.com/collateral/hardware/data-sheets/h8520-vnx-family-ds.pdf.
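The odd/even ownership rule, the trespass on failover, and the later restoration of the default path can be traced with a small model. This is an illustrative sketch only: which SP receives the odd-numbered LUNs is an assumption made for the example, and the function names are hypothetical rather than part of any EMC or PowerPath interface.

```python
from typing import Dict, Iterable

SP_A, SP_B = "SP A", "SP B"


def default_owner(lun_id: int) -> str:
    """Default owner of a LUN. Assumption: odd LUN IDs go to SP A, even to SP B."""
    return SP_A if lun_id % 2 else SP_B


def assign(lun_ids: Iterable[int]) -> Dict[int, str]:
    """Initial ownership at provisioning time."""
    return {lun: default_owner(lun) for lun in lun_ids}


def trespass(ownership: Dict[int, str], failed_sp: str) -> Dict[int, str]:
    """On failover, LUNs owned by the failed SP move to the surviving SP."""
    survivor = SP_B if failed_sp == SP_A else SP_A
    return {lun: (survivor if owner == failed_sp else owner)
            for lun, owner in ownership.items()}


def restore(ownership: Dict[int, str]) -> Dict[int, str]:
    """After recovery, every LUN returns to its default owner."""
    return {lun: default_owner(lun) for lun in ownership}


balanced = assign(range(8))              # LUN IDs 0 through 7
failed_over = trespass(balanced, SP_A)   # SP A fails, all LUNs served by SP B
recovered = restore(failed_over)         # default paths restored

for label, state in (("balanced", balanced),
                     ("failed over", failed_over),
                     ("recovered", recovered)):
    on_a = sum(1 for owner in state.values() if owner == SP_A)
    print(f"{label}: {on_a} LUNs on {SP_A}, {len(state) - on_a} on {SP_B}")
```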

Key efficiency features available with the VNX Series include FAST Cache and FAST VP.

FAST Cache Technology

FAST Cache is a storage performance optimization feature that provides immediate access to frequently accessed data. In traditional storage arrays, the DRAM caches are too small to maintain the hot data for a long period of time. Very few storage arrays give an option to non-disruptively expand DRAM cache, even if they support DRAM cache expansion. FAST Cache extends the available cache to customers by up to 2 TB using enterprise Flash drives. FAST Cache tracks the data temperature at 64 KB granularity and copies hot data to the Flash drives once its temperature reaches a certain threshold. After a data chunk gets copied to FAST Cache, the subsequent accesses to that chunk of data will be served at Flash latencies. Eventually, when the data temperature cools down, the data chunks get evicted from FAST Cache and will be replaced by newer hot data. FAST Cache uses a simple Least Recently Used (LRU) mechanism to evict the data chunks.
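The promote-then-evict behavior can be illustrated with a small Python model. This is a simplified sketch under stated assumptions, not the VNX implementation: the class name `FastCacheSketch` and the promotion threshold of three accesses are hypothetical, temperature is reduced to a plain access count, and eviction happens only when the cache is full.

```python
from collections import OrderedDict

CHUNK_SIZE = 64 * 1024  # data temperature is tracked at 64 KB granularity


class FastCacheSketch:
    def __init__(self, capacity_chunks, promote_threshold=3):
        self.capacity = capacity_chunks      # must be at least 1
        self.threshold = promote_threshold   # hypothetical value, not from the source
        self.hits = {}                       # chunk -> access count ("temperature")
        self.cache = OrderedDict()           # promoted chunks, least recently used first

    def access(self, byte_offset):
        """Record one I/O and return where it was served from: 'flash' or 'disk'."""
        chunk = byte_offset // CHUNK_SIZE
        if chunk in self.cache:
            self.cache.move_to_end(chunk)    # mark as most recently used
            return "flash"
        self.hits[chunk] = self.hits.get(chunk, 0) + 1
        if self.hits[chunk] >= self.threshold:
            if len(self.cache) >= self.capacity:
                self.cache.popitem(last=False)   # evict the least recently used chunk
            self.cache[chunk] = True             # copy the hot chunk to Flash
            del self.hits[chunk]
        return "disk"
```

In this model the access that crosses the threshold is still served from disk; only subsequent accesses to the promoted chunk are served at Flash latency, which matches the description above.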

FAST Cache is built on the premise that overall application latency can improve when the most frequently accessed data is kept on a relatively small but faster storage medium, such as Flash drives. FAST Cache identifies the data that is most frequently accessed at a given time and copies it to the Flash drives automatically and non-disruptively. The data movement is completely transparent to applications, thereby making this technology application-agnostic and management-free. For example, FAST Cache can be enabled or disabled on any storage pool simply by selecting or clearing the "FAST Cache" storage pool property in advanced settings.

FAST Cache can be selectively enabled on a few or all storage pools within a storage array, depending on application performance requirements and SLAs.

There are several distinctions to EMC FAST Cache:

•

It can be configured in read/write mode, which allows the data to be maintained on a faster medium for longer periods, irrespective of application read-to-write mix and data re-write rate.

•

FAST Cache is created on a persistent medium like Flash drives, which can be accessed by both the storage processors. In the event of a storage processor failure, the surviving storage processor can simply reload the cache rather than repopulating it from scratch by observing the data access patterns again, which is a differentiator.

•

Enabling FAST Cache is completely non-disruptive. It is as simple as selecting the Flash drives that are part of FAST Cache and does not require any array disruption or downtime.

•

Since FAST Cache is created on external Flash drives, adding FAST Cache will not consume any extra PCI-E slots inside the storage processor.

Figure 12 EMC FAST Cache

Additional information on EMC FAST Cache is documented in the white paper titled EMC FAST Cache—A Detailed Review, which is available at: http://www.emc.com/collateral/software/white-papers/h8046-clariion-celerra-unified-fast-cache-wp.pdf.

FAST VP

VNX FAST VP is a policy-based auto-tiering solution for enterprise applications. FAST VP operates at a granularity of 1 GB, referred to as a "slice." The goal of FAST VP is to efficiently utilize storage tiers to lower customers' TCO by tiering colder slices of data to high-capacity drives, such as NL-SAS, and to increase performance by keeping hotter slices of data on performance drives, such as Flash drives. This occurs automatically and transparently to the host environment. High locality of data is important to realize the benefits of FAST VP. When FAST VP relocates data, it will move the entire slice to the new storage tier. To successfully identify and move the correct slices, FAST VP automatically collects and analyzes statistics prior to relocating data. Customers can initiate the relocation of slices manually or automatically by using a configurable, automated scheduler that can be accessed from the Unisphere management tool. The multi-tiered storage pool allows FAST VP to fully utilize all the storage tiers: Flash, SAS, and NL-SAS. The creation of a storage pool allows for the aggregation of multiple RAID groups, using different storage tiers, into one object. The LUNs created out of the storage pool can be either thickly or thinly provisioned. These "pool LUNs" are no longer bound to a single storage tier. Instead, they can be spread across different storage tiers within the same storage pool. If you create a storage pool with one tier (Flash, SAS, or NL-SAS), then FAST VP has no impact on the performance of the system. To operate FAST VP, you need at least two tiers.
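As an illustration of the relocation idea, the following Python sketch ranks slices by temperature and fills the fastest tier first. It is not the FAST VP algorithm itself: the function name `plan_relocation`, the numeric temperatures, and the tier capacities are hypothetical, and real relocation also depends on the tiering policy and schedule.

```python
def plan_relocation(slice_temps, tier_capacity):
    """Map each 1 GB slice to a tier, placing the hottest slices on the fastest tier.

    slice_temps:   dict of slice id -> temperature (higher means hotter)
    tier_capacity: list of (tier name, capacity in slices), fastest tier first
    """
    ranked = sorted(slice_temps, key=slice_temps.get, reverse=True)
    placement = {}
    position = 0
    for tier, capacity in tier_capacity:
        # Whole slices are moved; a slice is never split across tiers.
        for slice_id in ranked[position:position + capacity]:
            placement[slice_id] = tier
        position += capacity
    if position < len(ranked):
        raise ValueError("pool is too small for the slices supplied")
    return placement
```

With temperatures `{"s0": 5, "s1": 90, "s2": 40, "s3": 1}` and tiers `[("Flash", 1), ("SAS", 2), ("NL-SAS", 4)]`, the hottest slice `s1` lands on Flash, `s2` and `s0` on SAS, and `s3` on NL-SAS. With a single tier, every slice maps to that tier, which mirrors the statement that FAST VP needs at least two tiers to have any effect.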

Additional information on EMC FAST VP for Unified Storage is documented in the white paper titled EMC FAST VP for Unified Storage System - A Detailed Review, see: http://www.emc.com/collateral/software/white-papers/h8058-fast-vp-unified-storage-wp.pdf.

FAST Cache and FAST VP are offered in a FAST Suite package as part of the VNX Total Efficiency Pack. This pack includes the FAST Suite which automatically optimizes for the highest system performance and lowest storage cost simultaneously. In addition, this pack includes the Security and Compliance Suite which keeps data safe from changes, deletions, and malicious activity. For additional information on this Total Efficiency Pack as well as other offerings such as the Total Protection Pack, see: http://www.emc.com/collateral/software/data-sheet/h8509-vnx-software-suites-ds.pdf.

EMC PowerPath

EMC PowerPath is host-based software that provides automated data path management and load-balancing capabilities for heterogeneous server, network, and storage deployed in physical and virtual environments. A critical IT challenge is being able to provide predictable, consistent application availability and performance across a diverse collection of platforms. PowerPath is designed to address those challenges, helping IT meet service-level agreements and scale-out mission-critical applications.

This software supports up to 32 paths from multiple HBAs (iSCSI TCP/IP Offload Engines [TOEs] or FCoE CNAs) to multiple storage ports when the multipathing license is applied. Without the multipathing license, PowerPath will use only a single port of one adapter (PowerPath SE). In this mode, the single active port can be zoned to a maximum of two storage ports. This configuration provides storage port failover only, not host-based load balancing or host-based failover. It is supported, but not recommended, if the customer wants true I/O load balancing at the host and also HBA failover.

PowerPath balances the I/O load on a host-by-host basis. It maintains statistics on all I/O for all paths. For each I/O request, PowerPath intelligently chooses the most underutilized path available. The path is chosen based on statistics and heuristics, and on the load-balancing and failover policy in effect.

In addition to the load balancing capability, PowerPath also automates path failover and recovery for high availability. If a path fails, I/O is redirected to another viable path within the path set. This redirection is transparent to the application, which is not aware of the error on the initial path. This avoids sending I/O errors to the application. Important features of PowerPath include standardized path management, optimized load balancing, and automated I/O path failover and recovery.
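The combination of load balancing and transparent failover can be modeled with a short Python sketch. This is a conceptual illustration, not PowerPath's actual policy: the class `PathSet`, the path names, and the use of in-flight I/O count as the only statistic are assumptions made for the example.

```python
class PathSet:
    def __init__(self, path_names):
        self.outstanding = {name: 0 for name in path_names}  # in-flight I/Os per path
        self.alive = {name: True for name in path_names}

    def choose(self):
        """Return the live path with the fewest in-flight I/Os."""
        live = [p for p in self.outstanding if self.alive[p]]
        if not live:
            raise RuntimeError("no viable path in the path set")
        return min(live, key=self.outstanding.get)

    def submit(self):
        """Send one I/O down the least-loaded live path and return that path."""
        path = self.choose()
        self.outstanding[path] += 1
        return path

    def complete(self, path):
        """Record the completion of one I/O on the given path."""
        self.outstanding[path] -= 1

    def fail(self, path):
        """Mark a path dead and reissue its in-flight I/Os on the remaining paths."""
        self.alive[path] = False
        pending, self.outstanding[path] = self.outstanding[path], 0
        return [self.submit() for _ in range(pending)]

    def recover(self, path):
        """Return a repaired path to service."""
        self.alive[path] = True
```

The caller of `submit()` never sees an error when a path fails; `fail()` quietly redirects pending I/O, which corresponds to the redirection being transparent to the application.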

For more information on PowerPath, see: http://www.emc.com/collateral/software/data-sheet/l751-powerpath-ve-multipathing-ds.pdf.

Oracle JD Edwards

Oracle's JD Edwards is the ERP solution of choice for many small and medium-sized businesses (SMBs). JDE E1 offers an attractive combination of a large number of easy-to-deploy and easy-to-use ERP applications across multiple industries. These applications include Supply Chain Management (SCM), Human Capital Management (HCM), Supplier Relationship Management (SRM), Financials, and Customer Relationship Management (CRM). The various components of JD Edwards are elaborated in Figure 13.

Figure 13 JD Edwards Components

HTML Server

The HTML Server is the interface of JDE to the outside world. It allows JDE ERP users to connect to their applications from their browsers via the Web server. It is one of the tiers of the standard three-tier JDE architecture. The HTML Server is not just an interface; it contains logic and runs Web Services that process some of the data, so that only the result set is sent over the WAN to the end users.

Enterprise Server

The Enterprise Server hosts the JDE applications that execute all the basic functions of the JDE ERP system, such as transaction processing services, batch services, data replication, and security. All time stamp and distributed processing also happens at this layer. Multiple Enterprise Servers can be added for scalability, especially when Electronic Software Updates (ESUs) need to be applied to one server while the other is online.

Database Server

The Database Server in a JDE environment is used to host the data. It is simply a data repository and is not used to process JDE logic. The JDE Database Server can run any of the supported databases, such as Oracle, SQL Server, DB2, or Access. Since this server does not run any applications, the only licensing required for it is the database license; hence the server should be sized correctly. If this server has excess capacity, UBEs can be run on it to improve their performance.

Deployment Server

The Deployment Server is essentially a centralized software (C code) repository for deploying software packages to all the servers and workstations that are part of the Cisco JDE solution. Although the Deployment Server is not a business-critical server, it is a critical piece of the JDE architecture, without which the installation, upgrade, development, or modification of packages (code) or reports would be impossible.

Server Manager

The Server Manager is a key JDE software component that helps customers deploy the latest JDE tools software onto the various JDE servers that are registered with it. The Server Manager is web-based and enables life cycle management of JDE products such as the Enterprise Server and HTML Server through a web-based console. It has built-in abilities for configuration management, and it maintains an audit history of changes made to the components and configuration of the various JDE server software.

Batch Server

Batch processes (UBEs) are background processes requiring no operator intervention or interactivity. One of the most important batch processes in JDE is the MRP process. Batch processes can be scheduled using a Process Scheduler, which runs on the Batch Server. JDE customers running a high volume of reports often split the load on their Enterprise Server such that they have one or more Batch Servers which handle the high-volume reporting (UBE) loads, thereby freeing up their Enterprise Server to handle interactive user loads more efficiently. This leads to better interactive application and UBE performance due to the expanded scaling afforded by the additional hardware.

Design Considerations for Oracle JD Edwards Implementation on Cisco Unified Computing System

This design document provides best practices for designing JD Edwards environments and demonstrates several advantages for organizations choosing the Cisco UCS platform; the practices are applicable to organizations of all sizes. Several options need to be considered for the JD Edwards HTML Server and the JDE E1 application server for interactive and batch (UBE) processes and, most importantly, for the scalability and ease of deployment and maintenance of the hardware installed for the JD Edwards deployment.

Scalable Architecture Using Cisco UCS Servers

An obvious immediate benefit with Cisco is a single trusted vendor providing all the components needed for a JD Edwards deployment, with the ability to provide a scalable platform, dynamic provisioning, failover with minimal downtime, and reliability.

Some of the capabilities offered by Cisco Unified Computing System which complement the scalable architecture include the following:

•

Dynamic provisioning and service profiles: Cisco UCS Manager supports service profiles, which contain abstracted server states, creating a stateless environment. It implements role-based and policy-based management focused on service profiles and templates. These mechanisms fully provision one or many servers and their network connectivity in minutes, rather than hours or days. This can be very valuable in JD Edwards environments, where new servers, or even a whole new farm for specific development activities, may need to be provisioned on short notice.

•

Cisco Unified Fabric and Fabric Interconnects: The Cisco Unified Fabric leads to a dramatic reduction in network adapters, blade-server switches, and cabling by passing all network and storage traffic over one cable to the parent Fabric Interconnects, where it can be processed and managed centrally. This improves performance and reduces the number of devices that need to be powered, cooled, secured, and managed. The 6200 series offer key features and benefits, including:

–

High performance Unified Fabric with line-rate, low-latency, lossless 10 Gigabit Ethernet, and Fibre Channel over Ethernet (FCoE).

–

Centralized unified management with Cisco UCS Manager Software.

–

Virtual machine optimized services with the support for VN-Link technologies.

To accurately design JD Edwards on any hardware configuration, we need to understand the characteristics of each tier in a JDE deployment with respect to CPU, memory, and I/O operations. For instance, the JDE Enterprise Server for interactive workloads is both CPU and memory intensive but low on disk utilization, whereas the database server is more memory and disk intensive than CPU intensive. Some of the important characteristics to consider when designing JD Edwards on Cisco UCS servers are elaborated in Table 1.

Table 1 JD Edwards Design Considerations

Boot from SAN

Boot from SAN is a critical feature which helps to achieve stateless computing in which there is no static binding between a physical server and the OS / applications hosted on that server. The OS is installed on a SAN LUN and is booted using the service profile. When the service profile is moved to another server, the server policy and the PWWN of the HBAs will also move along with the service profile. The new server takes the identity of the old server and looks identical to the old server.

The following are the benefits of boot from SAN:

•

Reduce Server Footprint - Boot from SAN eliminates the need for each server to have its own direct-attached disk (internal disk) which is a potential point of failure. The following are the advantages of diskless servers:

–

Require less physical space

–

Require less power

–

Require fewer hardware components

–

Less expensive

•

Disaster Recovery- Boot information and production data stored on a local SAN can be replicated to another SAN at a remote disaster recovery site. When server functionality at the primary site goes down in the event of a disaster, the remote site can take over with a minimal downtime.

•

Recovery from server failures- Recovery from server failures is simplified in a SAN environment. Data can be quickly recovered with the help of server snapshots, and mirrors of a failed server in a SAN environment. This greatly reduces the time required for server recovery.

•

High Availability- A typical data center is highly redundant in nature with redundant paths, redundant disks and redundant storage controllers. The operating system images are stored on SAN disks which eliminates potential problems caused due to mechanical failure of a local disk.

•

Rapid Redeployment- Businesses that experience temporary high production workloads can take advantage of SAN technologies to clone the boot image and distribute the image to multiple servers for rapid deployment. Such servers may only need to be in production for hours or days and can be readily removed when the production need has been met. Highly efficient deployment of boot images makes temporary server usage highly cost effective.

•

Centralized Image Management: When operating system images are stored on SAN disks, all upgrades and fixes can be managed at a centralized location. Servers can readily access changes made to disks in a storage array.

With boot from SAN, the server image resides on the SAN and the server communicates with the SAN through a Host Bus Adapter (HBA). The HBA BIOS contains instructions that enable the server to find the boot disk. After the Power-On Self-Test (POST), the server hardware fetches the boot device designated in the hardware BIOS settings. Once the hardware detects the boot device, it follows the regular boot process.

EMC VNX5300—Block Storage for Oracle JD Edwards

Oracle JD Edwards data is traditionally stored in any of the supported RDBMS, such as SQL Server, using block storage. In our current implementation, the EMC VNX5300 storage system is used for block storage. The EMC VNX5300's capability of block access is leveraged in this solution.

The VNX 5300 configured for this JD Edwards workload utilizes a DPE with a 15 x 3.5" drive form factor. It includes four onboard 8 Gb/s Fibre Channel ports and two 6 Gb/s SAS ports for back-end connectivity on each storage processor. Each SP in the enclosure has a power supply module and two UltraFlex I/O module slots. Both I/O module slots may be populated on this model. Any slots without I/O modules will be populated with blanks (to ensure proper airflow). The front of the DPE houses the first tray of drives. The VNX 5300 configured for this JDE workload uses a mix of SAS drives, SSDs, and NL-SAS drives, with LUNs carved out of heterogeneous storage pools that leverage FAST VP to meet both the storage capacity and the performance demands required. FAST Cache is enabled to ensure faster response times for both read and write operations. The storage connectivity provided to the Cisco UCS environment consists of Fibre Channel connectivity from the onboard 8 Gb/s FC connections on each storage processor.

Sizing Guidelines for Oracle JD Edwards

Sizing ERP deployments is a complicated process, and getting it right depends a lot on the inputs provided by customers on how they intend to use the ERP system and what their priorities are in terms of end user as well as corporate expectations.

Answers to some of the common questions related to ERP sizing help size the system for optimal performance. These include the number of concurrent interactive users using the system, the total number of ERP end users, the kind of applications that the end users will access, and the number and type of reports generated during peak activity. Analyzing the demand that the JDE system is expected to handle during different time periods in the fiscal year is also necessary for proper sizing.

The JD Edwards configuration used in the present deployment was geared to handle a very high workload of end users running heavy SRM interactive applications as well as a high number of batch processes. A physical three-tier solution, with the Enterprise, HTML Web, and Database servers all residing on different physical machines, was used to provide an optimal solution in terms of end user response times as well as batch throughput.

The following sections briefly describe the sizing aspects of each tier of the three tier JD Edwards deployment architecture.

JDE HTML Server

The JDE HTML server serves end user interactive application requests from JDE users. The JDE HTML server loads the application forms and requests services from the JDE Enterprise server for application processing based on form input. Some very lightweight application logic also runs on the JDE HTML server. Client requests do result in significant load on the JDE HTML servers, since the JDE HTML servers make and manage database as well as network connections. The JDE HTML server's utilization of CPU and memory depends heavily on the number of interactive users using the server. Disk utilization is not a major factor in the sizing of the JDE HTML server.

Typically, on the Windows Server, the number of interactive users per JVM should be capped to around 250 interactive users for optimal performance.

JDE Enterprise Server

The JDE Enterprise Server acts as the central point for serving requests for application logic. The JDE EnterpriseOne clients make requests for application processing and, depending upon the JDE environment used as well as user preferences, the input data is processed and returned to the client. The Call Object kernels running on the JDE Enterprise Server are responsible for processing end user application requests, and the Security kernel is responsible for authenticating the end users. The application processing is CPU intensive, and the CPU frequency and number of cores available to the Enterprise Server play a large part in the performance and throughput of the system. As the number of interactive user requests grows, the memory requirements of the JDE Enterprise Server also increase. This is also true for the batch (UBE) reports that the JDE Enterprise Server processes.

The typical sizing recommendation on Windows Server is between 8 and 12 users per call object kernel and about 1 security kernel for every 50 interactive users. The in-memory cache usage of call object kernels increases with user load, so it is typical for the memory usage of individual call object kernels to grow as more users are serviced by them.
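The rules of thumb given for the HTML and Enterprise Server tiers reduce to simple arithmetic, sketched below in Python. The function name `size_jde_tiers` is hypothetical, the default of 10 users per call object kernel is simply the midpoint of the 8 to 12 range, and the result is a starting estimate rather than a substitute for a proper sizing exercise.

```python
import math


def size_jde_tiers(interactive_users, users_per_jvm=250,
                   users_per_call_object_kernel=10,
                   users_per_security_kernel=50):
    """Estimate JVM and kernel counts from the number of interactive users."""
    return {
        # HTML server: cap each JVM at roughly 250 interactive users
        "html_jvms": math.ceil(interactive_users / users_per_jvm),
        # Enterprise server: 8 to 12 users per call object kernel
        "call_object_kernels": math.ceil(
            interactive_users / users_per_call_object_kernel),
        # Enterprise server: about 1 security kernel per 50 interactive users
        "security_kernels": math.ceil(
            interactive_users / users_per_security_kernel),
    }
```

For 1000 interactive users this yields 4 JVMs, 100 call object kernels, and 20 security kernels; tightening the ratio to 8 users per kernel raises the call object kernel count to 125.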

JDE Database Server

The JDE Database Server services the data requests made by both the JDE Enterprise and JDE HTML Servers. The JDE Database Server sizing depends on the type of reports being processed as well as the interactive user loads. Some JDE reports can be very Disk I/O intensive and depending on the kind of reports being processed, careful consideration needs to be given to disk layout. If the SQL Server database has ample memory available to it and the memory is utilized to cache SQL Server data it can benefit application performance by reducing disk I/O operations. The JDE Database server typically benefits from having faster disk and high memory allocation. The suggested minimum server configuration, to deploy Oracle JD Edwards on Cisco UCS with Microsoft Windows and SQL Server 2008 R2 is detailed in Table 2.

Table 2 Oracle JD Edwards on Cisco Unified Computing System—Suggested Minimum Server Configuration

A typical Cisco UCS server configuration in ideal lab conditions for small, medium and large servers, is elaborated in Figure 14. The configuration may vary depending on customer workload and technology landscape.

Figure 14 JD Edwards on Cisco Unified Computing System—Sizing Chart

Oracle JD Edwards Deployment Architecture on Cisco Unified Computing System

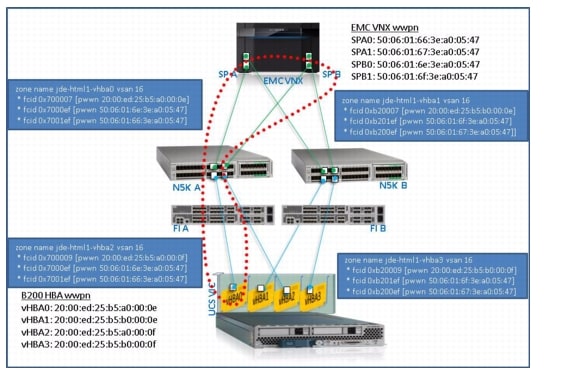

The deployment architecture of Oracle JD Edwards on Cisco UCS is elaborated in Figure 15.

Figure 15 Deployment Architecture of JD Edwards on Cisco Unified Computing System

The configuration presented in this document is based on the following main components (Table 3).

Table 3 Configuration Components

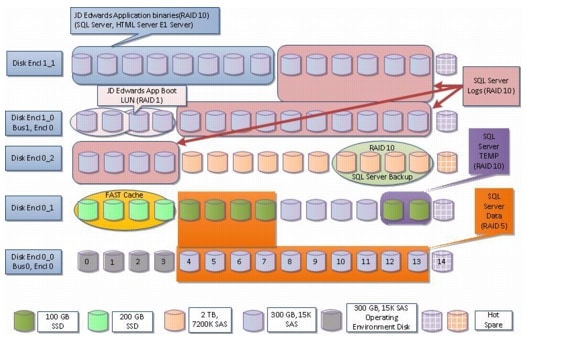

The disk layout carved on the EMC VNX5300 for the JD Edwards deployment on Cisco Unified Computing System is elaborated in Figure 16.

Figure 16 JD Edwards Disk Layout on EMC VNX5300

Infrastructure Setup

This section elaborates on the infrastructure setup details used to deploy Oracle JD Edwards on Cisco Unified Computing System with EMC VNX5300. The high-level workflow to configure the system is elaborated in Figure 17.

Figure 17 Workflow

Cisco Unified Computing System Configuration

This section details the Cisco UCS configuration that is done as part of the infrastructure build for deployment of Oracle JD Edwards. The racking, power, and installation of the chassis are described in the install guide (http://www.cisco.com/en/US/docs/unified_computing/ucs/hw/chassis/install/ucs5108_install.html) and are beyond the scope of this document. More details on each step can be found in the following documents:

•

Cisco Unified Computing System CLI Configuration guide

•

Cisco UCS Manager GUI configuration guide http://www.cisco.com/en/US/docs/unified_computing/ucs/sw/gui/config/guide/2.0/b_UCSM_GUI_Configuration_Guide_2_0.html

Validate Installed Firmware

To log into Cisco UCS Manager, perform the following steps:

1.

Open the Web browser with the Cisco UCS 6248 Fabric Interconnect cluster address.

2.

Click Launch to download the Cisco UCS Manager software.

3.

You will be prompted to accept security certificates; accept as necessary.

4.

In the login page, enter admin for both username and password text boxes.

5.

Click Login to access the Cisco UCS Manager software.

6.

Click Equipment and then Installed Firmware

7.

Verify that the firmware is installed. The firmware that existed during the deployment was 2.0(1w). For more information on Firmware Management, refer to http://www.cisco.com/en/US/docs/unified_computing/ucs/sw/gui/config/guide/2.0/b_UCSM_GUI_Configuration_Guide_2_0_chapter_01010.html

Verification: The Installed Firmware should be displayed as 2.0(1w) as shown in Figure 18.

Figure 18 Firmware Verification

Chassis Discovery Policy

To edit the chassis discovery policy, perform the following steps:

1.

Navigate to the Equipment tab in the left pane of the Cisco UCS Manager.

2.

In the right pane, click the Policies tab.

3.

Under Global Policies, change the Chassis Discovery Policy to 4-link.

4.

Click Save Changes in the bottom right corner.

Verification: The chassis discovery policy configured to 4-link is displayed as shown in Figure 19.

Figure 19 Chassis Discovery Policy

Enabling Network Components

To enable Fibre Channel, server, and uplink ports, perform the following steps:

1.

Select the Equipment tab on the top left of the Cisco UCS Manager window.

2.

Select Equipment>Fabric Interconnects >Fabric Interconnect A (primary) >Fixed Module.

3.

Expand the Unconfigured Ethernet Ports section.

4.

Select ports 1-4 that are connected to the Cisco UCS chassis and right-click on them and select Configure as Server Port.

5.

Click Yes to confirm, and then click OK to continue.

6.

Select ports 29 and 30. These ports are connected to the Cisco Nexus 5548 switches. Right-click them and select Configure as Uplink Port.

7.

Click Yes to confirm, and then click OK to continue.

8.

Configure port 23 and port 24 as Fiber Channel. For more information on the same, refer to http://www.cisco.com/en/US/docs/unified_computing/ucs/sw/gui/config/guide/2.0/b_UCSM_GUI_Configuration_Guide_2_0_chapter_0101.html

9.

Select Equipment > Fabric Interconnects >Fabric Interconnect A (primary).

10.

Right-click and select Set FC End-Host Mode to put the Fabric Interconnect in Fibre Channel End-Host Mode.

11.

Click Yes to confirm.

12.

A message displays stating that the "Fiber Channel End-Host Mode has been set and the switch will reboot." Click OK to continue. Wait until the Cisco UCS Manager is available again and log back into the interface.

13.

Re-execute Step 2 to Step 12 for Fabric Interconnect B

Verification: Check that all configured links show their status as "up", as shown in Figure 20 for Fabric Interconnect A. This can also be verified on the Cisco Nexus switch side by running "show int status"; all the ports connected to the Cisco UCS fabric interconnects should be shown as "up."

Note

The FC ports are enabled since the VSAN ID is set to default "1." Create the VSAN ID for the present deployment and configure the same on the Nexus 5548 switch, as described in "Configuring Ports 21-24 as FC Ports", and then re-enable the FC ports with the specific VSAN ID.

Figure 20 Configured Links on Fabric Interconnect A

Creating MAC Address Pools

To create MAC Address pools, perform the following steps:

1.

Select the LAN tab on the left of the Cisco UCS Manager window.

2.

Select Pools > root.

Note

Two MAC address pools will be created, one for fabric A and one for fabric B.

3.

Right-click MAC Pools under the root organization and select Create MAC Pool to create the MAC address pool for fabric A.

4.

Enter JDE-MAC-FIA for the name of the MAC pool for fabric A.

5.

Enter a description of the MAC pool in the description text box. This is optional; you can choose to omit the description.

6.

Click Next to continue.

7.

Click Add to add the MAC address pool.

8.

Specify a starting MAC address for fabric A.

Note

The default is acceptable, but it is recommended to change the pool address to suit the deployment and to differentiate between the MAC addresses for Fabric A and Fabric B. Currently, it is configured as DE:25:B5:0A:00:00.

9.

Specify the size as 24 for the MAC address pool for fabric A.

10.

Click OK.

11.

Click Finish.

12.

A pop-up message box appears; click OK to save changes.

13.

Right-click MAC Pools under the root organization and select Create MAC Pool to create the MAC address pool for fabric B.

14.

Enter JDE-MAC-FIB for the name of the MAC pool for fabric B.

15.

Enter a description of the MAC pool in the description text box. This is optional; you can choose to omit the description.

16.

Click Next to continue.

17.

Click Add to add the MAC address pool.

18.

Specify a starting MAC address for fabric B.

Note

The default is acceptable, but it is recommended to change the pool address to suit the deployment and to differentiate between the MAC addresses for Fabric A and Fabric B. Currently, it is configured as DE:25:B5:0B:00:00.

19.

Specify the size as 24 for the MAC address pool for fabric B.

20.

Click OK.

21.

Click Finish.

22.

A pop-up message box appears; click OK to save changes and exit.

Verification: Select LAN tab > Pools > root. Select MAC Pools and it expands to show the MAC pools created. On the right pane, details of the MAC pools are displayed as shown in Figure 21.

Figure 21 MAC Pool Details
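To see which addresses a pool of size 24 covers, the block can be enumerated with a short Python helper. This is only an illustrative calculation performed outside Cisco UCS Manager; the function name `mac_block` is hypothetical, and the starting addresses are the ones quoted in the notes above.

```python
def mac_block(start, size):
    """Return `size` consecutive MAC addresses beginning at `start`."""
    first = int(start.replace(":", ""), 16)
    if first + size - 1 > 0xFFFFFFFFFFFF:
        raise ValueError("block runs past the end of the MAC address space")
    macs = []
    for value in range(first, first + size):
        raw = f"{value:012X}"  # 12 hexadecimal digits, zero padded
        macs.append(":".join(raw[i:i + 2] for i in range(0, 12, 2)))
    return macs


fabric_a = mac_block("DE:25:B5:0A:00:00", 24)
fabric_b = mac_block("DE:25:B5:0B:00:00", 24)
```

The fabric A block runs from DE:25:B5:0A:00:00 to DE:25:B5:0A:00:17, and the two blocks do not overlap, so the fourth octet (0A or 0B) is enough to tell which fabric an address belongs to.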

Creating WWPN Pools

To create WWPN pools, perform the following steps:

1.

Select the SAN tab at the top left of the Cisco UCS Manager window.

2.

Select Pools > root.

Note

Two WWPN pools will be created, one for fabric A and one for fabric B.

3.

Right-click WWPN Pools, and select Create WWPN Pool.

4.

Enter JDE-WWPN-A as the name for the WWPN pool for fabric A.

5.

Enter a description of the WWPN pool in the description text box. This is optional; you can choose to omit the description.

6.

Click Next.

7.

Click Add to add a block of WWPNs.

8.

Enter 20:00:ED:25:B5:A0:00:00 as the starting WWPN in the block for fabric A.

Note

It is recommended to change the WWPN prefix for the deployment. This makes it easy to identify whether a WWPN originates from Fabric A or Fabric B.

9.

Set the size of the WWPN block to 24.

10.

Click OK to continue.

11.

Click Finish to create the WWPN pool.

12.

Click OK to save changes.

13.

Right-click the WWPN Pools and select Create WWPN Pool.

14.

Enter JDE-WWPN-B as the name for the WWPN pool for fabric B.

15.

Enter a description of the WWPN pool in the description text box. This is optional; you can choose to omit the description.

16.

Click Next.

17.

Click Add to add a block of WWPNs.

18.

Enter 20:00:ED:25:B5:B0:00:00 as the starting WWPN in the block for fabric B.

19.

Set the size of the WWPN block to 24.

20.

Click OK to continue.

21.

Click Finish to create the WWPN pool.

22.

Click OK to save changes and exit.

Verification: The new WWPN pools, each with a block size of 24, are shown in Figure 22.

Figure 22 WWPN Pool Details
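Because the two pools start at different prefixes, a WWPN seen on the SAN (for example, during zoning) can be traced back to its fabric. The Python sketch below shows one way to do that lookup; the names `POOLS` and `fabric_of` are hypothetical, and the ranges are the starting WWPNs and block size of 24 entered in the steps above.

```python
# Starting WWPN and block size for each fabric, as entered in the steps above.
POOLS = {
    "A": ("20:00:ED:25:B5:A0:00:00", 24),
    "B": ("20:00:ED:25:B5:B0:00:00", 24),
}


def wwpn_to_int(wwpn):
    """Convert a colon-separated WWPN to an integer for range comparison."""
    return int(wwpn.replace(":", ""), 16)


def fabric_of(wwpn):
    """Return 'A' or 'B' if the WWPN falls inside a pool block, else None."""
    value = wwpn_to_int(wwpn)
    for fabric, (start, size) in POOLS.items():
        first = wwpn_to_int(start)
        if first <= value < first + size:
            return fabric
    return None
```

For example, `fabric_of("20:00:ED:25:B5:A0:00:05")` returns `"A"`, while a WWPN just past the end of a block, such as `20:00:ED:25:B5:B0:00:18`, returns `None`.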

Creating WWNN Pools

To create WWNN pools, perform the following steps:

1.

Select the SAN tab at the top left of the Cisco UCS Manager window.

2.

Select Pools > root.

3.

Right-click on WWNN Pools and select Create WWNN Pool.

4.

Enter "JDE-WWNN" as the name of the WWNN pool.

5.

Enter a description of the WWNN pool in the description text box. This is optional; you can choose to omit the description.

6.

Click Next to continue.

7.

A pop-up window "Add WWN Blocks" appears; click the Add button at the bottom of the page.

8.

A pop-up window "Create WWN Blocks" appears; set the size of the WWNN block to "24".

9.

Click OK to continue.

10.

Click Finish.

11.

Click OK to save changes and exit.

Verification: The new name with the 24 block size displays in the right panel when the WWNN pool is selected on the left panel, as shown in Figure 23.

Figure 23 WWNN Pool Details

Creating UUID Suffix Pools

To create UUID suffix pools, perform the following steps:

1.

Select the Servers tab on the top left of the Cisco UCS Manager window.

2.

Select Pools > root.

3.

Right-click UUID Suffix Pools and select Create UUID Suffix Pool.

4.

Enter JDE-UUID as the name of the UUID suffix pool.

5.

Enter a description of the UUID suffix pool in the description text box. This is optional; you can choose to omit the description.

6.

Prefix is set to Derived by default. Do not change the default setting.

7.

Click Next to continue.

8.

A pop-up window Add UUID Blocks appears. Click Add to add a block of UUID suffixes.

9.

The From field is set to its default value. Do not change it.

10.

Set the size of the UUID suffix pool to 24.

11.

Click OK to continue.

12.

Click Finish to create the UUID suffix pool.

13.

Click OK to save changes and exit.

Verification: Make sure that the UUID suffix pool created is displayed as shown in Figure 24.

Figure 24 UUID Suffix Pool Details

Creating VLANs

To create VLANs, perform the following steps:

1.

Select the LAN tab on the left of the Cisco UCS Manager window.

Note

Three VLANs will be created: management traffic, data traffic, and Oracle RAC database inter-node private traffic.

2.

Right-click the VLANs in the tree, and click Create VLAN(s).

3.

Enter MGMT-VLAN as the name of the VLAN. This VLAN will be used for management traffic.

4.

Keep the option Common/Global selected for the scope of the VLAN.

5.

Enter a VLAN ID for the management VLAN (for example, 809). Keep the sharing type as None.

6.

Repeat the preceding steps to create a VLAN for application data traffic (for example, VLAN ID 810).

Verification: Select LAN tab > LAN Cloud > VLANs. Open VLANs and all of the created VLANs are displayed. The right pane provides the details of all individual VLANs as shown in Figure 25.

Figure 25 Details of Created VLANS

Creating Uplink Ports Channels

To create uplink port channels to Nexus 5548 switches, perform the following steps:

1.

Select the LAN tab on the left of the Cisco UCS Manager window.

Note

Two port channels are created, one from fabric A to both Cisco Nexus 5548 switches and one from fabric B to both Cisco Nexus 5548 switches.

2.

Expand the Fabric A tree.

3.

Right-click Port Channels and click Create Port Channel.

4.

Enter 101 as the unique ID of the port channel.

5.

Enter JDE Port A as the name of the port channel.

6.

Click Next.

7.

Select ports 1/15 and 1/16 to be added to the port channel.

8.

Click >> to add the ports to the Port Channel.

9.

Click Finish to create the port channel.

10.

A pop-up message box appears; click OK to continue.

11.

In the left pane, click the newly created port channel.

12.

In the right pane under Actions, choose the Enable Port Channel option.

13.

In the pop-up box, click Yes, and then click OK to save changes.

14.

Expand the Fabric B tree.

15.

Right-click Port Channels and click Create Port Channel.

16.

Enter 103 as the unique ID of the port channel.

17.

Enter JDE Port B as the name of the port channel.

18.

Click Next.

19.

Select ports 1/15 and 1/16 to be added to the Port Channel.

20.

Click >> to add the ports to the Port Channel.

21.

Click Finish to create the port channel.

22.

A pop-up message box appears; click OK to continue.

23.

In the left pane, click the newly created port channel.

24.

In the right pane under Actions, choose the Enable Port Channel option.

25.

In the pop-up box, click Yes, and then click OK to save changes.

Verification: Select LAN tab > LAN Cloud. In the right pane, select LAN Uplinks and expand the listed port channels, as shown in Figure 26.

Note

For the fabric interconnect port channels to become enabled, the vPC must first be configured on the Cisco Nexus 5548 switches, as described in Creating and Configuring Virtual Port Channel (VPC).

Figure 26 Port Channel Details

Creating VSANs

To create VSANs, perform the following steps:

1.

Select the SAN tab at the top left of the Cisco UCS Manager window.

2.

Expand the SAN cloud tree.

3.

Right-click VSANs and click Create VSAN.

4.

Enter VSAN16 as the VSAN name.

5.

Enter 16 as the VSAN ID.

6.

Enter 16 as the FCoE VLAN ID.

7.

Click OK to create the VSANs.

Verification: Select SAN tab > SAN Cloud > VSANs on the left panel. The right panel displays the created VSANs as shown in Figure 27.

Figure 27 VSAN Details
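
Because the VSAN is assigned an FCoE VLAN ID, it can help to check the ID plan before entering it in Cisco UCS Manager. The following is a minimal sketch, under the assumption that an FCoE VLAN ID should not reuse an ID already assigned to an Ethernet VLAN and that all IDs fall in the 802.1Q range of 1 to 4094. The function check_id_plan and the name DATA-VLAN are hypothetical; the guide names only MGMT-VLAN and VSAN16, and the IDs 809 and 810 are the examples from the VLAN steps.

```python
from typing import Dict, List


def check_id_plan(vlans: Dict[str, int], fcoe_vlans: Dict[str, int]) -> List[str]:
    """Return a list of problems found in a VLAN / FCoE VLAN ID plan."""
    problems: List[str] = []

    # Every ID must be a usable 802.1Q VLAN ID.
    for name, vlan_id in list(vlans.items()) + list(fcoe_vlans.items()):
        if not 1 <= vlan_id <= 4094:
            problems.append(f"{name}: ID {vlan_id} is outside 1-4094")

    # Ethernet VLAN IDs must be unique among themselves.
    seen: Dict[int, str] = {}
    for name, vlan_id in vlans.items():
        if vlan_id in seen:
            problems.append(f"{name} and {seen[vlan_id]} share VLAN ID {vlan_id}")
        else:
            seen[vlan_id] = name

    # Assumption: an FCoE VLAN ID must not collide with an Ethernet VLAN ID.
    for name, vlan_id in fcoe_vlans.items():
        if vlan_id in seen:
            problems.append(
                f"FCoE VLAN for {name} overlaps {seen[vlan_id]} (ID {vlan_id})"
            )
    return problems


if __name__ == "__main__":
    ethernet = {"MGMT-VLAN": 809, "DATA-VLAN": 810}  # DATA-VLAN is a hypothetical name
    fcoe = {"VSAN16": 16}
    issues = check_id_plan(ethernet, fcoe)
    print(issues if issues else "ID plan has no conflicts")
```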

Cisco UCS Service Profile Configuration

An important aspect of configuring a physical server in a Cisco UCS 5108 chassis is developing a service profile through Cisco UCS Manager. A service profile is an extension of the virtual machine abstraction applied to physical servers. The definition has been expanded to include elements of the environment that span the entire data center, encapsulating the server identity (LAN and SAN addressing, I/O configurations, firmware versions, boot order, network VLAN, physical port, and quality-of-service [QoS] policies) in logical "service profiles" that can be dynamically created and associated with any physical server in the system within minutes rather than hours or days. The association of service profiles with physical servers is performed as a simple, single operation. It enables migration of identities between servers in the environment without requiring any physical configuration changes, and it facilitates rapid bare-metal provisioning of replacements for failed servers.

Service profiles can be created in several ways:

•

Manually: Create a new service profile using the Cisco UCS Manager GUI.

•

From a Template: Create a service profile from a template.

•

By Cloning: Cloning a service profile creates a replica of a service profile. Cloning is equivalent to creating a template from the service profile and then creating a service profile from that template to associate with a server.

In the present scenario, we created an initial service profile template and then instantiated service profiles from that template.

•

A service profile template parameterizes the UIDs that differentiate one instance of an otherwise identical server from another. Templates can be categorized into two types: initial and updating.

•

Initial Template: The initial template is used to create a new server from a service profile with UIDs, but after the server is deployed, there is no linkage between the server and the template, so changes to the template will not propagate to the server, and all changes to items defined by the template must be made individually to each server deployed with the initial template.

•

Updating Template: An updating template maintains a link between the template and the deployed servers, and changes to the template (most likely to be firmware revisions) cascade to the servers deployed with that template on a schedule determined by the administrator.

•

Service profiles, templates, and other management data are stored in high-speed persistent storage on the Cisco Unified Computing System fabric interconnects, with mirroring between fault-tolerant pairs of fabric interconnects.
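
The difference between the two template types described above can be illustrated with a small model. The following is a conceptual sketch only; it does not use or represent the Cisco UCS Manager API, and the class names, the firmware setting, and its values are hypothetical. It also applies changes immediately, whereas an updating template in Cisco UCS Manager applies them on a schedule determined by the administrator.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Profile:
    """A service profile: a named copy of the settings it was created with."""
    name: str
    settings: Dict[str, str]


@dataclass
class Template:
    """A service profile template of kind 'initial' or 'updating'."""
    kind: str
    settings: Dict[str, str]
    bound: List[Profile] = field(default_factory=list)

    def __post_init__(self) -> None:
        if self.kind not in ("initial", "updating"):
            raise ValueError(f"unknown template kind: {self.kind!r}")

    def instantiate(self, name: str) -> Profile:
        """Create a profile from the template's current settings."""
        profile = Profile(name, dict(self.settings))
        # Only an updating template keeps a link to the profiles it creates.
        if self.kind == "updating":
            self.bound.append(profile)
        return profile

    def change(self, key: str, value: str) -> None:
        """Change a template setting and cascade it to linked profiles."""
        self.settings[key] = value
        for profile in self.bound:
            profile.settings[key] = value


if __name__ == "__main__":
    for kind in ("initial", "updating"):
        template = Template(kind, {"firmware": "v1"})  # hypothetical setting
        profile = template.instantiate(f"jde-{kind}")
        template.change("firmware", "v2")
        print(kind, "->", profile.settings["firmware"])
```

In this model, the profile created from the initial template keeps its original setting after the template changes, while the profile created from the updating template follows the template.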

Creating Service Profile Templates

To create service profile templates, perform the following steps:

Step 1

Select the Servers tab at the top left of the Cisco UCS Manager window.

Step 2

Select Service Profile Templates > root. In the right window, click Create Service Profile Template under the Actions tab.

Step 3

The Create Service Profile Template window appears.

1.

Identify Service Profile Template Section

a.

Enter the name of the service profile template as JD Edwards Template.

b.

Select the type as Initial Template.

c.

In the UUID section, select JDE-UUID as the UUID pool.

d.

Click Next to continue to the next section.

2.

Storage Section

a.

Keep default for the Local Storage option.

b.

Select the option Expert for the field "How would you like to configure SAN connectivity".

c.

In the WWNN Assignment field, select JDE-WWNN.

d.

Click Add at the bottom of the window to add vHBAs to the template.

Note

Four vHBAs need to be created. The first pair of vHBAs is used for the SAN boot LUN, and the second pair is used for the JD Edwards application.

e.

The Create vHBA window appears. Make sure that the vHBA is vhba0.

f.

In the WWPN Assignment field, select JDE-WWPN-A.

g.

Ensure that the Fabric ID is set to A.

h.

In the Select VSAN field, select VSAN16.

i.

Click OK to save changes.

j.

Click Add at the bottom of the window to add vHBAs to the template.

k.

The Create vHBA window appears. Ensure that the vHBA is vhba1.

l.

In the WWPN Assignment field, select JDE-WWPN-B.

m.

Ensure that the Fabric ID is set to B.

n.

In the Select VSAN field, select VSAN16.

o.

Click OK to save changes.

p.

Click Add at the bottom of the window to add vHBAs to the template.

q.

Repeat the preceding steps to create vhba2 (with Fabric ID A) and vhba3 (with Fabric ID B).

r.

Make sure that both the vHBAs are created.

s.

Click Next to continue.

3.

Network Section

a.

Keep the default setting for the Dynamic vNIC Connection Policy field.

b.

Select the option Expert for the field "How would you like to configure LAN connectivity".

c.

Click Add to add a vNIC to the template.

d.

The Create vNIC window appears. Enter the name of the vNIC as eth0.

e.

In the MAC Address Assignment field, select JDE-MAC-FIA.

f.

Select Fabric ID as Fabric A.

g.

Select the appropriate VLAN (810) in the VLANs section.

h.

Click OK to save changes.

i.

Click Add to add a vNIC to the template.

j.

The Create vNIC window appears. Enter the name of the vNIC as eth1.

k.

In the MAC Address Assignment field, select JDE-MAC-FIB.

l.

Select Fabric ID as Fabric B.

m.

Select the appropriate VLAN (810) in the VLANs section. The VLAN was already created in the Creating VLANs section.

n.

Click OK to add the vNIC to the template.

o.

Ensure that both vNICs are created.

p.

Click Next to continue.

4.

vNIC/vHBA Placement Section

a.

Keep the default setting, Let System Perform Placement, in the Select Placement field.

b.

Ensure that all the vHBAs are created.

c.

Click Next to continue.

5.

Server Boot Order Section

a.

Do not select a boot policy. You will attach a boot policy when you create a service profile from the service profile template.

6.

Maintenance Policy, Server Assignment, and Operational Policies Sections

a.

Select default settings for all these policies.

b.

Custom policies can be defined for each of the three cases. For instance, in the operational policies, you can select a BIOS policy that disables Quiet Boot.

c.

Click Finish to complete the creation of Service profile template.
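
The adapter layout defined by the template, and how many service profiles the 24-address pools can supply, can be summarized with a short calculation. The following is a minimal sketch under stated assumptions: the steps above do not name the WWPN pool for vhba2 and vhba3, so the sketch assumes they draw from the same per-fabric pools as vhba0 and vhba1, and it assumes each MAC pool holds 24 addresses. The function max_profiles is hypothetical.

```python
from collections import Counter
from typing import Dict, List

# vHBAs from the Storage section. The pool for vhba2/vhba3 is an assumption.
VHBAS: List[Dict[str, str]] = [
    {"name": "vhba0", "fabric": "A", "pool": "JDE-WWPN-A", "vsan": "VSAN16"},
    {"name": "vhba1", "fabric": "B", "pool": "JDE-WWPN-B", "vsan": "VSAN16"},
    {"name": "vhba2", "fabric": "A", "pool": "JDE-WWPN-A", "vsan": "VSAN16"},
    {"name": "vhba3", "fabric": "B", "pool": "JDE-WWPN-B", "vsan": "VSAN16"},
]

# vNICs from the Network section.
VNICS: List[Dict[str, str]] = [
    {"name": "eth0", "fabric": "A", "pool": "JDE-MAC-FIA", "vlan": "810"},
    {"name": "eth1", "fabric": "B", "pool": "JDE-MAC-FIB", "vlan": "810"},
]

POOL_SIZE = 24  # block size used for the pools in this guide


def max_profiles(adapters: List[Dict[str, str]], pool_size: int) -> int:
    """Return how many profiles the most heavily used pool can supply."""
    per_profile = Counter(adapter["pool"] for adapter in adapters)
    return min(pool_size // count for count in per_profile.values())


if __name__ == "__main__":
    print("Profiles supported by WWPN pools:", max_profiles(VHBAS, POOL_SIZE))
    print("Profiles supported by MAC pools:", max_profiles(VNICS, POOL_SIZE))
```

Under these assumptions, each service profile consumes two WWPNs per fabric, so the WWPN pools become the limiting resource before the MAC pools do.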