About Remote Leaf Switches in the ACI Fabric

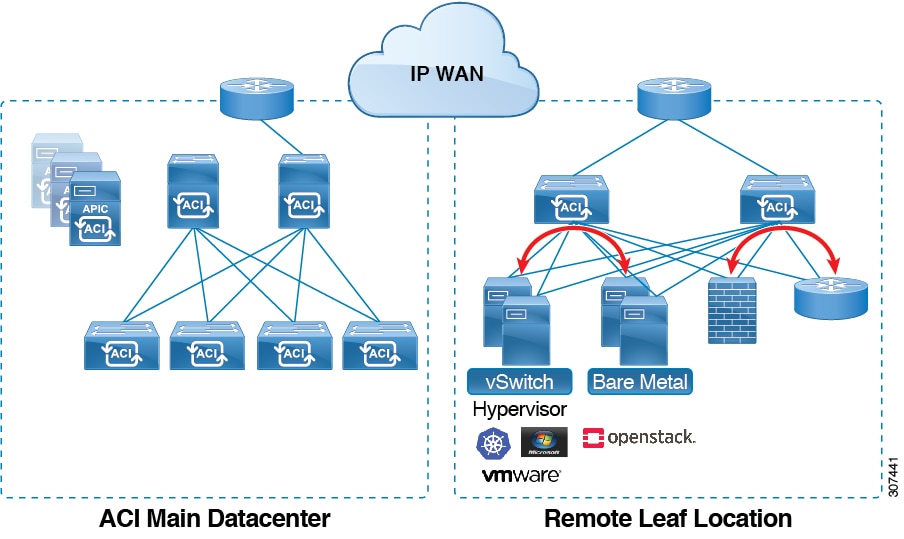

With an ACI fabric deployed, you can extend ACI services and APIC management to remote data centers with Cisco ACI leaf switches that have no local spine switch or APIC attached.

The remote leaf switches are added to an existing pod in the fabric. All policies deployed in the main data center are deployed in the remote switches, which behave like local leaf switches belonging to the pod. In this topology, all unicast traffic is through VXLAN over Layer 3. Layer 2 broadcast, unknown unicast, and multicast (BUM) messages are sent using Head End Replication (HER) tunnels without the use of Layer 3 multicast (bidirectional PIM) over the WAN. Any traffic that requires use of the spine switch proxy is forwarded to the main data center.

The APIC system discovers the remote leaf switches when they come up. From that time, they can be managed through APIC, as part of the fabric.

Characteristics of Remote Leaf Switch Behavior in Release 4.0(1)

Starting in Release 4.0(1), remote leaf switch behavior takes on the following characteristics:

- Reduction of WAN bandwidth use by decoupling services from the spine proxy:

  - PBR: For local PBR devices or PBR devices behind a vPC, local switching is used without going to the spine proxy. For PBR devices on orphan ports on a peer remote leaf, an RL-vPC tunnel is used. This is true whether or not the spine link to the main DC is functional.

  - ERSPAN: For peer destination EPGs, an RL-vPC tunnel is used. EPGs on local orphan or vPC ports use local switching to the destination EPG. This is true whether or not the spine link to the main DC is functional.

  - Shared Services: Packets do not use the spine-proxy path, which reduces WAN bandwidth consumption.

  - Inter-VRF traffic is forwarded through an upstream router and is not placed on the spine.

  - This enhancement applies only to a remote leaf vPC pair. For communication across remote leaf pairs, the spine proxy is still used.

- Resolution of unknown L3 endpoints (through the ToR glean process) in a remote leaf location when the spine proxy is not reachable.
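The PBR and ERSPAN rules above amount to a small decision table. The following is a minimal Python sketch of that table, not APIC code. The names `Attachment` and `forwarding_path` are hypothetical and exist only for illustration.

```python
from enum import Enum


class Attachment(Enum):
    """Where the PBR device or ERSPAN destination EPG is attached (hypothetical labels)."""
    LOCAL_ORPHAN = "local orphan port"
    VPC = "vPC port"
    PEER_ORPHAN = "orphan port on the peer remote leaf"


def forwarding_path(attachment: Attachment) -> str:
    """Return the path described for a remote leaf vPC pair in Release 4.0(1).

    Per the text, the result is the same whether or not the spine link
    to the main DC is functional, so link state is not an input here.
    """
    if attachment is Attachment.PEER_ORPHAN:
        return "RL-vPC tunnel"
    return "local switching"


for attachment in Attachment:
    print(f"{attachment.value}: {forwarding_path(attachment)}")
```

In neither case does the traffic go to the spine proxy, which is where the WAN bandwidth saving comes from.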

Characteristics of Remote Leaf Switch Behavior in Release 4.1(2)

Before Release 4.1(2), all local switching traffic (within the remote leaf vPC peers) at the remote leaf location is switched directly between endpoints, whether physical or virtual, as shown in the following figure.

In addition, before Release 4.1(2), traffic between the remote leaf switch vPC pairs, either within a remote location or between remote locations, is forwarded to the spine switches in the ACI main data center pod, as shown in the following figure.

Starting in Release 4.1(2), direct traffic forwarding between remote leaf switches in different remote locations is supported. This functionality offers a level of redundancy and availability in the connections between remote locations, as shown in the following figure.

Starting in Release 4.1(2), remote leaf switch behavior also takes on the following characteristics:

- Starting with Release 4.1(2), with direct traffic forwarding, when a spine switch fails within a single-pod configuration, the following occurs:

  - Local switching continues to function for existing and new endpoint traffic between the remote leaf switch vPC peers, as shown in the "Local Switching Traffic: Prior to Release 4.1(2)" figure above.

  - For traffic between remote leaf switches across remote locations:

    - New endpoint traffic fails because the remote leaf switch-to-spine switch tunnel is down. New endpoint details are not synced from the remote leaf switch to the spine switch, so the other remote leaf switch pairs in the same or different locations cannot download the new endpoint information from COOP.

    - For unidirectional traffic, existing remote endpoints age out after 300 seconds, so traffic fails after that point. Bidirectional traffic within a remote leaf site (between remote leaf vPC pairs) in a pod is refreshed and continues to function. Note that bidirectional traffic to remote locations (remote leaf switches) is affected, because COOP expires the remote endpoints after a timeout of 900 seconds.

  - For shared services (inter-VRF), bidirectional traffic between endpoints belonging to remote leaf switches attached to two different remote locations in the same pod fails after the remote leaf switch COOP endpoint age-out time (900 seconds). This is because the remote leaf switch-to-spine COOP session is down in this situation. However, shared services traffic between endpoints belonging to remote leaf switches attached to two different pods fails after 30 seconds, which is the COOP fast-aging time.

  - L3Out-to-L3Out communication cannot continue because the BGP session to the spine switches is down.

- When there is remote leaf direct unidirectional traffic, where the traffic is sourced from one remote leaf switch and destined to another remote leaf switch (which is not the vPC peer of the source), there is a traffic loss on the order of milliseconds every time the remote endpoint (XR EP) timeout of 300 seconds occurs.

- With remote leaf switches in an ACI Multi-Site configuration, all traffic continues from the remote leaf switch to the other pods and remote locations, even with a spine switch failure, because traffic flows through an alternate available pod in this situation.
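The single-pod spine failure behavior above can be summarized as a lookup from traffic type to the time it keeps working. This is an illustrative Python sketch only: the flow labels and the function `seconds_until_failure` are hypothetical, and encoding "fails" as 0 and "continues" as `None` is a modeling choice made here, not something the product exposes.

```python
from typing import Dict, Optional

# Timer values quoted in the text (seconds).
REMOTE_EP_AGE_S = 300   # remote endpoint (XR EP) timeout
COOP_EP_AGE_S = 900     # remote leaf switch COOP endpoint age-out
COOP_FAST_AGE_S = 30    # COOP fast-aging time

# None: traffic continues. 0: the text describes the traffic as failing
# with no grace period. Otherwise: seconds before traffic fails.
GRACE_PERIOD_S: Dict[str, Optional[int]] = {
    "local switching between vPC peers": None,
    "new endpoint, across remote locations": 0,
    "unidirectional, existing remote endpoint": REMOTE_EP_AGE_S,
    "bidirectional, within a remote leaf site": None,
    "bidirectional, to remote locations": COOP_EP_AGE_S,
    "shared services, same pod": COOP_EP_AGE_S,
    "shared services, different pods": COOP_FAST_AGE_S,
    "L3Out-to-L3Out": 0,
}


def seconds_until_failure(flow: str) -> Optional[int]:
    """Look up how long a flow type survives a spine failure (single pod)."""
    return GRACE_PERIOD_S[flow]


for flow, grace in GRACE_PERIOD_S.items():
    outcome = "continues" if grace is None else f"fails after {grace} s"
    print(f"{flow}: {outcome}")
```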

10 Mbps Bandwidth Support in IPN for Remote Leaf Switches

You might have situations where most of the data traffic from the remote leaf switches is local and the Inter-Pod Network (IPN) is needed only for management purposes. In these situations, you may not need a 100 Mbps IPN. To support these environments, starting with Release 4.2(4), a minimum IPN bandwidth of 10 Mbps is supported.

To support this, the following requirements should be met:

- The IPN path is used only for managing remote leaf switches (management functions such as upgrades and downgrades, discovery, COOP, and policy pushes).

- Configure the IPN with QoS to prioritize control and management plane traffic between the Cisco ACI datacenter and remote leaf switch pairs, based on the information provided in the section "Creating DSCP Translation Policy Using Cisco APIC GUI".

- All traffic from the Cisco ACI datacenter and remote leaf switches goes through the local L3Out.

- The EPG or bridge domain is not stretched between the remote leaf switch and the ACI main datacenter.

- You should pre-download software images on the remote leaf switches to reduce upgrade time.
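As a planning aid, the requirements above can be written as a simple checklist. The following Python sketch is hypothetical: `RemoteSite`, its field names, and `unmet_requirements` are invented for illustration and do not correspond to any APIC object or API.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RemoteSite:
    """Hypothetical description of one remote leaf location."""
    ipn_used_only_for_management: bool
    ipn_qos_prioritizes_control_and_mgmt: bool
    traffic_uses_local_l3out: bool
    epg_or_bd_stretched_to_main_dc: bool
    images_pre_downloaded: bool


def unmet_requirements(site: RemoteSite) -> List[str]:
    """List the 10 Mbps IPN requirements that the site does not meet."""
    problems = []
    if not site.ipn_used_only_for_management:
        problems.append("IPN path must be used only for management functions")
    if not site.ipn_qos_prioritizes_control_and_mgmt:
        problems.append("IPN QoS must prioritize control and management traffic")
    if not site.traffic_uses_local_l3out:
        problems.append("traffic must go through the local L3Out")
    if site.epg_or_bd_stretched_to_main_dc:
        problems.append("EPG or bridge domain must not be stretched to the main datacenter")
    if not site.images_pre_downloaded:
        problems.append("software images should be pre-downloaded")
    return problems


site = RemoteSite(True, True, True, False, True)
print(unmet_requirements(site) or "site meets the listed requirements")
```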

The following figure shows a graphical representation of this feature.

Dot1q Tunnel Support on Remote Leaf Switches

In some situations, a co-location provider might be hosting multiple customers, where each customer is using thousands of VLANs per remote leaf switch pair. Starting with Release 4.2(4), support is available to create an 802.1Q tunnel between the remote leaf switch and the ACI main datacenter, which provides the flexibility to map multiple VLANs into a single 802.1Q tunnel, thereby reducing the EPG scale requirement.

The following figure shows a graphical representation of this feature.

Create this 802.1Q tunnel between the remote leaf switch and the ACI main datacenter using the instructions provided in the "802.1Q Tunnels" chapter in the Cisco APIC Layer 2 Networking Configuration Guide, located in the Cisco APIC documentation landing page.

You can configure remote leaf switches in the APIC GUI, either with or without a wizard, or use the REST API or the NX-OS style CLI.

About Remote Leaf Back-to-Back Connection

Beginning with Cisco APIC Release 5.2(1), you can connect remote leaf switch pairs directly to each other ("back-to-back") by fabric links to carry local east-west traffic. An example of a scenario with significant east-west data traffic is unicast traffic from an EPG to an L3Out in a vPC pair, as shown in the figure below.

Only traffic between non-vPC connected hosts traverses the back-to-back links. A vPC connected host can send traffic locally from the remote leaf switch nearest the destination, so such traffic will not use the back-to-back links.

When uplinks and a back-to-back connection are active between a pair of remote leaf switches, the back-to-back links are preferred for east-west traffic, while the uplinks carry traffic to and from any other remote leaf switches and switches in the main datacenter.

Although the remote leaf architecture normally calls for a spine switch or the IPN router to route traffic between proximately located remote leaf switches, a direct back-to-back leaf connection can save bandwidth on the upstream device.
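The link preference described in this section can be sketched as a small selection function. This is a simplified Python model under stated assumptions, not switch behavior extracted from the product: the function `select_link` and its parameters are hypothetical, and treating a flow as local when either host is vPC-connected is an interpretation of the text above.

```python
def select_link(dst_in_same_pair: bool,
                any_host_vpc_attached: bool,
                back_to_back_up: bool) -> str:
    """Pick the link type a flow uses when leaving the local remote leaf switch."""
    if not dst_in_same_pair:
        # Traffic to other remote leaf switches or the main datacenter.
        return "uplink"
    if any_host_vpc_attached:
        # vPC-connected hosts are served locally; no inter-switch link is needed.
        return "local"
    if back_to_back_up:
        # Preferred for east-west traffic between non-vPC hosts in the pair.
        return "back-to-back"
    # Without a back-to-back connection, the upstream spine or IPN router routes it.
    return "uplink"


print(select_link(dst_in_same_pair=True, any_host_vpc_attached=False, back_to_back_up=True))
```

Only the third branch consumes back-to-back bandwidth, which matches the statement that only traffic between non-vPC connected hosts traverses those links.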

Guidelines and Limitations for Remote Leaf Back-to-Back Connection

- The back-to-back links between the remote leaf switches must be direct, with no intermediate devices.

- The back-to-back connection can use fabric ports or front panel ports that are converted to fabric ports.

- Remote leaf switches can be connected with back-to-back links only in pairs. Interconnecting more than two remote leaf switches by back-to-back links is not supported.

- When a pair of remote leaf switches is connected back-to-back and one of the pair loses its uplink connectivity, that switch remains reachable through the other remote leaf switch over the back-to-back link. In this case, traffic from the main datacenter is also carried on the back-to-back link.

- PTP and SyncE are not supported on back-to-back links.
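The pairs-only guideline can be checked against a planned cabling list before deployment. The following Python sketch assumes a hypothetical input format, a list of `(switch_a, switch_b)` tuples with one tuple per back-to-back link; the function name and the switch names are illustrative.

```python
from collections import defaultdict
from typing import Iterable, List, Tuple


def leaves_with_multiple_peers(links: Iterable[Tuple[str, str]]) -> List[str]:
    """Return remote leaf switches that have back-to-back links to more than one peer."""
    peers = defaultdict(set)
    for a, b in links:
        peers[a].add(b)
        peers[b].add(a)
    return sorted(leaf for leaf, others in peers.items() if len(others) > 1)


# Two parallel links between the same pair are fine; a chain of three is not.
print(leaves_with_multiple_peers([("rl-101", "rl-102"), ("rl-101", "rl-102")]))
print(leaves_with_multiple_peers([("rl-101", "rl-102"), ("rl-102", "rl-103")]))
```

An empty result means every switch in the plan has at most one back-to-back peer.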

Deploying the Remote Leaf Back-to-Back Connection

In releases before Cisco APIC Release 5.2(1), a back-to-back connection between remote leaf switch fabric ports would result in a wiring error. With Cisco APIC Release 5.2(1), such a connection is recognized automatically in either of the following situations:

- The connection is made between two remote leaf vPC peers.

- The connection is made between remote leaf switches that are not members of any vPC in a single remote location.

In these cases, no specific configuration is necessary.
