Overview

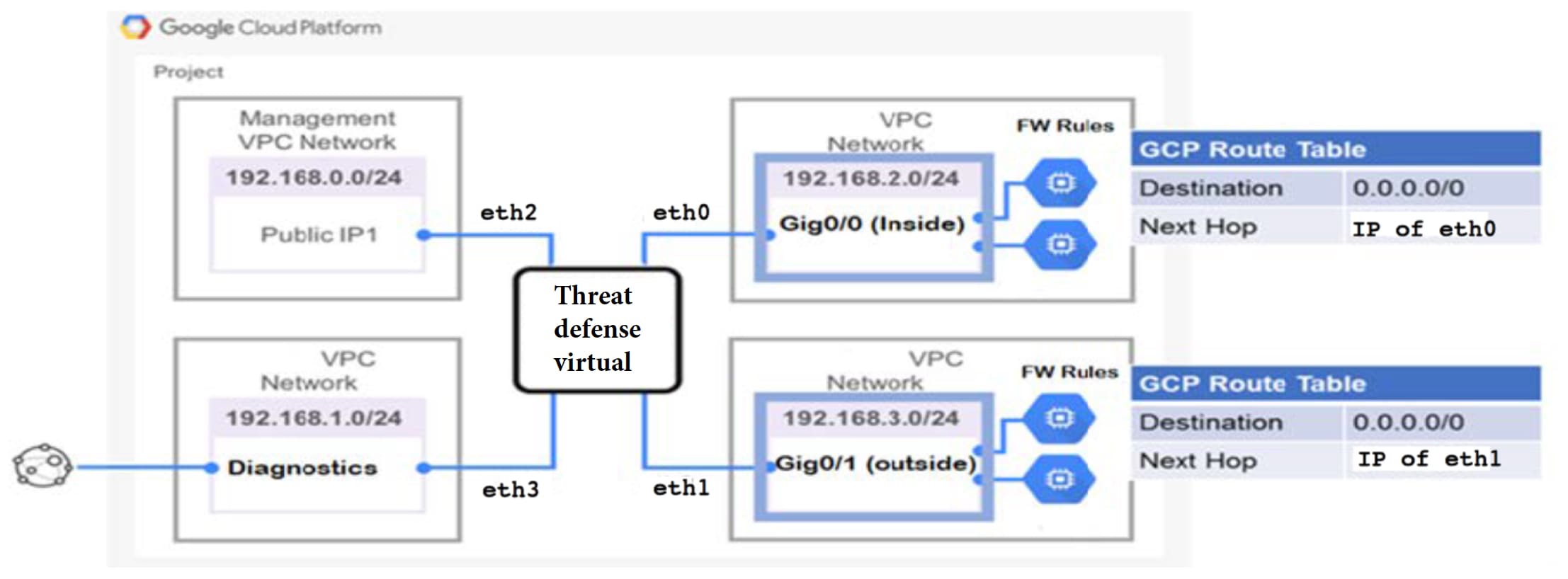

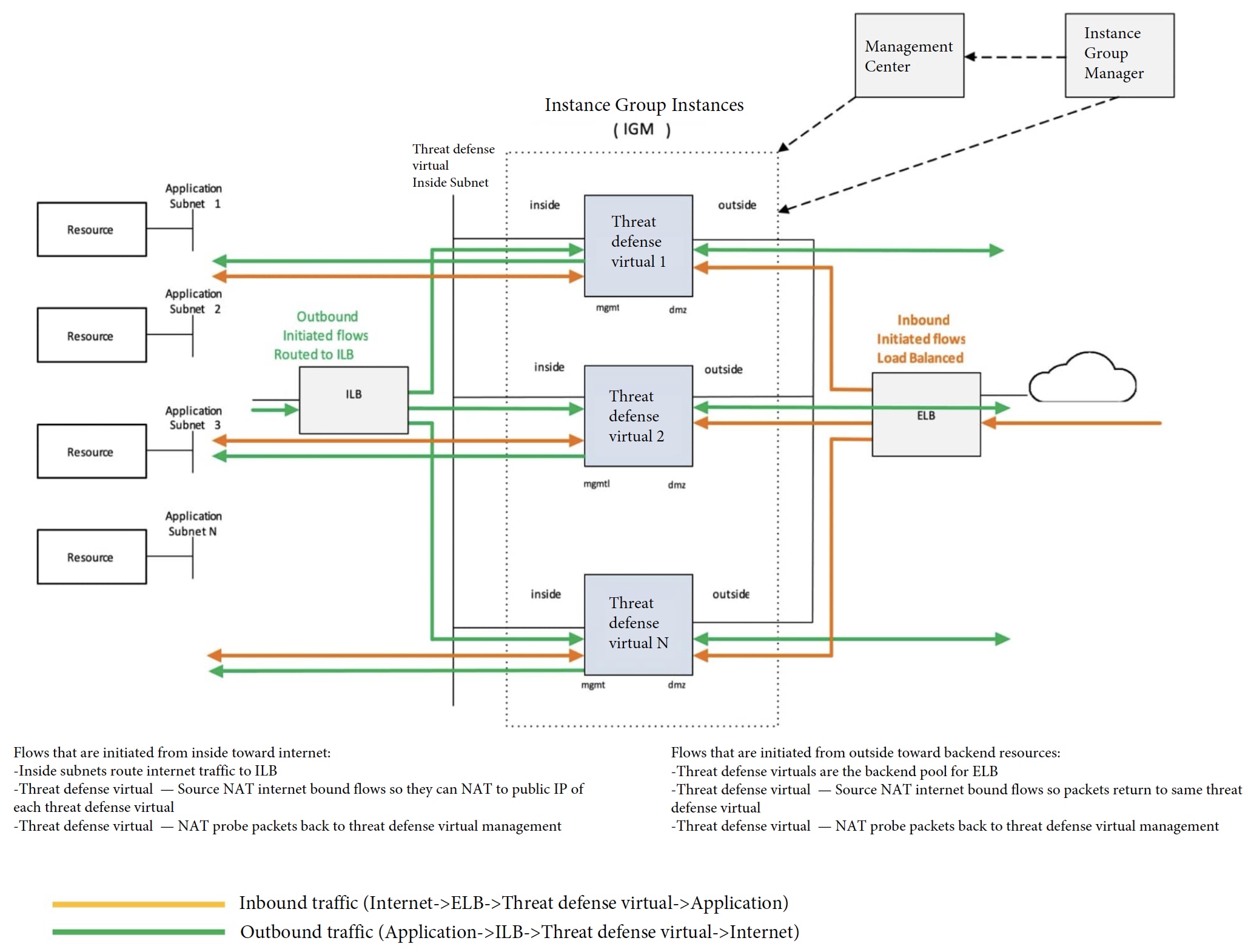

The threat defense virtual runs the same software as the physical Secure Firewall Threat Defense (formerly Firepower Threat Defense), delivering proven security functionality in a virtual form factor. The threat defense virtual can be deployed in the public Google Cloud Platform (GCP), where it can be configured to protect virtual and physical data center workloads that expand, contract, or shift location over time.

System Requirements

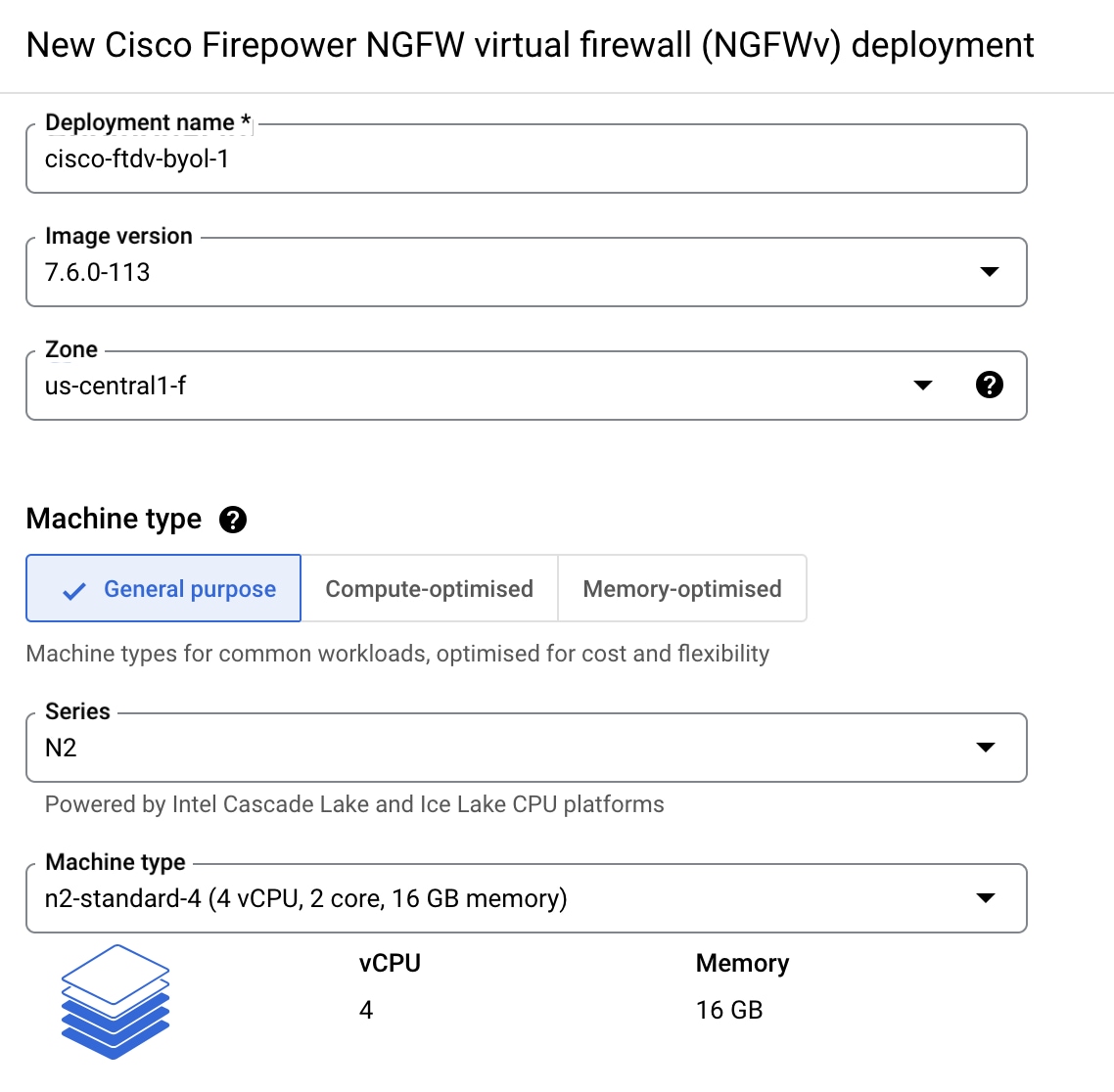

Select the Google Cloud machine type and size that meet your threat defense virtual needs. Currently, the threat defense virtual supports compute-optimized machine types and general-purpose machine types (standard, high-memory, and high-CPU).

Note: Supported machine types may change without notice.

| Compute-Optimized Machine Types | vCPUs | RAM (GB) | vNICs |
|---|---|---|---|
| c2-standard-4 | 4 | 16 | 4 |
| c2-standard-8 | 8 | 32 | 8 |
| c2-standard-16 | 16 | 64 | 8 |

| General Purpose Machine Types | vCPUs | RAM (GB) | vNICs |
|---|---|---|---|
| n1-standard-4 | 4 | 15 | 4 |
| n1-standard-8 | 8 | 30 | 8 |
| n1-standard-16 | 16 | 60 | 8 |
| n2-standard-4 | 4 | 16 | 4 |
| n2-standard-8 | 8 | 32 | 8 |
| n2-standard-16 | 16 | 64 | 8 |
| n2-highmem-4 | 4 | 32 | 4 |
| n2-highmem-8 | 8 | 64 | 8 |

-

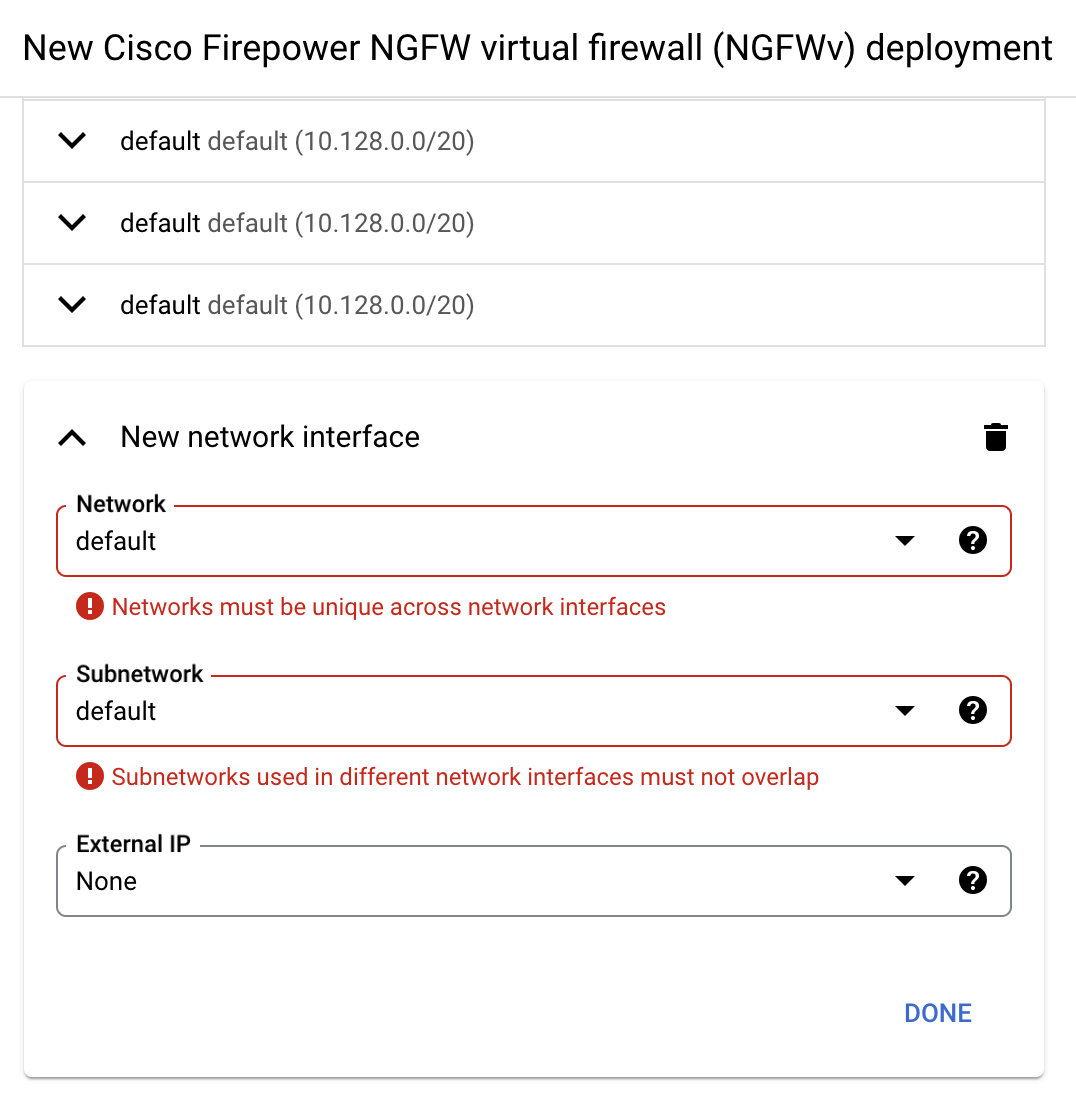

The threat defense virtual requires a minimum of 4 interfaces.

-

The maximum supported vCPUs is 16.
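The machine type tables and the interface and vCPU limits above can be captured as data, which makes it easy to sanity-check a planned deployment before launching it. The following is a minimal Python sketch, assuming the table values above are current (they may change without notice). `MachineType`, `check`, and `smallest_fit` are hypothetical helpers, and ranking candidates by vCPU count and then RAM is an illustrative choice, not a Cisco sizing recommendation.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class MachineType:
    name: str
    vcpus: int
    ram_gb: int
    vnics: int


# Supported machine types copied from the tables above (may change without notice).
SUPPORTED = [
    MachineType("c2-standard-4", 4, 16, 4),
    MachineType("c2-standard-8", 8, 32, 8),
    MachineType("c2-standard-16", 16, 64, 8),
    MachineType("n1-standard-4", 4, 15, 4),
    MachineType("n1-standard-8", 8, 30, 8),
    MachineType("n1-standard-16", 16, 60, 8),
    MachineType("n2-standard-4", 4, 16, 4),
    MachineType("n2-standard-8", 8, 32, 8),
    MachineType("n2-standard-16", 16, 64, 8),
    MachineType("n2-highmem-4", 4, 32, 4),
    MachineType("n2-highmem-8", 8, 64, 8),
]

MIN_INTERFACES = 4  # threat defense virtual requires at least 4 interfaces
MAX_VCPUS = 16      # maximum supported vCPUs


def check(mt: MachineType, interfaces: int) -> List[str]:
    """Return the reasons `mt` cannot host `interfaces` vNICs; empty means OK."""
    problems = []
    if interfaces < MIN_INTERFACES:
        problems.append(f"needs at least {MIN_INTERFACES} interfaces, got {interfaces}")
    if interfaces > mt.vnics:
        problems.append(f"{mt.name} supports at most {mt.vnics} vNICs")
    if mt.vcpus > MAX_VCPUS:
        problems.append(f"{mt.name} exceeds {MAX_VCPUS} vCPUs")
    return problems


def smallest_fit(interfaces: int, min_ram_gb: int = 0) -> Optional[MachineType]:
    """Pick the valid type with the fewest vCPUs (then least RAM), or None."""
    candidates = [
        mt for mt in SUPPORTED
        if not check(mt, interfaces) and mt.ram_gb >= min_ram_gb
    ]
    return min(candidates, key=lambda mt: (mt.vcpus, mt.ram_gb), default=None)
```

For example, `smallest_fit(4, min_ram_gb=32)` returns the `n2-highmem-4` entry, and `smallest_fit(9)` returns `None` because no supported type offers more than 8 vNICs.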

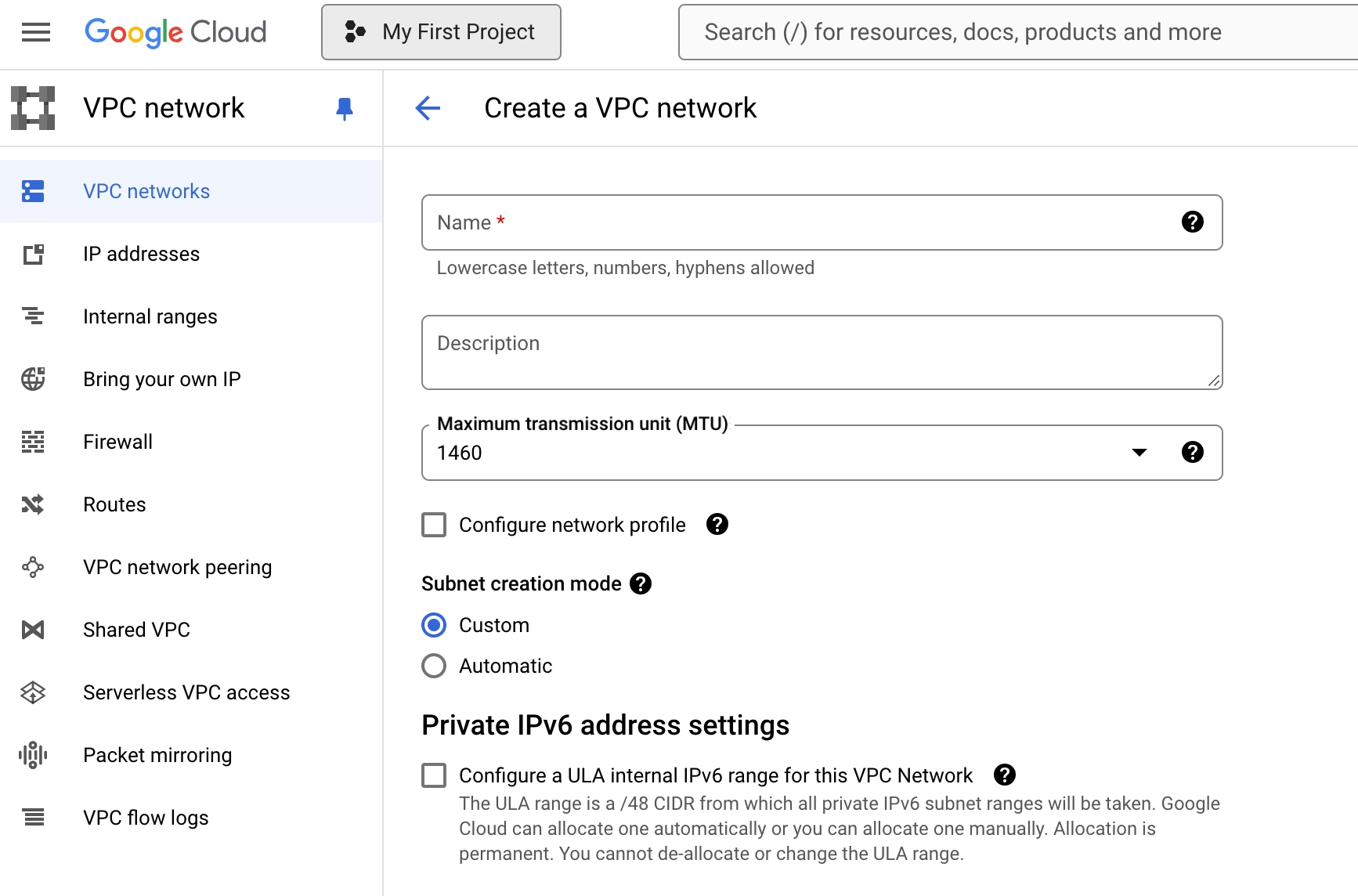

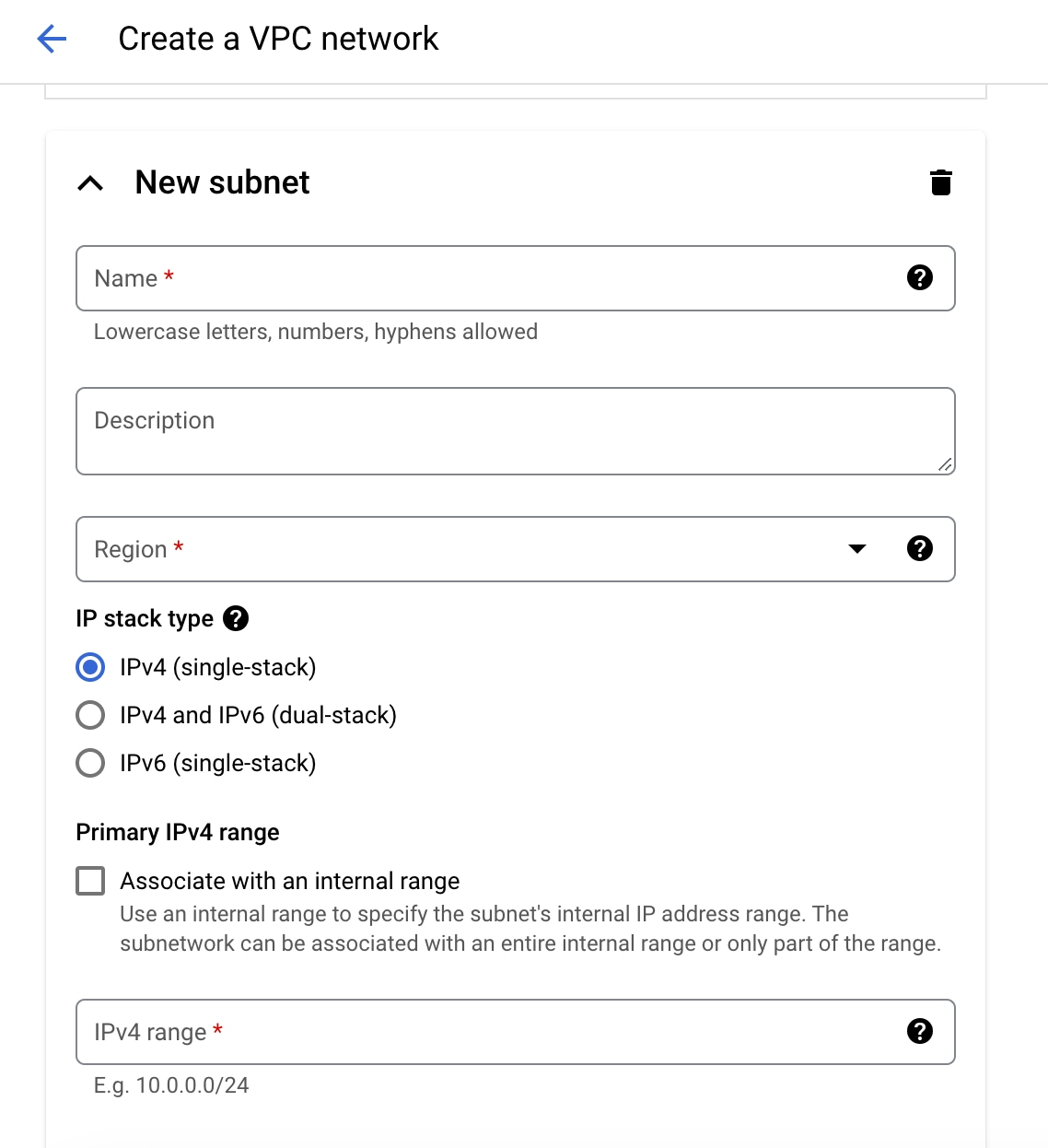

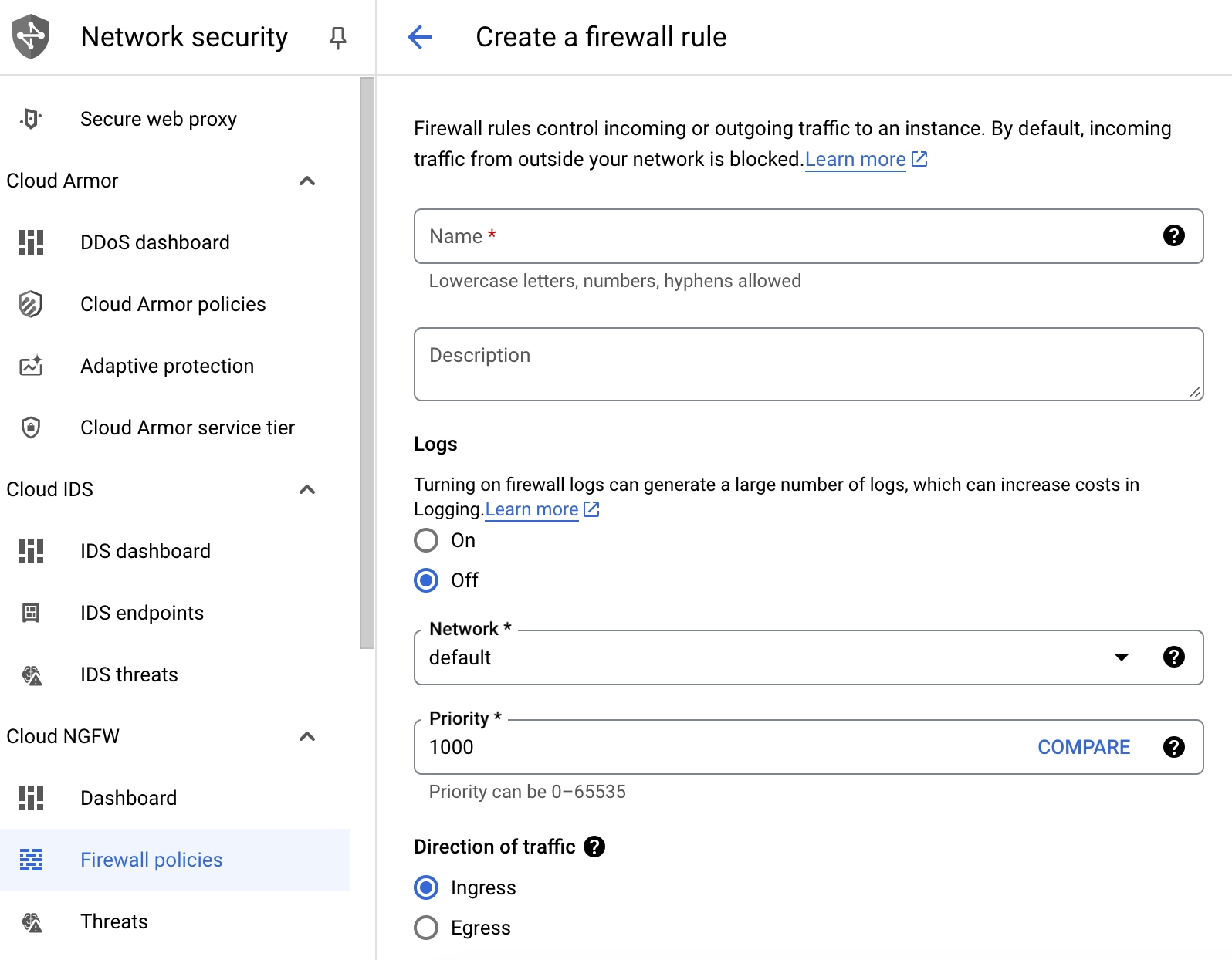

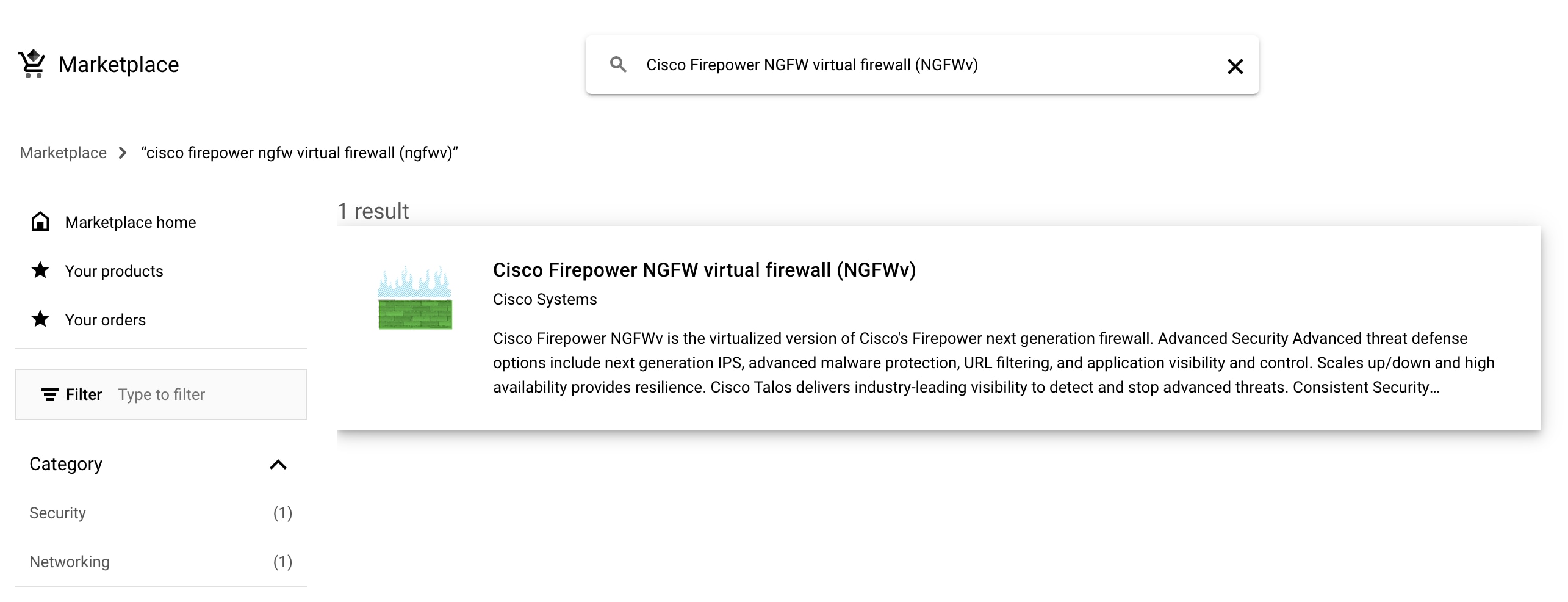

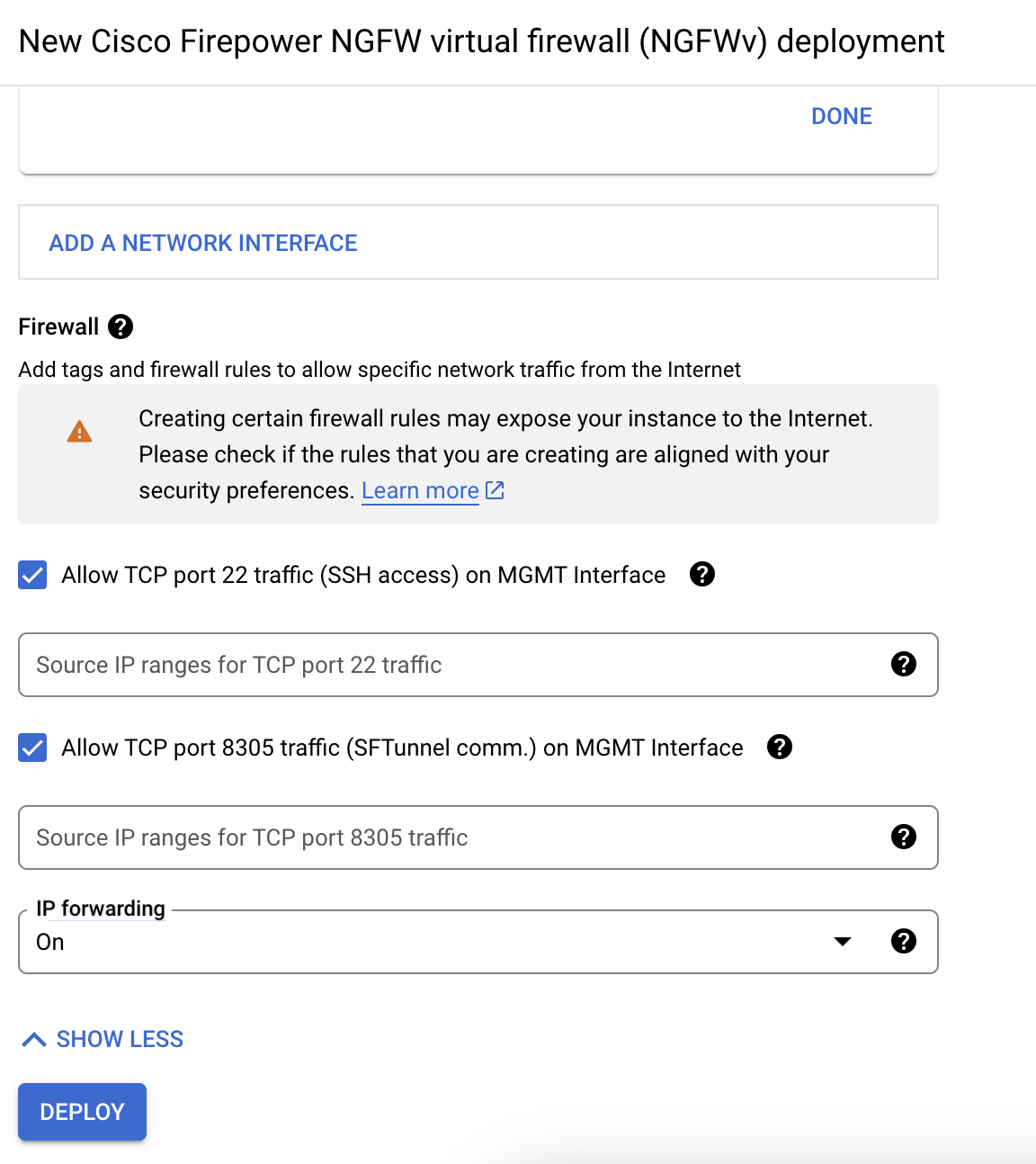

You create an account on GCP, launch a VM instance from the Cisco Firepower NGFW virtual firewall (NGFWv) offering on the GCP Marketplace, and choose a supported GCP machine type.
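The Marketplace flow above is typically driven from the Google Cloud console. As a rough scripted equivalent, the following Python sketch only builds (and does not run) a `gcloud compute instances create` command with one `--network-interface` flag per vNIC. The image name, image project, zone, instance name, and network/subnet names are placeholders, not the real Marketplace values, and the interface order is an assumption. The sketch also enforces the GCP rule that each interface of a VM attaches to a different VPC network.

```python
import shlex

# vNIC limits per supported machine type, copied from the tables above.
MAX_VNICS = {
    "c2-standard-4": 4, "c2-standard-8": 8, "c2-standard-16": 8,
    "n1-standard-4": 4, "n1-standard-8": 8, "n1-standard-16": 8,
    "n2-standard-4": 4, "n2-standard-8": 8, "n2-standard-16": 8,
    "n2-highmem-4": 4, "n2-highmem-8": 8,
}
MIN_INTERFACES = 4  # threat defense virtual requires at least 4 interfaces


def build_create_command(instance, machine_type, zone, image, image_project, nics):
    """Build argv for `gcloud compute instances create` (hypothetical helper).

    `nics` is a list of (network, subnet) pairs, one per vNIC, in attach order.
    All values are placeholders supplied by the caller.
    """
    if machine_type not in MAX_VNICS:
        raise ValueError(f"unsupported machine type: {machine_type}")
    if not MIN_INTERFACES <= len(nics) <= MAX_VNICS[machine_type]:
        raise ValueError(
            f"{machine_type} needs {MIN_INTERFACES}-{MAX_VNICS[machine_type]} "
            f"interfaces, got {len(nics)}"
        )
    networks = [network for network, _ in nics]
    if len(set(networks)) != len(networks):
        raise ValueError("each interface must use a different VPC network")

    argv = [
        "gcloud", "compute", "instances", "create", instance,
        f"--zone={zone}",
        f"--machine-type={machine_type}",
        f"--image={image}",
        f"--image-project={image_project}",
    ]
    for network, subnet in nics:
        # One flag per vNIC; no-address means no external IP on that interface.
        argv.append(f"--network-interface=network={network},subnet={subnet},no-address")
    return argv


if __name__ == "__main__":
    example_nics = [
        ("vpc-a", "subnet-a"),
        ("vpc-b", "subnet-b"),
        ("vpc-c", "subnet-c"),
        ("vpc-d", "subnet-d"),
    ]
    argv = build_create_command(
        "ftdv-example", "c2-standard-4", "us-central1-a",
        "EXAMPLE-IMAGE", "EXAMPLE-IMAGE-PROJECT", example_nics,
    )
    # Print a shell-safe command line for review; nothing is executed.
    print(shlex.join(argv))
```

Printing the command rather than executing it keeps the sketch side-effect free, so you can review the flags and substitute the actual Marketplace image details before running it yourself.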
