System Architecture

This chapter includes the following major topics:

• DRaaS 1.0 System Architecture

• VMDC Orchestration using BMC CLM

DRaaS 1.0 System Architecture

This section includes the following topics:

• System High Level Architecture

System High Level Architecture

This section describes the high level architecture of the DRaaS System. The system provides disaster recovery for customer physical/virtual servers by deploying recovery VMs in the VMDC 2.3-based container on the provider side.

Figure 2-1 illustrates the high level architecture of the DRaaS System.

Figure 2-1 DRaaS High Level Architecture

The physical system architecture consists of the following building blocks:

Provider Cloud

The provider cloud within the DRaaS System will be based on VMDC 2.3. The VMDC 2.3 design is based on the earlier VMDC 2.2 design, with changes to optimize the design for lower cost, fewer layers, and increased tenancy scale. The Cisco VMDC System provides vPC-based L3 hierarchical virtual routing and forwarding (VRF)-Lite DC design, multi-tenancy, secure separation, differentiated service tiers, and high availability in a data center environment. It also provides secure separation between replicated workloads and provides shared network services for customers in DRaaS.

The VMDC 2.3 architecture works with Vblock, FlexPod, or any other integration stack. Integrated stacks can be added as required to scale the SP cloud environment.

Based on the customer's production environment and needs, a specific tenancy model can be selected to provide services in the cloud that match the production environment. VMDC architecture and deployment models will be covered in detail in this chapter.

Enterprise Data Center

The DR solutions should address enterprise customer requirements for various vertical industries and geographies. The enterprise data center design is therefore expected to vary from customer to customer. The intent of the DRaaS System is to keep the enterprise DC architecture generic so as to provide the greatest coverage. While the DC architecture is almost irrelevant and the solution supports heterogeneous replication across any-to-any infrastructure, a typical three-tier (core, aggregation, and access) DC architecture is suggested in the system.

WAN Connectivity

The WAN connectivity design principles provided by VMDC are maintained and supported without requiring any additional components and technologies. The replicated data between the enterprise and SP data center can be encrypted with the help of Cisco technologies like IPsec VPN based on Cisco ASA firewalls. Optionally, for a low-cost implementation that supports a small number of servers, encryption of in-flight replicated data can be provided by InMage partner software.

To support partial failover of a customer's environment, technologies like Overlay Transport Virtualization (OTV) can be used for L2 extension between the customer's data center and the cloud. L2 connectivity allows customers to keep the same IP addresses from the enterprise network in the cloud, so no address changes are needed to access workloads in the cloud after recovery.

Partner Solution for Providing Disaster Recovery

Data replication and recovery of the production servers will be provided by InMage ScoutCloud technology. InMage ScoutCloud is a software-based replication and recovery technology, which can protect both physical and virtual servers into the cloud. InMage ScoutCloud is being integrated into the DRaaS System, providing the following functionality:

• Heterogeneous data replication

• Continuous data protection

• Application consistency

• Recovery automation

• DR Drill

System Logical Topology

Figure 2-2 covers the logical topology of the DRaaS System.

Figure 2-2 DRaaS Logical Topology

As shown in Figure 2-2, each customer will have a dedicated network container created on the SP VMDC cloud. The network containers will be created based on the necessary security and network services required by the enterprise customers. Any network topology on the customer's data center can be matched on the VMDC cloud using network containers. Predefined containers provide examples for different types of deployments. Automated provisioning and management logic for each customer type is pre-defined in the management and orchestration software. Customers can choose from existing models or define their own customized models. The production workloads from each enterprise data center will be replicated to the corresponding network container on the VMDC cloud and will be available for recovery purposes.

End-to-End Architecture

The DRaaS System addresses the following design principles and architectural goals:

• Secure Multi-Tenancy

• Secure, modular, and highly available cloud

• Continuous Data Protection (CDP)

• Physical-to-Virtual (P2V) and Virtual-to-Virtual (V2V) Disaster Recovery

• Near zero RPO and RTO-capable DRaaS

• Run book automation

• Self-Service Multi-Tenant Portal

By utilizing the architecture above, DRaaS in a multi-tenant environment can be supported as shown in Figure 2-3.

Figure 2-3 End-to-End Architecture

In a multi-tenant environment, each customer is mapped as a separate VMDC tenant where the necessary network security is provided and traffic segregation is maintained. Figure 2-3 depicts the end-to-end architecture of the DRaaS System based on VMDC.

With the deployment of lightweight components as shown in Figure 2-3 and utilizing the network security provided by VMDC architecture, customers can replicate their data into a secure cloud environment for recovery.

Data changes are collected from the production servers as they occur, directly in memory before they are written to disk, and sent to a software appliance within the enterprise data center. Because changes are captured in memory, replication induces no additional I/O load on production servers. The appliance is responsible for further offloading compute-intensive tasks from production systems, such as compression, encryption, WAN acceleration, and consolidated bandwidth management.

The system provides CDP for the customer's production servers. Customers will be able to recover their environments to any point in time before the disaster occurred. Because of CDP, the servers are protected not only from physical disasters but also from logical disasters.

Application consistency is enforced at regular intervals through VSS integration on Windows and native application-specific mechanisms on Linux and Solaris systems. Application consistency is also enforced at the guest level in virtual environments such as VMware ESX, Xen Server, and Hyper-V. These application-consistent points are tagged by a bookmark and archived as part of the CDP data. They can be leveraged to perform application-consistent recoveries within stringent recovery time objectives.
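The idea of recovering to a bookmarked, application-consistent point can be sketched as follows. This is an illustrative Python model only, not the InMage ScoutCloud API; the `RecoveryPoint` type and the `pick_recovery_point` helper are hypothetical names introduced for this example.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional, Sequence


@dataclass(frozen=True)
class RecoveryPoint:
    """Hypothetical model of one point in the CDP history."""
    timestamp: datetime
    app_consistent: bool  # True if tagged by an application-consistent bookmark


def pick_recovery_point(
    points: Sequence[RecoveryPoint],
    disaster_time: datetime,
    require_app_consistent: bool = True,
) -> Optional[RecoveryPoint]:
    """Return the latest point strictly before the disaster.

    When require_app_consistent is True, only bookmarked points qualify.
    Returns None if no point qualifies.
    """
    candidates = [
        p for p in points
        if p.timestamp < disaster_time
        and (p.app_consistent or not require_app_consistent)
    ]
    return max(candidates, key=lambda p: p.timestamp, default=None)
```

Restricting the search to bookmarked points trades a slightly older recovery point for application consistency; passing `require_app_consistent=False` selects the most recent point of any kind.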

The following use cases are covered as part of the DRaaS System and will be discussed in more detail in the following sections:

• Protection Workflows

• Recovery Workflows

• Failback Protection Workflows

• Resume Protection Workflows

• DR Drill Workflows

DRaaS Operational Workflows

Following are the workflows for protecting and recovering the customer's production workloads into the cloud. The workflows describe the process of creating the network containers for customers within the SP cloud, replication of workloads into the network containers, and recovery of workloads in the event of a disaster.

The workflow in Figure 2-4 is used for protection and failover scenarios.

Figure 2-4 New Customer Protection Workflow

Step 1 Based on the customer requirements, deploy a VMDC Network Container using BMC.

Step 2 Secure IPsec connectivity is manually set up between the Enterprise and the VMDC-based cloud provider setup.

Step 3 At both enterprise and SP data centers, deploy and configure the necessary DR components.

Step 4 Use the InMage management wizard to select the machines to be protected and set up the recovery plans.

Step 5 Allow customers to monitor the status of DR and RPO/RTO utilizing the Partner Product portals.

The workflow in case of a failure scenario is shown in Figure 2-5.

Figure 2-5 Failure Scenario

Step 1 When the customer DC goes down, the customer declares a disaster and tells the SP which VMs to restore and which checkpoints to use. The SP can use the recovery plan (which could be preconfigured), which details the list of protected VMs, the startup order, and any custom steps.

Step 2 The SP logs into the DR product portal and brings up the required VMs in its environment. Customers with self-service capabilities will be able to recover VMs in the cloud themselves using the self-service portal.

Step 3 The customer works with its DNS provider to direct the client traffic to the SP DC. If the customer is utilizing a Global Site Selector (GSS)-based DNS solution or has an L2 extension, this step will be automatic or not required.

Step 4 When the enterprise DC is back up, the customer works with the SP during a maintenance window to bring up the VMs in the customer DC, fail back the VMs from the SP to the enterprise, and update the DNS so that client traffic is re-routed to the customer DC.
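The startup order mentioned in the recovery plan can be derived from application dependencies. The sketch below is one possible way to compute such an order in Python, using the standard library's `graphlib` (Python 3.9 or later); the VM names and the `startup_order` helper are hypothetical and are not part of any DR product.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set


def startup_order(depends_on: Dict[str, Set[str]]) -> List[str]:
    """Return VM names so that each VM appears after everything it depends on.

    depends_on maps a VM name to the set of VMs that must be running first.
    graphlib raises CycleError if the dependencies are circular.
    """
    return list(TopologicalSorter(depends_on).static_order())


if __name__ == "__main__":
    # Hypothetical three-tier application: web needs app, app needs db.
    plan = {"web": {"app"}, "app": {"db"}, "db": set()}
    print(startup_order(plan))  # ['db', 'app', 'web']
```

A circular dependency surfaces as an exception at planning time rather than as a stalled recovery, which is the main reason to validate the plan before a disaster is declared.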

Network Deployment Considerations to Support Recovery Environment

Table 2-1 shows the considerations in matching the networks between the enterprise's and SP's VPC. Logically, the enterprise network will consist of VLANs and network services, including firewall rules and load balancing. Based on the requirements of the enterprise, which depend on the type of applications that are protected, network containers can be created on the VMDC to meet those requirements.

The typical deployment of a multi-tiered application running in the enterprise is shown in Figure 2-6.

Figure 2-6 Application Deployment Example

The following is the onboarding procedure of a customer running the application shown above:

• The enterprise IT admin needs to coordinate with the SP to have the network container created on the VMDC, based on the requirements and dependencies of the application being protected. The options for creating the network container and maintaining consistency on the SP side are as follows:

– The container is pre-configured by the SP with the necessary VLANs and network services. The firewall rules and the load balancing rules are pre-configured based on the pre-determined IPs of recovery servers on VMDC.

– The container is pre-configured, and the firewall and load balancing rules are configured dynamically by the SP using BMC orchestration or manually through the CLI during the failover process of the servers. Any changes made to the network services on the enterprise data center after replication has been set up have to be communicated to the SP. This ensures network consistency during recovery of the servers. Optionally, the SP can enable the Enterprise customer to manage the firewall and load balancing services on the VMDC cloud. This can be done by providing access to the BMC orchestrator or to the specific devices directly for modifications.

• For the application to run properly on the VMDC cloud after recovery, all the components of the application from different tiers need to communicate with each other. All the servers need to be brought up in an order based on the application dependencies.

• The other important dependency is the IP addressing. Two types of configurations are done on the servers within an application for intra-component communication:

– Configuration based on IP address

– Configuration based on DNS names

Legacy application servers configured based on IP address can run seamlessly as long as they have the same IPs on the VMDC cloud. This may or may not be the case for all the customers. Customers who have different network subnets available on the VMDC need to reconfigure the servers so that the new IPs are part of the application configuration. This task can be performed by an SP administrator in a managed recovery use case or by the customer after the servers are available on the VMDC cloud.

The re-IPing of the servers can be eliminated if the servers within the applications use DNS names for communicating, in which case the DNS entries can be updated to reflect the new IPs. An SP can also perform the modification of DNS entries if the customer is using SP DNS servers. Optionally, DNS entries can be modified automatically using scripts.

If the customer is protecting the enterprise DNS servers, the DNS servers can be brought online during the recovery of the application, and their configuration can be updated with the new IP addresses either manually by the customer or through automation.
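As an illustration of the kind of script that could prepare such DNS updates, the following Python sketch maps each server's enterprise IP to the address at the same host offset in a recovery subnet. The subnets, hostnames, and helper names are assumptions made for this example; the sketch only computes the new records and does not talk to any DNS server.

```python
import ipaddress
from typing import Dict


def remap_ip(address: str, source_subnet: str, target_subnet: str) -> str:
    """Map an IP in source_subnet to the same host offset in target_subnet."""
    src = ipaddress.ip_network(source_subnet)
    dst = ipaddress.ip_network(target_subnet)
    ip = ipaddress.ip_address(address)
    if src.prefixlen != dst.prefixlen:
        raise ValueError("source and target subnets must be the same size")
    if ip not in src:
        raise ValueError(f"{address} is not in {source_subnet}")
    offset = int(ip) - int(src.network_address)
    return str(dst.network_address + offset)


def remap_records(records: Dict[str, str], source_subnet: str,
                  target_subnet: str) -> Dict[str, str]:
    """Return hostname -> new IP for every hostname -> old IP record."""
    return {
        host: remap_ip(ip, source_subnet, target_subnet)
        for host, ip in records.items()
    }


if __name__ == "__main__":
    # Hypothetical enterprise records and recovery subnet.
    old = {"web01.example.com": "10.1.20.15", "db01.example.com": "10.1.20.40"}
    print(remap_records(old, "10.1.20.0/24", "192.168.20.0/24"))
```

Preserving the host offset keeps the mapping predictable, so firewall and load balancing rules pre-configured for the recovery subnet can be derived with the same rule.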

Use of Cisco GSS

To accelerate the disaster recovery service and the dynamic distribution of the workload between the primary and secondary data centers, Cisco provides different network services to optimize the access and the distribution of the user traffic to the remote sites using a Global Site Load Balancing (GSLB) solution. This global GSLB solution for traditional L3 interconnection between sites relies on three major technologies:

• Intelligent Domain Name System (DNS): A DNS known as the Global Site Selector (GSS) redirects the requests from end-users to the physical location where the application is active.

• HTTP Traffic Redirection between Sites: In case of resource unavailability, the local Server Load Balancing (SLB) device will return an HTTP redirection message type (HTTP status code 3xx) to the end-user so that the web browser of the client can be automatically and transparently redirected to the elected backup data center where resources and information are available.

• Route Health Injection (RHI): RHI provides a real-time, very granular distribution of user traffic across multiple sites based on application availability. This method is initiated by an SLB device that will inform the upward router about the presence or absence of selected applications based on extremely accurate information. This information is usually related to the status of the services that it supports. Therefore, the redirection of the user request to a remote site occurs in real time.
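The DNS-based part of this approach, answering a name query with the site where the application is currently active, can be modeled with a short Python sketch. This is a conceptual illustration only and does not represent the Cisco GSS configuration or API; the `Site` type, the priority scheme, and the addresses (taken from documentation ranges) are assumptions.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Site:
    """Hypothetical view of one data center as seen by a site selector."""
    name: str
    vip: str        # virtual IP that serves the application at this site
    priority: int   # lower value means more preferred
    healthy: bool   # result of the most recent application health probe


def resolve(sites: Sequence[Site]) -> Optional[str]:
    """Return the VIP of the most preferred healthy site, or None if all are down."""
    live = [s for s in sites if s.healthy]
    if not live:
        return None
    return min(live, key=lambda s: s.priority).vip


if __name__ == "__main__":
    sites = [
        Site("enterprise-dc", "192.0.2.10", priority=1, healthy=False),
        Site("sp-vmdc", "198.51.100.10", priority=2, healthy=True),
    ]
    print(resolve(sites))  # 198.51.100.10
```

With the primary site marked unhealthy, clients are directed to the recovery site without a manual DNS change, which is why the DNS redirection step in the failure workflow becomes automatic when a GSS-based solution is in place.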

VMDC Cloud Infrastructure

The VMDC System is the Cisco reference architecture for IaaS cloud deployments. This Cisco cloud architecture is designed around a set of modular DC components consisting of building blocks of resources called PoDs, or Points of Delivery. These PoDs comprise the Cisco UCS, SAN and NAS storage arrays, access (switching) layers, and aggregation (switching and routing) layers connecting into the DSN-based services layer or connecting directly to service appliances; and multiple 10 GE fabric using highly scalable Cisco network switches and routers. The VMDC system is built around the UCS, Nexus 1000V, Nexus 5000 and Nexus 7000 switches, Multilayer Director Switch (MDS), ASR 1000, ASR 9000, ASA 5585-X or Adaptive Security Appliance Services Module (ASASM), Catalyst 6500 DSN, ACE, Nexus 1000V VSG, VMware vSphere, EMC VMAX, VNX and NetApp FAS storage arrays. Cloud service orchestration is provided by the BMC Cloud Lifecycle Management (CLM) suite and cloud service assurance is provided by the ZenOSS Cloud Service Assurance (CSA) suite. Figure 2-7 provides a synopsis of the functional infrastructure components comprising the VMDC system.

Figure 2-7 VMDC Infrastructure Components

This section includes the following topics:

• Modifications in VMDC Network Containers for DRaaS

VMDC 2.3 Architecture

The VMDC System utilizes a hierarchical network design for high availability and scalability. The hierarchical or layered DC design uses redundant switches at each layer of the network topology for device-level failover that creates highly available transport between end nodes using the network. DC networks often require additional services beyond basic packet forwarding, such as SLB, firewall, and intrusion prevention. These services might be introduced as modules populating a slot of one of the switching nodes in the network or as stand-alone appliance devices. Each service approach also supports the deployment of redundant hardware to preserve the HA standards set by the network topology. This layered approach is the basic foundation of the VMDC design to provide scalability, performance, flexibility, resiliency, and service assurance. VLANs and VRF instances are used to provide tenant isolation within the DC architecture, and routing protocols within the VRF instances are utilized to interconnect the different networking and service devices. This multilayered VMDC architecture is comprised of core, aggregation, services, and access layers. This architecture allows for DC modules to be added as demand and load increases. It also provides the flexibility to create different logical topologies utilizing device virtualization, the insertion of service devices, and traditional L3 and L2 network configurations.

The VMDC 2.3 System is the latest released version of the VMDC architecture, with VMDC 2.2 being the previous release. Architecturally, VMDC 2.3 is based on VMDC 2.2 (and 2.0), but with several optimizations to reduce cost and footprint and increase tenancy scale. The key differences between VMDC 2.3 and 2.2 include:

• VMDC 2.3 includes an ASR 1000 as the DC Edge (PE) router, while VMDC 2.2 uses the ASR 9000.

• VMDC 2.3 includes a collapsed core/aggregation layer, while VMDC 2.2 includes a separate Nexus 7000 core layer and Nexus 7000 aggregation layers.

• VMDC 2.3 includes an ASA 5585-X for the perimeter firewall, while VMDC 2.2 uses the ASA5585-X or ASASM module on Catalyst 6500 DSN.

• VMDC 2.3 includes an ACE 4710 for Server Load Balancing, while VMDC 2.2 uses the ACE-30 module on the Catalyst 6500 DSN.

• VMDC 2.3 optimizes the Enhanced Gold, Silver, and Bronze network containers to consume fewer resources on the platforms, compared to VMDC 2.2.

• VMDC 2.3 utilizes the ACE 4710 in One-Arm mode, while VMDC 2.2 uses the ACE30 in Two-Arm mode.

Note: For detailed information on VMDC 2.3 System architecture, refer to the following documents:

• VMDC 2.3 Implementation Guide

For information on the previous VMDC 2.2 System architecture, refer to the following documents:

• VMDC 2.2 Implementation Guide

Figure 2-8 provides a representation of the VMDC 2.3 physical architecture.

Figure 2-8 VMDC 2.3 System Architecture

VMDC 2.3 Modular Components

The VMDC System architecture provides a scalable solution that can address the needs of Enterprise and SP cloud data centers. This architecture enables customers to select the design that best suits their immediate needs while providing a solution that can scale to meet future needs without retooling or redesigning the DC. This scalability is achieved using a hierarchical design with two modular building blocks: the Point of Delivery (PoD) and the Integrated Compute and Storage (ICS) stack.

Point of Delivery (PoD)

The modular DC design starts with a basic infrastructure module called a PoD. A PoD is a repeatable, physical construct with predictable infrastructure characteristics and deterministic functions. A PoD identifies a modular unit of DC components and enables customers to add network, compute, and storage resources incrementally. This modular architecture provides a predictable set of resource characteristics (network, compute, and storage resource pools, power and space consumption) per unit that are added repeatedly as needed.

In this design, the aggregation layer switch pair, services layer nodes, and one or more Integrated Compute and Storage (ICS) stacks are contained within a PoD. In the VMDC 2.3 architecture, the PoD connects to the WAN/PE layer device in the DC; in the previous VMDC 2.2 and 2.0 architectures, it connects to the core layer. To scale a PoD, providers can add additional ICSs and can continue to scale in this manner until the PoD resources are exceeded. To scale the DC, additional PoDs can be deployed and connected to the core layer devices. Figure 2-9 illustrates how PoDs can be used to scale compute, network, and storage in predictable increments within the DC.

Figure 2-9 VMDC 2.3 PoDs for Scaling the Data Center

ICS

The second modular building block utilized is a generic ICS based on existing models, such as the VCE Vblock or Cisco/NetApp FlexPod infrastructure packages. The VMDC architecture is not limited to a specific ICS definition, but can be extended to include other compute and storage stacks. An ICS can include network, compute, and storage resources in a repeatable unit. In this guide, the access layer switch pair, storage, and compute resources are contained within an ICS. To scale a PoD, customers can add additional integrated compute stacks and can continue to scale in this manner until the PoD resources are exceeded. Figure 2-10 illustrates how integrated compute stacks can be used to scale the PoD.

Figure 2-10 VMDC 2.3 ICS for Scaling the Data Center

VMDC 2.3 Network Containers

The VMDC 2.3 solution defines a reference three-tier Infrastructure as a Service (IaaS) model of Gold, Silver, and Bronze tiers. These service tiers define resource and service levels for compute, storage, and network performance. This is not meant to be a strict definition of resource allocation, but to demonstrate how differentiated service tiers could be built. These are differentiated based on the following features:

•![]() Network resources. Differentiation based on network resources and features:

Network resources. Differentiation based on network resources and features:

–![]() Application tiers. Service tiers can provide differentiated support for application hosting. In some instances, applications may require several application tiers of VMs (web, application, database). VMDC 2.3 Gold and Silver services are defined with three application tiers on three separate VLANs to host web, application, and database services on different VMs. The Bronze service is defined with one VLAN only so if there are multi-tiered applications, they must reside on the same VLAN or potentially on the same VM (Linux, Apache, MySQL, PHP, Perl, or Python (LAMP)/Windows Apache, MySQL, PHP, Perl or Python (WAMP) stack). All three services, Gold, Silver, and Bronze, are defined with separate VRF instances to provide security and isolation.

Application tiers. Service tiers can provide differentiated support for application hosting. In some instances, applications may require several application tiers of VMs (web, application, database). VMDC 2.3 Gold and Silver services are defined with three application tiers on three separate VLANs to host web, application, and database services on different VMs. The Bronze service is defined with one VLAN only so if there are multi-tiered applications, they must reside on the same VLAN or potentially on the same VM (Linux, Apache, MySQL, PHP, Perl, or Python (LAMP)/Windows Apache, MySQL, PHP, Perl or Python (WAMP) stack). All three services, Gold, Silver, and Bronze, are defined with separate VRF instances to provide security and isolation.

–![]() Stateful services. Tenant workloads can also be differentiated by the services applied to each tier. The Gold service is defined with an ASA 5585-X virtual firewall context, ACE 4710 Virtual Server Load Balancer (vSLB) context, and secure remote access (IPSec VPN and SSL-VPN) on the ASA 5555-X. The Silver tier is defined with an ACE vSLB. The Bronze tier is defined with no services on ASA or ACE. All three services include the Nexus 1000V Virtual Security Gateway (VSG) for compute firewall services.

Stateful services. Tenant workloads can also be differentiated by the services applied to each tier. The Gold service is defined with an ASA 5585-X virtual firewall context, ACE 4710 Virtual Server Load Balancer (vSLB) context, and secure remote access (IPSec VPN and SSL-VPN) on the ASA 5555-X. The Silver tier is defined with an ACE vSLB. The Bronze tier is defined with no services on ASA or ACE. All three services include the Nexus 1000V Virtual Security Gateway (VSG) for compute firewall services.

–![]() Quality of Service (QoS). Bandwidth control during periods of network congestion can be a key differentiator. QoS policies can provide different traffic classes to different tenant types and prioritize bandwidth by service tier. The Gold tier supports VoIP/real-time traffic, call signalling and data class, while the Silver, Bronze, and Copper tiers have only data class. Additionally, Gold and Silver tenants are given bandwidth guarantee with Gold getting more bandwidth (2x) than Silver.

Quality of Service (QoS). Bandwidth control during periods of network congestion can be a key differentiator. QoS policies can provide different traffic classes to different tenant types and prioritize bandwidth by service tier. The Gold tier supports VoIP/real-time traffic, call signalling and data class, while the Silver, Bronze, and Copper tiers have only data class. Additionally, Gold and Silver tenants are given bandwidth guarantee with Gold getting more bandwidth (2x) than Silver.

•![]() VM resources. Service tiers can vary based on the size of specific VM attributes, such as CPU, memory, and storage capacity. The Gold service tier is defined with VM characteristics of four vCPUs and 16 GB memory. The Silver tier is defined with VMs of two vCPUs and 8 GB, while the Bronze tier VMs have one vCPU and 4 GB.

VM resources. Service tiers can vary based on the size of specific VM attributes, such as CPU, memory, and storage capacity. The Gold service tier is defined with VM characteristics of four vCPUs and 16 GB memory. The Silver tier is defined with VMs of two vCPUs and 8 GB, while the Bronze tier VMs have one vCPU and 4 GB.

•![]() Storage resources. To meet data store protection, RPOs, or RTOs, service tiers can vary based on provided storage features, such as redundant array of independent disks (RAID) levels, disk types and speeds, and backup and snapshot capabilities. The Gold service is defined with 15k FC disks, Silver tier on 10k FC disks, and Bronze tier on SATA disks.

Figure 2-11 shows a representation of a VMDC 2.3 Gold service tier network container.

Figure 2-11 VMDC 2.3 Expanded Gold Network Container

The network container is a logical (virtual) segment of the shared (common) physical network resources (end-to-end through the DC) that represents the DC network domain carrying tenant traffic. The physical infrastructure is common to all tenants, but each network device (routers, switches, firewalls, and so forth) is virtualized such that each tenant's virtual network container is overlaid on the common physical network.

The Gold tenant gets two network (and compute/storage) zones to place workloads into. Each zone has its own set of VLANs, VRF instances, and firewall/load balancer contexts. Figure 2-11 shows a logical representation of a two-zone VMDC 2.3 Expanded Gold network container.

This Gold service tier provides the highest level of sophistication by including secure remote access, firewall, and load balancing in the service. The vFW (on the ASA 5585-X60) provides perimeter security services, protecting tenant VMs. The vSLB (ACE 4710 appliance) provides load balancing across VMs in each tier of the tenant. The ASA 5555-X provides virtualized secure remote access (IPsec-VPN and SSL-VPN) to tenant VMs from the Internet. The ACE and ASA service modules/appliances are utilized in routed (L3) virtual mode in the VMDC 2.3 design. The Gold service tier also includes the Nexus 1000V VSG for providing virtual security services to the VMs. The Gold service provides a higher QoS SLA and three traffic classes: real-time (VoIP), call signaling, and premium data.

The two zones can be used to host different types of applications, to be accessed through different network paths. The two zones are discussed below.

•![]() PVT Zone: The Private Zone (PVT) and its VMs can be used for cloud services to be accessed through the customer MPLS-VPN network.

–![]() The customer sites connect to the provider MPLS-core and the customer has their own MPLS-VPN (Cust-VRF).

–![]() The VMDC DC ASR 1000 PE connects to the customer sites through the MPLS-VPN (Cust-VRF in Figure 2-11).

–![]() This Cust-VRF is extended through the VMDC network to the Nexus 7004 aggregation switch.

–![]() On the agg/access Nexus 7004, the Cust-VRF connects to the ASA Cust-vFW, and then is connected back into a Cust-PVT-VRF on the Nexus 7004 agg/access device (VRF sandwich to insert service nodes), and then to the compute layer on the UCS.

–![]() For the VMDC 2.3 Gold tenant, the PVT zone is defined with three server VLANs.

–![]() In addition, each tenant is assigned a separate Nexus 1000V VSG instance. The tenant is defined as an ORG in the VSG (PNSC), with the three VLANs placed into separate VSG sub-zones.

–![]() The VSG is used to provide security policies to monitor and protect traffic between the VLANs (sub-zones).

•![]() DMZ Zone: The VMDC 2.3 Gold container supports a DMZ Zone for tenants to place VMs into a DMZ area, for isolating and securing the DMZ workloads from the PVT workloads, and also to enable users on the Internet to access the DMZ-based cloud services.

–![]() The ASR 1000 PE WAN router is also connected to the Internet and a shared (common) VRF (usually global routing table) exists for all Gold tenants to connect to (either encrypted or unencrypted).

–![]() Encrypted (SSL or IPsec Remote Access VPN) traffic is sent to an ASA 5555-X, and based on the VPN policy, is mapped to a particular tenant and the corresponding tenant VPN VLAN.

–![]() The tenant VPN VLAN then connects to the tenant DMZ-vFW (different vFW context on the ASA 5585-X than the tenant PVT-vFW), then to the tenant DMZ-VRF (different VRF on the Nexus 7004 agg/access than the tenant PVT-VRF), and then to the Compute layer for the DMZ Zone.

–![]() Similarly, unencrypted traffic from the Internet, based on the destination VM/VIP address, is sent to the tenant DMZ-vFW, then to the DMZ-vSLB, DMZ-VRF, and the DMZ Compute Zone.

–![]() The DMZ Zone can be used to host applications like proxy servers, Internet-facing web servers, email servers, etc. The DMZ Zone consists of one server VLAN in this implementation.

In VMDC 2.3, a Gold tenant can choose to have only the PVT Zone, or both the PVT and DMZ Zones. If the tenant has both PVT and DMZ Zones, then the Gold tenant will consume three VRF instances (Cust, Cust-PVT, and Cust-DMZ) on the Nexus 7004 Agg, two vFW instances, two vSLB instances, two VSGs, and four server VLANs. To facilitate traffic flows between the DMZ and PVT Zones (for example, proxy or web servers in the DMZ Zone, application and database servers in the PVT Zone), the DMZ-vFW and PVT-vFW are interconnected. Configuring appropriate security policies (routing, NAT, firewall rules, ACLs) on the DMZ-vFW and PVT-vFW can allow or disallow communication between the two zones.

Load-balanced traffic for all tiers of Gold tenants is implemented using the ACE 4710, which has one interface in each of the tiers.

The Gold container supports the following traffic flows:

•![]() MPLS-VPN to PVT Zone

•![]() Unsecured (clear) Internet to DMZ Zone

•![]() Secure (Remote Access SSL/IPsec VPN) Internet to DMZ Zone

•![]() DMZ to PVT Zone

•![]() MPLS-VPN to DMZ Zone

•![]() PVT Zone to Internet, via an HTTP proxy hosted in the DMZ Zone
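
The hop sequences described for the PVT and DMZ Zones can be written down as ordered lists, which makes it easy to check properties such as "every path into a zone crosses a tenant firewall context". This is only a sketch: the dictionary keys, hop labels, and helper names are illustrative assumptions, and only the order of the hops is taken from the zone descriptions.

```python
# Ordered hops for three of the Gold container flows, as described in the text.
GOLD_PATHS = {
    "mpls_vpn_to_pvt": [
        "ASR 1000 PE (Cust-VRF)",
        "Nexus 7004 Cust-VRF",
        "ASA 5585-X Cust-vFW (PVT)",
        "Nexus 7004 Cust-PVT-VRF",
        "UCS compute (PVT Zone)",
    ],
    "internet_vpn_to_dmz": [
        "ASR 1000 PE (shared VRF)",
        "ASA 5555-X (remote access VPN)",
        "Tenant VPN VLAN",
        "ASA 5585-X DMZ-vFW",
        "Nexus 7004 DMZ-VRF",
        "UCS compute (DMZ Zone)",
    ],
    "internet_clear_to_dmz": [
        "ASR 1000 PE (shared VRF)",
        "ASA 5585-X DMZ-vFW",
        "ACE 4710 DMZ-vSLB",
        "Nexus 7004 DMZ-VRF",
        "UCS compute (DMZ Zone)",
    ],
}


def crosses_firewall(path):
    """Return True if any hop in the path is a vFW context."""
    return any("vFW" in hop for hop in path)


def describe(flow):
    """Render a flow as a single arrow-separated string."""
    return " -> ".join(GOLD_PATHS[flow])
```

Calling `describe("mpls_vpn_to_pvt")` produces a one-line rendering of the VRF sandwich, with the firewall context between the Cust-VRF and the Cust-PVT-VRF.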

Figure 2-12 is a representation of a VMDC 2.3 Silver network container.

Figure 2-12 VMDC 2.3 Silver Network Container

The Silver service tier includes one VRF instance per Silver tenant and three server VLANs (three-tiered applications) for each tenant. The Silver service adds a load-balancing service, making it more sophisticated than the Bronze tier. The vSLB (ACE 4710 appliance) provides load balancing across VMs in each tier of the tenant. The ACE load balancer is utilized in one-arm, routed (L3), virtual mode in the VMDC 2.3 design, and one context is used per Silver tenant. The context has links on each of the server VLANs. The Silver service tier also includes the Nexus 1000V VSG to provide virtual security services to the VMs. The Silver service provides a medium QoS SLA and one traffic class, premium data.

Figure 2-13 is a representation of a VMDC 2.3 Bronze network container.

Figure 2-13 VMDC 2.3 Bronze Network Container

The Bronze service tier includes one VRF instance and one server VLAN for each tenant. The Bronze service is the least sophisticated tier and does not include any perimeter security services. The Bronze service tier does include the Nexus 1000V VSG for providing virtual security services to the VMs. The Bronze service provides lower QoS SLA and one traffic class, standard data.
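
For capacity planning, the per-tenant resource consumption of each container type can be summed across tenants. The sketch below assumes hypothetical names (`Footprint`, `FOOTPRINTS`, `total_footprint`); the Gold figures are for a tenant with both PVT and DMZ Zones, the Silver figures assume one ACE context and no ASA context, and the Bronze entry assumes no firewall or load-balancer context, which is how the descriptions above read. A PVT-only Gold tenant is omitted because its counts are not stated.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Footprint:
    """Per-tenant resource consumption (illustrative field names)."""
    vrfs: int
    vfw_contexts: int
    vslb_contexts: int
    server_vlans: int

    def __add__(self, other):
        return Footprint(
            self.vrfs + other.vrfs,
            self.vfw_contexts + other.vfw_contexts,
            self.vslb_contexts + other.vslb_contexts,
            self.server_vlans + other.server_vlans,
        )


FOOTPRINTS = {
    "gold_pvt_dmz": Footprint(vrfs=3, vfw_contexts=2, vslb_contexts=2, server_vlans=4),
    "silver": Footprint(vrfs=1, vfw_contexts=0, vslb_contexts=1, server_vlans=3),
    "bronze": Footprint(vrfs=1, vfw_contexts=0, vslb_contexts=0, server_vlans=1),
}


def total_footprint(tenants):
    """Sum the footprints for a list of container-type names."""
    total = Footprint(0, 0, 0, 0)
    for container in tenants:
        total = total + FOOTPRINTS[container]
    return total
```

These totals cover the base containers only; the DRaaS modifications described in the next section add an ASA context per tenant and, for Bronze, one more VLAN.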

Note ![]() VMDC 2.3 also defines a Copper network container, which has characteristics similar to Bronze but has only Internet-based access and no L3VPN-based access. The Copper container also uses a shared perimeter firewall (ASA vFW context) for all tenants. However, the VMDC 2.3 Copper network container has not been validated with the DRaaS System.

Modifications in VMDC Network Containers for DRaaS

The VMDC 2.3-based infrastructure and the Gold, Silver, or Bronze network containers can be used for DR services (the container a tenant uses depends on the firewall and SLB services needed), but the following modifications need to be made:

•![]() Utilize a new ASA context per tenant for IPsec-VPN services to encrypt the communication between InMage control servers in the DR site and the Enterprise site. This ASA context needs to be added whether the tenant is using a Gold, Silver, or Bronze container on the DR site. This context will logically reside close to the server VLANs.

Note ![]() In the case of Silver or Bronze VMDC containers, no existing ASA context is being used in the network container for firewall or VPN services. Therefore, inserting this ASA context for InMage VPN purposes will be a new addition to the network container. In the case of the VMDC Gold container, an ASA context (on the multi-context ASA 5585-X) is utilized for perimeter firewall services, and a shared ASA (single-context ASA 5555-X) is utilized for remote access VPN purposes. However, these existing ASA contexts in the VMDC Gold container cannot be used for InMage VPN purposes since they logically sit in a different part of the network container. This new ASA context for the tenant can be created on the existing ASA 5585-X firewall device (if enough capacity for contexts and throughput exists) or a new ASA device can be utilized. It is recommended to use a new physical ASA device (ASA 5555-X or ASA 5585-X, based on the VPN throughput needed) for InMage VPN purposes. Thus, the VMDC 2.3 infrastructure for DRaaS would have three separate physical ASA devices: one each for FW, RA-VPN, and InMage Site-Site VPN. The VMDC 2.3 Gold container for DRaaS would have three logical ASA devices: one per-tenant context for FW, one shared/global ASA for RA-VPN, and one per-tenant context for InMage Site-Site VPN.

•![]() In the case of Gold containers, the tenant ASA context performing perimeter firewall services needs to have a security policy (ACL) configured to permit the IPsec-VPN traffic from the ENT site to the DR site. This ACL should be specific to allow IPsec traffic only between the IPsec tunnel endpoints (local ASA Site-Site VPN endpoint in the DR site, and remote VPN endpoint in the ENT site) used to encrypt the InMage traffic.

•![]() Create a new VLAN for the Bronze container to host the InMage control servers. Inserting an ASA context to encrypt the InMage traffic requires an outside and an inside interface on the ASA context. Since the VMDC 2.3 Bronze container is defined with only one VLAN, a new VLAN must be defined to host the InMage servers. The first VLAN (where recovered VMs will be placed) will serve as the outside interface for the ASA VPN context and the second (new) VLAN (where the InMage servers are placed) will serve as the inside interface for the ASA VPN context.

Note ![]() For the VMDC 2.3 Gold and Silver containers, three server VLANs already support three-tier applications so there is no need to create a new VLAN to host the InMage servers or for InMage VPN purposes. Instead, the InMage servers can be hosted in the second VLAN (App tier). The first VLAN (Web tier, where recovered Web-tier VMs will be placed) will serve as the outside interface for the ASA VPN context, and the second VLAN (App tier, where the recovered App-tier VMs and also the InMage servers will be placed) will serve as the inside interface for the ASA VPN context.
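
The interface assignment for the InMage VPN context differs by container type, and the rule can be summarized in a few lines of code. This is a hedged sketch rather than a provisioning tool: `VpnContextPlan`, `plan_inmage_vpn_context`, and the VLAN labels are hypothetical names chosen for illustration, while the mapping itself follows the modifications listed above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VpnContextPlan:
    """Where the InMage ASA VPN context attaches (labels are illustrative)."""
    outside_vlan: str
    inside_vlan: str
    inmage_vlan: str
    new_vlan_required: bool


def plan_inmage_vpn_context(container: str) -> VpnContextPlan:
    """Return the outside/inside VLAN roles for a given container type."""
    kind = container.lower()
    if kind in ("gold", "silver"):
        # Three server VLANs already exist: Web tier outside, App tier inside.
        return VpnContextPlan("web-tier", "app-tier", "app-tier", False)
    if kind == "bronze":
        # Only one server VLAN exists, so a second one is added for InMage.
        return VpnContextPlan("server-vlan", "inmage-vlan", "inmage-vlan", True)
    raise ValueError(f"container type not covered by the DRaaS design: {container}")
```

Copper is rejected deliberately, since that container has not been validated with the DRaaS System.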

Figure 2-14, Figure 2-15, and Figure 2-16 show logical representations of the modified VMDC 2.3 Gold, Silver, and Bronze network containers for DRaaS.

Figure 2-14 Modified VMDC 2.3 Gold Container for DRaaS

Figure 2-15 Modified VMDC 2.3 Silver Container for DRaaS

Figure 2-16 Modified VMDC 2.3 Bronze Container for DRaaS
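
The perimeter-firewall policy required for Gold containers (permit IPsec only between the two tunnel endpoints) can be expressed as a short list of abstract rules. The sketch below is vendor-neutral and does not emit ASA configuration: the `Rule` type and function name are hypothetical, the addresses in the usage note come from documentation ranges, and the UDP 4500 entry assumes NAT traversal is in use, which may not apply to a given deployment.

```python
import ipaddress
from typing import List, NamedTuple, Optional


class Rule(NamedTuple):
    """One abstract permit rule (not tied to any device syntax)."""
    action: str
    protocol: str
    src: str
    dst: str
    dst_port: Optional[int]


def ipsec_permit_rules(ent_endpoint: str, dr_endpoint: str) -> List[Rule]:
    """Permit IPsec from the ENT VPN endpoint to the DR VPN endpoint only."""
    # ip_address() raises ValueError on malformed input, so bad endpoints fail early.
    src = str(ipaddress.ip_address(ent_endpoint))
    dst = str(ipaddress.ip_address(dr_endpoint))
    return [
        Rule("permit", "udp", src, dst, 500),    # IKE key exchange
        Rule("permit", "udp", src, dst, 4500),   # IPsec NAT traversal (assumed in use)
        Rule("permit", "esp", src, dst, None),   # ESP, IP protocol 50
    ]
```

For instance, `ipsec_permit_rules("192.0.2.10", "198.51.100.20")` returns three host-to-host rules; anything not matching them would fall through to the firewall's existing policy.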

VMDC Orchestration using BMC CLM

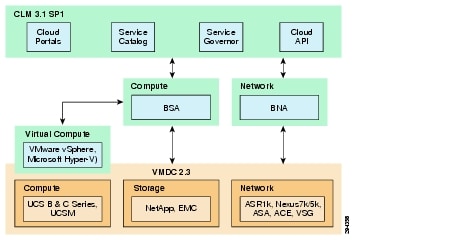

The Cisco-BMC cloud management architecture for VMDC is designed to meet the growing needs of today's data center and cloud deployments. BMC Cloud Lifecycle Management (CLM) provides an end-to-end automated lifecycle management solution for cloud-based IT hosting environments.

The architecture focuses on the planning, governance, provisioning, operation, administration, and maintenance of cloud services, the runtime environments and infrastructure resources needed to sustain them, and the management services that comprise CLM.

The VMDC 2.3 architecture and network containers have been validated to be orchestrated by CLM 3.1 Service Pack 1 (SP1). CLM 3.1 SP1 includes all of the elements that are essential to enabling a VMDC 2.3-based cloud environment:

•![]() Self-service Portal and Service Catalog. Provides the ability to order and track deployed services.

•![]() Service delivery automation. Automates provisioning of services. CLM can also provide usage metering of services, by using additional BMC components.

•![]() Resource management. Provisions and manages resources according to the needs of each service. This includes network, compute, and storage resources.

•![]() Operational process automation. Automates operational processes such as user management, service desk integration, and alerting. Capacity management and service level management can also be provided by additional BMC components like BMC Capacity Optimization (BCO) and BMC ProactiveNet Performance Management (BPPM).

CLM 3.1 SP1 enables onboarding and pooling of resources for compute, storage, and networking, and creation of policies to manage those pools. It provides functionality to provision network containers, physical servers, and virtual server instances. It also provides the ability for end users, through a portal, to place service requests to create and manage server instances. CLM 3.1 SP1 is fully multi-tenant/multi-service aware. It can support simultaneous use of the cloud environment by multiple tenants that can request, deploy, and operate services independently.

Note ![]() For detailed information on using BMC CLM 3.1 SP1 for orchestrating VMDC 2.3 architecture, refer to the following document:

•![]() Orchestrating VMDC 2.3 with BMC CLM 3.1 SP1 Design & Implementation Guide

This section includes the following topics:

•![]() Container Pre-Provisioning for DR Services

CLM 3.1 SP1 Architecture

CLM 3.1 SP1 is a general-purpose, one-size-fits-all management solution for cloud hosting environments. CLM 3.1 SP1 can manage environments that reside entirely on-premise or off-premise, as well as hybrid environments that are hosted partially on-premise and partially off-premise. CLM 3.1 SP1 can manage hosting environments that use physical or virtual compute, storage, and network resources. CLM 3.1 SP1 can now manage multi-hypervisor environments that include Microsoft Hyper-V and VMware vSphere. It can also manage environments that use cloud resources, including resources and services offered by other Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS) clouds.

CLM 3.1 SP1 is fully multi-tenant aware. It can support simultaneous use of the cloud by multiple tenants that can request, deploy, and operate multiple services independently. CLM 3.1 SP1 has an architecture that provides the foundation for scaling the cloud and for configuring multiple data centers.

Figure 2-17 CLM 3.1 SP1 Architecture

User Roles

CLM 3.1 SP1 supports three different classes of users:

•![]() Cloud Administrator. A Cloud Administrator is an IT professional responsible for the full lifecycle of the cloud environment, including initial planning, deployment and configuration, and continued administration, operation, and maintenance. The Cloud Administrator uses the Administration console for most tasks.

•![]() Cloud Organization (Tenant) Administrator. A Cloud Organization (Tenant) Administrator is responsible for managing a subset of the cloud that is provisioned for a particular Tenant or Service.

•![]() Cloud End User. Cloud End Users request services made available to them by the Cloud Administrator through the BMC My Services console. Cloud End Users can request virtual as well as physical resources, view and manage their commissioned resources, monitor the health of their commissioned resources, and decommission resources.

Consoles

CLM 3.1 SP1 has three consoles that cloud users can use to manage the VMDC cloud infrastructure:

•![]() BMC CLM My Cloud Services console. This enables users and administrators to request, deploy, and operate Service Offerings from the Service Catalog.

•![]() BMC CLM Administration console. This enables Cloud Administrators to manage the cloud and the services that it hosts.

•![]() BMC Tenant Administration console. This is an enhanced My Cloud Services console. This console offers additional administration capabilities to Tenant Admins, such as network management capabilities.

Service Catalog

The Service Catalog contains the Service Offerings that are available for consumption by cloud users. Cloud Administrators maintain the Service Catalog by creating, modifying, and deleting Service Offerings. They can also control which offerings in the Service Catalog are available to each Tenant.

Cloud Database

The Cloud DB contains operational state and configuration information about the objects managed by the cloud. These managed objects include the Service Offering Instance (SOI), virtual cloud resources, and physical and virtual infrastructure resources.

Product Catalog and Definitive Media Library

The Product Catalog and Definitive Media Library (DML) list all software that can be provisioned in the cloud. The Product Catalog does not store the software itself. Instead, it contains a unique reference to each piece of software, while the software itself remains in native repositories such as the BMC Server Automation (BSA) software repository or the Amazon AWS Amazon Machine Images (AMI) repository. The Product Catalog also contains software metadata, such as software and hardware requirements pertaining to software provisioning, as well as other data used during software configuration. Cloud Administrators create and maintain entries in the Product Catalog by using interfaces provided by the Product Catalog.

Cloud Blueprints

Cloud blueprints define cloud services and resources that can be provisioned in the VMDC infrastructure. CLM 3.1 SP1 uses the following cloud blueprints:

•![]() Service blueprints describe the functional structure of a given Service Offering, including its functional components and communication paths. They also define how a Service Offering is to be deployed under different circumstances. Each Service Offering in the Service Catalog has a Service Blueprint that is used for its instantiation. When creating a Service Blueprint, the user can define the service and how it is deployed:

–![]() Service definitions of applications or server instances specify the topology (number of tiers), configuration, operating systems, and software packages that need to be provisioned to "stand up" an application or server.

–![]() Service deployment definitions for each Service Blueprint specify a set of one or more ways in which the blueprint could be instantiated when it is provisioned.

For example, in a blueprint for an application, one service is related to three deployments (Small, Medium, and Large) that are mapped to a Service Offering in the Service Catalog. The Small deployment definition for the application might use a single Resource Set that consists of one VM to support all three tiers: web, business logic, and database. In contrast, the Large deployment definition might distribute the application components to three different Resource Sets, each corresponding to a different application tier.

•![]() PoD blueprints define the physical topology of the VMDC infrastructure.

•![]() Network container blueprints define the logical segmentation of the VMDC cloud infrastructure.
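
The relationship between a Service Blueprint, its deployment definitions, and their Resource Sets can be sketched as a small data model. This is an illustration of the Small/Large example from the text, not the CLM object model: all class and attribute names are assumptions, and the default of one VM per Resource Set is a placeholder.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ResourceSet:
    """A group of VMs hosting one or more application tiers."""
    name: str
    tiers: List[str]
    vm_count: int = 1  # placeholder default, not a CLM setting


@dataclass
class DeploymentDefinition:
    """One way of instantiating a blueprint (for example Small or Large)."""
    name: str
    resource_sets: List[ResourceSet]

    def vm_total(self) -> int:
        return sum(rs.vm_count for rs in self.resource_sets)


@dataclass
class ServiceBlueprint:
    name: str
    deployments: List[DeploymentDefinition] = field(default_factory=list)

    def deployment(self, name: str) -> DeploymentDefinition:
        for candidate in self.deployments:
            if candidate.name == name:
                return candidate
        raise KeyError(name)


# Small: one Resource Set with a single VM carrying all three tiers.
small = DeploymentDefinition(
    "Small", [ResourceSet("all-in-one", ["web", "business logic", "database"])]
)
# Large: one Resource Set per application tier.
large = DeploymentDefinition(
    "Large",
    [
        ResourceSet("web", ["web"]),
        ResourceSet("logic", ["business logic"]),
        ResourceSet("db", ["database"]),
    ],
)
blueprint = ServiceBlueprint("three-tier-app", [small, large])
```

The same service definition backs both deployments; only the way tiers are spread across Resource Sets changes.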

Infrastructure Resources

Cloud infrastructure resources represent physical or virtual DC resources that host Service Offerings in the cloud. All compute, storage, network, and software resources that are part of the VMDC infrastructure are considered to be infrastructure resources. In the VMDC 2.3 system, the following components comprise the infrastructure resources:

•![]() ASR 1006 (WAN Router)

•![]() Nexus 7004 (DC Aggregation layer)

•![]() Nexus 5548 (DC Access layer)

•![]() ACE 4710 (Server Load Balancer appliance)

•![]() ASA 5585-X (Firewall appliance)

•![]() UCS B-series blade and C-series rack servers

•![]() UCS 6248 (Fabric Interconnect)

•![]() Nexus 1000V (Distributed Virtual Switch)

•![]() Virtual Security Gateway (Compute Firewall)

•![]() NetApp FAS and EMC VMAX, VNX (NAS and SAN devices)

•![]() VMware Virtual Center

•![]() VMware ESXi clusters and hosts

•![]() Microsoft Hyper-V 2012

•![]() Microsoft System Center Virtual Machine Manager 2012

Cloud Providers

Cloud providers are software programs that act as element managers for different types of resources, platforms, and services consumed by the cloud. At installation, CLM 3.1 SP1 includes the following providers:

•![]() BMC Server Automation (BSA). BSA is a control and resource provider for various types of infrastructure compute resources, such as physical servers, VMs, VM clusters, and virtual cluster resource pools.

•![]() BMC Network Automation (BNA). BNA is a control and resource provider for network resources, such as IP addresses, routers, firewalls, load balancers, and VLANs.

•![]() Amazon EC2. This provider facilitates integration with the Amazon EC2 cloud.

•![]() vCloud Director. This provider facilitates integration with the VMware vCloud Director-based cloud environment.

Cloud Workload Manager

The cloud Workload Manager (WLM) instantiates Service Offerings selected from the Service Catalog. It also administers and maintains those services based on policies defined in the cloud policy DB.

Cloud Platform Manager

The cloud Platform Manager provisions, operates, administers, and maintains runtime platform instances.

Cloud Resource Manager

The cloud Resource Manager (RM) manages cloud infrastructure resources, including onboarding, organization, assignment, and allocation.

Cloud Service Governor

The cloud Service Governor orchestrates workload management, platform management, and resource management operations based on policies and rules defined by Cloud Administrators to provision hosted Service Offerings. The Service Governor makes placement decisions on where resources need to be placed, based on resource management/tracking and service policies/tagging. The cloud Service Governor also distributes and subsequently enforces operational policies across CLM 3.1 SP1 components.

CLM 3.1 SP1 Northbound API

The solution architecture also defines a set of APIs that can be used by third-party application developers to enhance the CLM 3.1 SP1 implementation. The API can be used to do the following tasks:

• Integrate with CLM 3.1 SP1

• Automate repetitive processes

• Create a customized portal in CLM 3.1 SP1

The API is a model-based, object-oriented RESTful web service that features an easy-to-use interface facilitated by standard HTTP requests and response messages that carry JSON-format payloads.
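As a rough illustration of the request style described above (standard HTTP requests carrying JSON payloads), the following Python sketch builds such a request without sending it. The host name, URL path, and payload fields are hypothetical placeholders chosen for this example; they are not taken from the CLM 3.1 SP1 API documentation, which should be consulted for the actual resource names and attributes.

```python
import json
import urllib.request

# Hypothetical values: the host, path, and payload fields below are
# placeholders, not documented CLM 3.1 SP1 endpoints or attributes.
BASE_URL = "https://clm.example.com/hypothetical-api"


def build_json_request(path, payload, method="POST"):
    """Build (but do not send) an HTTP request carrying a JSON payload."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url=f"{BASE_URL}/{path.lstrip('/')}",
        data=body,
        method=method,
        headers={
            "Content-Type": "application/json",
            "Accept": "application/json",
        },
    )


# Example: a made-up request body for a network container order.
request = build_json_request(
    "service-requests",
    {"offering": "Gold Network Container", "tenant": "example-tenant"},
)
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
```

A real integration would also need authentication and error handling, both of which are omitted here.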

Figure 2-18 illustrates the BMC CLM 3.1 SP1 components and their interactions with infrastructure components.

Figure 2-18 CLM 3.1 SP1 Components and Interactions

Container Pre-Provisioning for DR Services

To enable DRaaS, the VMDC-based network containers need to be pre-provisioned in the DR site. Depending on the services (firewalls, load balancers) needed, the DR customer can choose to use the VMDC 2.3 Gold, Silver, or Bronze network containers. BMC CLM can be used to automatically provision these containers on a VMDC 2.3 infrastructure. This orchestration capability is available out-of-the-box in BMC CLM 3.1 SP1.

Note For more information on using BMC CLM 3.1 SP1 to orchestrate VMDC 2.3 network containers, refer to the following document:

Figure 2-19 illustrates the high-level CLM orchestration workflow.

Figure 2-19 CLM 3.1 SP1 End-to-End New Request Flow

The workflow steps needed for container pre-provisioning and preparing the DR service are as follows:

Step 1 VMDC 2.3-based infrastructure, topology, and base infrastructure configurations are created in the DR site.

Step 2 BMC CLM 3.1 SP1 is installed in the DR site. The VMDC 2.3 PoD and Network Container Blueprints are on-boarded into CLM. The CLM portal is set up and running.

Step 3 The tenant (the ENT customer requiring DR services) uses the BMC CLM portal to request the Network Container from the Service Catalog. The tenant can choose a Gold, Silver, or Bronze network container.

Step 4 The CLM Cloud Admin (DR Provider) approves the change request, and the requested Network Container for DR services is created on the VMDC infrastructure for the tenant. Appropriate compute and storage resources are set aside for the tenant.

Step 5 Necessary modifications are made in the VMDC network container to facilitate the DR service. This includes setting up any additional VLANs, creating the ASA context for IPsec VPN tunnels (for secure InMage communication from the customer site to the DR site), and so on, as documented in VMDC container. Adding VLANs can be done by the Cloud Admin through the CLM portal. Creating the ASA context for VPN and connecting it to the tenant's VMDC network container and VLANs has to be done manually by the DR site network administrator.

a. Install the InMage control plane servers into the tenant's VMDC network container in the appropriate VLANs. The CLM Cloud Admin can do this through CLM if the InMage applications are created as Service Offerings in the CLM Service Catalog. Otherwise, the DR site server administrator has to install these InMage applications manually.

b. Set up the InMage DR components and storage, and complete the other necessary steps as documented in Chapter 3 Implementation and Configuration. These InMage DR components have to be set up at both the Enterprise and Provider DR sites.

c. Verify IPsec connectivity between the DR components in the Enterprise and SP DR sites.

d. Verify DR functionality and create protection and recovery plans through InMage.
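The split between tasks that go through the CLM portal and tasks that require manual work can be summarized in a small data model. The Python sketch below is only an illustration: the class name, field names, and mode labels are invented for this example, and it encodes only those tasks whose mode the steps above state explicitly.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WorkflowTask:
    label: str    # step label as used in the workflow above
    actor: str    # role that performs the task
    summary: str
    mode: str     # "clm", "manual", or "clm_if_offered" (labels invented here)


# Only tasks whose mode the text states explicitly are listed.
TASKS = [
    WorkflowTask("3", "Tenant",
                 "Request a Gold, Silver, or Bronze network container", "clm"),
    WorkflowTask("4", "Cloud Admin",
                 "Approve the request so the container is created", "clm"),
    WorkflowTask("5 (VLANs)", "Cloud Admin",
                 "Add any additional VLANs", "clm"),
    WorkflowTask("5 (ASA VPN context)", "DR site network administrator",
                 "Create the ASA context for IPsec VPN and connect it", "manual"),
    WorkflowTask("5a", "Cloud Admin or DR site server administrator",
                 "Install the InMage control plane servers", "clm_if_offered"),
]


def needs_manual_work(tasks):
    """Return labels of tasks that are, or may be, done outside the CLM portal."""
    return [t.label for t in tasks if t.mode in ("manual", "clm_if_offered")]


for task in TASKS:
    print(f"Step {task.label}: {task.summary} [{task.actor}, {task.mode}]")
print("Plan manual effort for:", needs_manual_work(TASKS))
```

Step 5a is marked `clm_if_offered` because it can be done through CLM only when the InMage applications exist as Service Offerings in the Service Catalog.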

As part of the VMDC network container creation, BMC CLM also creates the firewall and load balancer services for the relevant containers. For example, in the VMDC 2.3 Gold container, perimeter FW services are provided by an ASA context, load balancing services are provided by an ACE context, and back-end or compute FW services are optionally provided by a VSG. When BMC CLM is used to create a VMDC 2.3 Gold network container for DR services, the ASA context, ACE context, and VSG are created for the tenant container. CLM also provisions some base security rules on the ASA and VSG. In addition, the CLM portal can be used to provision more specific FW security rules through network paths. This can be done by the Cloud Admin or by the Tenant Admin, and the security policies can be defined at a VLAN (IP subnet and protocol/port) level or at a VM (specific IP address and protocol/port) level. The Cloud Admin or Tenant Admin can also use the CLM portal to create load balancing virtual servers (VIPs) for specific protocols on the tenant ACE context, and to associate servers or server pools (VMs) with the VIPs.

In the DRaaS scenario, after a failure, the recovered VMs will be brought online in the VMDC network container in the provider DR site. As such, these VMs need to have associated security policies on the ASA (and on the VSG if the tenant is using compute FW), and associated load balancing policies on the ACE context. Once the workflow steps described above for creating the DR service have been completed, the following steps need to be followed to accomplish this.

Step 1 Network Container Pre-provisioning

a. Follow the workflow steps described above to pre-provision the tenant network container through CLM in the DR site, install the DR components on the ENT and DR sites, and identify VMs to be protected/recovered.

Step 2 vFW Policies

a. Use the CLM portal to create Network Paths to allow/deny specific traffic flows to/from the VMs that will be recovered on the DR site. These Network Paths will be translated into appropriate ASA and VSG security policies and provisioned on the tenant ASA and VSG by CLM.

b. The Network Paths and security policies can be based on VM subnets or specific VM IPs. It is recommended to use security policies based on VM subnets, so as to minimize the changes necessary when additional VMs are added to the protection plans (within the same VLAN or subnet).

c. It is recommended to configure these Network Paths and underlying security policies before DR declaration, so that all services are in place when VMs are recovered to the DR site. Post-recovery, the CLM portal can again be used to adjust the Network Paths and security policies as needed.

Step 3 vSLB Policies

a. Use the CLM portal to create Virtual Servers (VIPs) and associate them with real servers (VMs), and define the protocols to be load balanced and the mechanisms for probing the real servers. CLM will provision these policies on the tenant ACE context. The real servers have to reflect the specific IP addresses of the VMs to be load balanced.

b. It is recommended to configure these SLB policies before DR declaration, so that all services are in place when VMs are recovered to the DR site. Post-recovery, the CLM portal can again be used to adjust the load balancing policies as needed.
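The recommendation to base Network Paths on VM subnets rather than individual VM IPs, and the requirement that real servers reflect the recovered VM addresses, can be illustrated with a short Python sketch. All addresses and names below are hypothetical, and the matching logic is a simplification for illustration; it does not represent how CLM, the ASA, the VSG, or the ACE evaluate policies.

```python
from ipaddress import ip_address, ip_network

# Hypothetical addressing, for illustration only.
subnet_paths = [ip_network("10.1.1.0/24")]        # one path per VM subnet
host_paths = [ip_network("10.1.1.10/32"),         # one path per VM
              ip_network("10.1.1.11/32")]


def uncovered(vm_ips, paths):
    """Return the VM addresses that no network path matches."""
    return [ip for ip in vm_ips
            if not any(ip_address(ip) in net for net in paths)]


def unknown_real_servers(real_servers, vm_ips):
    """Return real-server addresses that are not addresses of protected VMs."""
    return sorted(set(real_servers) - set(vm_ips))


protected = ["10.1.1.10", "10.1.1.11"]
protected_later = protected + ["10.1.1.12"]   # a VM added to the protection plan

print(uncovered(protected_later, subnet_paths))  # []
print(uncovered(protected_later, host_paths))    # ['10.1.1.12']

# A VIP whose real servers were entered before recovery; one address is wrong.
vip_real_servers = ["10.1.1.10", "10.1.1.99"]
print(unknown_real_servers(vip_real_servers, protected_later))  # ['10.1.1.99']
```

With the subnet-based path, the newly protected VM is covered without any policy change, whereas the per-VM paths would need a new entry.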

Available Replication Types

This section includes the following topics:

• Hypervisor-based vs. Guest OS vs. Storage

Hypervisor-based vs. Guest OS vs. Storage

Figure 2-20 shows the different types of replication technologies that can be used for disaster recovery purposes.

Figure 2-20 Disaster Recovery Replication Technologies

Storage Array Level Replication

Storage array-level replication is the replication method most organizations use today. Array-based replication is expensive and lacks granularity. You need to purchase the exact same type, brand, and model of storage array from a single storage vendor for both the source and target sides of your DR solution, and you need to budget for exactly the same storage class and tier. One of those storage arrays will stay dormant until a recovery situation requires it to be active. An array-based solution typically replicates an entire volume even if only one VM in the volume needs to be replicated; it does not provide the flexibility of replicating a single VM. It also requires multiple points of management while performing disaster recovery tasks, and needs a separate run book management tool along with the storage array management console.

Hypervisor-Based Replication

Hypervisor-based replication is a good option for organizations that have fully virtualized environments. The agent that captures the changes on the production servers sits at the hypervisor layer. Since hypervisor-based replication is "VM-aware," it is possible to select the VMs that need to be replicated, while saving storage space at the secondary site by not replicating the ones that do not. Hypervisor-based replication allows you to be much more granular in what you protect, and it also allows you to group VMs by defining protection groups. It can be managed from virtualization management suites such as VMware's vCenter or Microsoft's System Center. The main limitation of hypervisor-based replication is that it is specific to a hypervisor, and physical environments cannot be protected with the same solution.
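The difference in granularity between volume-level and VM-level replication can be shown with simple arithmetic. The Python sketch below uses made-up VM names and sizes, and it deliberately ignores compression, thin provisioning, and change-rate effects; it only compares how much provisioned capacity falls within the replication scope under each approach.

```python
# Hypothetical VM sizes (GB) that share a single storage volume.
volume_vms = {"web01": 80, "app01": 120, "db01": 500, "test01": 200}
to_protect = {"db01"}


def array_level_gb(vms, protected):
    """Volume-level scope: the whole volume if any VM on it is protected."""
    if any(name in vms for name in protected):
        return sum(vms.values())
    return 0


def vm_level_gb(vms, protected):
    """VM-level scope: only the VMs selected for protection."""
    return sum(size for name, size in vms.items() if name in protected)


print(array_level_gb(volume_vms, to_protect))  # 900
print(vm_level_gb(volume_vms, to_protect))     # 500
```

In this example, protecting a single 500 GB VM brings the full 900 GB volume into scope with volume-level replication, while a VM-aware approach replicates only the selected VM.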

Guest OS/Host Based Replication