|

Finesse

|

Finesse loses connection with Agent PG or CTI Server

|

Ensure that a peer or secondary duplexed node is added to avoid single point of failure.

|

New task request from Digital Routing service: No impact.

Automatic transfer requests from Finesse (for transfer on logout or RONA): Automatic transfers are initiated on the Finesse server on which the agent was signed in. Any outage on that Finesse server

can result in lost transfer requests.

Agent transfer request: The request fails because Finesse is out of service, and Finesse retains the task.

Agents signed into media on the failed Finesse server are put into WORK_NOT_READY state and made not routable. Tasks on that

server are preserved in their current state, and time continues to accrue towards the maximum task lifetime. The agent fails

over to the secondary Finesse server, and must sign in to the media again. The agent is put into the previous state. If the

agent doesn't have tasks, the agent is put in NOT_READY state.

Queued tasks: No impact.

Offered tasks: These tasks RONA because the agent cannot accept them.

Active tasks: These tasks fail over to the other Finesse server and are recovered on that server.

|

Note

|

Any active tasks that were in INTERRUPTED state at the time of the lost connection change are recovered. However, these tasks

change to the UNKNOWN state when the task is no longer INTERRUPTED. The agent can only close tasks when they are in the UNKNOWN

state.

|

|

|

Finesse

|

Agent logs out, or presence is lost while agent has active tasks

|

Ensure that a peer or secondary duplexed node is added to avoid single point of failure.

|

New task request from Digital Routing service: No impact.

Automatic or agent transfer requests: No impact.

Queued tasks: No impact.

Offered tasks: These tasks fail over to the other Finesse server and are recovered on that server. If a task's Start Timeout threshold is

exceeded during failover, the task RONAs.

Active tasks: If an agent logs out with active tasks, or agent presence is lost with active tasks, the tasks are either closed or transferred

to the original script selector depending on how the agent was configured when signing into the MRD.

If the tasks are transferred, the disposition code is CD_TASK_TRANSFERRED_AGENT_LOGOUT.

If the tasks are closed, the disposition code is CD_AGENT_LOGGED_OUT_DURING_ DIALOG.

|

|

Finesse

|

An agent has a task that must be closed or transferred, and the request fails or times out from the Digital Routing service.

|

Cisco Finesse retries the operation on receipt of a timeout or failure. If there is a timeout where the task was already closed

or transferred, the Digital Routing service returns the following error codes, which are automatically handled by Finesse.

No action required and the below error codes are only meant for troubleshooting:

-

The error code 20229 with HTTP status 400. This error code is triggered when the task is already in 'Closed' state and it

cannot be modified.

-

The error code 20230 with HTTP status 400. This error code is triggered when the task is not in the correct state for transfer

operation.

|

The customer's subsequent messages could find that the conversation is active, and an Append Message is attempted in the flow. However, you can implement an intent-based logic using a keyword such as "End Conversation" in

the flow. This allows the customer to exit the conversation if no responses are received as needed.

Alternatively, use the Get Task API of the Digital Routing service to check the status of a task in CCE whenever a conversation

is found to be active in the Search Conversation node, as indicated by the status conversationActive. If the Task is in the "Closed" state or cannot be found, use the Close Conversation API of Webex Engage to clear up the

conversation, and send a message to the end customer indicating that the conversation has been marked as Closed due to an

error. This allows the customer to restart the conversation as needed.

|

|

Finesse application

|

Finesse application fails

|

Ensure that a peer or secondary duplexed node is added to avoid single point of failure.

|

New task request from Digital Routing service: No impact.

Automatic or agent transfer requests: No impact.

Queued tasks: No impact.

Offered tasks: These tasks may RONA and get transferred back to the queue.

Active tasks: Finesse retains the tasks, and the tasks are recovered automatically once the Finesse desktop switches to peer Finesse server.

|

|

CTI Server or OPC

|

One CTI Server or one OPC fails

|

Ensure that a peer or secondary duplexed node is added to avoid single point of failure.

|

New task request from Digital Routing service : No impact

Automatic transfer requests from Finesse (for transfer on logout or RONA): Results in lost transfer requests.

Agent transfer request: The request fails, and Finesse retains the task.

Queued tasks: No impact.

Offered tasks: These tasks fail over to the other Finesse server and are recovered on that server. If a task's Start Timeout threshold is

exceeded during failover, the task RONAs.

Active tasks: These tasks fail over to the other Finesse server and are recovered on that server.

|

Note

|

Any active tasks that were in INTERRUPTED state at the time of the lost connection change are also recovered. However, these

tasks change to the UNKNOWN state when the task is no longer INTERRUPTED. The agent only can only close tasks when they are

in the UNKNOWN state.

|

|

|

OPC

|

The Open Peripheral Controllers (OPCs) on both sides fail

|

None

|

New task request from Webex Connect: No impact.

Automatic or agent transfer requests: Results in lost transfers.

Queued tasks in the CCE router: No impact.

Offered and active tasks: These tasks are lost.

|

|

Webex Connect

|

An agent has a task that must be closed. The request is accepted by the Digital Routing service however the webhook event

for task closure that is routed towards Webex Connect fails.

|

The Digital Routing service's webhooknotification mechanism includes a retry mechanism for each webhook event. When a notification

fails or times out, it is retried twice before moving on to the next notification. The time between retries is set to 3 seconds

for the first attempt and 6 seconds for the second attempt to allow for an exponential backoff and for underlying network

connectivity to recover .

|

The Customer's subsequent messages could find that the conversation is active, and an Append Message is attempted in the flow. However, you can implement an intent-based logic using a keyword such as "End Conversation" in

the flow. This allows the customer to exit the conversation if no responses are received as needed.

Alternatively, use the Get Task API of the Digital Routing service to check the status of a task in CCE whenever a conversation

is found to be active in the Search Conversation node, as indicated by the status conversationActive. If the Task is in the "Closed" state or cannot be found, use the Close Conversation API of Webex Engage to clear up the

conversation, and send a message to the end customer indicating that the conversation has been marked as Closed due to an

error. This allows the customer to restart the conversation as needed.

|

Note

|

This is not applicable for conversations that are initiated through email.

|

|

|

Webex Connect / Webex Engage

|

An agent has a task that must be closed or transferred. Either the Webex Connect fails to process the webhook event, or the

corresponding Webex Engage API that is invoked to close the conversation or to remove the participant, fails.

|

Insuch scenarios, the Digital Routing service and Finesse clears the task in the system, however, the conversation is still

active in Webex Engage and the agent is deemed to be in the conversation.

The Agent Desktop and Finesse server automatically clear the task based on one of the following scenarios:

-

Operation that the agent initiates for Close or Transfer.

-

Operation that the Finesse server auto initiates owing to RONA.

-

Operation when the agent logs out because of closing the desktop browser while still having tasks in the Agent inbox or when

Finesse server initiates presence driven logout because of Agent desktop losing connectivity with the Finesse server for an

extended period.

|

The Customer's subsequent messages could find that the conversation is active, and an Append Message is attempted in the flow. However, you can implement an intent-based logic using a keyword such as "End Conversation" in

the flow. This allows the customer to exit the conversation if no responses are received as needed.

Alternatively, use the Get Task API of the Digital Routing service to check the status of a task in CCE whenever a conversation

is found to be active in the Search Conversation node, as indicated by the status conversationActive. If the Task is in the "Closed" state or cannot be found, use the Close Conversation API of Webex Engage to clear up the

conversation, and send a message to the end customer indicating that the conversation has been marked as Closed due to an

error. This allows the customer to restart the conversation as needed.

|

Note

|

This is not applicable for conversations that are initiated through email.

|

|

|

Digital Routing service / Webex Connect

|

An agent clicks Accept or Start Task to accept the task, however, the Webex Engage user is not part of the conversation yet.

|

This is a race condition in which the Webhook event from the Digital Routing service does not reach Webex Connect in time

or is still in the process of being sent, and the Webex Engage API to add the agent to the conversation is yet to be initiated.

Finesse has a retry mechanism that is based on the specific error code that the Webex Engage returns while loading the media,

which indicates that the user is not a participant in the conversation. It retries 3 times at an interval of 3 seconds to

load the conversation before giving up and showing an error to the Agent. Finesse triggers a CloseTask Action with a specific

disposition code to state that the conversation could not be loaded. This results in the Digital Routing service to trigger

a Closed notification and the conversation being marked as Closed.

|

If agent clicks Accept for the task too quickly, the agent may notice a delay of a few seconds for the conversation to load.

The customer may see a notification that the agent was being connected based on the flow, which handles the ROUTED webhook

event. However, the CLOSED webhook notification that follows would indicate to the customer that the conversation has been

marked as Closed. The disposition code in the Closed webhook event can be relied upon in the CLOSED flow, to relay an abnormal

termination message to the end user.

|

|

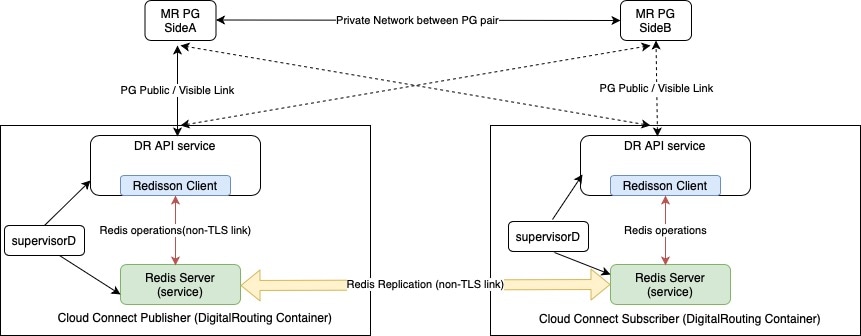

Digital Routing service

|

Double failure of the Digital Routing service:

The Digital Routing service works in an Active-Standby model for high availability. Whenthe Digital Routing service on the

Cloud Connect restarts,the MR PG loses its connection to the service. The MR PG then attempts to connect to the Digital Routing

service, which is running on the peer Cloud Connect node. If the service does not accept the connection request for whatever

reason, it results in the double failure of the Digital Routing service.

|

If you are planning for a maintenance window for the active side of the Digital Routing service, ensure that the standby side

is healthy, and can accept the connection request from MR PG.

|

When the MR PG connects back to the Digital Routing service that had restarted and which still doesn’t have any task context

since the peer was not marked as Primary / Active, all task contexts including the tasks that were queued in the CCE router

and waiting to be assigned to agents, as well as the tasks that were being handled by the agents before the double failure,

are lost.

|

Feedback

Feedback