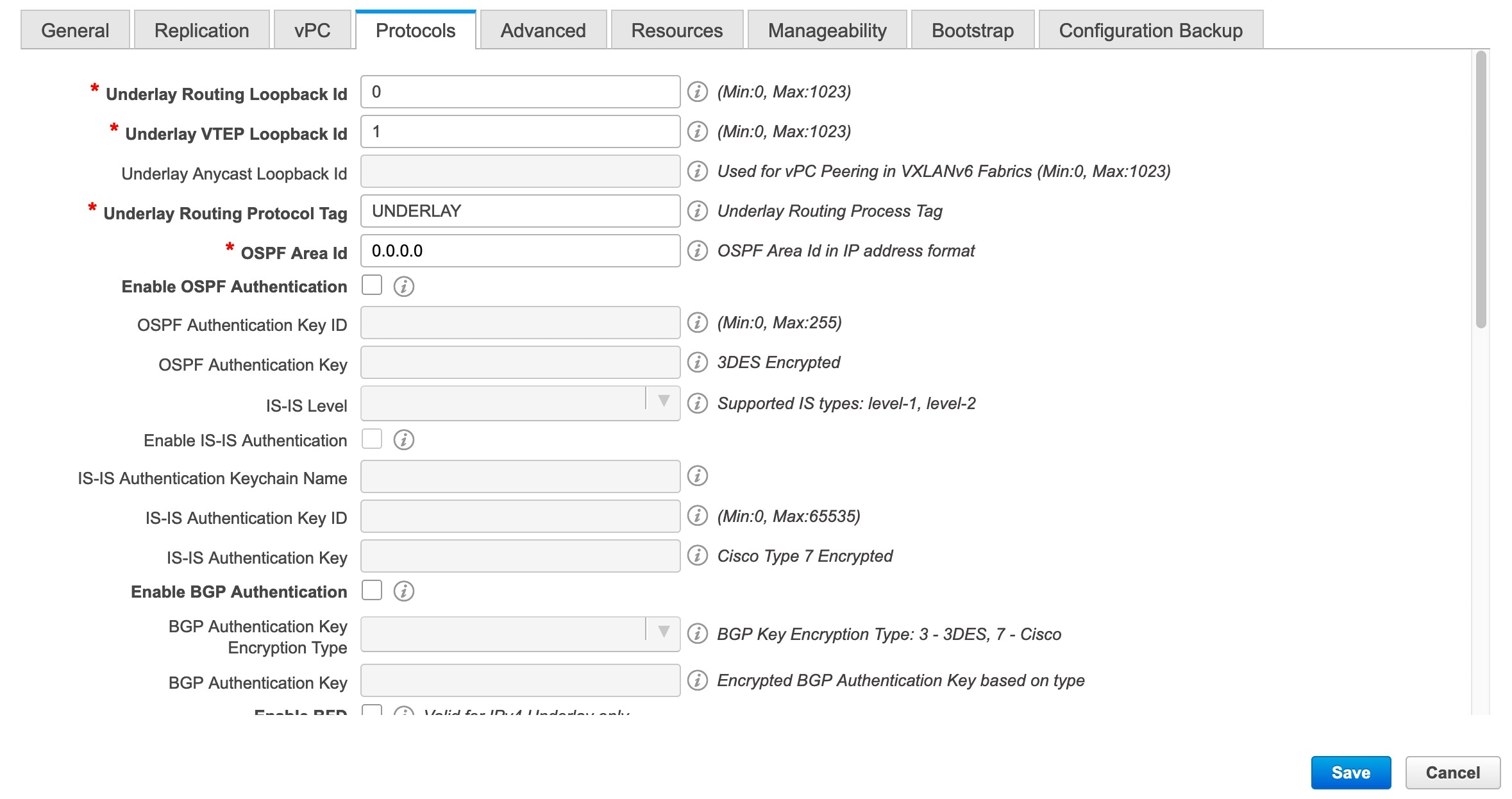

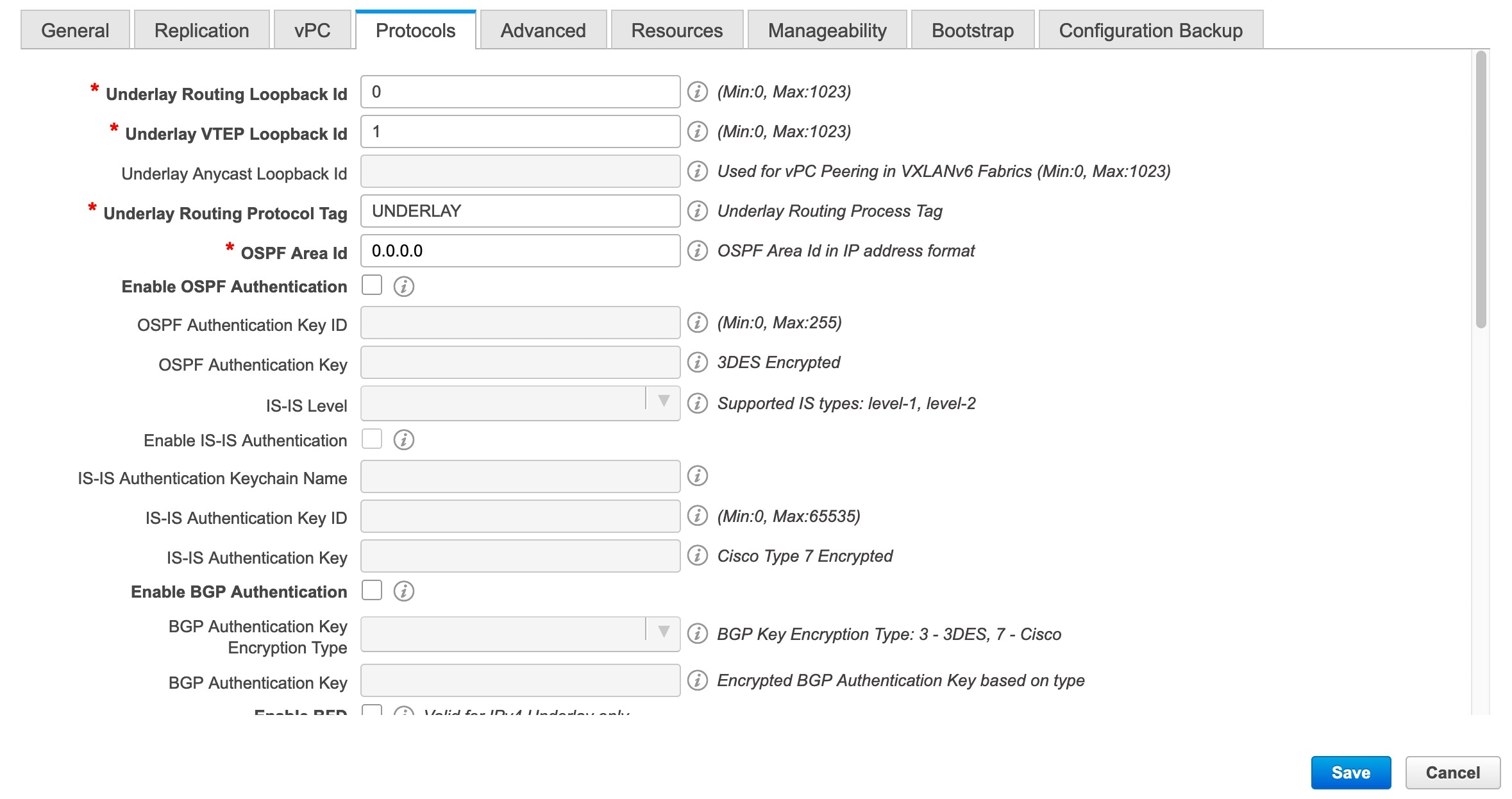

Underlay Routing Loopback Id - The loopback interface ID is populated as 0 because loopback0 is usually used for fabric underlay IGP peering. This value must match the existing configuration on the switches and must be the same across all the switches.

Underlay VTEP Loopback Id - The loopback interface ID is populated as 1 because loopback1 is usually used for VTEP peering. This value must match the existing configuration on the switches and must be the same across all the switches where VTEPs are present.

Link-State Routing Protocol Tag - Enter the existing fabric’s routing protocol tag in this field to define the type of network.

OSPF Area ID – The OSPF area ID of the existing fabric, if OSPF is used as the IGP within the fabric.

Note: The OSPF or IS-IS authentication fields are enabled based on your selection in the Link-State Routing Protocol field in the General tab.

Enable OSPF Authentication – Select the check box to enable OSPF authentication. Deselect the check box to disable it. If you enable this field, the OSPF Authentication Key ID and OSPF Authentication Key fields are enabled.

OSPF Authentication Key ID – Enter the OSPF authentication key ID.

OSPF Authentication Key - The OSPF authentication key must be the 3DES key from the switch.

Note: Plain text passwords are not supported. Log in to the switch and retrieve the OSPF authentication details.

You can obtain the OSPF authentication details by using the show run ospf command on your switch.

# show run ospf | grep message-digest-key

ip ospf message-digest-key 127 md5 3 c7c83ec78f38f32f3d477519630faf7b

In this example, the OSPF authentication key ID is 127 and the authentication key is c7c83ec78f38f32f3d477519630faf7b.
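The extraction described above can be sketched in Python. This is a minimal illustration, not a DCNM utility: the function name `parse_ospf_md5_key` is hypothetical, and the pattern assumes the line has exactly the shape shown in the sample output (key ID, `md5`, encryption type, key).

```python
import re

# Matches the line shape shown in the sample output:
# ip ospf message-digest-key <key-id> md5 <encryption-type> <key>
_OSPF_MD5_RE = re.compile(
    r"ip ospf message-digest-key\s+(\d+)\s+md5\s+(\d+)\s+(\S+)"
)


def parse_ospf_md5_key(line):
    """Return (key_id, encryption_type, key), or None if the line does not match."""
    match = _OSPF_MD5_RE.search(line)
    if match is None:
        return None
    key_id, enc_type, key = match.groups()
    return int(key_id), int(enc_type), key


sample = "ip ospf message-digest-key 127 md5 3 c7c83ec78f38f32f3d477519630faf7b"
print(parse_ospf_md5_key(sample))
# (127, 3, 'c7c83ec78f38f32f3d477519630faf7b')
```

The first element goes in the OSPF Authentication Key ID field and the last element in the OSPF Authentication Key field; the middle element (3) is the encryption type.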

For information about how to configure a new key and retrieve it, see Retrieving the Authentication Key.

IS-IS Level - Select the IS-IS level from this drop-down list.

Enable IS-IS Authentication - Select the check box to enable IS-IS authentication. Deselect the check box to disable it. If you enable this field, the IS-IS authentication fields are enabled.

IS-IS Authentication Keychain Name - Enter the keychain name.

IS-IS Authentication Key ID - Enter the IS-IS authentication key ID.

IS-IS Authentication Key - Enter the Cisco Type 7 encrypted key.

Note: Plain text passwords are not supported. Log in to the switch and retrieve the IS-IS authentication details.

You can obtain the IS-IS authentication details by using the show run | section "key chain" command on your switch.

    # show run | section "key chain"
    key chain CiscoIsisAuth
      key 127
        key-string 7 075e731f

In this example, the keychain name is CiscoIsisAuth, the key ID is 127, and the type 7 authentication key is 075e731f.
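As a rough sketch of how the three IS-IS field values map onto that output, the following Python walks the key chain section line by line. The function name `parse_key_chains` is hypothetical, and the parser assumes only the three line types shown in the sample (`key chain`, `key`, `key-string`); other key chain sub-commands are ignored.

```python
def parse_key_chains(config_text):
    """Return a list of (keychain_name, key_id, key_type, key_string) tuples."""
    results = []
    chain = None   # name from the most recent "key chain <name>" line
    key_id = None  # ID from the most recent "key <id>" line
    for raw in config_text.splitlines():
        tokens = raw.split()
        if not tokens:
            continue
        if tokens[:2] == ["key", "chain"] and len(tokens) >= 3:
            chain, key_id = tokens[2], None
        elif tokens[0] == "key" and len(tokens) == 2 and tokens[1].isdigit():
            key_id = int(tokens[1])
        elif (tokens[0] == "key-string" and len(tokens) == 3
              and tokens[1].isdigit()
              and chain is not None and key_id is not None):
            results.append((chain, key_id, int(tokens[1]), tokens[2]))
    return results


sample = """\
key chain CiscoIsisAuth
  key 127
    key-string 7 075e731f
"""
print(parse_key_chains(sample))
# [('CiscoIsisAuth', 127, 7, '075e731f')]
```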

Enable BGP Authentication - Select the check box to enable BGP authentication. Deselect the check box to disable it. If you enable this field, the BGP Authentication Key Encryption Type and BGP Authentication Key fields are enabled.

BGP Authentication Key Encryption Type – Choose 3 for the 3DES encryption type or 7 for the Cisco encryption type.

BGP Authentication Key - Enter the encrypted key based on the encryption type.

Note: Plain text passwords are not supported. Log in to the switch and retrieve the BGP authentication details.

You can obtain the BGP authentication details by using the show run bgp command on your switch.

    # show run bgp
    neighbor 10.2.0.2
      remote-as 65000
      password 3 sd8478fswerdfw3434fsw4f4w34sdsd8478fswerdfw3434fsw4f4w3

In this example, the BGP authentication key is displayed after the encryption type 3.
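A minimal Python sketch of the same lookup follows. The function name `parse_bgp_passwords` is hypothetical, and the code assumes each `password` line belongs to the most recent `neighbor` line and uses encryption type 3 or 7, as in the sample output.

```python
import re


def parse_bgp_passwords(config_text):
    """Map each neighbor address to its (encryption_type, key) pair."""
    passwords = {}
    neighbor = None  # address from the most recent "neighbor <ip>" line
    for raw in config_text.splitlines():
        line = raw.strip()
        found = re.match(r"neighbor\s+(\S+)", line)
        if found:
            neighbor = found.group(1)
            continue
        found = re.match(r"password\s+([37])\s+(\S+)$", line)
        if found and neighbor is not None:
            passwords[neighbor] = (int(found.group(1)), found.group(2))
    return passwords


sample = """\
neighbor 10.2.0.2
  remote-as 65000
  password 3 sd8478fswerdfw3434fsw4f4w34sdsd8478fswerdfw3434fsw4f4w3
"""
print(parse_bgp_passwords(sample))
# {'10.2.0.2': (3, 'sd8478fswerdfw3434fsw4f4w34sdsd8478fswerdfw3434fsw4f4w3')}
```

The first tuple element corresponds to the BGP Authentication Key Encryption Type field and the second to the BGP Authentication Key field.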

Enable PIM Hello Authentication - Enables the PIM hello authentication.

PIM Hello Authentication Key - Specifies the PIM hello authentication key.

Enable BFD feature – Select the check box to enable the BFD feature.

The BFD feature is disabled by default.

Make sure that the BFD feature setting matches the switch configuration. If the switch configuration contains feature bfd but the BFD feature is not enabled in the fabric settings, config compliance generates a diff to remove the BFD feature after brownfield migration. That is, no feature bfd is generated after migration.

From Cisco DCNM Release 11.3(1), BFD within a fabric is supported natively. The BFD feature is disabled by default in the Fabric Settings. If enabled, BFD is enabled for the underlay protocols with the default settings. Any required custom BFD configurations must be deployed through the per-switch freeform or per-interface freeform policies.

The following config is pushed after you select the Enable BFD check box:

feature bfd

For information about BFD feature compatibility, refer to your respective platform documentation. For information about the supported software images, see Compatibility Matrix for Cisco DCNM.

Enable BFD for iBGP: Select the check box to enable BFD for the iBGP neighbor. This option is disabled by default.

Enable BFD for OSPF: Select the check box to enable BFD for the OSPF underlay instance. This option is disabled by default, and it is grayed out if the link state protocol is ISIS.

Enable BFD for ISIS: Select the check box to enable BFD for the ISIS underlay instance. This option is disabled by default, and it is grayed out if the link state protocol is OSPF.

Enable BFD for PIM: Select the check box to enable BFD for PIM. This option is disabled by default, and it is grayed out if the replication mode is Ingress.

Here are examples of the BFD global policies:

    router ospf <ospf tag>
      bfd

    router isis <isis tag>
      address-family ipv4 unicast
        bfd

    ip pim bfd

    router bgp <bgp asn>
      neighbor <neighbor ip>
        bfd
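To show how the BFD check boxes interact with the link-state protocol and replication mode, here is a small Python sketch that assembles the policy lines above from a set of flags. It is an illustration only and does not reproduce DCNM's actual templates: the function name `render_bfd_policies`, its parameters, and the string values `"ospf"`, `"is-is"`, and `"Ingress"` are all assumptions. It also assumes the Enable BFD feature check box is already selected, so `feature bfd` is always emitted.

```python
def render_bfd_policies(link_state, igp_tag, bgp_asn, ibgp_neighbors,
                        replication_mode="Multicast",
                        bfd_ibgp=False, bfd_ospf=False,
                        bfd_isis=False, bfd_pim=False):
    """Return illustrative BFD config lines for one switch."""
    lines = ["feature bfd"]
    # BFD for OSPF is only available when OSPF is the link-state protocol.
    if bfd_ospf and link_state == "ospf":
        lines += [f"router ospf {igp_tag}", "  bfd"]
    # BFD for ISIS is only available when IS-IS is the link-state protocol.
    if bfd_isis and link_state == "is-is":
        lines += [f"router isis {igp_tag}",
                  "  address-family ipv4 unicast",
                  "    bfd"]
    # BFD for PIM is unavailable when the replication mode is Ingress.
    if bfd_pim and replication_mode != "Ingress":
        lines.append("ip pim bfd")
    if bfd_ibgp and ibgp_neighbors:
        lines.append(f"router bgp {bgp_asn}")
        for neighbor_ip in ibgp_neighbors:
            lines += [f"  neighbor {neighbor_ip}", "    bfd"]
    return lines


config = render_bfd_policies("ospf", "UNDERLAY", 65000, ["10.2.0.2"],
                             bfd_ibgp=True, bfd_ospf=True, bfd_isis=True)
print("\n".join(config))
```

In this usage example the IS-IS flag is ignored because the link-state protocol is OSPF, mirroring the grayed-out check box.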

Enable BFD Authentication: Select the check box to enable BFD authentication. If you enable this field, the BFD Authentication Key ID and BFD Authentication Key fields are editable.

Note:

- BFD Authentication is not supported when the Fabric Interface Numbering field under the General tab is set to unnumbered. The BFD authentication fields are grayed out automatically. BFD authentication is valid only for P2P interfaces.

- After you upgrade from DCNM Release 11.2(1) with BFD enabled to DCNM Release 11.3(1), the following configs are pushed to the switch:

  no ip redirects

  no ipv6 redirects

BFD Authentication Key ID: Specifies the BFD authentication key ID for the interface authentication. The default value is 100.

BFD Authentication Key: Specifies the BFD authentication key.

For information about how to retrieve the BFD authentication parameters, see Retrieving the Authentication Key.

iBGP Peer-Template Config – Add iBGP peer template configurations on the leaf switches and route reflectors to establish an iBGP session between the leaf switch and route reflector. Set this field based on the switch configuration. If this field is blank, it implies that the iBGP peer template is not used. If the iBGP peer template is used, enter the peer template definition as defined on the switch. The peer template name on devices configured with BGP should be the same as defined here.

Note: If you use the iBGP peer template, include the BGP authentication configuration in this template config field. Additionally, uncheck the Enable BGP Authentication check box to avoid duplicating the BGP configuration.

Until Cisco DCNM Release 11.3(1), the iBGP peer template for the iBGP definition on the leafs or border role devices and the BGP RRs was the same. From DCNM Release 11.4(1), the following fields can be used to specify different configurations:

- iBGP Peer-Template Config – Specifies the config used for RR and spines with border role.

- Leaf/Border/Border Gateway iBGP Peer-Template Config – Specifies the config used for leaf, border, or border gateway. If this field is empty, the peer template defined in iBGP Peer-Template Config is used on all BGP enabled devices (RRs, leafs, border, or border gateway roles).

In brownfield migration, if the spine and leaf use different peer template names, both the iBGP Peer-Template Config and Leaf/Border/Border Gateway iBGP Peer-Template Config fields need to be set according to the switch config. If the spine and leaf use the same peer template name and content (except for the "route-reflector-client" CLI), only the iBGP Peer-Template Config field in the fabric settings needs to be set. If the fabric settings for the iBGP peer templates do not match the existing switch configuration, an error message is generated and the migration does not proceed.
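The brownfield rule above can be expressed as a short decision function. This is a hedged sketch rather than DCNM's validation logic: the function names and the sample template lines are hypothetical, and the case of identical names with differing content is not described in the text, so the sketch returns `None` for it instead of guessing.

```python
BOTH_FIELDS = [
    "iBGP Peer-Template Config",
    "Leaf/Border/Border Gateway iBGP Peer-Template Config",
]


def _without_rr_client(template_lines):
    """Normalize template lines and drop the route-reflector-client CLI."""
    stripped = [line.strip() for line in template_lines]
    return [line for line in stripped
            if line and line != "route-reflector-client"]


def peer_template_fields(spine_name, spine_lines, leaf_name, leaf_lines):
    """Return the fabric setting fields to fill in, or None if not covered."""
    if spine_name != leaf_name:
        return list(BOTH_FIELDS)
    if _without_rr_client(spine_lines) == _without_rr_client(leaf_lines):
        return [BOTH_FIELDS[0]]
    return None  # same name, different content: not covered by the text


# Illustrative template bodies; the values are made up for this example.
leaf = ["remote-as 65000", "update-source loopback0",
        "address-family l2vpn evpn", "send-community",
        "send-community extended"]
spine = leaf + ["route-reflector-client"]

print(peer_template_fields("iBGP-PEER", spine, "iBGP-PEER", leaf))
# ['iBGP Peer-Template Config']
```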
