Multichassis LACP

In Carrier Ethernet networks, various redundancy mechanisms provide resilient interconnection of nodes and networks. The choice of redundancy mechanisms depends on various factors such as transport technology, topology, single node versus entire network multihoming, capability of devices, autonomous system (AS) boundaries or service provider operations model, and service provider preferences.

Carrier Ethernet network high-availability can be achieved by employing both intra- and interchassis redundancy mechanisms. Cisco's Multichassis EtherChannel (MCEC) solution addresses the need for interchassis redundancy mechanisms, where a carrier wants to "dual home" a device to two upstream points of attachment (PoAs) for redundancy. Some carriers either cannot or will not run loop prevention control protocols in their access networks, making an alternative redundancy scheme necessary. MCEC addresses this issue with enhancements to the 802.3ad Link Aggregation Control Protocol (LACP) implementation. These enhancements are provided in the Multichassis LACP (mLACP) feature described in this document.

Finding Feature Information

Your software release may not support all the features documented in this module. For the latest feature information and caveats, see the release notes for your platform and software release. To find information about the features documented in this module, and to see a list of the releases in which each feature is supported, see the Feature Information Table at the end of this document.

Use Cisco Feature Navigator to find information about platform support and Cisco software image support. To access Cisco Feature Navigator, go to www.cisco.com/go/cfn. An account on Cisco.com is not required.

Prerequisites for mLACP

- The command lacp max-bundle must be used on all PoAs in order to operate in PoA control and shared control modes.

- The maximum number of links configured cannot be less than the total number of interfaces in the link aggregation group (LAG) that is connected to the PoA.

- Each PoA may be connected to a dual-homed device (DHD) with a different number of links for the LAG (configured with a different number of maximum links).

- Each PoA must be configured using the port-channel min-links command with the desired minimum number of links to maintain the LAG in the active state.

- Each PoA must be configured with the errdisable recovery cause mlacp command if brute-force failover is being used.

- For DHD control there must be an equal number of links going to each PoA.

- The max-bundle value must equal the number of links connected locally to the PoA (no local intra-PoA active or standby protection).

- LACP fast switchover must be configured on all devices to speed convergence.

Restrictions for mLACP

- mLACP does not support Fast Ethernet.

- mLACP does not support half-duplex links.

- mLACP does not support multiple neighbors.

- Converting a port channel to mLACP can cause a service disruption.

- The maximum number of member links per LAG per PoA is restricted by the maximum number of ports per port channel, as limited by the platform.

- System priority on a DHD must be a lesser priority than on PoAs.

- MAC Tunneling Protocol (MTP) supports only one member link in a port channel.

- A port-channel or its member links may flap while LACP stabilizes.

- DHD-based control does not function when min-links is not configured.

- DHD-controlled revertive behavior with min-links is not supported.

- Brute-force failover always causes min-link failures.

- Any failure with brute-force failover behaves revertively.

Information About mLACP

- Overview of Multichassis EtherChannel

- Interactions with the MPLS Pseudowire Redundancy Mechanism

- Redundancy Mechanism Processes

- Dual-Homed Topology Using mLACP

- Failure Protection Scenarios

- Operational Variants

- mLACP Failover

Overview of Multichassis EtherChannel

In Multichassis EtherChannel (MCEC), the DHD is dual-homed to two upstream PoAs. The DHD is incapable of running any loop prevention control protocol such as Multiple Spanning Tree (MST). Therefore, another mechanism is required to prevent forwarding loops over the redundant setup. One method is to place the DHD's uplinks in a LAG, commonly referred to as EtherChannel. This method assumes that the DHD is capable of running only IEEE 802.3ad LACP for establishing and maintaining the LAG.

LACP, as defined in IEEE 802.3ad, is a link-level control protocol that allows the dynamic negotiation and establishment of LAGs. An extension of the LACP implementation to PoAs is required to convey to a DHD that it is connected to a single virtual LACP peer and not to two disjointed devices. This extension is called Multichassis LACP or mLACP. The figure below shows this setup.

The PoAs forming a virtual LACP peer, from the perspective of the DHD, are defined as members of a redundancy group. For the PoAs in a redundancy group to appear as a single device to the DHD, the states between them must be synchronized through the Interchassis Communication Protocol (ICCP), which provides a control-only interchassis communication channel (ICC).

In Cisco IOS Release 12.2(33)SRE, the system functions in active/standby redundancy mode. In this mode, only the DHD uplinks that connect to a single PoA can be active at any time. The DHD recognizes one PoA as active and the other as standby, but this does not preclude a given PoA from being active for one DHD and standby for another. This capability allows two PoAs to perform load sharing for different services.

Interactions with the MPLS Pseudowire Redundancy Mechanism

The network setup shown in the figure above can be used to provide provider edge (PE) node redundancy for Virtual Private LAN Service (VPLS) and Virtual Private Wire Service (VPWS) deployments over Multiprotocol Label Switching (MPLS). In these deployments, the uplinks of the PoAs host the MPLS pseudowires that provide redundant connectivity over the core to remote PE nodes. Proper operation of the network requires interaction between the redundancy mechanisms employed on the attachment circuits (for example, mLACP) and those employed on the MPLS pseudowires. This interaction ensures the state (active or standby) is synchronized between the attachment circuits and pseudowires for a given PoA.

RFC 4447 introduced a mechanism to signal pseudowire status via the Label Distribution Protocol (LDP) and defined a set of status codes to report attachment circuit as well as pseudowire fault information. The Preferential Forwarding Status bit definition (draft-ietf-pwe3-redundancy-bit) proposes to extend these codes to include two bits for pseudowire redundancy applications: the preferential forwarding status bit and the request pseudowire switchover bit.

The draft also proposes two modes of operation:

- Independent mode--The local PE decides on its pseudowire status independent of the remote PE.

- Primary and secondary modes--One of the PEs determines the state of the remote side through a handshake mechanism.

For the mLACP feature, operation is based on the independent mode. By running ICC between the PoAs, only the preferential forwarding status bit is required; the request pseudowire switchover bit is not used.

The local pseudowire status (active or standby) is determined independently by the PoAs in a redundancy group and then relayed to the remote PEs in the form of a notification. Similarly, the remote PEs perform their own selection of their pseudowire status and notify the PoAs on the other side of the core.

After this exchange of local states, the pseudowires used for traffic forwarding are those selected to be active independently on both local and remote ends.

The attachment circuit redundancy mechanism determines and controls the pseudowire redundancy mechanism. mLACP determines the status of the attachment circuit on a given PoA according to the configured LACP system and port priorities, and then the status of the pseudowires on a given PoA is synchronized with that of the local attachment circuits. This synchronization guarantees that the PoA with the active attachment circuits has its pseudowires active. Similarly, the PoA with the standby attachment circuits has its pseudowires in standby mode. By ensuring that the forwarding status of the attachment circuits is synchronized with that of the pseudowires, the need to forward data between PoA nodes within a redundancy group can be avoided. This synchronization saves platform bandwidth that would otherwise be wasted on inter-PoA data forwarding in case of failures.
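As a rough illustration of the independent mode described above, the following Python sketch mirrors the attachment circuit status onto a PoA's pseudowires and then keeps only the pseudowires that both ends selected as active. The function names, pseudowire labels, and status strings are hypothetical and do not correspond to any Cisco IOS data structure.

```python
def local_pseudowire_status(ac_status, pseudowires):
    """Give every pseudowire on a PoA the same status as its attachment circuit."""
    if ac_status not in ("active", "standby"):
        raise ValueError("status must be 'active' or 'standby'")
    return {pw: ac_status for pw in pseudowires}


def forwarding_pseudowires(local, remote):
    """Return the pseudowires that are active on both the local and remote ends."""
    return sorted(
        pw for pw, status in local.items()
        if status == "active" and remote.get(pw) == "active"
    )


# PoA1 holds the active attachment circuit; PoA2 holds the standby one.
local = {}
local.update(local_pseudowire_status("active", ["pw1", "pw2"]))
local.update(local_pseudowire_status("standby", ["pw3", "pw4"]))

# Status selected independently by the remote PEs (illustrative values only).
remote = {"pw1": "active", "pw2": "standby", "pw3": "active", "pw4": "standby"}

print(forwarding_pseudowires(local, remote))  # prints ['pw1']
```

Because the pseudowires on the standby PoA are never active locally, no traffic needs to cross between the PoAs in this model.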

Redundancy Mechanism Processes

The Carrier Ethernet redundancy solution should include the following processes (and how they apply to the mLACP solution):

- Attachment circuit active or standby status selection--This selection can be performed by the access node or network, the aggregation node, or combination of the two. For mLACP, the attachment circuit status selection is determined through collaboration between the DHD and the PoAs.

- Pseudowire forwarding status notification--This notification is mandatory for mLACP operation in VPWS and VPLS deployments; that is, when the PoA uplinks employ pseudowire technology. When the PoAs decide on either an active or standby role, they need to signal the status of the associated pseudowires to the PEs on the far end of the network. For MPLS pseudowires, this is done using LDP.

- MAC flushing indication--This indication is mandatory for any redundancy mechanism in order to speed convergence time and eliminate potential traffic blackholing. The mLACP redundancy mechanism should be integrated with relevant 802.1Q/802.1ad/802.1ah MAC flushing mechanisms as well as MAC flushing mechanisms for VPLS.

Note: Blackholing occurs when incoming traffic is dropped without informing the source that the data did not reach its intended recipient. A black hole can be detected only when lost traffic is monitored.

- Active VLAN notification--For mLACP, this notification is not required as long as the PoAs follow the active/standby redundancy model.

The figure below shows redundancy mechanisms in Carrier Ethernet networks.

Dual-Homed Topology Using mLACP

The mLACP feature allows the LACP state machine and protocol to operate in a dual-homed topology. The mLACP feature decouples the existing LACP implementation from the multichassis specific requirements, allowing LACP to maintain its adherence to the IEEE 802.3ad standard. The mLACP feature exposes a single virtual instance of IEEE 802.3ad to the DHD for each redundancy group. The virtual LACP instance interoperates with the DHD according to the IEEE 802.3ad standard to form LAGs spanning two or more chassis.

- LACP and 802.3ad Parameter Exchange

- Port Identifier

- Port Number

- Port Priority

- Multichassis Considerations

- System MAC Address

- System Priority

- Port Key

LACP and 802.3ad Parameter Exchange

In IEEE 802.3ad, the concatenation of the LACP system priority and system MAC address forms an 8-byte LACP system ID. The two-byte system priority occupies the two most significant octets of the system ID, and the system MAC address makes up the remainder (octets 3 to 8). When system IDs are compared, the numerically lower ID has the higher priority.

To provide the highest LACP priority, the mLACP module communicates the system MAC address and priority values for the given redundancy group to its redundancy group peer(s) and vice versa. The mLACP then chooses the lowest system ID value among the PoAs in the given redundancy group to use as the system ID of the virtual LACP instance of the redundancy group.
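The system ID layout and the lowest-value selection described above can be sketched in Python as follows. This is a minimal model for illustration, not the mLACP implementation; the function names and the sample priority and MAC values are assumptions.

```python
def system_id(priority, mac):
    """Build the 8-byte LACP system ID: 2-byte priority, then 6-byte MAC."""
    if not 0 <= priority <= 0xFFFF:
        raise ValueError("system priority must fit in two bytes")
    digits = mac.replace(".", "").replace(":", "").replace("-", "")
    mac_bytes = bytes.fromhex(digits)
    if len(mac_bytes) != 6:
        raise ValueError("MAC address must be six bytes")
    return priority.to_bytes(2, "big") + mac_bytes


def virtual_system_id(poa_system_ids):
    """Pick the numerically lowest system ID in the redundancy group.

    Equal-length byte strings compare like big-endian unsigned integers,
    so min() yields the ID with the highest LACP priority.
    """
    return min(poa_system_ids)


poa1 = system_id(100, "aaaa.bbbb.0001")
poa2 = system_id(100, "aaaa.bbbb.0002")

print(virtual_system_id([poa1, poa2]).hex())  # prints 0064aaaabbbb0001
```

Because the priority occupies the most significant octets, a lower priority value outranks any MAC address: `system_id(100, "ffff.ffff.ffff")` compares lower than `system_id(32768, "0000.0000.0001")`.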

Cisco IOS Release 12.2(33)SRE introduces two LACP configuration commands to specify the system MAC address and system priority used for a given redundancy group: mlacp system-mac mac-address and mlacp system-priority priority-value. These commands provide better settings to determine which side of the attachment circuit will control the selection logic of the LAG. The default value for the system MAC address is the chassis backplane default MAC address. The default value for the priority is 32768.

Port Identifier

IEEE 802.3ad uses a 4-byte port identifier to uniquely identify a port within a system. The port identifier is the concatenation of the port priority and port number (unique per system) and identifies each port in the system. Numerical comparisons between port IDs are performed by unsigned integer comparisons where the 2-byte Port Priority field is placed in the most significant two octets of the port ID. The 2-byte port number makes up the third and fourth octets. The mLACP feature coordinates the port IDs for a given redundancy group to ensure uniqueness.
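The following Python sketch shows one way to model the 4-byte port identifier and its unsigned comparison. The function name and sample values are hypothetical; how mLACP coordinates port numbers across PoAs is not modeled here.

```python
def port_id(priority, number):
    """Combine a 2-byte port priority and a 2-byte port number into a port ID.

    The priority occupies the two most significant octets, so comparing the
    resulting integers compares priority first and port number second.
    """
    for name, value in (("priority", priority), ("number", number)):
        if not 0 <= value <= 0xFFFF:
            raise ValueError(f"port {name} must fit in two bytes")
    return (priority << 16) | number


default_port = port_id(32768, 1)
print(hex(default_port))  # prints 0x80000001

# A numerically lower priority wins regardless of the port number.
print(port_id(100, 0xFFFF) < port_id(101, 0))  # prints True
```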

Port Number

A port number serves as a unique identifier for a port within a device. The LACP port number for a port is equal to the port's ifIndex value (or is based on the slot and subslot identifiers on the Cisco 7600 router).

LACP relies on port numbers to detect rewiring. For multichassis operation, you must enter the mlacp node-id node-id command to coordinate port numbers between the two PoAs in order to prevent overlap.

Port Priority

Port priority is used by the LACP selection logic to determine which ports should be activated and which should be left in standby mode when there are hardware or software limitations on the maximum number of links allowed in a LAG. For multichassis operation in active/standby redundancy mode, the port priorities for all links connecting to the active PoA must be higher than the port priorities for links connecting to the standby PoA. These port priorities can either be guaranteed through explicit configuration or the system can automatically adjust the port priorities depending on selection criteria. For example, select the PoA with the highest port priority to be the active PoA and dynamically adjust the priorities of all other links with the same port key to an equal value.

In Cisco IOS Release 12.2(33)SRE, the mLACP feature supports only the active/standby redundancy model. The LACP port priorities of the individual member links should be the same for each link belonging to the LAG of a given PoA. To support this requirement, the mlacp lag-priority command is implemented in interface configuration mode in the command-line interface (CLI). This command sets the LACP port priorities for all the local member links in the LAG. Individual member link LACP priorities (configured by the lacp port-priority command) are ignored on links belonging to mLACP port channels.

The mlacp lag-priority command may also be used to force a PoA failover during operation in the following two ways:

- Set the active PoA's LAG priority to a value numerically greater than the LAG priority on the standby PoA. This setting results in the quickest failover because it requires the fewest LACP link state transitions on the standby links before they turn active.

- Set the standby PoA's LAG priority to a value numerically less than the LAG priority on the active PoA. This setting results in a slightly longer failover time because standby links have to signal OUT_OF_SYNC to the DHD before the links can be brought up and go active.

In some cases, the operational priority and the configured priority may differ when using dynamic port priority management to force failovers. In this case, the configured version will not be changed unless the port channel is operating in nonrevertive mode. Enter the show lacp multichassis port-channel command to view the current operational priorities. The configured priority values can be displayed by using the show running-config command.

Multichassis Considerations

Because LACP is a link layer protocol, all messages exchanged over a link contain information that is specific and local to that link. The exchanged information includes:

- System attributes--priority and MAC address

- Link attributes--port key, priority, port number, and state

When extending LACP to operate over a multichassis setup, synchronization of the protocol attributes and states between the two chassis is required.

System MAC Address

LACP relies on the system MAC address to determine the identity of the remote device connected over a particular link. Therefore, to mask the DHD from its connection to two disjointed devices, coordination of the system MAC address between the two PoAs is essential. In Cisco IOS software, the LACP system MAC address defaults to the ROM backplane base MAC address and cannot be changed by configuration. For multichassis operation the following two conditions are required:

- System MAC address for each PoA should be communicated to its peer--For example, the PoAs elect the MAC address with the lower numeric value to be the system MAC address. The arbitration scheme must resolve to the same value. Choosing the lower numeric MAC address has the advantage of providing higher system priority.

- System MAC address is configurable--The system priority depends, in part, on the MAC address, and a service provider would want to guarantee that the PoAs have higher priority than the DHD (for example, if both DHD and PoA are configured with the same system priority and the service provider has no control over DHD). A higher priority guarantees that the PoA port priorities take precedence over the DHD's port priority configuration. If you configure the system MAC address, you must ensure that the addresses are uniform on both PoAs; otherwise, the system will automatically arbitrate the discrepancy, as when a default MAC address is selected.

System Priority

LACP requires that a system priority be associated with every device to determine which peer's port priorities should be used by the selection logic when establishing a LAG. In Cisco IOS software, this parameter is configurable through the CLI. For multichassis operation, this parameter is coordinated by the PoAs so that the same value is advertised to the DHD.

Port Key

The port key indicates which links can form a LAG on a given system. The key is locally significant to an LACP system and need not match the key on an LACP peer. Two links are candidates to join the same LAG if they have the same key on the DHD and the same key on the PoAs; however, the key on the DHD is not required to be the same as the key on the PoAs. Given that the key is configured according to the need to aggregate ports, there are no special considerations for this parameter for multichassis operation.

Failure Protection Scenarios

The mLACP feature provides network resiliency by protecting against port, link, and node failures. These failures can be categorized into five types. The figure below shows the failure points in a network, denoted by the letters A through E.

- A--Failure of the uplink port on the DHD

- B--Failure of the Ethernet link

- C--Failure of the downlink port on the active PoA

- D--Failure of the active PoA node

- E--Failure of the active PoA uplinks

When any of these faults occur, the system reacts by triggering a switchover from the active PoA to the standby PoA. The switchover involves failing over the PoA's uplinks and downlinks simultaneously.

Failure points A and C are port failures. Failure point B is an Ethernet link failure and failure point D is a node failure. Failure point E can represent one of four different types of uplink failures when the PoAs connect to an MPLS network:

- Pseudowire failure--Individual pseudowires are monitored (for example, using VCCV-BFD) and, upon a pseudowire failure, an uplink failure is declared for the associated service instances.

- Remote PE IP path failure--The IP reachability to the remote PE is monitored (for example, using IP Route-Watch) and, upon route failure, an uplink failure is declared for all associated service instances.

- LSP failure--The LSP to a given remote PE is monitored (for example, using automated LSP-Ping) and, upon LSP failure, an uplink failure is declared for all associated service instances.

- PE isolation--The physical core-facing interfaces of the PE are monitored. When all of these interfaces go down, the PE effectively becomes isolated from the core network, and an uplink failure is declared for all affected service instances.

As long as the IP/MPLS network employs native redundancy and resiliency mechanisms such as MPLS fast reroute (FRR), the mLACP solution is sufficient for providing protection against PE isolation. Pseudowire, LSP, and IP path failures are managed by the native IP/MPLS protection procedures. That is, interchassis failover via mLACP is triggered only when a PE is completely isolated from the core network, because native IP/MPLS protection mechanisms are rendered useless. Therefore, failure point E is used to denote PE isolation from the core network.

Note: The set of core-facing interfaces that should be monitored are identified by explicit configuration. The set of core-facing interfaces must be defined independently per redundancy group. Failure point E (unlike failure point A, B, or C) affects and triggers failover for all the multichassis LAGs configured on a given PoA.

Operational Variants

LACP provides a mechanism by which a set of one or more links within a LAG are placed in standby mode to provide link redundancy between the devices. This redundancy is normally achieved by configuring more ports with the same key than the number of links a device can aggregate in a given LAG (due to hardware or software restrictions, or due to configuration). For active/standby redundancy, two ports are configured with the same port key, and the maximum number of allowed links in a LAG is configured to be 1. If the DHD and PoAs are all capable of restricting the number of links per LAG by configuration, three operational variants are possible.

DHD-based Control

The DHD is configured to limit the maximum number of links per bundle to one, whereas the PoAs are configured to limit the maximum number of links per bundle to greater than one. Thus, the selection of the active/standby link is the responsibility of the DHD. Which link is designated active and which is marked standby depends on the relative port priority, as configured on the system with the higher system priority. A PoA configured with a higher system priority can still determine the selection outcome. The DHD makes the selection and places the link with lower port priority in standby mode.

To accommodate DHD-controlled failover, the DHD must be configured with the max-bundle value equal to a number of links (L), where L is the fewest number of links connecting the DHD to a PoA. The max-bundle value restricts the DHD from bundling links to both PoAs at the same time (active/active). Although the DHD controls the selection of active/standby links, the PoA can still dictate the individual member link priorities by configuring the PoA's virtual LACP instance with a lower system priority value than the DHD's system priority.

The DHD control variant must be used with a PoA minimum link threshold failure policy where the threshold is set to L (same value for L as described above). A minimum link threshold must be configured on each of the PoAs because an A, B, or C link failure that does not trigger a failover (minimum link threshold is still satisfied) causes the DHD to add one of the standby links going to the standby PoA to the bundle. This added link results in the unsupported active/active scenario.
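To see why the minimum link threshold must equal L in the DHD control variant, consider the simplified Python model below. It assumes that each PoA has L links to the DHD, that the DHD max-bundle value is L, and that the DHD always refills its bundle from the remaining candidate links. The function and key names are hypothetical.

```python
def dhd_bundle_after_link_failures(links_per_poa, failed_active_links, poa_min_links):
    """Return how many links the DHD bundles toward each PoA after failures."""
    if not 0 <= failed_active_links <= links_per_poa:
        raise ValueError("failed links must be between 0 and links_per_poa")
    dhd_max_bundle = links_per_poa  # the value L described in the text
    surviving = links_per_poa - failed_active_links
    if surviving < poa_min_links:
        # Threshold violated: the active PoA fails over, so the DHD can
        # only bundle the links that go to the former standby PoA.
        return {"active_poa": 0, "standby_poa": dhd_max_bundle}
    # No failover: the DHD tops the bundle back up with standby links.
    return {"active_poa": surviving, "standby_poa": dhd_max_bundle - surviving}


def is_active_active(bundle):
    """True when the DHD has bundled links to both PoAs at once."""
    return bundle["active_poa"] > 0 and bundle["standby_poa"] > 0


# Two links per PoA (L = 2), one link to the active PoA fails.
print(is_active_active(dhd_bundle_after_link_failures(2, 1, poa_min_links=2)))  # False
print(is_active_active(dhd_bundle_after_link_failures(2, 1, poa_min_links=1)))  # True
```

With the threshold below L, a single link failure leaves the bundle split across both PoAs, which is the unsupported active/active scenario.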

Note: DHD control does not use the mLACP hot-standby state on the standby PoA, which results in higher failover times than the other variants.

DHD control eliminates the split brain problem on the attachment circuit side by limiting the DHD's attempts to bundle all the links.

PoA Control

In PoA control, the PoA is configured to limit the maximum number of links per bundle to be equal to the number of links (L) going to the PoA. The DHD is configured with that parameter set to some value greater than L. Thus, the selection of the active/standby links becomes the responsibility of the PoA.

Shared Control (PoA and DHD)

In shared control, both the DHD and the PoA are configured to limit the maximum number of links per bundle to L--the number of links going to the PoA. In this configuration, each device independently selects the active/standby link. Shared control is advantageous in that it limits the split-brain problem in the same manner as DHD control, and shared control is not susceptible to the active/active tendencies that are prevalent in DHD control. A disadvantage of shared control is that the failover time is determined by both the DHD and the PoA, each changing the standby links to SELECTED and waiting for each of the WAIT_WHILE_TIMERs to expire before moving the links to IN_SYNC. The independent determination of failover time and change of link states means that both the DHD and PoAs need to support the LACP fast-switchover feature in order to provide a failover time of less than one second.
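The three variants differ only in which side limits the bundle to L links. A small Python sketch, with hypothetical names, that classifies a configuration from the two max-bundle settings:

```python
def control_variant(dhd_max_bundle, poa_max_bundle, links_per_poa):
    """Classify the operational variant from the configured link limits.

    Returns None when the combination matches none of the three variants
    described in the text.
    """
    limit = links_per_poa  # L, the number of links going to a PoA
    if dhd_max_bundle == limit and poa_max_bundle > limit:
        return "DHD control"
    if poa_max_bundle == limit and dhd_max_bundle > limit:
        return "PoA control"
    if dhd_max_bundle == limit and poa_max_bundle == limit:
        return "shared control"
    return None


print(control_variant(dhd_max_bundle=1, poa_max_bundle=2, links_per_poa=1))  # DHD control
print(control_variant(dhd_max_bundle=4, poa_max_bundle=2, links_per_poa=2))  # PoA control
print(control_variant(dhd_max_bundle=2, poa_max_bundle=2, links_per_poa=2))  # shared control
```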

mLACP Failover

mLACP forces a PoA failover to the standby PoA when one of the following failures occurs:

- Failure of the DHD uplink port, Ethernet link, or downlink port on the active PoA--A policy failover is triggered via a configured failover policy and is considered a forced failover. In Cisco IOS Release 12.2(33)SRE, the only option is the configured minimum bundle threshold. When the number of active and SELECTED links to the active PoA goes below the configured minimum threshold, mLACP forces a failover to the standby PoA's member links. This minimum threshold is configured using the port-channel min-links command in interface configuration mode. The PoAs determine the failover independent of the operational control variant in use.

- Failure of the active PoA--This failure is detected by the standby PoA. mLACP automatically fails over to standby because mLACP on the standby PoA is notified of failure via ICRM and brings up its local member links. In the DHD-controlled variant, this failure looks the same as a total member link failure, and the DHD activates the standby links.

- Failure of the active PoA uplinks--mLACP is notified by ICRM of PE isolation and relinquishes its active member links. This failure is a "forced failover" and is determined by the PoAs independent of the operational control variant in use.

- Dynamic Port Priority

- Revertive and Nonrevertive Modes

- Brute Force Shutdown

- Peer Monitoring with Interchassis Redundancy Manager

- MAC Flushing Mechanisms
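The failover triggers listed under mLACP Failover can be summarized in a short Python sketch. This is a simplified decision model under stated assumptions, not the mLACP state machine: the function names are hypothetical, and an empty list of monitored core-facing interfaces is assumed to mean that PE isolation is not being tracked.

```python
def active_poa_must_relinquish(selected_links, min_links, core_interfaces_up):
    """Decide whether the active PoA should give up its active role.

    selected_links: number of active and SELECTED member links to this PoA.
    min_links: the configured minimum bundle threshold.
    core_interfaces_up: one boolean per monitored core-facing interface.
    """
    below_threshold = selected_links < min_links
    # PE isolation: every monitored core-facing interface is down.
    pe_isolated = len(core_interfaces_up) > 0 and not any(core_interfaces_up)
    return below_threshold or pe_isolated


def standby_poa_must_take_over(peer_reported_down):
    """The standby PoA brings up its links when the active PoA is reported down."""
    return bool(peer_reported_down)


print(active_poa_must_relinquish(2, 2, [True, False]))   # prints False
print(active_poa_must_relinquish(1, 2, [True, True]))    # prints True
print(active_poa_must_relinquish(2, 2, [False, False]))  # prints True
```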

Dynamic Port Priority

The default failover mechanism uses dynamic port priority changes on the local member links to force the LACP selection logic to move the required standby link(s) to the SELECTED and Collecting_Distributing state. This state change occurs when the LACP actor port priority values for all affected member links on the currently active PoA are changed to a higher numeric value than the standby PoA's port priority (which gives the standby PoA ports a higher claim to bundle links). Changing the actor port priority triggers the transmission of an mLACP Port Config Type-Length-Value (TLV) message to all peers in the redundancy group. These messages also serve as notification to the standby PoA(s) that the currently active PoA is attempting to relinquish its role. The LACP then transitions the standby link(s) to the SELECTED state and moves all the currently active links to STANDBY.

Dynamic port priority changes are not automatically written back to the running configuration or to the NVRAM configuration. If you want the current priorities to be used when the system reloads, the mlacp lag-priority command must be used and the configuration must be saved.
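The priority manipulation described in this section can be modeled with the Python sketch below. It tracks one LAG priority per PoA, treats the numerically lowest value as the active side, and keeps configured and operational values separate. All names and values are illustrative assumptions.

```python
MAX_PRIORITY = 0xFFFF  # LACP port priority is a 2-byte field


def active_poa(lag_priority):
    """The PoA whose links have the numerically lowest priority is active."""
    return min(lag_priority, key=lag_priority.get)


def relinquish(lag_priority, poa):
    """Return the operational priorities after `poa` gives up the active role.

    The relinquishing PoA moves to a numerically higher value than every
    other PoA, which gives the standby links the stronger claim.
    """
    others = [value for name, value in lag_priority.items() if name != poa]
    if not others:
        raise ValueError("a redundancy group needs at least one peer PoA")
    new_value = max(others) + 1
    if new_value > MAX_PRIORITY:
        raise ValueError("no numerically higher priority is available")
    updated = dict(lag_priority)
    updated[poa] = max(updated[poa], new_value)
    return updated


configured = {"PoA1": 100, "PoA2": 200}
operational = relinquish(configured, "PoA1")

print(active_poa(configured))   # prints PoA1
print(active_poa(operational))  # prints PoA2
print(configured["PoA1"])       # prints 100 (configured value is unchanged)
```

Returning a new dictionary instead of modifying the input mirrors the point above that operational changes are not written back to the configuration.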

Revertive and Nonrevertive Modes

Dynamic port priority functionality is used by the mLACP feature to provide both revertive mode and nonrevertive mode. The default operation is revertive, which is the default behavior in single chassis LACP. Nonrevertive mode can be enabled on a per port-channel basis by using the lacp failover non-revertive command in interface configuration mode. In Cisco IOS Release 12.2(33)SRE this command is supported only for mLACP.

Nonrevertive mode is used to limit failover and, therefore, possible traffic loss. Dynamic port priority changes are utilized to ensure that the newly activated PoA remains active after the failed PoA recovers.

Revertive mode operation forces the configured primary PoA to return to active state after it recovers from a failure. Dynamic port priority changes are utilized when necessary to allow the recovering PoA to resume its active role.

Brute Force Shutdown

A brute-force shutdown is a forced failover mechanism to bring down the active physical member link interface(s) for the given LAG on the PoA that is surrendering its active status. This mechanism does not depend on the DHD's ability to manage dynamic port priority changes and compensates for deficiencies in the DHD's LACP implementation.

The brute-force shutdown changes the status of each member link to ADMIN_DOWN to force the transition of the standby links to the active state. Note that this process eliminates the ability of the local LACP implementation to monitor the link state.

The brute-force shutdown operates in revertive mode, so dynamic port priorities cannot be used to control active selection. The brute-force approach is configured by the lacp failover brute-force command in interface configuration mode. This command is not allowed in conjunction with a nonrevertive configuration.

Peer Monitoring with Interchassis Redundancy Manager

There are two ways in which a peer can be monitored with Interchassis Redundancy Manager (ICRM):

- Routewatch (RW)--This method is the default.

- Bidirectional Forwarding Detection (BFD)--You must configure the redundancy group with the monitor peer bfd command.

Note: For stateful switchover (SSO) deployments (with redundant support in the chassis), BFD monitoring and a static route for the ICCP connection are required to prevent "split brain" after an SSO failover. Routewatch is not compatible with SSO for health monitoring.

For each redundancy group, for each peer (member IP), a monitoring adjacency is created. If there are two peers with the same IP address, the adjacency is shared regardless of the monitoring mode. For example, if redundancy groups 1 and 2 are peered with member IP 10.10.10.10, there is only one adjacency to 10.10.10.10, which is shared in both redundancy groups. Furthermore, redundancy group 1 can use BFD monitoring while redundancy group 2 is using RW.

Note: BFD is completely dependent on RW: there must be a route to the peer for ICRM to initiate BFD monitoring. Because BFD implies RW, the status of the adjacency may sometimes seem misleading even though it accurately represents the state. Also, if the route to the peer PoA is not through the directly connected (back-to-back) link between the systems, BFD can give misleading results.

An example of output from the show redundancy interchassis command follows:

Router# show redundancy interchassis

Redundancy Group 1 (0x1)

Applications connected: mLACP

Monitor mode: Route-watch

member ip: 201.0.0.1 'mlacp-201', CONNECTED

Route-watch for 201.0.0.1 is UP

mLACP state: CONNECTED

ICRM fast-failure detection neighbor table

IP Address Status Type Next-hop IP Interface

========== ====== ==== =========== =========

201.0.0.1 UP RW

To interpret the adjacency status displayed by the show redundancy interchassis command, refer to the table below.

Table 1. Status Information from the show redundancy interchassis command

| Adjacency Type | Adjacency Status | Meaning |
|---|---|---|
| RW | DOWN | RW or BFD is configured, but there is no route for the given IP address. |
| RW | UP | RW or BFD is configured. RW is up, meaning there is a valid route to the peer. If BFD is configured and the adjacency status is UP, BFD is probably not configured on the interface of the route's adjacency. |
| BFD | DOWN | BFD is configured. A route exists and the route's adjacency is to an interface that has BFD enabled. BFD is started but the peer is down. The DOWN status can be because the peer is not present or BFD is not configured on the peer's interface. |
| BFD | UP | BFD is configured and operational. |

Note: If the adjacency type is "BFD," RW is UP regardless of the BFD status.
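When the neighbor table is collected by a script, the status table can be encoded as a simple lookup. The following Python sketch is an assumption-laden convenience, not a Cisco tool: the dictionary, the function names, and the shortened wording of each meaning are hypothetical, and only the four type and status combinations from Table 1 are covered.

```python
# (adjacency type, adjacency status) -> condensed meaning from Table 1.
ADJACENCY_MEANING = {
    ("RW", "DOWN"): "RW or BFD is configured, but there is no route to the peer.",
    ("RW", "UP"): "A valid route to the peer exists; if BFD is configured, it is "
                  "probably not enabled on the interface of the route's adjacency.",
    ("BFD", "DOWN"): "BFD is started but the peer is down (peer absent, or BFD "
                     "not configured on the peer's interface).",
    ("BFD", "UP"): "BFD is configured and operational.",
}


def explain_adjacency(adj_type, status):
    """Return the Table 1 meaning for one row of the neighbor table."""
    return ADJACENCY_MEANING[(adj_type.upper(), status.upper())]


def routewatch_is_up(adj_type, status):
    """RW is UP whenever the type is BFD, regardless of the BFD status."""
    if adj_type.upper() == "BFD":
        return True
    return status.upper() == "UP"


print(explain_adjacency("RW", "UP"))
print(routewatch_is_up("BFD", "DOWN"))  # True
print(routewatch_is_up("RW", "DOWN"))   # False
```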

MAC Flushing Mechanisms

When mLACP is used to provide multichassis redundancy in multipoint bridged services (for example, VPLS), there must be a MAC flushing notification mechanism in order to prevent potential traffic blackholing.

At the failover from a primary PoA to a secondary PoA, a service experiences traffic blackholing if the DHD in question remains inactive while other remote devices in the network attempt to send traffic to that DHD. Remote bridges in the network have stale MAC entries pointing to the failed PoA and direct traffic destined to the DHD to the failed PoA, where the traffic is dropped. This blackholing continues until the remote devices age out their stale MAC address table entries (which typically takes five minutes). To prevent this anomaly, the newly active PoA, which has taken control of the service, transmits a MAC flush notification message to the remote devices in the network to flush their stale MAC address entries for the service in question.
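The blackholing window and the effect of a flush can be illustrated with a toy model of one remote bridge. The Python below is a sketch under simplifying assumptions (a single bridge, a hypothetical service name and MAC address, and no aging timer); it is not how any particular platform implements its MAC table.

```python
class RemoteBridge:
    """Toy MAC table on a remote bridge: (service, MAC) -> PoA it points to."""

    def __init__(self):
        self.mac_table = {}

    def learn(self, service, mac, poa):
        self.mac_table[(service, mac)] = poa

    def forward(self, service, mac, failed_poas):
        """Return what happens to a frame sent toward the given MAC."""
        poa = self.mac_table.get((service, mac))
        if poa is None:
            return "flooded"    # unknown destination: reaches the new PoA
        if poa in failed_poas:
            return "dropped"    # stale entry: traffic is blackholed
        return "delivered"

    def flush(self, service):
        # Reaction to a MAC flush notification for one service.
        self.mac_table = {
            key: poa for key, poa in self.mac_table.items() if key[0] != service
        }


bridge = RemoteBridge()
bridge.learn("svc-100", "00aa.bbcc.0001", "PoA1")

before = bridge.forward("svc-100", "00aa.bbcc.0001", failed_poas={"PoA1"})
bridge.flush("svc-100")  # the newly active PoA sent a flush notification
after = bridge.forward("svc-100", "00aa.bbcc.0001", failed_poas={"PoA1"})
print(before, after)  # dropped flooded
```

Without the flush call, the frame keeps being dropped until the entry ages out, which is the interval the notification is meant to eliminate.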

The exact format of the MAC flushing message depends on the nature of the network transport: native 802.1Q/802.1ad Ethernet, native 802.1ah Ethernet, VPLS, or provider backbone bridge (PBB) over VPLS. Furthermore, in the context of 802.1ah, it is important to recognize the difference between mechanisms used for customer-MAC (C-MAC) address flushing versus backbone-MAC (B-MAC) address flushing.

The details of the various mechanisms are discussed in the following sections.

Multiple I-SID Registration Protocol

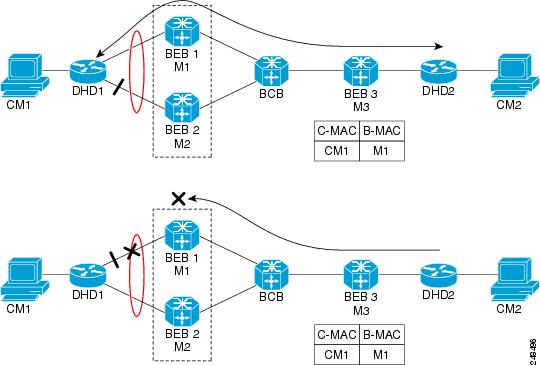

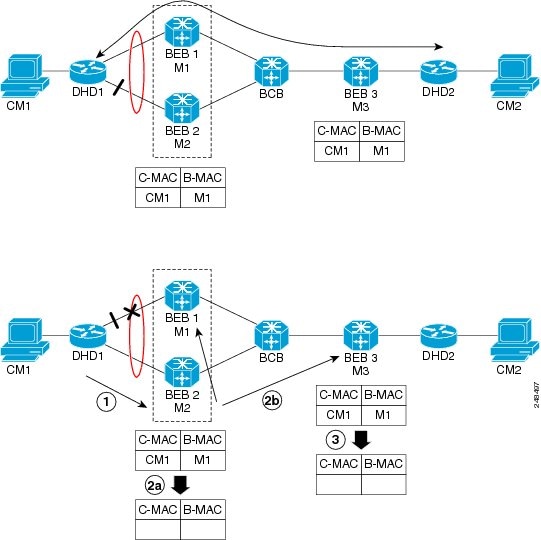

Multiple I-SID Registration Protocol (MIRP) is enabled by default on 802.1ah service instances. The use of MIRP in 802.1ah networks is shown in the figure below.

Device DHD1 is dual-homed to two 802.1ah backbone edge bridges (BEB1 and BEB2). Assume that initially the primary path is through BEB1. In this configuration BEB3 learns that the host behind DHD1 (with MAC address CM1) is reachable via the destination B-MAC M1. If the link between DHD1 and BEB1 fails and the host behind DHD1 remains inactive, the MAC cache tables on BEB3 still refer to the BEB1 MAC address even though the new path is now via BEB2 with B-MAC address M2. Any bridged traffic sent from the host behind DHD2 to the host behind DHD1 is wrongly encapsulated with B-MAC M1 and sent over the MAC tunnel to BEB1, where the traffic is blackholed.

To circumvent the traffic blackholing problem when the link between DHD1 and BEB1 fails, BEB2 performs two tasks:

- Flushes its own MAC address table for the service or services in question.

- Transmits an MIRP message on its uplink to signal the far end BEB (BEB3) to flush its MAC address table. Note that the MIRP message is transparent to the backbone core bridges (BCBs). The MIRP message is processed only on a BEB, because BCBs learn and forward based solely on B-MAC addresses and are transparent to C-MAC addresses.

Note: MIRP triggers C-MAC address flushing for both native 802.1ah and PBB over VPLS.
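The two tasks performed by BEB2 can be sketched with a per-I-SID C-MAC table. This Python model is illustrative only: the class, the function, and the I-SID value 100 are assumptions, and the MIRP message itself is reduced to a direct method call on the far end BEB.

```python
class BackboneEdgeBridge:
    """Toy BEB holding (I-SID, C-MAC) -> destination B-MAC bindings."""

    def __init__(self, name):
        self.name = name
        self.cmac_table = {}

    def learn(self, isid, c_mac, b_mac):
        self.cmac_table[(isid, c_mac)] = b_mac

    def destination_bmac(self, isid, c_mac):
        # None means the C-MAC is unknown, so the frame is flooded in the I-SID.
        return self.cmac_table.get((isid, c_mac))

    def flush_isid(self, isid):
        self.cmac_table = {
            key: b_mac for key, b_mac in self.cmac_table.items() if key[0] != isid
        }


def take_over_service(new_active, far_end, isid):
    new_active.flush_isid(isid)  # task 1: flush the local table for the service
    far_end.flush_isid(isid)     # task 2: stands in for the MIRP message


beb2 = BackboneEdgeBridge("BEB2")
beb3 = BackboneEdgeBridge("BEB3")
beb3.learn(100, "CM1", "M1")     # BEB3 initially reaches CM1 through BEB1

take_over_service(beb2, beb3, isid=100)
after_flush = beb3.destination_bmac(100, "CM1")

beb3.learn(100, "CM1", "M2")     # relearned through BEB2 after the flush
after_relearn = beb3.destination_bmac(100, "CM1")
print(after_flush, after_relearn)  # None M2
```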

The figure below shows the operation of the MIRP.

The MIRP has not been defined in IEEE but is expected to be based on the IEEE 802.1ak Multiple Registration Protocol (MRP). MRP maintains a complex finite state machine (FSM) for generic attribute registration. In the case of MIRP, the attribute is an I-SID. As such, MIRP provides a mechanism for BEBs to build and prune a per I-SID multicast tree. The C-MAC flushing notification capability of MIRP is a special case of attribute registration in which the device indicates that an MIRP declaration is "new," meaning that this notification is the first time a BEB is declaring interest in a particular I-SID.

LDP MAC Address Withdraw

When the mLACP feature is used for PE redundancy in traditional VPLS (that is, not PBB over VPLS), the MAC flushing mechanism is based on the LDP MAC Address Withdraw message as defined in RFC 4762.

The required functional behavior is as follows: Upon a failover from the primary PoA to the standby PoA, the standby PoA flushes its local MAC address table for the affected services and generates the LDP MAC Address Withdraw messages to notify the remote PEs to flush their own MAC address tables. One message is generated for each pseudowire in the affected virtual forwarding instances (VFIs).
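The one-message-per-pseudowire rule can be expressed compactly. The Python sketch below is a hypothetical model (the function name and data layout are assumptions); the VFI name and neighbor addresses are borrowed from the VPLS sample configuration in this document.

```python
def fail_over_to_standby(local_mac_table, vfis, affected_vfis):
    """Flush local entries and list the MAC Address Withdraw messages to send.

    local_mac_table: dict mapping (vfi, mac) -> forwarding entry
    vfis:            dict mapping VFI name -> list of pseudowire peer addresses
    """
    # Flush the local MAC address table for the affected services only.
    for key in [k for k in local_mac_table if k[0] in affected_vfis]:
        del local_mac_table[key]
    # One withdraw message per pseudowire in each affected VFI.
    return [(vfi, peer) for vfi in affected_vfis for peer in vfis[vfi]]


vfis = {"VPLS_200": ["210.0.0.1", "211.0.0.1", "201.0.0.1"]}
mac_table = {("VPLS_200", "00aa.bbcc.0001"): "Po1", ("OTHER", "00aa.bbcc.0002"): "Po2"}

messages = fail_over_to_standby(mac_table, vfis, ["VPLS_200"])
print(len(messages))  # 3
print(mac_table)      # only the entry for the unaffected service remains
```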

How to Configure mLACP

- Configuring Interchassis Group and Basic mLACP Commands

- Configuring the mLACP Interchassis Group and Other Port-Channel Commands

- Configuring Redundancy for VPWS

- Configuring Redundancy for VPLS

- Configuring Hierarchical VPLS

- Troubleshooting mLACP

Configuring Interchassis Group and Basic mLACP Commands

Perform this task to set up the communication between multiple PoAs and to configure them in the same group.

DETAILED STEPS

Configuring the mLACP Interchassis Group and Other Port-Channel Commands

Perform this task to set up mLACP attributes specific to a port channel. The mlacp interchassis group command links the port-channel interface to the interchassis group that was created in the previous task, Configuring Interchassis Group and Basic mLACP Commands.

DETAILED STEPS

Configuring Redundancy for VPWS

Perform this task to provide Layer 2 VPN service redundancy for VPWS.

DETAILED STEPS

Configuring Redundancy for VPLS

Coupled and Decoupled Modes for VPLS

VPLS can be configured in either coupled mode or decoupled mode. In coupled mode, when at least one attachment circuit in the VFI changes state to active, all pseudowires in the VFI advertise active. When all attachment circuits in the VFI change state to standby, all pseudowires in the VFI advertise standby. See the figure below.

In VPLS decoupled mode, all pseudowires in the VFI are always active and the attachment circuit state is independent of the pseudowire state. This mode provides faster switchover time when a platform does not support pseudowire status functionality, but extra flooding and multicast traffic will be dropped on the PE with standby attachment circuits. See the figure below.
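The difference between the two modes comes down to whether the advertised pseudowire state follows the attachment circuits. The following Python function is a minimal sketch of that rule; the function name and the string values are assumptions chosen for illustration.

```python
def advertised_pseudowire_state(mode, attachment_circuit_states):
    """Return the state that every pseudowire in the VFI advertises."""
    if mode == "decoupled":
        # Pseudowires are always active, independent of the attachment circuits.
        return "active"
    if mode == "coupled":
        # Active as soon as one attachment circuit is active; standby only
        # when all attachment circuits are standby.
        if any(state == "active" for state in attachment_circuit_states):
            return "active"
        return "standby"
    raise ValueError(f"unknown mode: {mode}")


print(advertised_pseudowire_state("coupled", ["standby", "active"]))     # active
print(advertised_pseudowire_state("coupled", ["standby", "standby"]))    # standby
print(advertised_pseudowire_state("decoupled", ["standby", "standby"]))  # active
```

The last call corresponds to the PE with standby attachment circuits in decoupled mode: its pseudowires stay active, so it still receives flooded and multicast traffic that it then has to drop.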

Steps for Configuring Redundancy for VPLS

Perform the following task to configure redundancy for VPLS.

DETAILED STEPS

Configuring Hierarchical VPLS

Perform this task to configure Hierarchical VPLS (H-VPLS).

DETAILED STEPS

Troubleshooting mLACP

- Debugging mLACP

- Debugging mLACP on an Attachment Circuit or EVC

- Debugging mLACP on AToM Pseudowires

- Debugging Cross-Connect Redundancy Manager and Session Setup

- Debugging VFI

- Debugging the Segment Switching Manager (Switching Setup)

- Debugging High Availability Features in mLACP

Debugging mLACP

Use these debug commands for general mLACP troubleshooting.

DETAILED STEPS

Debugging mLACP on an Attachment Circuit or EVC

Use these debug commands for troubleshooting mLACP on an attachment circuit or on an EVC.

DETAILED STEPS

Debugging mLACP on AToM Pseudowires

Use the debug mpls l2transport vc command for troubleshooting mLACP on AToM pseudowires.

DETAILED STEPS

Debugging Cross-Connect Redundancy Manager and Session Setup

Use the following debug commands to troubleshoot cross-connect, redundancy manager, and session setup.

DETAILED STEPS

Debugging VFI

Use the debug vfi command for troubleshooting a VFI.

DETAILED STEPS

Debugging the Segment Switching Manager (Switching Setup)

Use the debug ssm command for troubleshooting a segment switching manager (SSM).

DETAILED STEPS

Debugging High Availability Features in mLACP

Use the following debug commands for troubleshooting High Availability features in mLACP.

DETAILED STEPS

Configuration Examples for mLACP

- Example Configuring VPWS

- Example Configuring VPLS

- Example Configuring H-VPLS

- Example Verifying VPWS on an Active PoA

- Example Verifying VPWS on a Standby PoA

- Example Verifying VPLS on an Active PoA

- Example Verifying VPLS on a Standby PoA

Example Configuring VPWS

Two sample configurations for VPWS follow: one example for an active PoA and the other for a standby PoA.

The figure below shows a sample topology for a VPWS configuration.

Active PoA for VPWS

The following VPWS sample configuration is for an active PoA:

mpls ldp graceful-restart
mpls label protocol ldp
!
redundancy
 mode sso
 interchassis group 1
  member ip 201.0.0.1
  backbone interface Ethernet0/2
  backbone interface Ethernet1/2
  backbone interface Ethernet1/3
  monitor peer bfd
  mlacp node-id 0
!
pseudowire-class mpls-dhd
 encapsulation mpls
 status peer topology dual-homed
!
interface Loopback0
 ip address 200.0.0.1 255.255.255.255
!
interface Port-channel1
 no ip address
 lacp fast-switchover
 lacp max-bundle 1
 mlacp interchassis group 1
 hold-queue 300 in
 service instance 1 ethernet
  encapsulation dot1q 100
  xconnect 210.0.0.1 10 pw-class mpls-dhd
   backup peer 211.0.0.1 10 pw-class mpls-dhd
!
interface Ethernet0/0
 no ip address
 channel-group 1 mode active
!
interface Ethernet1/3
 ip address 10.0.0.200 255.255.255.0
 mpls ip
 bfd interval 50 min_rx 150 multiplier 3

Standby PoA for VPWS

The following VPWS sample configuration is for a standby PoA:

mpls ldp graceful-restart
mpls label protocol ldp
!
redundancy
 mode sso
 interchassis group 1
  member ip 200.0.0.1
  backbone interface Ethernet0/2
  backbone interface Ethernet1/2
  backbone interface Ethernet1/3
  monitor peer bfd
  mlacp node-id 1
!
pseudowire-class mpls-dhd
 encapsulation mpls
 status peer topology dual-homed
!
interface Loopback0
 ip address 201.0.0.1 255.255.255.255
!
interface Port-channel1
 no ip address
 lacp fast-switchover
 lacp max-bundle 1
 mlacp lag-priority 40000
 mlacp interchassis group 1
 hold-queue 300 in
 service instance 1 ethernet
  encapsulation dot1q 100
  xconnect 210.0.0.1 10 pw-class mpls-dhd
   backup peer 211.0.0.1 10 pw-class mpls-dhd
!
interface Ethernet1/0
 no ip address
 channel-group 1 mode active
!
interface Ethernet1/3
 ip address 10.0.0.201 255.255.255.0
 mpls ip
 bfd interval 50 min_rx 150 multiplier 3

Example Configuring VPLS

Two sample configurations for VPLS follow: one example for an active PoA and the other for a standby PoA.

The figure below shows a sample topology for a VPLS configuration.

Active PoA for VPLS

The following VPLS sample configuration is for an active PoA:

mpls ldp graceful-restart
mpls label protocol ldp
!
redundancy
 mode sso
 interchassis group 1
  member ip 201.0.0.1
  backbone interface Ethernet0/2
  monitor peer bfd
  mlacp node-id 0
!
l2 vfi VPLS_200 manual
 vpn id 10
 neighbor 210.0.0.1 encapsulation mpls
 neighbor 211.0.0.1 encapsulation mpls
 neighbor 201.0.0.1 encapsulation mpls
!
interface Loopback0
 ip address 200.0.0.1 255.255.255.255
!
interface Port-channel1
 no ip address
 lacp fast-switchover
 lacp max-bundle 1
 mlacp interchassis group 1
 service instance 1 ethernet
  encapsulation dot1q 100
  bridge-domain 200
!
interface Ethernet0/0
 no ip address
 channel-group 1 mode active
!
interface Ethernet1/3
 ip address 10.0.0.200 255.255.255.0
 mpls ip
 bfd interval 50 min_rx 150 multiplier 3
!
interface Vlan200
 no ip address
 xconnect vfi VPLS_200

Standby PoA for VPLS

The following VPLS sample configuration is for a standby PoA:

mpls ldp graceful-restart
mpls label protocol ldp
!
redundancy
 interchassis group 1
  member ip 200.0.0.1
  backbone interface Ethernet0/2
  monitor peer bfd
  mlacp node-id 1
!
l2 vfi VPLS_200 manual
 vpn id 10
 neighbor 210.0.0.1 encapsulation mpls
 neighbor 211.0.0.1 encapsulation mpls
 neighbor 200.0.0.1 encapsulation mpls
!
interface Loopback0
 ip address 201.0.0.1 255.255.255.255
!
interface Port-channel1
 no ip address
 lacp fast-switchover
 lacp max-bundle 1
 mlacp lag-priority 40000
 mlacp interchassis group 1
 service instance 1 ethernet
  encapsulation dot1q 100
  bridge-domain 200
!
interface Ethernet1/0
 no ip address
 channel-group 1 mode active
!
interface Ethernet1/3
 ip address 10.0.0.201 255.255.255.0
 mpls ip
 bfd interval 50 min_rx 150 multiplier 3
!
interface Vlan200
 no ip address
 xconnect vfi VPLS_200

Example Configuring H-VPLS

Two sample configurations for H-VPLS follow: one example for an active PoA and the other for a standby PoA.

The figure below shows a sample topology for a H-VPLS configuration.

Active PoA for H-VPLS

The following H-VPLS sample configuration is for an active PoA:

mpls ldp graceful-restart
mpls label protocol ldp
!
redundancy
 mode sso
 interchassis group 1
  member ip 201.0.0.1
  backbone interface Ethernet0/2
  backbone interface Ethernet1/2
  backbone interface Ethernet1/3
  monitor peer bfd
  mlacp node-id 0
!
pseudowire-class mpls-dhd
 encapsulation mpls
 status peer topology dual-homed
!
interface Loopback0
 ip address 200.0.0.1 255.255.255.255
!
interface Port-channel1
 no ip address
 lacp fast-switchover
 lacp max-bundle 1
 mlacp interchassis group 1
 hold-queue 300 in
 service instance 1 ethernet
  encapsulation dot1q 100
  xconnect 210.0.0.1 10 pw-class mpls-dhd
   backup peer 211.0.0.1 10 pw-class mpls-dhd
!
interface Ethernet0/0
 no ip address
 channel-group 1 mode active
!
interface Ethernet1/3
 ip address 10.0.0.200 255.255.255.0
 mpls ip
 bfd interval 50 min_rx 150 multiplier 3

Standby PoA for H-VPLS

The following H-VPLS sample configuration is for a standby PoA:

mpls ldp graceful-restart
mpls label protocol ldp
!
redundancy
 mode sso
 interchassis group 1
  member ip 200.0.0.1
  backbone interface Ethernet0/2
  backbone interface Ethernet1/2
  backbone interface Ethernet1/3
  monitor peer bfd
  mlacp node-id 1
!
pseudowire-class mpls-dhd
 encapsulation mpls
 status peer topology dual-homed
!
interface Loopback0
 ip address 201.0.0.1 255.255.255.255
!
interface Port-channel1
 no ip address
 lacp fast-switchover
 lacp max-bundle 1
 mlacp lag-priority 40000
 mlacp interchassis group 1
 hold-queue 300 in
 service instance 1 ethernet
  encapsulation dot1q 100
  xconnect 210.0.0.1 10 pw-class mpls-dhd
   backup peer 211.0.0.1 10 pw-class mpls-dhd
!
interface Ethernet1/0
 no ip address
 channel-group 1 mode active
!
interface Ethernet1/3
 ip address 10.0.0.201 255.255.255.0
 mpls ip
 bfd interval 50 min_rx 150 multiplier 3

Example Verifying VPWS on an Active PoA

The following show commands can be used to display statistics and configuration parameters to verify the operation of the mLACP feature on an active PoA:

- show lacp multichassis group

- show lacp multichassis port-channel

- show mpls ldp iccp

- show mpls l2transport

- show etherchannel summary

- show etherchannel number port-channel

- show lacp internal

show lacp multichassis group

Use the show lacp multichassis group command to display the interchassis redundancy group value and the operational LACP parameters.

Router# show lacp multichassis group 100

Interchassis Redundancy Group 100

Operational LACP Parameters:

RG State: Synchronized

System-Id: 200.000a.f331.2680

ICCP Version: 0

Backbone Uplink Status: Connected

Local Configuration:

Node-id: 0

System-Id: 200.000a.f331.2680

Peer Information:

State: Up

Node-id: 7

System-Id: 2000.0014.6a8b.c680

ICCP Version: 0

State Flags: Active - A

Standby - S

Down - D

AdminDown - AD

Standby Reverting - SR

Unknown - U

mLACP Channel-groups

Channel State Priority Active Links Inactive Links

Group Local/Peer Local/Peer Local/Peer Local/Peer

1 A/S 28000/32768 4/4 0/0
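When this output is checked by a script, the channel-group row can be split into local and peer values. The Python sketch below is an unofficial helper written for this example (the function names are hypothetical); it assumes the row has exactly the five whitespace-separated columns shown above and uses the State Flags legend from the output.

```python
STATE_FLAGS = {
    "A": "Active",
    "S": "Standby",
    "D": "Down",
    "AD": "AdminDown",
    "SR": "Standby Reverting",
    "U": "Unknown",
}


def _pair(text, convert):
    """Split a 'local/peer' column and convert both halves."""
    local, peer = text.split("/")
    return convert(local), convert(peer)


def parse_channel_group_row(row):
    """Parse one row of the mLACP Channel-groups table into a dictionary."""
    group, state, priority, active, inactive = row.split()
    return {
        "group": int(group),
        "state": _pair(state, STATE_FLAGS.__getitem__),
        "priority": _pair(priority, int),
        "active_links": _pair(active, int),
        "inactive_links": _pair(inactive, int),
    }


row = parse_channel_group_row("1 A/S 28000/32768 4/4 0/0")
print(row["state"])     # ('Active', 'Standby')
print(row["priority"])  # (28000, 32768)
```

In this row the local PoA is active with priority 28000 and the peer is standby with priority 32768; each side reports four links in the Active Links column and none in the Inactive Links column.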

show lacp multichassis port-channel

Use the show lacp multichassis port-channel command to display the interface port-channel value, channel group, LAG state, priority, inactive links, peer configuration, and standby links.

Router# show lacp multichassis port-channel1

Interface Port-channel1

Local Configuration:

Address: 000a.f331.2680

Channel Group: 1

State: Active

LAG State: Up

Priority: 28000

Inactive Links: 0

Total Active Links: 4

Bundled: 4

Selected: 4

Standby: 0

Unselected: 0

Peer Configuration:

Interface: Port-channel1

Address: 0014.6a8b.c680

Channel Group: 1

State: Standby

LAG State: Up

Priority: 32768

Inactive Links: 0

Total Active Links: 4

Bundled: 0

Selected: 0

Standby: 4

Unselected: 0

show mpls ldp iccp

Use the show mpls ldp iccp command to display the LDP session and ICCP state information.

Router# show mpls ldp iccp

ICPM RGID Table

iccp:

rg_id: 100, peer addr: 172.3.3.3

ldp_session 0x3, client_id 0

iccp state: ICPM_ICCP_CONNECTED

app type: MLACP

app state: ICPM_APP_CONNECTED, ptcl ver: 0

ICPM RGID Table total ICCP sessions: 1

ICPM LDP Session Table

iccp:

rg_id: 100, peer addr: 172.3.3.3

ldp_session 0x3, client_id 0

iccp state: ICPM_ICCP_CONNECTED

app type: MLACP

app state: ICPM_APP_CONNECTED, ptcl ver: 0

ICPM LDP Session Table total ICCP sessions: 1

show mpls l2transport

Use the show mpls l2transport command to display the local interface and session details, destination address, and status.

Router# show mpls l2transport vc 2

Local intf Local circuit Dest address VC ID Status

------------- -------------------------- --------------- ---------- ----------

Po1 Eth VLAN 2 172.2.2.2 2 UP

Po1 Eth VLAN 2 172.4.4.4 2 STANDBY

show etherchannel summary

Use the show etherchannel summary command to display the status and identity of the mLACP member links.

Router# show etherchannel summary

Flags: D - down P - bundled in port-channel

I - stand-alone s - suspended

H - Hot-standby (LACP only)

R - Layer3 S - Layer2

U - in use f - failed to allocate aggregator

M - not in use, minimum links not met

u - unsuitable for bundling

w - waiting to be aggregated

d - default port

Number of channel-groups in use: 2

Number of aggregators: 2

Group Port-channel Protocol Ports

------+-------------+-----------+-----------------------------------------------

1 Po1(RU) LACP Gi2/9(P) Gi2/20(P) Gi2/31(P)

show etherchannel number port-channel

Use the show etherchannel number port-channel command to display the status and identity of the EtherChannel and port channel.

Router# show etherchannel 51 port-c

Port-channels in the group:

----------------------

Port-channel: Po51 (Primary Aggregator)

------------

Age of the Port-channel = 0d:02h:25m:23s

Logical slot/port = 14/11 Number of ports = 2

HotStandBy port = null

Passive port list = Gi9/15 Gi9/16

Port state = Port-channel L3-Ag Ag-Inuse

Protocol = LACP

Fast-switchover = enabled

Direct Load Swap = disabled

Ports in the Port-channel:

Index Load Port EC state No of bits

------+------+--------+------------------+-----------

0 55 Gi9/15 mLACP-stdby 4

1 AA Gi9/16 mLACP-stdby 4

Time since last port bundled: 0d:01h:03m:39s Gi9/16

Time since last port Un-bundled: 0d:01h:03m:40s Gi9/16

Last applied Hash Distribution Algorithm: Fixed

Channel-group Iedge Counts:

--------------------------:

Access ref count : 0

Iedge session count : 0

show lacp internal

Use the show lacp internal command to display the device, port, and member-link information.

Router# show lacp internal

Flags: S - Device is requesting Slow LACPDUs

F - Device is requesting Fast LACPDUs

A - Device is in Active mode P - Device is in Passive mode

Channel group 1

LACP port Admin Oper Port Port

Port Flags State Priority Key Key Number State

Gi2/9 SA bndl-act 28000 0x1 0x1 0x820A 0x3D

Gi2/20 SA bndl-act 28000 0x1 0x1 0x8215 0x3D

Gi2/31 SA bndl-act 28000 0x1 0x1 0x8220 0x3D

Gi2/40 SA bndl-act 28000 0x1 0x1 0x8229 0x3D

Peer (MLACP-PE3) mLACP member links

Gi3/11 FA hot-sby 32768 0x1 0x1 0xF30C 0x5

Gi3/21 FA hot-sby 32768 0x1 0x1 0xF316 0x5

Gi3/32 FA hot-sby 32768 0x1 0x1 0xF321 0x7

Gi3/2 FA hot-sby 32768 0x1 0x1 0xF303 0x7

Example Verifying VPWS on a Standby PoA

The following show commands can be used to display statistics and configuration parameters to verify the operation of the mLACP feature on a standby PoA:

- show lacp multichassis group

- show lacp multichassis port-channel

- show mpls ldp iccp

- show mpls l2transport

- show etherchannel summary

- show lacp internal

show lacp multichassis group

Use the show lacp multichassis group command to display the LACP parameters, local configuration, status of the backbone uplink, peer information, node ID, channel, state, priority, and active and inactive links.

Router# show lacp multichassis group 100

Interchassis Redundancy Group 100

Operational LACP Parameters:

RG State: Synchronized

System-Id: 200.000a.f331.2680

ICCP Version: 0

Backbone Uplink Status: Connected

Local Configuration:

Node-id: 7

System-Id: 2000.0014.6a8b.c680

Peer Information:

State: Up

Node-id: 0

System-Id: 200.000a.f331.2680

ICCP Version: 0

State Flags: Active - A

Standby - S

Down - D

AdminDown - AD

Standby Reverting - SR

Unknown - U

mLACP Channel-groups

Channel State Priority Active Links Inactive Links

Group Local/Peer Local/Peer Local/Peer Local/Peer

1 S/A 32768/28000 4/4 0/0

show lacp multichassis port-channel

Use the show lacp multichassis port-channel command to display the interface port-channel value, channel group, LAG state, priority, inactive links, peer configuration, and standby links.

Router# show lacp multichassis port-channel1

Interface Port-channel1

Local Configuration:

Address: 0014.6a8b.c680

Channel Group: 1

State: Standby

LAG State: Up

Priority: 32768

Inactive Links: 0

Total Active Links: 4

Bundled: 0

Selected: 0

Standby: 4

Unselected: 0

Peer Configuration:

Interface: Port-channel1

Address: 000a.f331.2680

Channel Group: 1

State: Active

LAG State: Up

Priority: 28000

Inactive Links: 0

Total Active Links: 4

Bundled: 4

Selected: 4

Standby: 0

Unselected: 0

show mpls ldp iccp

Use the show mpls ldp iccp command to display the LDP session and ICCP state information.

Router# show mpls ldp iccp

ICPM RGID Table

iccp:

rg_id: 100, peer addr: 172.1.1.1

ldp_session 0x2, client_id 0

iccp state: ICPM_ICCP_CONNECTED

app type: MLACP

app state: ICPM_APP_CONNECTED, ptcl ver: 0

ICPM RGID Table total ICCP sessions: 1

ICPM LDP Session Table

iccp:

rg_id: 100, peer addr: 172.1.1.1

ldp_session 0x2, client_id 0

iccp state: ICPM_ICCP_CONNECTED

app type: MLACP

app state: ICPM_APP_CONNECTED, ptcl ver: 0

ICPM LDP Session Table total ICCP sessions: 1

show mpls l2transport

Use the show mpls l2transport command to display the local interface and session details, destination address, and status.

Router# show mpls l2transport vc 2

Local intf Local circuit Dest address VC ID Status

------------- -------------------------- --------------- ---------- ----------

Po1 Eth VLAN 2 172.2.2.2 2 STANDBY

Po1 Eth VLAN 2 172.4.4.4 2 STANDBY

show etherchannel summary

Use the show etherchannel summary command to display the status and identity of the mLACP member links.

Router# show etherchannel summary

Flags: D - down P - bundled in port-channel

I - stand-alone s - suspended

H - Hot-standby (LACP only)

R - Layer3 S - Layer2

U - in use f - failed to allocate aggregator

M - not in use, minimum links not met

u - unsuitable for bundling

w - waiting to be aggregated

d - default port

Number of channel-groups in use: 2

Number of aggregators: 2

Group Port-channel Protocol Ports

------+-------------+-----------+-----------------------------------------------

1 Po1(RU) LACP Gi3/2(P) Gi3/11(P) Gi3/21(P)

Gi3/32(P)

show lacp internal

Use the show lacp internal command to display the device, port, and member-link information.

Router# show lacp 1 internal

Flags: S - Device is requesting Slow LACPDUs

F - Device is requesting Fast LACPDUs

A - Device is in Active mode P - Device is in Passive mode

Channel group 1

LACP port Admin Oper Port Port

Port Flags State Priority Key Key Number State

Gi3/2 FA bndl-sby 32768 0x1 0x1 0xF303 0x7

Gi3/11 FA bndl-sby 32768 0x1 0x1 0xF30C 0x5

Gi3/21 FA bndl-sby 32768 0x1 0x1 0xF316 0x5

Gi3/32 FA bndl-sby 32768 0x1 0x1 0xF321 0x7

Peer (MLACP-PE1) mLACP member links

Gi2/20 SA bndl 28000 0x1 0x1 0x8215 0x3D

Gi2/31 SA bndl 28000 0x1 0x1 0x8220 0x3D

Gi2/40 SA bndl 28000 0x1 0x1 0x8229 0x3D

Gi2/9 SA bndl 28000 0x1 0x1 0x820A 0x3D

Example Verifying VPLS on an Active PoA

The following show commands can be used to display statistics and configuration parameters to verify the operation of the mLACP feature on an active PoA:

- show lacp multichassis group

- show lacp multichassis port-channel

- show mpls ldp iccp

- show mpls l2transport

- show etherchannel summary

- show lacp internal

show lacp multichassis group

Use the show lacp multichassis group command to display the LACP parameters, local configuration, status of the backbone uplink, peer information, node ID, channel, state, priority, and active and inactive links.

Router# show lacp multichassis group 100

Interchassis Redundancy Group 100

Operational LACP Parameters:

RG State: Synchronized

System-Id: 200.000a.f331.2680

ICCP Version: 0

Backbone Uplink Status: Connected

Local Configuration:

Node-id: 0

System-Id: 200.000a.f331.2680

Peer Information:

State: Up

Node-id: 7

System-Id: 2000.0014.6a8b.c680

ICCP Version: 0

State Flags: Active - A

Standby - S

Down - D

AdminDown - AD

Standby Reverting - SR

Unknown - U

mLACP Channel-groups

Channel State Priority Active Links Inactive Links

Group Local/Peer Local/Peer Local/Peer Local/Peer

1 A/S 28000/32768 4/4 0/0

show lacp multichassis port-channel

Use the show lacp multichassis port-channel command to display the interface port-channel value, channel group, LAG state, priority, inactive links, peer configuration, and standby links.

Router# show lacp multichassis port-channel1

Interface Port-channel1

Local Configuration:

Address: 000a.f331.2680

Channel Group: 1

State: Active

LAG State: Up

Priority: 28000

Inactive Links: 0

Total Active Links: 4

Bundled: 4

Selected: 4

Standby: 0

Unselected: 0

Peer Configuration:

Interface: Port-channel1

Address: 0014.6a8b.c680

Channel Group: 1

State: Standby

LAG State: Up

Priority: 32768

Inactive Links: 0

Total Active Links: 4

Bundled: 0

Selected: 0

Standby: 4

Unselected: 0

show mpls ldp iccp

Use the show mpls ldp iccp command to display the LDP session and ICCP state information.

Router# show mpls ldp iccp

ICPM RGID Table

iccp:

rg_id: 100, peer addr: 172.3.3.3

ldp_session 0x3, client_id 0

iccp state: ICPM_ICCP_CONNECTED

app type: MLACP

app state: ICPM_APP_CONNECTED, ptcl ver: 0

ICPM RGID Table total ICCP sessions: 1

ICPM LDP Session Table

iccp:

rg_id: 100, peer addr: 172.3.3.3

ldp_session 0x3, client_id 0

iccp state: ICPM_ICCP_CONNECTED

app type: MLACP

app state: ICPM_APP_CONNECTED, ptcl ver: 0

ICPM LDP Session Table total ICCP sessions: 1

show mpls l2transport

Use the show mpls l2transport command to display the local interface and session details, destination address, and the status.

Router# show mpls l2transport vc 4000

Local intf Local circuit Dest address VC ID Status

------------- -------------------------- --------------- ---------- ----------

VFI VPLS VFI 172.2.2.2 4000 UP

VFI VPLS VFI 172.4.4.4 4000 UP

show etherchannel summary

Use the show etherchannel summary command to display the status and identity of the mLACP member links.

Router# show etherchannel summary

Flags: D - down P - bundled in port-channel

I - stand-alone s - suspended

H - Hot-standby (LACP only)

R - Layer3 S - Layer2

U - in use f - failed to allocate aggregator

M - not in use, minimum links not met

u - unsuitable for bundling

w - waiting to be aggregated

d - default port

Number of channel-groups in use: 2

Number of aggregators: 2

Group Port-channel Protocol Ports

------+-------------+-----------+-----------------------------------------------

1 Po1(RU) LACP Gi2/9(P) Gi2/20(P) Gi2/31(P)

Gi2/40(P)

show lacp internal

Use the show lacp internal command to display the device, port, and member-link information.

Router# show lacp internal

Flags: S - Device is requesting Slow LACPDUs

F - Device is requesting Fast LACPDUs

A - Device is in Active mode P - Device is in Passive mode

Channel group 1

LACP port Admin Oper Port Port

Port Flags State Priority Key Key Number State

Gi2/9 SA bndl-act 28000 0x1 0x1 0x820A 0x3D

Gi2/20 SA bndl-act 28000 0x1 0x1 0x8215 0x3D

Gi2/31 SA bndl-act 28000 0x1 0x1 0x8220 0x3D

Gi2/40 SA bndl-act 28000 0x1 0x1 0x8229 0x3D

Peer (MLACP-PE3) mLACP member links

Gi3/11 FA hot-sby 32768 0x1 0x1 0xF30C 0x5

Gi3/21 FA hot-sby 32768 0x1 0x1 0xF316 0x5

Gi3/32 FA hot-sby 32768 0x1 0x1 0xF321 0x7

Gi3/2 FA hot-sby 32768 0x1 0x1 0xF303 0x7

Example Verifying VPLS on a Standby PoA

The show commands in this section can be used to display statistics and configuration parameters to verify the operation of the mLACP feature:

- show lacp multichassis group

- show lacp multichassis port-channel

- show mpls ldp iccp

- show mpls l2transport

- show etherchannel summary

- show lacp internal

show lacp multichassis group

Use the show lacp multichassis group interchassis group number command to display the LACP parameters, local configuration, status of the backbone uplink, peer information, node ID, channel, state, priority, active, and inactive links.

Router# show lacp multichassis group 100

Interchassis Redundancy Group 100

Operational LACP Parameters:

RG State: Synchronized

System-Id: 200.000a.f331.2680

ICCP Version: 0

Backbone Uplink Status: Connected

Local Configuration:

Node-id: 7

System-Id: 2000.0014.6a8b.c680

Peer Information:

State: Up

Node-id: 0

System-Id: 200.000a.f331.2680

ICCP Version: 0

State Flags: Active - A

Standby - S

Down - D

AdminDown - AD

Standby Reverting - SR

Unknown - U

mLACP Channel-groups

Channel State Priority Active Links Inactive Links

Group Local/Peer Local/Peer Local/Peer Local/Peer

1 S/A 32768/28000 4/4 0/0

show lacp multichassis port-channel

Use the show lacp multichassis port-channel command to display the interface port-channel value, channel group, LAG state, priority, inactive links, peer configuration, and standby links.

Router# show lacp multichassis port-channel1

Interface Port-channel1

Local Configuration:

Address: 0014.6a8b.c680

Channel Group: 1

State: Standby

LAG State: Up

Priority: 32768

Inactive Links: 0

Total Active Links: 4

Bundled: 0

Selected: 0

Standby: 4

Unselected: 0

Peer Configuration:

Interface: Port-channel1

Address: 000a.f331.2680

Channel Group: 1

State: Active

LAG State: Up

Priority: 28000

Inactive Links: 0

Total Active Links: 4

Bundled: 4

Selected: 4

Standby: 0

Unselected: 0

show mpls ldp iccp

Use the show mpls ldp iccp command to display the LDP session and ICCP state information.

Router# show mpls ldp iccp

ICPM RGID Table

iccp:

rg_id: 100, peer addr: 172.1.1.1

ldp_session 0x2, client_id 0

iccp state: ICPM_ICCP_CONNECTED

app type: MLACP

app state: ICPM_APP_CONNECTED, ptcl ver: 0

ICPM RGID Table total ICCP sessions: 1

ICPM LDP Session Table

iccp:

rg_id: 100, peer addr: 172.1.1.1

ldp_session 0x2, client_id 0

iccp state: ICPM_ICCP_CONNECTED

app type: MLACP

app state: ICPM_APP_CONNECTED, ptcl ver: 0

ICPM LDP Session Table total ICCP sessions: 1
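
A monitoring script might reduce this output to a single pass/fail result. The following is a rough sketch under stated assumptions: `iccp_sessions_connected` is a hypothetical helper, and it treats only the two state strings that appear in the sample output (`ICPM_ICCP_CONNECTED` and `ICPM_APP_CONNECTED`) as healthy, because other possible state names are not listed in this document.

```python
import re


def iccp_sessions_connected(output):
    """True if at least one session is listed and every listed state is CONNECTED."""
    iccp_states = re.findall(r"iccp state:\s*(\S+)", output)
    # The app state is followed by ", ptcl ver: N", so stop at the comma.
    app_states = re.findall(r"app state:\s*([^,\s]+)", output)
    if not iccp_states or len(iccp_states) != len(app_states):
        return False
    return (all(s == "ICPM_ICCP_CONNECTED" for s in iccp_states)
            and all(s == "ICPM_APP_CONNECTED" for s in app_states))
```

Because the same session appears in both the RGID table and the LDP session table, the helper checks every occurrence rather than only the first.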

show mpls l2transport vc 2

Use the show mpls l2transport vc command to display the local interface, local circuit, destination address, VC ID, and status of each virtual circuit.

Router# show mpls l2transport vc 2

Local intf Local circuit Dest address VC ID Status

------------- -------------------------- --------------- ---------- ----------

VFI VPLS VFI 172.2.2.2 4000 UP

VFI VPLS VFI 172.4.4.4 4000 UP
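
To confirm from a script that every pseudowire in this listing is up, the rows can be matched from the right-hand side, where the columns are unambiguous. This is a sketch only: `parse_vcs` is a hypothetical name, and the pattern assumes an IPv4 destination address followed by a numeric VC ID and a one-word status, as in the sample.

```python
import re

# Interface, free-form circuit description, IPv4 destination, VC ID, status.
VC_ROW = re.compile(
    r"^\s*(\S+)\s+(.+?)\s+(\d{1,3}(?:\.\d{1,3}){3})\s+(\d+)\s+(\S+)\s*$"
)


def parse_vcs(output):
    """Return a list of dicts, one per virtual-circuit row."""
    vcs = []
    for line in output.splitlines():
        m = VC_ROW.match(line)
        if m:  # header and separator lines contain no IPv4 address
            intf, circuit, dest, vc_id, status = m.groups()
            vcs.append({"intf": intf, "circuit": circuit, "dest": dest,
                        "vc_id": int(vc_id), "status": status})
    return vcs
```

A caller could then test `all(vc["status"] == "UP" for vc in parse_vcs(text))`.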

show etherchannel summary

Use the show etherchannel summary command to display the status and identity of the mLACP member links.

Router# show etherchannel summary

Flags: D - down P - bundled in port-channel

I - stand-alone s - suspended

H - Hot-standby (LACP only)

R - Layer3 S - Layer2

U - in use f - failed to allocate aggregator

M - not in use, minimum links not met

u - unsuitable for bundling

w - waiting to be aggregated

d - default port

Number of channel-groups in use: 2

Number of aggregators: 2

Group Port-channel Protocol Ports

------+-------------+-----------+-----------------------------------------------

1 Po1(RU) LACP Gi3/2(P) Gi3/11(P) Gi3/21(P)

Gi3/32(P)
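
Each port in this listing carries its flags in parentheses, so the member links and their flags can be extracted with a single pattern, including ports that wrap onto a continuation line. The sketch below uses a hypothetical helper, `member_flags`, and assumes that port-channel names begin with `Po` as they do in the sample.

```python
import re

# A name immediately followed by flags in parentheses, e.g. "Gi3/2(P)" or "Po1(RU)".
PORT_WITH_FLAGS = re.compile(r"(\S+)\((\w+)\)")


def member_flags(output):
    """Map each member port to its flag string, skipping port-channel entries."""
    members = {}
    for name, flags in PORT_WITH_FLAGS.findall(output):
        if name.startswith("Po"):
            continue  # the aggregate interface itself, not a member link
        members[name] = flags
    return members
```

Ports whose flag string lacks `P` are not bundled in the port channel, according to the flag legend at the top of the output.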

show lacp internal

Use the show lacp internal command to display the device, port, and member-link information.

Router# show lacp 1 internal

Flags: S - Device is requesting Slow LACPDUs

F - Device is requesting Fast LACPDUs

A - Device is in Active mode P - Device is in Passive mode

Channel group 1

LACP port Admin Oper Port Port

Port Flags State Priority Key Key Number State

Gi3/2 FA bndl-sby 32768 0x1 0x1 0xF303 0x7

Gi3/11 FA bndl-sby 32768 0x1 0x1 0xF30C 0x5

Gi3/21 FA bndl-sby 32768 0x1 0x1 0xF316 0x5

Gi3/32 FA bndl-sby 32768 0x1 0x1 0xF321 0x7

Peer (MLACP-PE1) mLACP member links

Gi2/20 SA bndl 28000 0x1 0x1 0x8215 0x3D

Gi2/31 SA bndl 28000 0x1 0x1 0x8220 0x3D

Gi2/40 SA bndl 28000 0x1 0x1 0x8229 0x3D

Gi2/9 SA bndl 28000 0x1 0x1 0x820A 0x3D
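
The hexadecimal values in the Port State column can be read bit by bit. The sketch below assumes that this column carries the actor state octet defined by IEEE 802.3ad, which this document does not state explicitly; the bit names follow the standard, and `decode_port_state` is a hypothetical helper name.

```python
# Actor state bits from IEEE 802.3ad, least significant bit first.
# Assumption: the Port State column is this octet.
ACTOR_STATE_BITS = [
    (0x01, "LACP_Activity"),    # set = active mode
    (0x02, "LACP_Timeout"),     # set = short timeout (fast LACPDUs)
    (0x04, "Aggregation"),
    (0x08, "Synchronization"),
    (0x10, "Collecting"),
    (0x20, "Distributing"),
    (0x40, "Defaulted"),
    (0x80, "Expired"),
]


def decode_port_state(value):
    """Return the names of the state bits that are set in value."""
    return [name for mask, name in ACTOR_STATE_BITS if value & mask]


if __name__ == "__main__":
    for state in (0x3D, 0x07, 0x05):
        print(hex(state), decode_port_state(state))
```

Under this reading, the peer links at 0x3D are synchronized, collecting, and distributing, which matches their bndl state, while the local links at 0x7 and 0x5 have none of those three bits set, which matches bndl-sby.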

Additional References

Related Documents

| Related Topic | Document Title |
|---|---|
| Carrier Ethernet configurations | Cisco IOS Carrier Ethernet Configuration Guide, Release 12.2SR |
| Carrier Ethernet commands: complete command syntax, command mode, command history, defaults, usage guidelines, and examples | Cisco IOS Carrier Ethernet Command Reference |
| Cisco IOS commands: master list of commands with complete command syntax, command mode, command history, defaults, usage guidelines, and examples | |

Standards

| Standard | Title |
|---|---|
| IEEE 802.3ad | Link Aggregation Control Protocol |
| IEEE 802.1ak | Multiple Registration Protocol |

MIBs

RFCs

| RFC | Title |
|---|---|
| RFC 4762 | Virtual Private LAN Service (VPLS) Using Label Distribution Protocol (LDP) Signaling |
| RFC 4447 | Pseudowire Setup and Maintenance Using the Label Distribution Protocol (LDP) |

Technical Assistance

| Description | Link |
|---|---|
| The Cisco Support and Documentation website provides online resources to download documentation, software, and tools. Use these resources to install and configure the software and to troubleshoot and resolve technical issues with Cisco products and technologies. Access to most tools on the Cisco Support and Documentation website requires a Cisco.com user ID and password. | |

Feature Information for mLACP

The following table provides release information about the feature or features described in this module. This table lists only the software release that introduced support for a given feature in a given software release train. Unless noted otherwise, subsequent releases of that software release train also support that feature.

Use Cisco Feature Navigator to find information about platform support and Cisco software image support. To access Cisco Feature Navigator, go to www.cisco.com/go/cfn. An account on Cisco.com is not required.

Table 2. Feature Information for mLACP

| Feature Name | Releases | Feature Information |
|---|---|---|
| Multichassis LACP (mLACP) | 12.2(33)SRE, 15.0(1)S | Cisco's mLACP feature addresses the need for interchassis redundancy mechanisms when a carrier wants to dual home a device to two upstream PoAs for redundancy. The mLACP feature enhances the 802.3ad LACP implementation to meet this requirement. The following commands were introduced or modified: backbone interface, debug acircuit checkpoint, debug lacp, ethernet mac-flush mirp notification, interchassis group, lacp failover, lacp max-bundle, lacp min-bundle, member ip, mlacp interchassis group, mlacp lag-priority, mlacp node-id, mlacp system-mac, mlacp system-priority, monitor peer bfd, redundancy, show ethernet service instance interface port-channel, show ethernet service instance id mac-tunnel, show lacp, status decoupled, status peer topology dual-homed. |

Glossary

active attachment circuit--The link that is actively forwarding traffic between the DHD and the active PoA.

active PW--The pseudowire that is forwarding traffic on the active PoA.

BD--bridge domain.

BFD--bidirectional forwarding detection.

DHD--dual-homed device. A node that is connected to two switches over a multichassis link aggregation group for the purpose of redundancy.

DHN--dual-homed network. A network that is connected to two switches to provide redundancy.

H-VPLS--Hierarchical Virtual Private LAN Service.

ICC--Interchassis Communication Channel.

ICCP--Interchassis Communication Protocol.

ICPM--Interchassis Protocol Manager.

ICRM--Interchassis Redundancy Manager.

LACP--Link Aggregation Control Protocol.

LAG--link aggregation group.

LDP--Label Distribution Protocol.

MCEC--Multichassis EtherChannel.

mLACP--Multichassis LACP.

PoA--point of attachment. One of a pair of switches running multichassis link aggregation group with a DHD.

PW-RED--pseudowire redundancy.

standby attachment circuit--The link that is in standby mode between the DHD and the standby PoA.

standby PW--The pseudowire that is in standby mode on either an active or a standby PoA.

uPE--user-facing Provider Edge.

VPLS--Virtual Private LAN Service.

VPWS--Virtual Private Wire Service.

Cisco and the Cisco Logo are trademarks of Cisco Systems, Inc. and/or its affiliates in the U.S. and other countries. A listing of Cisco's trademarks can be found at www.cisco.com/go/trademarks. Third party trademarks mentioned are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (1005R)

Any Internet Protocol (IP) addresses and phone numbers used in this document are not intended to be actual addresses and phone numbers. Any examples, command display output, network topology diagrams, and other figures included in the document are shown for illustrative purposes only. Any use of actual IP addresses or phone numbers in illustrative content is unintentional and coincidental.
