OpenStack supports

FWaaS (Firewall-as-a-Service) that allows users to add perimeter firewall

management to networking. FWaaS supports one firewall policy and logical

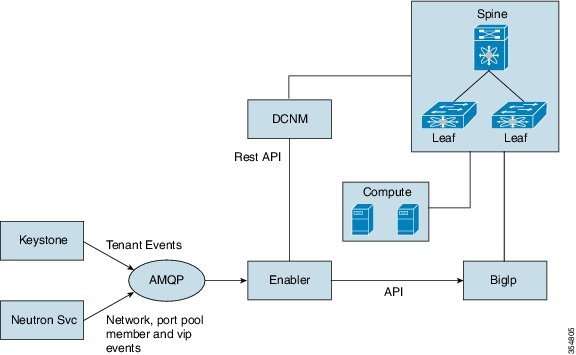

firewall instance per project/tenant. With Cisco Programmable Fabric, each

compute node is attached to a leaf node, which hosts the physical default

gateways for all the VMs on the compute node.

A VM-based firewall such as the CSR1000v can be inserted into the Cisco Programmable Fabric architecture and used as FWaaS in OpenStack. FWaaS insertion into the fabric is triggered automatically through OpenStack FWaaS functionality, including configuration of the firewall rules and policy. The leaf switch is configured using its auto-configuration feature and the VRF and network templates from DCNM, for both the internal VRF/network and the external VRF/network of a given tenant.

While the initial effort is based on a Cisco Adaptive Security Appliance (ASA) firewall, the same architecture also allows a VM-based firewall device to be inserted into the fabric. Because the firewall actually acts as a router, OSPF is transparently programmed by the enabler when FWaaS is provisioned from OpenStack.

Note: Only physical ASA will be supported in the first release. CSR1000v (virtual firewall) is not supported.

FWaaS Integration

OpenStack FWaaS adds perimeter firewall management to Networking. In OpenStack, routing is achieved through a router plugin, which uses the underlying Linux kernel routing implementation. Every router created in OpenStack has a Linux namespace created for it. If a VM in one subnet needs to communicate with another subnet, both subnets are added as interfaces to the router. In the Juno release, every project is limited to one firewall. The firewall can have a policy, which can have multiple rules. The rules specify the classifier and actions. The firewall rules are applied to all the routers in the project and, in turn, to all the interfaces of each router.
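The relationship between firewall, policy, rules, routers, and interfaces can be sketched as a small data model. This is an illustrative sketch only: the class names, the classifier fields, and the `rule_bindings` helper are hypothetical and are not part of the OpenStack FWaaS API.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Rule:
    name: str
    protocol: str          # classifier (hypothetical field)
    destination_port: int  # classifier (hypothetical field)
    action: str            # for example "allow" or "deny"


@dataclass
class Policy:
    name: str
    rules: List[Rule] = field(default_factory=list)


@dataclass
class Firewall:
    name: str
    policy: Policy


@dataclass
class Router:
    name: str
    interfaces: List[str] = field(default_factory=list)  # attached subnets


def rule_bindings(firewall: Firewall, routers: List[Router]) -> List[Tuple[str, str, str]]:
    """List every (router, interface, rule) combination the firewall covers."""
    return [
        (router.name, interface, rule.name)
        for router in routers
        for interface in router.interfaces
        for rule in firewall.policy.rules
    ]


policy = Policy("web-policy", [
    Rule("allow-http", "tcp", 80, "allow"),
    Rule("deny-telnet", "tcp", 23, "deny"),
])
firewall = Firewall("tenant-fw", policy)
routers = [Router("r1", ["subnet-a", "subnet-b"]), Router("r2", ["subnet-c"])]

for binding in rule_bindings(firewall, routers):
    print(binding)
```

With two rules and three router interfaces in the project, the helper yields six bindings, one per rule per interface.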

OpenStack Firewall with Nexus Fabric

You can have firewall modules from different implementations with Nexus Fabric. When OpenStack is integrated with the Nexus Fabric, the firewall is generally used as a tenant edge firewall. The following needs to be supported:

The following section describes the packet flow for a tenant when a perimeter firewall is added through OpenStack with a physical ASA device acting as the firewall.

Firewall Manager

The Firewall manager is responsible for acting on firewall-related events from OpenStack, such as the creation, deletion, and modification of policies, rules, and firewalls. The firewall and its associated rules and policies are decoded and stored per tenant. Based on the configuration, this module dynamically loads the Firewall Driver module and calls it whenever a firewall configuration is added, deleted, or modified.
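One common way to load a driver dynamically from a configuration value is to import it by name at run time. The sketch below assumes a `module:ClassName` string format, which is an assumption made for illustration and not the documented configuration syntax; `load_driver` is likewise a hypothetical helper.

```python
import importlib


def load_driver(path: str):
    """Import and return the class named by a 'module:ClassName' string."""
    module_name, _, class_name = path.partition(":")
    if not module_name or not class_name:
        raise ValueError(f"expected 'module:ClassName', got {path!r}")
    module = importlib.import_module(module_name)
    return getattr(module, class_name)


# A real deployment would name a driver class here, for example a
# hypothetical "fw_drivers.phy_asa:PhyAsaDriver". A standard-library class
# stands in so that the sketch runs anywhere.
driver_cls = load_driver("json:JSONDecoder")
print(driver_cls.__name__)
```

Keeping the driver behind a configured name lets the manager stay unchanged when a different firewall appliance is used.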

To create a firewall, you need to create the rules, a policy containing the rules, and a firewall containing the policy. You can create these in any order. For example, a firewall can be created without any policy attached, and a policy can be attached to it later. Similarly, policies can be created without any rules and updated with the rules afterward. The Firewall module is therefore responsible for caching the information and calling the modules that prepare the fabric or configure the firewall device only after the complete firewall information is obtained.
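The caching behavior can be sketched as a per-tenant store that accepts events in any order and fires a callback once the configuration is complete. All names here are hypothetical. The sketch also assumes that "complete" means a firewall exists, a policy is attached to it, the policy lists at least one rule, and every listed rule has been seen; the actual completeness criteria may differ.

```python
class TenantState:
    """Cached firewall objects for one tenant."""

    def __init__(self):
        self.has_firewall = False
        self.firewall_policy_id = None  # policy attached to the firewall, if any
        self.policies = {}              # policy_id -> list of rule_ids
        self.rules = {}                 # rule_id -> rule attributes

    def is_complete(self):
        if not self.has_firewall:
            return False
        rule_ids = self.policies.get(self.firewall_policy_id)
        if not rule_ids:
            return False  # no policy attached yet, or the policy has no rules
        return all(rule_id in self.rules for rule_id in rule_ids)


class FirewallCache:
    """Collects events per tenant and calls on_complete once per tenant."""

    def __init__(self, on_complete):
        self._tenants = {}
        self._provisioned = set()
        self._on_complete = on_complete

    def _state(self, tenant_id):
        return self._tenants.setdefault(tenant_id, TenantState())

    def _check(self, tenant_id):
        state = self._state(tenant_id)
        if tenant_id not in self._provisioned and state.is_complete():
            self._provisioned.add(tenant_id)
            self._on_complete(tenant_id, state)

    def rule_created(self, tenant_id, rule_id, attrs):
        self._state(tenant_id).rules[rule_id] = attrs
        self._check(tenant_id)

    def policy_updated(self, tenant_id, policy_id, rule_ids):
        self._state(tenant_id).policies[policy_id] = list(rule_ids)
        self._check(tenant_id)

    def firewall_updated(self, tenant_id, policy_id=None):
        state = self._state(tenant_id)
        state.has_firewall = True
        state.firewall_policy_id = policy_id
        self._check(tenant_id)


ready = []
cache = FirewallCache(lambda tenant_id, state: ready.append(tenant_id))

cache.firewall_updated("tenant-1")                  # firewall without a policy
cache.policy_updated("tenant-1", "p1", [])          # policy without rules
cache.firewall_updated("tenant-1", policy_id="p1")  # policy attached later
cache.policy_updated("tenant-1", "p1", ["r1"])      # policy now lists rule r1
print(ready)  # nothing provisioned yet: rule r1 has not been seen
cache.rule_created("tenant-1", "r1", {"action": "allow"})
print(ready)  # the callback has now fired for tenant-1
```

In this sketch the callback stands in for the step that prepares the fabric and configures the firewall device.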

Firewall Module

The Firewall module is responsible for interacting with the actual device and performing the configuration. Depending on the appliance, the driver may use a REST API or CLIs. Cisco physical ASA and the OpenStack native firewall are supported.

The Firewall module is responsible for the following:

- Set up the fabric when a firewall is created.
- Clear the fabric configuration when the firewall is deleted.
- Provide APIs that expose information about the fabric for other modules, including the driver, to use.
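The three responsibilities listed above map naturally onto a small interface. The following is a minimal in-memory sketch under stated assumptions: the class, the method names, and the network naming scheme are all hypothetical, and a real module would program the fabric instead of updating a dictionary.

```python
class FabricModule:
    """In-memory stand-in for the module that prepares the fabric."""

    def __init__(self):
        self._fabric = {}  # tenant_id -> fabric details

    def setup_fabric(self, tenant_id, firewall_id):
        """Record fabric details when a firewall is created."""
        info = {
            "firewall_id": firewall_id,
            # Hypothetical names for the tenant's internal and external networks.
            "in_network": f"{tenant_id}-in",
            "out_network": f"{tenant_id}-out",
        }
        self._fabric[tenant_id] = info
        return dict(info)

    def clear_fabric(self, tenant_id):
        """Remove fabric details when the firewall is deleted."""
        return self._fabric.pop(tenant_id, None) is not None

    def get_fabric_info(self, tenant_id):
        """Return a copy of the fabric details for other modules, or None."""
        info = self._fabric.get(tenant_id)
        return dict(info) if info is not None else None


fabric = FabricModule()
fabric.setup_fabric("tenant-1", "fw-1")
print(fabric.get_fabric_info("tenant-1"))
fabric.clear_fabric("tenant-1")
print(fabric.get_fabric_info("tenant-1"))
```

Returning copies from the query method keeps callers such as the driver from changing the module's internal state by accident.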
